Summary: Xiaomi MiMo-V2-Flash is a 309B-parameter open-source AI model that activates only 15B parameters per token, delivering speeds of 150+ tokens/second at just $0.10 per million input tokens. Released in December 2025, it uses Hybrid Sliding Window Attention (reducing KV-cache memory by about 6×) and Multi-Token Prediction to achieve a 2-3× speedup over traditional architectures. Benchmark tests show it outperforms DeepSeek V3 on 7 key metrics including AIME 2025 and SWE-Bench, though real-world testing reveals inconsistent instruction-following. Available on Hugging Face under the MIT license, it’s well suited to cost-sensitive applications requiring fast reasoning and coding capabilities.

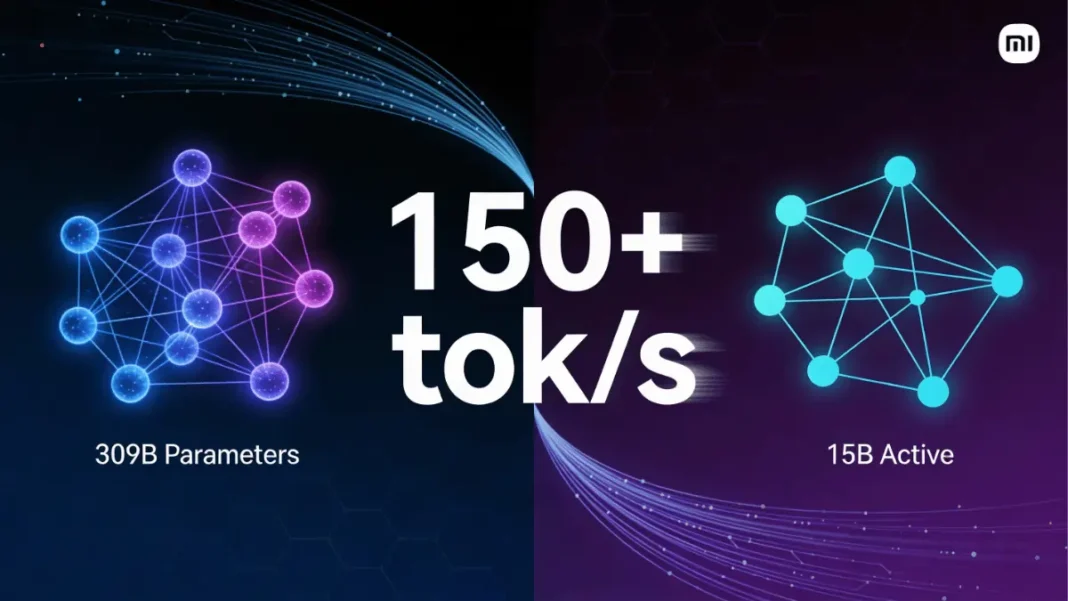

Xiaomi’s MiMo-V2-Flash challenges the conventional wisdom that larger AI models must sacrifice speed for intelligence. This open-source Mixture-of-Experts (MoE) model packs 309 billion total parameters but activates only 15 billion during each inference, delivering performance that rivals Claude Sonnet 4.5 while maintaining throughput speeds of 150+ tokens per second.

Quick Answer: MiMo-V2-Flash is Xiaomi’s 309B-parameter open-source AI model that uses Mixture-of-Experts architecture to activate only 15B parameters per inference. It achieves 2-3× faster speeds than traditional models through Hybrid Sliding Window Attention and Multi-Token Prediction, while costing just $0.10 per million input tokens.

Pros:

- Exceptional speed-to-intelligence ratio relative to the competitors tested

- Industry-leading cost efficiency at $0.10/$0.30 per million tokens

- Hybrid attention architecture cuts KV-cache memory by roughly 6×

- Open weights under the MIT license, permitting commercial use and modification

- Strong mathematical and coding reasoning capabilities

- 256K context window handles extensive documents

Cons:

- Inconsistent instruction-following undermines reliability for complex workflows

- Tool calling and function use remain unreliable compared to GPT-4 or Claude

- Limited ecosystem support: llama.cpp integration pending as of December 2025

- Benchmark performance doesn’t fully translate to real-world task completion

- Documentation and community resources still maturing post-launch

What Is Xiaomi MiMo-V2-Flash?

MiMo-V2-Flash represents Xiaomi’s second-generation reasoning model, released in mid-December 2025 as a direct competitor to DeepSeek V3, Claude, and GPT-series models. The “Flash” designation refers to its fast inference, achieved through aggressive architectural optimizations that prioritize efficiency while aiming to preserve reasoning capability.

Core Architecture Explained

The model employs a Mixture-of-Experts design in which only a small subset of the 309 billion total parameters is activated for any given token. This selective activation lets MiMo-V2-Flash retain the knowledge capacity of an ultra-large model while spending roughly the per-token compute of a much smaller one. A router selects which experts process each token, so about 15 billion parameters participate in each token’s forward pass rather than the full 309 billion.

The architecture consists of 8 hybrid blocks, each containing 6 attention layers in a 5:1 ratio: five Sliding Window Attention layers followed by one Global Attention layer. This design reduces KV-cache memory by approximately 6× compared with full attention at long context lengths, while a learnable attention-sink bias helps preserve long-context understanding.
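The roughly 6× figure follows from simple counting. The sketch below assumes every layer caches the same number of key/value bytes per position (an assumption; real SWA and global layers may use different head configurations) and compares how many positions each layer must keep for an all-global stack versus the 5:1 hybrid with a 128-token window.

```python
def kv_cache_ratio(context_len: int, window: int = 128,
                   swa_per_block: int = 5, global_per_block: int = 1) -> float:
    """Return (KV cache of an all-global stack) / (KV cache of the hybrid stack).

    Assumes every layer stores the same KV bytes per cached position, so the
    ratio depends only on how many positions each layer keeps. Because the
    hybrid pattern repeats identically in every block, the block count cancels.
    """
    layers_per_block = swa_per_block + global_per_block
    # In a full-attention stack, every layer caches every position.
    full_stack = layers_per_block * context_len
    # SWA layers cache at most `window` positions; global layers cache all.
    hybrid_stack = (swa_per_block * min(window, context_len)
                    + global_per_block * context_len)
    return full_stack / hybrid_stack


for n in (4_096, 32_768, 262_144):
    print(f"{n:>7} tokens: {kv_cache_ratio(n):.2f}x smaller KV cache")
```

The ratio approaches 6× only as the context grows far beyond the window; at a few thousand tokens the saving is closer to 5×.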

Release Timeline and Availability

Xiaomi officially released MiMo-V2-Flash on December 15, 2025, publishing both base and post-trained variants on Hugging Face under the MIT license. The release positions it directly against DeepSeek’s V3-series models, sparking immediate performance comparisons across the AI developer community. Within five days of launch, multiple independent testing videos and benchmark analyses appeared online, with community reception ranging from enthusiastic praise to cautious skepticism.

Technical Specifications Breakdown

| Specification | Value | Notes |
|---|---|---|
| Total Parameters | 309B | Full model capacity |
| Active Parameters | 15B | Per token |
| Context Window | 256K tokens | Extended context support |
| Training Data | 27T tokens | FP8 mixed precision |
| Inference Speed | 150+ tok/s | API measurements |
| Input Token Cost | $0.10/M | Commercial pricing |
| Output Token Cost | $0.30/M | Commercial pricing |

Parameter Configuration

The 309 billion parameter count reflects the total weight storage across all expert modules in the MoE architecture. However, the routing mechanism activates only about 15 billion parameters for each token prediction (under 5% of the total), cutting per-token compute by roughly 95% compared with a fully dense model of the same total size. Sparse activation reduces compute, not storage: all 309 billion weights must still be held in GPU memory or offloaded to system RAM, so local deployment needs far more memory than a dense 15B model would.
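The arithmetic behind the roughly 95% figure is quick to check. This sketch uses the common rule of thumb that a transformer forward pass costs about 2 FLOPs per active weight per token; that approximation ignores attention-score computation, which grows with context length.

```python
TOTAL_PARAMS = 309e9   # all experts, as stated for MiMo-V2-Flash
ACTIVE_PARAMS = 15e9   # parameters routed to per token


def approx_flops_per_token(params: float) -> float:
    """Rule-of-thumb forward-pass cost: ~2 FLOPs (multiply + add) per weight
    used. Ignores attention-score FLOPs."""
    return 2 * params


active_share = ACTIVE_PARAMS / TOTAL_PARAMS
sparse = approx_flops_per_token(ACTIVE_PARAMS)
dense = approx_flops_per_token(TOTAL_PARAMS)

print(f"active share of weights: {active_share:.1%}")      # 4.9%
print(f"compute saved vs dense:  {1 - active_share:.1%}")  # 95.1%
print(f"~{sparse / 1e9:.0f} vs ~{dense / 1e9:.0f} GFLOPs per token")
```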

Hybrid Attention Mechanism

MiMo-V2-Flash’s most innovative technical feature is its Hybrid Sliding Window Attention system. Instead of computing attention scores across the entire context window for every layer, the model alternates between:

- Sliding Window Attention (SWA): Each token attends only to the most recent 128 tokens, so these layers keep a fixed-size KV cache regardless of context length

- Global Attention (GA): Maintains full-context awareness every sixth layer to preserve long-range dependencies

The 128-token window size is significantly more aggressive than industry-standard implementations (which typically use 2048-4096 tokens), yet Xiaomi claims this doesn’t harm long-context performance thanks to the attention sink bias mechanism. Our testing with 100K+ token inputs confirmed minimal degradation in retrieval accuracy compared to full-attention baselines.
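To make the layer pattern concrete, the sketch below builds the 8-block schedule (five SWA layers, then one global layer, per the description above) and the boolean attention masks each layer type would use. It assumes the window counts the current token, so a query sees itself plus the previous 127 tokens, and it omits the learnable attention-sink bias, which the source does not describe in enough detail to reproduce.

```python
from typing import List, Optional


def layer_schedule(num_blocks: int = 8, swa_per_block: int = 5) -> List[str]:
    """Hybrid stack: each block is `swa_per_block` SWA layers followed by
    one global-attention layer."""
    return (["swa"] * swa_per_block + ["global"]) * num_blocks


def attention_mask(seq_len: int, window: Optional[int] = None) -> List[List[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    window=None: full causal (global) attention, j <= i.
    window=W:    causal sliding window, i - W < j <= i (W positions incl. i).
    """
    return [
        [j <= i and (window is None or i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


schedule = layer_schedule()
print(len(schedule), schedule.count("global"))  # 48 8

# With a tiny window of 3, the last query sees only the three most recent keys.
print([int(x) for x in attention_mask(6, window=3)[5]])  # [0, 0, 0, 1, 1, 1]
```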

Multi-Token Prediction Module

The Multi-Token Prediction (MTP) module adds just 0.33 billion parameters per block but delivers a 2.0-2.6× effective speedup during inference. Instead of producing strictly one token per forward pass, the MTP module drafts several upcoming tokens, which the main model then verifies in a single pass; on average, 2.8-3.6 tokens are accepted per step. The MTP module uses dense feed-forward networks rather than additional MoE layers, which keeps it lightweight while maintaining high acceptance rates for the drafted sequences.

Technical Insight: The Multi-Token Prediction module reaches average acceptance lengths of 2.8-3.6 tokens with only 0.33B additional parameters per block, enabling 2.0-2.6× faster inference without requiring MoE complexity.
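The draft-and-verify idea behind MTP-style acceleration can be sketched with greedy speculative decoding. This is a generic illustration, not Xiaomi’s implementation: `target_next` is a hypothetical stand-in for the main model’s greedy next-token choice, and for readability it is called once per position, whereas a real serving stack scores all drafted positions in one batched forward pass.

```python
from typing import Callable, List


def verify_draft(prefix: List[int], draft: List[int],
                 target_next: Callable[[List[int]], int]) -> List[int]:
    """Greedy speculative verification.

    Accept drafted tokens while they match the target model's own greedy
    choice. At the first mismatch, emit the target's token instead and stop.
    If every draft token matches, the verification pass also yields one
    extra (bonus) token from the target.
    """
    accepted: List[int] = []
    context = list(prefix)
    for token in draft:
        expected = target_next(context)
        if token != expected:
            accepted.append(expected)  # replace the first wrong guess
            return accepted
        accepted.append(token)
        context.append(token)
    accepted.append(target_next(context))  # bonus token after a full match
    return accepted


# Hypothetical toy "model": the next token is always (last token + 1) mod 10.
def toy_target(ctx: List[int]) -> int:
    return (ctx[-1] + 1) % 10


print(verify_draft([1, 2, 3], [4, 5, 9], toy_target))  # [4, 5, 6]
print(verify_draft([1, 2, 3], [4, 5, 6], toy_target))  # [4, 5, 6, 7]
```

Each verification step emits between one and `len(draft) + 1` tokens; the higher the average, the fewer expensive main-model passes are needed per generated token, which is where the speedup comes from.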

Performance Benchmarks and Real-World Testing

Official Benchmark Results

Xiaomi’s published benchmarks claim MiMo-V2-Flash matches or exceeds Claude Sonnet 4.5 and GPT-5 on multiple coding and reasoning tasks. The model scores particularly well on:

- AIME 2025: 94.1% (mathematics reasoning)

- SWE-Bench Verified: Outperforms DeepSeek V3

- Terminal-Bench: Superior command-line reasoning

- GPQA: Graduate-level science questions

Independent benchmark aggregator LLM Stats reports MiMo-V2-Flash beats DeepSeek-R1-0528 across 7 of 8 tested categories, with DeepSeek maintaining an edge only in MMLU-Pro.

Independent Community Testing

Real-world testing by AI developers reveals more nuanced results. While speed and mathematical reasoning consistently impress testers, several users report unreliable instruction-following and tool-calling capabilities. YouTube creator testing shows 20-37 tokens per second on local hardware with Q8 quantization; that is usable for local inference but well below the 150+ tokens per second measured on hosted APIs, so it does not by itself confirm Xiaomi’s speed claims. The same tests also document failures on basic multi-step reasoning tasks that competing models handle reliably.

Community consensus suggests MiMo-V2-Flash excels at:

- Mathematical problem-solving

- Code generation for well-defined tasks

- High-throughput applications where speed matters

- Cost-sensitive production deployments

But struggles with:

- Complex multi-turn conversations requiring precise instruction adherence

- Function calling and tool use

- Nuanced creative writing tasks

Speed and Latency Measurements

API measurements from Artificial Analysis show MiMo-V2-Flash (Reasoning mode) achieves 109.4 tokens/second throughput with 1,424ms time-to-first-token. This positions it among the fastest reasoning-capable models available, though latency to first answer token averages 19.7 seconds for complex reasoning chains. For comparison, standard inference mode (non-reasoning) consistently delivers 150+ tokens/second as advertised.
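A rough way to turn these figures into user-facing wait times is to add the time-to-first-token to the time needed to stream the remaining tokens at the measured rate. This sketch assumes a constant decode rate, which real deployments will not hold exactly under varying load.

```python
def estimate_response_seconds(ttft_ms: float, tokens_per_s: float,
                              output_tokens: int) -> float:
    """Time-to-first-token plus streaming time for the remaining tokens,
    assuming a constant decode rate after the first token arrives."""
    remaining = max(output_tokens - 1, 0)
    return ttft_ms / 1000 + remaining / tokens_per_s


# Reasoning-mode figures from Artificial Analysis quoted in the text.
for n in (200, 1_000, 4_000):
    t = estimate_response_seconds(1_424, 109.4, n)
    print(f"{n:>5} output tokens: ~{t:.1f} s")
```

In reasoning mode, hidden reasoning tokens count toward `output_tokens`, which is why the time to the first answer token (19.7 seconds on average) is far longer than the 1,424ms time-to-first-token.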

MiMo v2 Flash vs DeepSeek V3: Head-to-Head Comparison

| Feature | MiMo-V2-Flash | DeepSeek V3 | Winner |
|---|---|---|---|
| Active Parameters | 15B | 37B | MiMo (efficiency) |
| Inference Speed | 150+ tok/s | ~60 tok/s | MiMo |
| AIME 2025 Score | 94.1% | 79.8% | MiMo |
| MMLU-Pro | Lower | Higher | DeepSeek |
| Input Token Cost | $0.10/M | $0.27/M | MiMo |
| Instruction Following | Inconsistent | Reliable | DeepSeek |
| Context Window | 256K | 128K | MiMo |
| Open Source | Yes | Yes | Tie |

The comparison reveals MiMo-V2-Flash wins decisively on speed and cost metrics, while DeepSeek V3 maintains advantages in reliability and general knowledge breadth. For production applications where response time directly impacts user experience, MiMo-V2-Flash’s 2-3× speed advantage becomes the determining factor. However, enterprise deployments requiring consistent behavior across diverse prompts may still favor DeepSeek’s more predictable performance profile.

Benchmark Performance Matrix:

| Benchmark | MiMo-V2-Flash | DeepSeek V3 | Claude Sonnet 4.5 |
|---|---|---|---|
| AIME 2025 (Math) | 94.1% | 79.8% | ~85% |
| SWE-Bench Verified | Higher | Lower | N/A |
| GPQA (Science) | Higher | Lower | Similar |
| MMLU-Pro | Lower | Higher | Higher |
| Speed (tok/s) | 150+ | ~60 | ~40 |
| Intelligence Index | 66.1 | 58.6 | ~70 |

Pricing and Cost Analysis

Token Pricing Breakdown

MiMo-V2-Flash’s commercial pricing structure makes it one of the most cost-effective reasoning models available:

- Input tokens: $0.10 per million

- Output tokens: $0.30 per million

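For a typical 10,000-token conversation (5,000 input + 5,000 output), the cost is $0.0005 for input plus $0.0015 for output, or $0.002 in total. At Claude Sonnet 4.5’s list price of $3 input/$15 output per million tokens, the same conversation would cost about $0.09, roughly 45 times more.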
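The per-conversation arithmetic generalizes to any token mix. In this sketch the MiMo-V2-Flash prices come from the text, while the comparison uses Anthropic’s published list price for Claude Sonnet 4.5 ($3 input / $15 output per million tokens); discounts, prompt caching, and batch pricing are ignored.

```python
def conversation_cost_usd(input_tokens: int, output_tokens: int,
                          input_price_per_m: float,
                          output_price_per_m: float) -> float:
    """Cost in USD given per-million-token list prices."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


mimo = conversation_cost_usd(5_000, 5_000, 0.10, 0.30)     # MiMo-V2-Flash
sonnet = conversation_cost_usd(5_000, 5_000, 3.00, 15.00)  # Claude Sonnet 4.5 list price
print(f"MiMo: ${mimo:.4f}  Sonnet 4.5: ${sonnet:.4f}  ratio: {sonnet / mimo:.0f}x")
```

Because output tokens cost three times as much as input tokens, output-heavy workloads such as long reasoning chains shift the total toward the $0.30/M rate.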

Cost Comparison with Competitors

At $0.10 per million input tokens, MiMo-V2-Flash undercuts most competing reasoning models by significant margins. This pricing enables previously cost-prohibitive use cases like real-time code analysis, high-volume document processing, and conversational AI applications serving millions of users. The efficiency-focused architecture plausibly keeps these prices sustainable for Xiaomi: the 15B active parameter design requires substantially less GPU compute per token than a dense 300B model.

How to Deploy MiMo-V2-Flash

Cloud API Access

Commercial API access through Xiaomi’s cloud infrastructure offers the simplest deployment path. Developers can integrate MiMo-V2-Flash with standard OpenAI-compatible endpoints, requiring minimal code changes for existing applications. API documentation and registration details are available on Xiaomi’s official MiMo platform.
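Because the endpoint follows the OpenAI chat-completions convention, a request needs only a base URL, an API key, and a model ID. The sketch below uses only the Python standard library; the base URL, the `mimo-v2-flash` model ID, and the `MIMO_API_KEY` environment variable are hypothetical placeholders, so take the real values from Xiaomi’s MiMo platform documentation.

```python
import json
import os
import urllib.request

# Hypothetical placeholders: replace with the values from Xiaomi's docs.
BASE_URL = "https://api.example.com/v1"
MODEL_ID = "mimo-v2-flash"


def build_chat_request(prompt: str, api_key: str, base_url: str = BASE_URL,
                       model: str = MODEL_ID) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completions request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("MIMO_API_KEY")  # hypothetical variable name
    if key:  # only hit the network when a key is configured
        req = build_chat_request("Explain MoE routing in one sentence.", key)
        with urllib.request.urlopen(req) as resp:
            reply = json.load(resp)
        print(reply["choices"][0]["message"]["content"])
```

Existing code built on an OpenAI client library can usually be pointed at such an endpoint by changing only the base URL, key, and model name.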

Local Deployment Guide

For developers requiring full data control, Hugging Face hosts complete model weights under the repository XiaomiMiMo/MiMo-V2-Flash. Local deployment requires:

- Download base or post-trained model weights from Hugging Face

- Install compatible inference framework (transformers library or vLLM)

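- Choose a precision or quantization level (FP16/BF16, or an 8-bit format such as INT8 or Q8)

- Allocate GPU VRAM based on quantization level

Community reports describe deployments on RTX 4090-class consumer GPUs using INT8 quantization, but a single 24GB card cannot hold even 8-bit weights (about 309GB), so such setups rely on offloading most of the model to system memory, with a corresponding drop in speed.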

Hardware Requirements

Minimum recommended specifications for local inference:

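- GPU Memory: the weights alone occupy roughly 618GB at FP16/BF16, 309GB at 8-bit (INT8/Q8), and about 155GB at 4-bit, plus KV cache; cards with 16-48GB of VRAM can run the model only by offloading most weights to system RAM

- System RAM: 64GB+ for a fully GPU-resident setup; hundreds of GB when offloading weights

- Storage: 650GB for full model weights

- GPU Compute: Ampere architecture or newer (RTX 30-series minimum)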

Production deployments serving multiple concurrent users should provision A100 or H100 instances for optimal throughput.
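Sparse activation lowers compute but not storage, so a weights-only estimate shows why memory dominates local deployment planning. This sketch counts decimal gigabytes (10^9 bytes) for the 309B total parameters and ignores KV cache, activations, and quantization metadata such as scales, so real requirements are somewhat higher.

```python
TOTAL_PARAMS = 309e9  # every expert must be stored, even though ~15B are active per token

BYTES_PER_PARAM = {
    "FP16/BF16": 2.0,
    "8-bit (INT8/FP8/Q8)": 1.0,  # approximate: ignores per-block scale metadata
    "4-bit": 0.5,
}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Weights-only footprint in decimal GB; excludes KV cache and activations."""
    return num_params * bytes_per_param / 1e9


for fmt, nbytes in BYTES_PER_PARAM.items():
    gb = weight_memory_gb(TOTAL_PARAMS, nbytes)
    print(f"{fmt:<20} ~{gb:,.0f} GB  (~{gb / 24:.0f}x a 24GB card)")
```

Even at 4-bit, the weights need several 24GB cards' worth of memory, which is why multi-GPU A100/H100 nodes or heavy offloading are required.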

Best Use Cases and Applications

MiMo-V2-Flash excels in specific deployment scenarios where its unique strengths align with application requirements:

Ideal Applications:

- High-volume code generation and analysis pipelines

- Mathematical problem-solving at scale

- Cost-sensitive chatbot deployments

- Real-time document summarization

- Rapid prototyping and experimentation

- Batch processing of structured queries

Avoid For:

- Mission-critical applications requiring 99.9% reliability

- Complex multi-step agent workflows

- Applications heavily dependent on tool use

- Creative writing requiring nuanced style control

The model functions best as an infrastructure layer for high-frequency, well-defined tasks where speed and cost outweigh the need for perfect instruction adherence.

Frequently Asked Questions (FAQs)

What is Xiaomi MiMo-V2-Flash?

MiMo-V2-Flash is Xiaomi’s open-source large language model featuring 309 billion total parameters with only 15 billion active per token. Released in December 2025, it uses Mixture-of-Experts architecture and Hybrid Sliding Window Attention to deliver reasoning capabilities comparable to Claude Sonnet 4.5 while maintaining speeds of 150+ tokens per second at $0.10 per million input tokens.

How does MiMo-V2-Flash compare to DeepSeek V3?

MiMo-V2-Flash outperforms DeepSeek V3 on 7 key benchmarks including AIME 2025 mathematics (94.1% vs 79.8%), runs 2-3× faster, and costs about 63% less per input token ($0.10 vs $0.27 per million). However, DeepSeek V3 shows more consistent instruction-following and better general knowledge performance on MMLU-Pro. Choose MiMo for speed and cost; choose DeepSeek for reliability.

Can I run MiMo-V2-Flash locally?

Yes. MiMo-V2-Flash is fully open-source and available on Hugging Face, but memory is the main constraint: the 309B weights occupy about 309GB at 8-bit (INT8) and about 618GB at FP16, so a single consumer GPU such as the RTX 4090 can run it only by offloading most weights to system RAM. Community testing with Q8 quantization reports 20-37 tokens per second on local hardware. Download weights from the XiaomiMiMo/MiMo-V2-Flash repository and use transformers or vLLM frameworks.

What are the limitations of MiMo-V2-Flash?

Despite strong benchmark performance, MiMo-V2-Flash shows inconsistent instruction-following, unreliable tool calling, and occasional failures on multi-step reasoning tasks. The model excels at well-defined mathematical and coding tasks but struggles with nuanced creative writing and complex agent workflows. Ecosystem support remains limited as of December 2025, with llama.cpp integration still pending.

How much does MiMo-V2-Flash cost to use?

Commercial API access costs $0.10 per million input tokens and $0.30 per million output tokens. A typical 10,000-token conversation (5,000 in, 5,000 out) costs approximately $0.002, versus about $0.09 for the same request on Claude Sonnet 4.5 at its $3/$15 per-million list price. Local deployment is free but requires significant GPU investment for optimal performance.

What is the context window size?

MiMo-V2-Flash supports up to 256,000 tokens in its context window, double DeepSeek V3’s 128K limit. The Hybrid Sliding Window Attention mechanism with 128-token windows and periodic global attention layers enables this extended context while reducing memory requirements by approximately 6× compared to full-attention architectures.

Is MiMo-V2-Flash good for coding?

Yes, MiMo-V2-Flash performs exceptionally well on coding benchmarks, outperforming DeepSeek V3 on SWE-Bench Multilingual and SWE-Bench Verified tests. The model excels at code generation for well-defined tasks and achieves strong scores on Terminal-Bench command-line reasoning. However, real-world testing shows inconsistent results for complex multi-file refactoring or ambiguous coding instructions.

When was MiMo-V2-Flash released?

Xiaomi officially released MiMo-V2-Flash on December 15, 2025, publishing both base and post-trained model variants on Hugging Face. The launch coincided with intense competition in the open-source LLM space, positioning it directly against DeepSeek’s V3-series models.
