OpenAI has introduced IndQA, a benchmark designed to evaluate how well artificial intelligence systems understand and reason about questions rooted in Indian languages and cultural contexts. Announced at the DevDay Exchange event in Bengaluru on November 4, 2025, it is OpenAI’s first region-specific AI evaluation framework, reflecting India’s position as ChatGPT’s second-largest market and its potential to become the largest.

Unlike conventional multilingual benchmarks that focus primarily on translation or multiple-choice tasks, IndQA probes deeper into cultural nuance, contextual understanding, and reasoning abilities across 2,278 expert-crafted questions spanning 12 Indian languages and 10 cultural domains. The benchmark was developed in collaboration with 261 domain experts from across India, including linguists, journalists, scholars, artists, and industry practitioners who brought authentic cultural perspectives to the evaluation process.


What Is the IndQA Benchmark?

The IndQA benchmark (Indian Question-Answering benchmark) is an AI evaluation framework that tests language models on their ability to understand and answer questions about Indian culture, history, everyday life, and specialized knowledge domains, all within the linguistic and cultural context of India. Rather than testing simple language proficiency, IndQA evaluates whether AI systems can grasp what matters to people in Indian communities.

OpenAI developed IndQA to address a critical gap in AI evaluation: about 80% of the global population does not speak English as their primary language, yet most AI benchmarks inadequately measure non-English language capabilities. For a country like India, with approximately one billion people who don’t use English as their primary language and 22 official languages (including at least seven with over 50 million speakers), this gap is particularly significant.

Beyond Translation: Testing Cultural Understanding

IndQA takes a different approach from most multilingual AI evaluations. Benchmarks like MMMLU (Multilingual Massive Multitask Language Understanding) typically rely on translated versions of English questions and straightforward multiple-choice formats. These approaches fail to capture the cultural context, idiomatic expressions, historical references, and region-specific knowledge that define authentic communication in Indian languages.

Each IndQA question is natively authored by experts in the target language rather than translated, ensuring authentic cultural grounding. Questions probe reasoning-heavy, culturally nuanced scenarios that require models to demonstrate genuine understanding rather than pattern matching or memorization.

What makes IndQA different from other AI benchmarks?

IndQA features natively authored questions (not translations) across 12 Indian languages, covering culturally specific topics like Indian architecture, regional cuisine, and vernacular literature. Each question uses rubric-based grading with expert-defined criteria, testing reasoning and cultural understanding rather than simple translation accuracy.

Why OpenAI Created IndQA for India

OpenAI’s decision to build its first region-specific benchmark around India stems from strategic, demographic, and technical considerations. Understanding these motivations reveals broader trends in how AI companies are approaching global expansion beyond English-speaking markets.

The Saturation Problem with Existing Benchmarks

Existing multilingual benchmarks have become saturated, with top AI models clustering near maximum scores, making it difficult to measure meaningful progress. When models achieve 95%+ accuracy on a benchmark, that evaluation tool loses its ability to distinguish between marginal improvements and genuine capability advances.

MMMLU and similar benchmarks also focus predominantly on academic knowledge, translation tasks, and standardized test-style questions. They don’t adequately evaluate what OpenAI calls “the things that matter to people where they live”: context-dependent cultural knowledge, regional variations, and everyday reasoning scenarios.

India’s Growing AI Market and Language Diversity

India represents a compelling starting point for culturally grounded AI benchmarks for several reasons:

- Market size: India is OpenAI’s second-largest market globally, with millions using ChatGPT daily and a rapidly growing developer community

- Linguistic diversity: 22 official languages and hundreds of spoken dialects create complex multilingual challenges

- Digital growth: In October 2025, OpenAI announced a free one-year ChatGPT Go offer for Indian users; the company has also said that paid subscribers in India more than doubled in the first month after ChatGPT Go launched there

- Cultural richness: India’s diverse cultural domains from classical literature to regional cuisines provide fertile ground for testing cultural AI understanding

Srinivas Narayanan, CTO of B2B Applications at OpenAI, emphasized that the aim is to ensure models “accurately reflect the nuances that every culture values,” with plans to replicate the IndQA approach in other regions.

How IndQA Works

IndQA employs a sophisticated, multi-stage evaluation methodology that combines expert knowledge with systematic quality controls. Understanding this process illuminates why the benchmark represents a higher bar for AI performance than traditional metrics.

The Rubric-Based Evaluation System

Rather than simple right/wrong grading, IndQA uses a rubric-based approach where each response is evaluated against expert-defined criteria specific to that question. This mirrors how human educators grade essay responses in examinations.

Each criterion spells out what an ideal answer should include or avoid, with weighted point values based on importance. A model-based grader checks whether each criterion is met, and the final score represents the sum of points earned out of total possible points. This granular approach captures partial credit and nuanced understanding rather than binary scoring.
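The weighted scoring described above can be sketched in a few lines of Python. This is a minimal illustration, not OpenAI’s grader: the `Criterion` type, the `rubric_score` function, and the sample rubric are hypothetical, and the per-criterion met/not-met verdicts that IndQA obtains from a model-based grader are simply passed in as booleans.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One expert-written rubric item (hypothetical structure, not IndQA's format)."""
    description: str
    points: float  # weight reflecting how important the criterion is


def rubric_score(criteria: list[Criterion], met: list[bool]) -> float:
    """Return points earned divided by total possible points, in [0, 1].

    `met[i]` is the verdict for `criteria[i]`. In IndQA a model-based grader
    produces these verdicts; this sketch takes them as given.
    """
    if len(criteria) != len(met):
        raise ValueError("need exactly one verdict per criterion")
    total = sum(c.points for c in criteria)
    if total <= 0:
        raise ValueError("rubric must have a positive point total")
    earned = sum(c.points for c, ok in zip(criteria, met) if ok)
    return earned / total


# Hypothetical rubric for a made-up food question; not taken from IndQA.
rubric = [
    Criterion("Identifies the dish correctly", 3),
    Criterion("Explains the key ingredient's regional significance", 2),
    Criterion("Does not confuse it with a similar dish from another state", 1),
]
print(rubric_score(rubric, [True, True, False]))  # 5 of 6 points earned
```

Because each criterion carries its own weight, a response that gets the central points right but misses a minor one still earns most of the credit, which is the partial-credit behaviour a rubric is meant to capture.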

Expert-Driven Question Creation Process

OpenAI partnered with organizations to identify 261 domain experts across IndQA’s 10 cultural domains. These experts possess native-level fluency in their target language (and English) plus deep subject matter expertise in their specialty area.

The question creation workflow included:

- Expert authoring: Domain specialists drafted difficult, reasoning-focused prompts tied to their regions and specialties

- Adversarial testing: Questions were tested against OpenAI’s strongest models at the time of creation (GPT-4o, OpenAI o3, GPT-4.5, and, for part of the set, GPT-5)

- Selective retention: Only questions where a majority of these top models failed were kept, preserving “headroom for progress”

- Criteria definition: Experts provided detailed grading rubrics for each question

- Ideal answers: Reference answers and English translations were added for auditability

- Peer review: Iterative review cycles ensured quality before final sign-off
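The selective-retention step above amounts to a majority-vote filter over candidate questions. The sketch below assumes each model’s attempt has already been judged acceptable or not (the source does not describe how that judgment was made); the function names, question IDs, and pass/fail values are hypothetical.

```python
def keep_question(model_passed: dict[str, bool]) -> bool:
    """Keep a question only if a strict majority of the tested models failed it."""
    failures = sum(1 for passed in model_passed.values() if not passed)
    return failures > len(model_passed) / 2


def filter_candidates(results: dict[str, dict[str, bool]]) -> list[str]:
    """Return the IDs of candidate questions that survive the majority-failure filter."""
    return [qid for qid, passes in results.items() if keep_question(passes)]


# Hypothetical pass/fail verdicts per model; not real IndQA data.
candidates = {
    "q1": {"gpt-4o": False, "o3": False, "gpt-4.5": True, "gpt-5": False},
    "q2": {"gpt-4o": True, "o3": True, "gpt-4.5": False, "gpt-5": True},
    "q3": {"gpt-4o": False, "o3": True, "gpt-4.5": False, "gpt-5": True},
}
print(filter_candidates(candidates))  # ['q1']
```

Here q1 is kept (three of four models failed), q2 is dropped, and q3 is dropped as well, because a two-two split is not a majority.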

Adversarial Filtering Against Top Models

The adversarial filtering stage is crucial for maintaining benchmark difficulty. By testing each question against frontier models like GPT-4.5 and GPT-5, OpenAI aimed to ensure the benchmark wouldn’t be immediately saturated.

However, this approach also introduces a potential bias: questions are adversarially selected against OpenAI’s own models, which could theoretically disadvantage OpenAI models compared to competitors in benchmark comparisons. OpenAI acknowledges this caveat in its methodology documentation.

How does IndQA’s rubric-based grading work?

Each IndQA question includes expert-written criteria defining what a complete answer should contain. Criteria receive weighted points based on importance. A model-based grader evaluates whether each criterion is satisfied, then calculates the final score as points earned divided by total possible points, similar to essay grading in exams.

Languages and Cultural Domains Covered

IndQA’s comprehensive scope distinguishes it from narrower multilingual evaluations. The benchmark deliberately spans both major Indian languages and culturally significant domains that matter to Indian users.

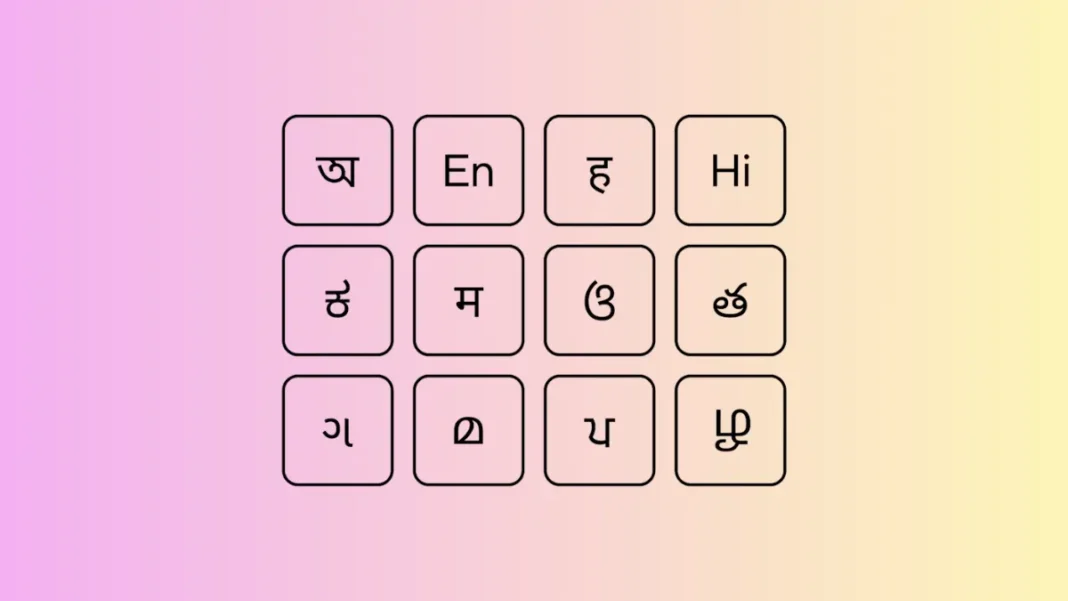

12 Indian Languages Included

The IndQA benchmark covers the following languages:

- Bengali (220+ million speakers)

- English (included for cultural context)

- Gujarati (55+ million speakers)

- Hindi (340+ million speakers)

- Hinglish (Hindi-English code-switching, included because of its prevalence in conversation)

- Kannada (45+ million speakers)

- Malayalam (35+ million speakers)

- Marathi (83+ million speakers)

- Odia (35+ million speakers)

- Punjabi (33+ million speakers)

- Tamil (75+ million speakers)

- Telugu (82+ million speakers)

The inclusion of Hinglish is particularly noteworthy, as it reflects the reality of code-switching in everyday Indian conversation (mixing Hindi and English within a single sentence), a phenomenon that traditional benchmarks largely ignore.

10 Cultural Domains Tested

IndQA evaluates knowledge across diverse cultural areas:

- Architecture & Design: Regional architectural styles, temple design, traditional building methods

- Arts & Culture: Classical and folk art forms, cultural practices, artistic traditions

- Everyday Life: Daily routines, social norms, regional customs, practical knowledge

- Food & Cuisine: Regional dishes, cooking methods, culinary history, food culture

- History: Regional history, historical figures, significant events, cultural evolution

- Law & Ethics: Legal frameworks, ethical considerations, social justice issues

- Literature & Linguistics: Vernacular literature, linguistic features, notable authors, literary movements

- Media & Entertainment: Cinema, music, entertainment industry, cultural icons

- Religion & Spirituality: Religious practices, spiritual traditions, philosophical concepts

- Sports & Recreation: Cricket, regional sports, athletes, sporting culture

This domain diversity ensures AI models are tested on the breadth of knowledge that Indian users actually care about, rather than just academic topics.

IndQA vs Other AI Benchmarks

Comparing IndQA to established multilingual benchmarks clarifies its unique value proposition and evaluation approach.

Key Differences from MMMLU and MGSM

| Feature | IndQA | MMMLU | MGSM |
|---|---|---|---|
| Question Type | Open-ended, reasoning-heavy | Multiple choice | Grade-school math word problems |
| Cultural Focus | Deep cultural grounding | Academic knowledge | Problem-solving |
| Question Origin | Natively authored | Translated from English MMLU | Translated from English GSM8K |
| Languages | 12 Indian languages | 14 languages | 10 languages (plus English) |
| Grading Method | Rubric-based, weighted criteria | Binary (correct/incorrect) | Binary (correct/incorrect) |
| Expert Involvement | 261 domain experts | Limited | Limited |
| Current Saturation | Low (designed for headroom) | High (top models near ceiling) | High (top models near ceiling) |
| Evaluation Focus | Cultural understanding + reasoning | Factual knowledge | Math skills |

IndQA’s rubric-based grading and natively authored questions represent the key differentiators. MMMLU spans slightly more languages overall, but only Hindi and Bengali among Indian languages, and its questions are translated from English rather than natively authored.

Performance Results on IndQA

Early benchmark results reveal that even the most advanced AI models struggle with culturally grounded Indian language questions, validating IndQA’s difficulty level.

GPT-5 Leads but Room for Improvement Remains

According to performance data shared at the DevDay Exchange event:

- GPT-5 (Thinking High): 34.9%, the highest-scoring model

- Gemini 2.5 Pro: 34.3%, nearly tied with GPT-5

- Grok 4: 28.5%

- Earlier models like GPT-4o, OpenAI o3, and GPT-4.5 scored lower (specific scores not disclosed)

These relatively low scores show that IndQA avoids saturation: even the best model scores below 35% under rubric grading, which awards partial credit rather than counting only fully correct answers. This leaves substantial “headroom” for measuring improvement as models evolve.

OpenAI’s own analysis shows its model family has improved significantly on Indian languages over the past couple of years, though substantial room for enhancement remains.

Domain and Language-Specific Performance

Performance varies considerably across IndQA’s different languages and cultural domains, though OpenAI cautions against direct cross-language comparisons. Because each language’s question set is natively authored for cultural authenticity, the questions are not identical across languages, so language-to-language score differences don’t directly measure relative language capabilities.

Instead, IndQA is designed to measure improvement over time within a model family or configuration for each specific language. This longitudinal approach helps developers track whether their language-specific optimization efforts are working.
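In practice, that means tracking score changes per language across model versions rather than ranking languages against each other. A minimal sketch, assuming per-language scores are already available as fractions between 0 and 1; the function name and the numbers in the usage lines are illustrative placeholders, not published IndQA results.

```python
def per_language_deltas(
    old: dict[str, float], new: dict[str, float]
) -> dict[str, float]:
    """Score change for each language present in both evaluation runs.

    Each delta compares a language only with itself. The deltas are not
    meant to be compared across languages, because every language has its
    own natively authored question set.
    """
    shared = old.keys() & new.keys()
    return {lang: round(new[lang] - old[lang], 4) for lang in sorted(shared)}


# Placeholder scores for two hypothetical model versions (not real results).
previous = {"Hindi": 0.30, "Tamil": 0.25, "Odia": 0.20}
current = {"Hindi": 0.33, "Tamil": 0.31}
print(per_language_deltas(previous, current))
```

Languages missing from either run (Odia in this example) are skipped rather than treated as a score of zero.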

What scores do AI models achieve on IndQA?

The highest-performing model, GPT-5 (Thinking High), scores 34.9% on IndQA, followed by Gemini 2.5 Pro at 34.3% and Grok 4 at 28.5%. These low scores indicate the benchmark successfully maintains difficulty and avoids saturation, providing room to measure future improvements.

The 261 Experts Behind IndQA

The quality and authenticity of IndQA fundamentally depends on the diverse expertise of its contributor network.

Who Contributed to the Benchmark?

The 261 Indian experts who authored and reviewed IndQA questions represent a remarkable cross-section of Indian cultural knowledge:

- A Nandi Award-winning Telugu actor and screenwriter with over 750 films

- A Marathi journalist and editor at Tarun Bharat

- A scholar of Kannada linguistics and dictionary editor

- An International Chess Grandmaster who coaches top-100 chess players

- A Tamil writer, poet, and cultural activist advocating for social justice and literary freedom

- An award-winning Punjabi music composer

- A Gujarati heritage curator and conservation specialist

- An award-winning Malayalam poet and performance artist

- A professor of history specializing in Bengal’s cultural heritage

- A professor of architecture focusing on Odishan temples

This expert diversity ensures questions authentically reflect regional variations, cultural nuances, and domain-specific knowledge that only insiders would understand. It also demonstrates OpenAI’s commitment to community-driven benchmark development rather than purely algorithmic or translation-based approaches.

What IndQA Means for Indian AI Users

The introduction of IndQA signals important shifts in how AI companies approach non-English markets and cultural representation.

For Indian developers and businesses, IndQA could offer a standardized way to evaluate whether language models meet their specific needs before integration, to the extent the benchmark is made available to them. Rather than relying on generic English-focused benchmarks, organizations could assess model performance on culturally relevant tasks.

For educators and students, the benchmark validates that AI systems need deeper cultural understanding to be truly useful in Indian contexts. This may influence how educational institutions approach AI literacy and integration.

For content creators and digital marketers, IndQA’s focus on cultural nuance underscores the importance of context-aware AI assistance rather than simple translation. Tools that score well on IndQA may be better at generating culturally appropriate content.

The benchmark also reflects OpenAI’s strategic commitment to India, as evidenced by the free one-year ChatGPT Go offer for Indian users. Sam Altman has stated that India “may well become our largest market,” calling the country “incredibly fast-growing” in AI adoption.

Limitations and Future Scope

OpenAI transparently acknowledges several important limitations of the IndQA benchmark.

Cross-language comparisons are not appropriate because questions differ across languages. A high score in Tamil and a lower score in Bengali don’t necessarily mean the model is better at Tamil; the question sets are different.

Adversarial filtering bias exists because questions were selected based on failure rates against OpenAI’s own models (GPT-4o, OpenAI o3, GPT-4.5, and, in part, GPT-5). This could theoretically disadvantage OpenAI models compared to competitors, though the extent of this effect is unclear.

Limited language coverage means IndQA doesn’t yet evaluate all 22 scheduled Indian languages. Languages like Assamese, Urdu, Konkani, and others remain unrepresented, though expansion may come in future versions.

Academic focus persists to some extent: although culturally grounded, the benchmark still relies on structured question-answer formats that may not fully represent real-world conversational AI usage.

OpenAI hopes IndQA will “inform and inspire new benchmark creation from the research community” and serve as a model for creating similar culturally grounded evaluations in other regions. The company specifically notes that “IndQA-style questions are especially valuable in languages or cultural domains that are poorly covered by existing AI benchmarks”.

Key Takeaways

OpenAI’s IndQA benchmark represents a meaningful step toward culturally inclusive AI evaluation, moving beyond translation-focused metrics to test genuine understanding of Indian languages and cultural contexts. With the top-performing model, GPT-5 (Thinking High), scoring only 34.9%, IndQA avoids the saturation problem affecting existing multilingual benchmarks and leaves clear room for measuring future improvements.

The collaboration with 261 Indian experts across diverse domains demonstrates that effective multilingual AI requires deep community involvement, not just algorithmic translation. As OpenAI expands this approach to other regions, IndQA may serve as a template for culturally grounded benchmarks worldwide.

For India’s growing AI community (already OpenAI’s second-largest market, with the potential to become its largest), IndQA signals that major AI companies are taking regional needs seriously, investing resources to ensure their models work authentically in local contexts.

Frequently Asked Questions (FAQs)

What does IndQA stand for?

IndQA stands for Indian Question-Answering benchmark, a specialized AI evaluation framework testing language models on Indian languages and cultural knowledge.

How many questions are in the IndQA benchmark?

IndQA contains 2,278 questions created by 261 domain experts across India, spanning 12 languages and 10 cultural domains.

Which languages does IndQA cover?

IndQA covers Bengali, English, Gujarati, Hindi, Hinglish, Kannada, Malayalam, Marathi, Odia, Punjabi, Tamil, and Telugu, with Hinglish included specifically to address code-switching patterns.

How is IndQA different from MMMLU?

Unlike MMMLU’s translated multiple-choice questions, IndQA features natively authored, open-ended questions graded with rubric-based criteria, focusing on cultural reasoning rather than factual recall.

Why did OpenAI create IndQA?

OpenAI created IndQA because existing multilingual benchmarks are saturated (top models near maximum scores) and don’t adequately test cultural understanding, a critical gap for India, OpenAI’s second-largest market, where about one billion people don’t use English as their primary language.

Can I access the IndQA benchmark dataset?

As of November 2025, OpenAI has announced IndQA publicly but has not indicated whether the dataset will be open-sourced or remain proprietary for internal evaluation purposes.


What is the IndQA benchmark?

IndQA (Indian Question-Answering benchmark) is OpenAI’s first region-specific AI evaluation framework, testing language models on 2,278 culturally grounded questions across 12 Indian languages and 10 cultural domains including literature, cuisine, history, and everyday life. Created with 261 Indian experts, it uses rubric-based grading rather than binary scoring.

Why is IndQA important for AI development?

IndQA addresses critical gaps in multilingual AI evaluation by testing cultural understanding, not just translation accuracy. With 80% of people worldwide not using English as their primary language, benchmarks like IndQA help developers build AI that works authentically across diverse cultures rather than imposing English-centric patterns on non-English contexts.

Who created the IndQA benchmark?

OpenAI developed IndQA in collaboration with 261 domain experts from across India, including linguists, journalists, scholars, award-winning artists, a chess grandmaster, architecture professors, and cultural activists. These experts natively authored questions in their languages and specialties, ensuring cultural authenticity rather than relying on translation.

Source: OpenAI