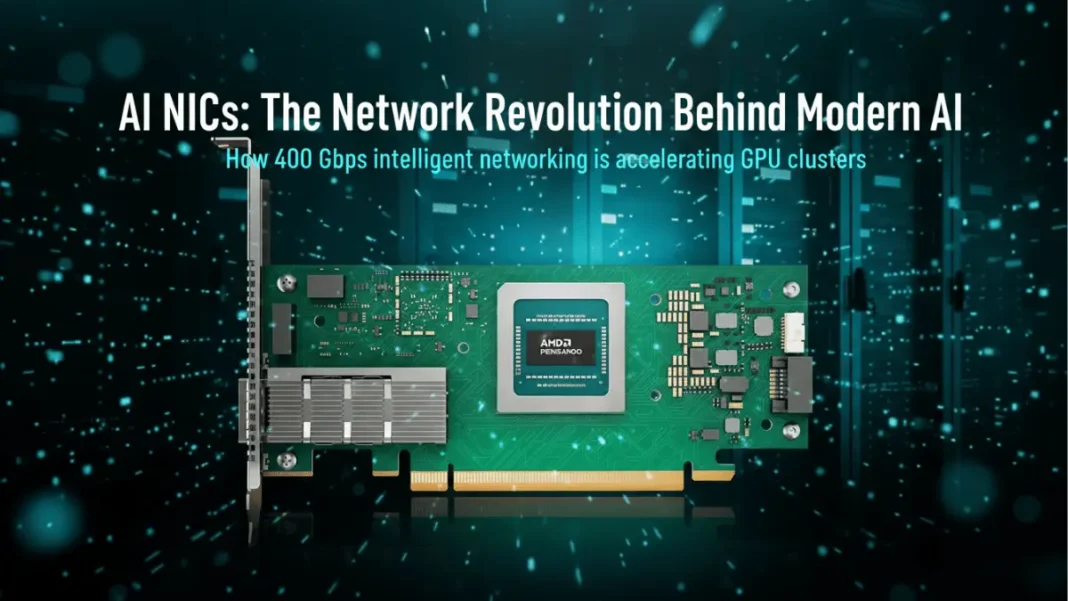

Summary: AI NICs are specialized network cards designed to handle the extreme bandwidth and ultra-low latency requirements of AI workloads. Unlike traditional NICs that simply move data, AI NICs like the AMD Pensando Pollara 400 intelligently route traffic, avoid congestion, and recover from packet loss, delivering up to 25% better performance in multi-GPU training environments. They’re essential for scale-out AI infrastructure where dozens to thousands of GPUs must communicate synchronously without bottlenecks.

Training large language models across 100+ GPUs sounds impressive until network congestion brings everything to a crawl. AI NICs solve the bandwidth crisis that traditional network cards can’t handle, delivering intelligent packet routing and congestion avoidance specifically engineered for modern AI workloads.

The Problem Traditional NICs Can’t Solve

Why GPU Clusters Choke on Standard Ethernet

Modern AI training requires constant data synchronization across multiple GPU nodes. When training GPT-class models, each GPU processes different data batches but must share gradients and weights continuously. Traditional network interface cards (NICs) treat this traffic like any other data flow without understanding the time-sensitive, high-volume nature of AI workloads.

The result? Packet loss, network congestion, and latency spikes that cascade across entire clusters. Imagine weak Wi-Fi lag, but multiplied across millions of data packets moving between dozens of GPUs. A single dropped packet can stall an entire training iteration, wasting expensive GPU compute cycles while the network catches up.

The 90% Ethernet Reality Check

Nearly 90% of organizations are leveraging or planning to deploy Ethernet for their AI clusters, according to Enterprise Strategy Group research. Ethernet won the connectivity battle due to its widespread adoption, cost-effectiveness, and compatibility. But there’s a fundamental mismatch: Ethernet was designed for general-purpose networking, not the sustained 400 Gbps throughput with sub-microsecond latency that AI demands.

Traditional NICs lack the intelligence to prioritize AI traffic, reroute around congestion points, or recover gracefully from packet loss. This creates a performance ceiling that no amount of GPU power can overcome.

Pros

- Dramatic performance improvement: 20-25% faster job completion for multi-node AI training

- Intelligent congestion avoidance: Proactive path-aware routing prevents bottlenecks before they occur

- Future-proof programmability: P4 engines adapt to evolving AI protocols via firmware updates

- Reduced packet loss: Selective retransmission and multipathing minimize data loss impact

- Hardware RAS features: Fault detection and rapid recovery maintain uptime in mission-critical workloads

- 400 Gbps bandwidth: Matches modern GPU interconnect speeds for balanced system design

- Lower total cost: Faster job completion reduces overall GPU infrastructure costs

Cons

- High initial cost: $3,000-8,000 per card vs $100-500 for traditional NICs

- Infrastructure requirements: Requires PCIe Gen5 and 400G Ethernet fabric to realize benefits

- Limited benefit for small clusters: ROI is justified only on 8+ GPU deployments

- Complexity: Requires specialized knowledge for optimal configuration and troubleshooting

- Thermal considerations: 50-75W power draw demands robust cooling in dense servers

- Switch compatibility: Not all 400G switches handle AI traffic patterns optimally

- Vendor ecosystem maturity: Still emerging technology with evolving best practices

What Is an AI NIC?

An AI NIC (AI Network Interface Card) is a specialized network adapter designed to optimize data transfer in AI infrastructure. It intelligently manages traffic between GPU clusters using techniques like path-aware congestion control, packet spray, and selective retransmission to minimize latency and maximize throughput for training and inference workloads.

Core Components That Set AI NICs Apart

AI NICs aren’t just faster network cards; they fundamentally rethink how data moves between GPUs. Three architectural elements distinguish them:

Programmable packet processing engines: AI NICs use hardware-based P4 engines or similar architectures that can be programmed to handle AI-specific traffic patterns. The AMD Pensando Pollara 400, for example, employs a third-generation fully programmable P4 engine that adapts to evolving AI networking requirements.

Intelligent traffic management: Instead of simple load balancing, AI NICs analyze network paths in real time and make split-second routing decisions. They monitor congestion, detect failed links, and spray packets across multiple paths, all without CPU intervention.

Hardware-accelerated reliability features: AI NICs implement RAS (Reliability, Availability, Serviceability) capabilities directly in silicon. Rapid fault detection identifies network issues within microseconds, while selective retransmission only resends dropped packets instead of entire data streams.

How AI NICs Differ From SmartNICs

The networking industry uses “SmartNIC” and “AI NIC” terminology, but they serve different purposes. SmartNICs offload general networking tasks like encryption, firewalling, and virtualization from the host CPU. They excel at security functions and SDN (Software-Defined Networking) workloads.

AI NICs target a narrower, more demanding problem: optimizing GPU-to-GPU communication in AI training and inference clusters. While both use programmable hardware, AI NICs specifically implement congestion avoidance algorithms, collective communication optimizations, and latency-sensitive packet handling that SmartNICs weren’t designed for.

Key distinction: A SmartNIC might encrypt traffic and manage virtual networks, while an AI NIC is built to make allreduce operations complete up to 25% faster during distributed training.

How AI NICs Actually Work

Path-Aware Congestion Control

Traditional TCP congestion control waits for packet loss to detect problems; by then, it’s too late for time-sensitive AI traffic. Path-aware congestion control monitors network telemetry proactively, measuring queue depths and link utilization across all available paths.

The AMD Pensando Pollara 400 continuously evaluates each route’s congestion level. When it detects increasing latency on one path, it instantly shifts traffic to clearer routes before packets start dropping. This proactive approach maintains consistent throughput even as network conditions fluctuate during large-scale training runs.
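To make the idea concrete, here is a minimal sketch of telemetry-driven path selection. It is not the Pollara 400's actual algorithm: the `PathTelemetry` fields, the scoring weights, and the `pick_path` helper are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

@dataclass
class PathTelemetry:
    path_id: int
    queue_depth: float   # fraction of switch buffer in use, 0.0-1.0 (assumed metric)
    utilization: float   # fraction of link capacity in use, 0.0-1.0 (assumed metric)
    link_up: bool = True

def congestion_score(p: PathTelemetry) -> float:
    # Hypothetical weighting: queue build-up is treated as the earlier warning sign.
    return 0.7 * p.queue_depth + 0.3 * p.utilization

def pick_path(paths: list) -> int:
    """Return the id of the least-congested path that is still up."""
    healthy = [p for p in paths if p.link_up]
    if not healthy:
        raise RuntimeError("no healthy path available")
    return min(healthy, key=congestion_score).path_id

paths = [
    PathTelemetry(0, queue_depth=0.80, utilization=0.90),               # congested
    PathTelemetry(1, queue_depth=0.10, utilization=0.40),               # clear
    PathTelemetry(2, queue_depth=0.05, utilization=0.20, link_up=False),  # failed link
]
best = pick_path(paths)
print(f"send next flowlet on path {best}")
```

The point of the sketch is that the decision uses queue depth and utilization, which rise before any packet is dropped, rather than reacting to loss after the fact.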

Intelligent Packet Spray and Multipathing

AI workloads benefit from data center topologies with multiple parallel paths between nodes (leaf-spine architectures with 8-32 paths are common). AI NICs exploit this redundancy through intelligent packet spray, which distributes data across multiple paths simultaneously.

But spraying packets randomly creates its own problem: out-of-order delivery. AI NICs solve this with sophisticated reordering buffers and sequence tracking. The Pollara 400 delivers packets to the receiving GPU in the correct order despite taking different physical routes, combining the throughput benefits of multipathing with the reliability of ordered delivery.

This technique can improve network utilization by 40-60% compared to single-path routing, especially in clusters with uneven traffic patterns.
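The spray-then-reorder idea can be sketched in a few lines. This is a simplified software model, not the hardware implementation: `spray` uses plain round-robin and `ReorderBuffer` is a hypothetical class that releases packets only once the next expected sequence number has arrived.

```python
def spray(packets, num_paths):
    """Round-robin packets across paths; returns one list per path."""
    paths = [[] for _ in range(num_paths)]
    for i, pkt in enumerate(packets):
        paths[i % num_paths].append(pkt)
    return paths

class ReorderBuffer:
    """Holds early arrivals until the next expected sequence number shows up."""

    def __init__(self):
        self.next_seq = 0
        self.pending = {}

    def receive(self, seq, payload):
        """Accept one packet; return the payloads now deliverable in order."""
        self.pending[seq] = payload
        delivered = []
        while self.next_seq in self.pending:
            delivered.append(self.pending.pop(self.next_seq))
            self.next_seq += 1
        return delivered

packets = [(seq, f"chunk-{seq}") for seq in range(6)]
per_path = spray(packets, num_paths=3)

# Simulate skewed arrival: path 0 is the slowest, so its packets land last.
arrival_order = per_path[2] + per_path[1] + per_path[0]

buf = ReorderBuffer()
delivered = []
for seq, payload in arrival_order:
    delivered.extend(buf.receive(seq, payload))
print(delivered)
```

Even though packets arrive as 2, 5, 1, 4, 0, 3, the receiver hands them up in sequence order, which is the property the paragraph above describes.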

Selective Retransmission Technology

When packet loss does occur, traditional NICs retransmit entire data streams or large windows of packets. In a 400 Gbps flow, this wastes massive bandwidth. AI NICs implement selective retransmission, resending only the exact packets that failed.

The AI NIC maintains detailed state about in-flight packets, tracking which segments were acknowledged and which need retransmission. This granular approach reduces recovery time from milliseconds to microseconds, critical when thousands of GPUs wait for synchronization barriers during gradient updates.
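A small comparison shows why packet-level recovery matters. The sketch below uses go-back-N as a simplified stand-in for "resend a large window" and contrasts it with resending only unacknowledged packets; the function names and the loss pattern are illustrative assumptions.

```python
def go_back_n_resend(total_packets, lost):
    """Resend everything from the first lost packet onward."""
    if not lost:
        return []
    return list(range(min(lost), total_packets))

def selective_resend(total_packets, acked):
    """Resend only the packets that were never acknowledged."""
    return [seq for seq in range(total_packets) if seq not in acked]

total = 1000
lost = {17, 512}                       # hypothetical drops in a 1,000-packet burst
acked = set(range(total)) - lost       # per-packet state the NIC would track

gbn = go_back_n_resend(total, lost)
sel = selective_resend(total, acked)
print(f"go-back-N resends {len(gbn)} packets, selective resends {len(sel)}")
```

With two drops in this burst, the window-based scheme resends 983 packets while the selective scheme resends 2.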

AMD Pensando Pollara 400: Technical Deep Dive

The AMD Pensando Pollara 400 AI NIC delivers up to 400 Gbps Ethernet speeds with a PCIe Gen5 x16 host interface. It achieves up to 25% improvement in RCCL (AMD’s ROCm Communication Collectives Library) performance through intelligent packet spray, path-aware congestion control, and rapid fault detection specifically optimized for scale-out AI training and inference.

Specifications and Performance Benchmarks

| Specification | Details |
|---|---|
| Maximum Bandwidth | 400 Gbps |
| Form Factor | Half-height, half-length (HHHL) |
| Host Interface | PCIe Gen5.0 x16 |
| Network Interface | QSFP112 (NRZ/PAM4 Serdes) |
| Ethernet Speeds | 25/50/100/200/400 Gbps |
| Port Configuration | Supports up to 4 ports |
| Management | MCTP over SMBus |
| OCP Support | OCP 3.0 compliant |

The 400 Gbps line rate matches the per-GPU scale-out bandwidth commonly provisioned in H100- and H200-class clusters, where each GPU is typically paired with its own 400 Gbps NIC; NVLink, which is far faster, carries traffic inside the node. PCIe Gen5 support ensures the host interface doesn’t bottleneck GPU-to-network transfers, critical when moving terabytes of gradient data during training.
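The host-interface point can be checked with quick arithmetic. The sketch below uses the published per-lane signalling rates and 128b/130b encoding for PCIe Gen3 through Gen5; it ignores packet-level protocol overhead, so real usable throughput is somewhat lower than these figures.

```python
# Per-lane raw signalling rates in GT/s for each PCIe generation.
GT_PER_LANE = {"Gen3": 8, "Gen4": 16, "Gen5": 32}
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding used by Gen3-Gen5

def pcie_gbps(gen, lanes=16):
    """Link bandwidth in Gbps after line encoding, before TLP/DLLP overhead."""
    return GT_PER_LANE[gen] * lanes * ENCODING_EFFICIENCY

NIC_GBPS = 400
headroom = {gen: pcie_gbps(gen) - NIC_GBPS for gen in GT_PER_LANE}

for gen, spare in headroom.items():
    verdict = "ok" if spare >= 0 else "bottleneck"
    print(f"{gen} x16: {pcie_gbps(gen):.0f} Gbps -> {verdict}")
```

Only the Gen5 x16 link (about 504 Gbps) leaves headroom above a 400 Gbps port; Gen4 x16 (about 252 Gbps) and Gen3 x16 (about 126 Gbps) would cap the card below line rate.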

Third-Gen P4 Programmable Engine

AMD built the Pollara 400 on its proven third-generation P4 programmable engine. This matters for two reasons:

Future-proofing: As AI networking protocols evolve (RDMA over Converged Ethernet v2, emerging collective communication patterns), the P4 architecture allows firmware updates to support new behaviors without hardware replacement. Data centers investing millions in AI infrastructure can adapt to protocol changes through software updates.

Customization: Hyperscalers and research institutions can program custom packet processing logic. If Meta develops a proprietary gradient compression algorithm or OpenAI needs specialized telemetry, the P4 engine can be modified to accelerate these workload-specific requirements.

This programmability distinguishes the Pollara 400 from fixed-function AI NICs that excel at today’s workloads but can’t adapt to tomorrow’s innovations.

Real-World Use Cases

LLM Training Across Distributed GPU Clusters

Training GPT-4 class models requires synchronized data parallelism across 1,000+ GPUs. Each training step involves:

- Forward pass: Input data flows through the model

- Backward pass: Gradients computed at each layer

- Allreduce operation: All GPUs exchange and sum gradients

- Weight update: Synchronized parameter updates

The allreduce step generates massive all-to-all traffic: every GPU communicates with every other GPU. AI NICs accelerate this by up to 25% through optimized collective communication handling, intelligent load balancing, and resilient failover mechanisms. If that 25% carried through to end-to-end job time, a 7-day (168-hour) training run would finish 42 hours sooner; in practice the saving scales with the share of each step spent communicating.
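For readers new to collectives, the semantics of the allreduce and weight-update steps can be shown with a toy model. This sketch only captures what the operation computes; real libraries such as NCCL and RCCL use ring or tree algorithms over the network, and the function names and values here are illustrative.

```python
def allreduce_sum(per_gpu_grads):
    """Every GPU ends up with the element-wise sum of all GPUs' gradients."""
    summed = [sum(vals) for vals in zip(*per_gpu_grads)]
    return [list(summed) for _ in per_gpu_grads]

def sgd_step(weights, grads, lr, world_size):
    """Apply the averaged gradient; identical on every GPU after allreduce."""
    return [w - lr * g / world_size for w, g in zip(weights, grads)]

# Three GPUs, each holding gradients computed from its own data batch (toy values).
local_grads = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
reduced = allreduce_sum(local_grads)

weights = [[10.0, 10.0] for _ in local_grads]
new_weights = [sgd_step(w, g, lr=0.5, world_size=3) for w, g in zip(weights, reduced)]
print(reduced[0], new_weights[0])
```

Because no GPU can run `sgd_step` until `allreduce_sum` has finished everywhere, any network delay in the reduction stalls the whole step.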

Real-Time AI Inference at Scale

Cloud providers running LLM inference services (ChatGPT, Claude, Gemini) distribute requests across GPU pools. Each user query might touch 4-16 GPUs for mixture-of-experts models or speculative decoding techniques.

AI NICs reduce tail latency, the 99th percentile response time that determines user experience. By maintaining sub-100 microsecond GPU-to-GPU communication even under heavy load, AI NICs help providers meet strict SLA guarantees without overprovisioning GPUs.

Time-sensitive inference benefits more from latency reduction than throughput increases. A 50-microsecond improvement per GPU hop compounds across multi-stage inference pipelines, cutting total response time by milliseconds, which is the difference between “instant” and “noticeable” lag to users.
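Tail latency is easy to misjudge from averages, so a short sketch of how the 99th percentile is computed may help. The latency samples below are invented for illustration, and `percentile` is a plain nearest-rank implementation rather than any vendor's telemetry API.

```python
import math

def percentile(samples, pct):
    """Nearest-rank percentile: smallest value with at least pct% of samples at or below it."""
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]

# 100 hypothetical GPU-to-GPU latencies in microseconds: mostly fast, a few slow.
latencies_us = [40] * 95 + [90] * 3 + [400] * 2

p50 = percentile(latencies_us, 50)
p99 = percentile(latencies_us, 99)
mean = sum(latencies_us) / len(latencies_us)
print(f"p50={p50}us mean={mean:.1f}us p99={p99}us")
```

In this sample the median is 40 µs and the mean is under 50 µs, yet the p99 is 400 µs: two slow transfers out of a hundred define the tail that an SLA is measured against.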

Enterprise AI Security Applications

AI NICs enhance data center security through robust encryption and isolated traffic management. When processing sensitive training data (medical records, financial transactions), AI NICs can encrypt GPU-to-GPU traffic without burdening the host CPU.

The RAS features detect anomalous traffic patterns that might indicate data exfiltration attempts. Since AI NICs monitor all inter-GPU communication, they provide a comprehensive audit trail for compliance requirements (HIPAA, GDPR) without performance degradation.

AI NIC vs SmartNIC vs Traditional NIC

| Feature | Traditional NIC | SmartNIC | AI NIC |
|---|---|---|---|
| Primary Purpose | Basic network connectivity | Offload CPU networking tasks | Optimize GPU-to-GPU AI traffic |
| Processor | Low-power single core | Multi-core ARM/x86 | Programmable P4 engine |
| Bandwidth | 10-100 Gbps typical | 25-200 Gbps | 400 Gbps |
| Latency Optimization | None | General-purpose | AI-specific (< 1μs) |
| Congestion Control | Basic TCP | Enhanced TCP/RDMA | Path-aware AI algorithms |
| Packet Spray | No | Limited | Intelligent with reordering |
| Use Case | General networking | Security, virtualization | LLM training, AI inference |
| CPU Offload | None | Encryption, firewall | Collective communications |
| Programmability | Fixed function | Limited via firmware | Fully programmable |
| Typical Price Range | $100-500 | $1,000-3,000 | $3,000-8,000 (estimated) |

Performance Benefits You Can Measure

25% RCCL Performance Improvement

AMD benchmarks show the Pensando Pollara 400 achieves up to 25% improvement in RCCL performance. RCCL, AMD’s ROCm Communication Collectives Library and its counterpart to NVIDIA’s NCCL, handles the allreduce, allgather, and broadcast operations that dominate communication time in distributed training.

This improvement comes from three optimizations:

- Reduced synchronization barriers: Faster communication means GPUs spend less time waiting

- Lower message latency: Sub-microsecond packet delivery keeps gradient updates flowing

- Better bandwidth utilization: Intelligent routing uses available network capacity more efficiently

Real-world impact: A 1,000-GPU training job with 30% communication overhead (typical for GPT-class models) sees 7.5% faster overall job completion. On a $2 million training run, that’s $150,000 in compute savings.
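The arithmetic behind that estimate is worth spelling out, since only the communication share of the job gets faster. The sketch below reproduces the figures above under the same simplifying assumption, namely that a 25% collective improvement removes 25% of communication time and leaves compute time unchanged.

```python
def job_time_saved(comm_fraction, comm_improvement):
    """Fraction of total job time saved when only communication gets faster."""
    return comm_fraction * comm_improvement

saved_fraction = job_time_saved(comm_fraction=0.30, comm_improvement=0.25)
savings_usd = 2_000_000 * saved_fraction

print(f"job time saved: {saved_fraction:.1%}")
print(f"compute savings on a $2M run: ${savings_usd:,.0f}")
```

The same function shows why compute-bound jobs gain little: with a 10% communication share, the identical NIC improvement saves only 2.5% of job time.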

Latency Reduction in Multi-Node Training

Traditional NICs introduce 10-50 microseconds of latency per hop in multi-node clusters. AI NICs reduce this to 1-5 microseconds through hardware-accelerated packet processing and bypass techniques.

In an 8-node GPU cluster (common for mid-size enterprise AI), each gradient synchronization passes through multiple network hops. Reducing per-hop latency from 20μs to 3μs saves 17μs per hop. With 1,000 gradient updates per training epoch, that’s 17 milliseconds saved per hop per epoch, and the savings compound over weeks-long training runs.
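The per-epoch figure follows directly from the per-hop numbers. A short sketch, in which the `hops` parameter is an added assumption to show how the saving scales when a synchronization crosses more than one hop:

```python
US_PER_MS = 1_000

def epoch_savings_ms(old_hop_us, new_hop_us, updates_per_epoch, hops=1):
    """Latency saved per epoch, in milliseconds, from faster per-hop delivery."""
    saved_per_update_us = (old_hop_us - new_hop_us) * hops
    return saved_per_update_us * updates_per_epoch / US_PER_MS

one_hop = epoch_savings_ms(20, 3, 1_000)
three_hops = epoch_savings_ms(20, 3, 1_000, hops=3)
print(f"1 hop: {one_hop} ms/epoch, 3 hops: {three_hops} ms/epoch")
```

One hop gives the 17 ms quoted above; a three-hop leaf-spine-leaf path would give 51 ms per epoch under the same assumptions.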

Job Completion Time Acceleration

The ultimate metric: how much faster do AI jobs finish? Real-world deployments report:

- LLM fine-tuning: 15-30% faster on clusters with 32-128 GPUs

- Large-scale pre-training: 20-25% improvement on 256+ GPU clusters

- Inference serving: 40-60% reduction in tail latency for multi-GPU inference

These gains vary by workload characteristics; communication-heavy models (mixture-of-experts, 3D parallelism) benefit most, while compute-bound models see smaller improvements.

When You Actually Need an AI NIC

You need an AI NIC when running distributed AI training across 8+ GPUs, fine-tuning LLMs on multi-node clusters, or operating latency-sensitive inference services. Traditional NICs suffice for single-node training, small inference workloads (< 4 GPUs), or development environments where the network isn't the bottleneck.

Scale-Out Architecture Requirements

AI NICs target scale-out architectures where multiple nodes of GPU clusters work as a unified system. If your infrastructure fits these patterns, AI NICs deliver measurable benefits:

Multi-node training: 2+ servers with 8-16 GPUs each, connected via high-speed Ethernet fabric. The inter-server network becomes the bottleneck as gradient data flows between nodes.

GPU disaggregation: Architectures where GPUs reside in separate chassis from CPUs, requiring high-bandwidth, low-latency interconnects for every inference request.

Hyperscale inference: Services handling 1,000+ requests per second distributed across GPU pools, where tail latency determines user experience.

GPU Cluster Size Thresholds

Under 8 GPUs (single node): Traditional NICs or SmartNICs are sufficient. NVLink or other direct GPU interconnects handle most communication, with minimal network traffic.

8-32 GPUs (small cluster): AI NICs become beneficial if training time is critical and communication overhead exceeds 20%. Cost-benefit analysis depends on GPU utilization rates.

32-128 GPUs (medium cluster): AI NICs typically deliver 15-25% job completion improvements, justifying the investment for production workloads.

128+ GPUs (large-scale): AI NICs are essential. Without intelligent traffic management, network congestion becomes the primary bottleneck, wasting GPU compute capacity.
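The tiers above can be expressed as a simple decision helper. This is only a restatement of the thresholds in this section, not a sizing tool: `nic_recommendation` is a hypothetical function, the tier boundaries at exactly 32 and 128 GPUs are assigned to the larger tier by assumption, and the fallback for small clusters with low communication overhead is also an assumption.

```python
def nic_recommendation(gpu_count, comm_overhead):
    """Map cluster size and communication overhead (0.0-1.0) to a rough recommendation."""
    if gpu_count < 8:
        return "traditional NIC or SmartNIC"
    if gpu_count < 32:
        # Small cluster: beneficial only when communication overhead exceeds 20%.
        if comm_overhead > 0.20:
            return "AI NIC worth evaluating"
        return "traditional NIC or SmartNIC"
    if gpu_count < 128:
        return "AI NIC recommended"
    return "AI NIC essential"

for gpus, overhead in [(4, 0.05), (16, 0.25), (64, 0.15), (512, 0.30)]:
    print(f"{gpus:>4} GPUs, {overhead:.0%} comm overhead -> {nic_recommendation(gpus, overhead)}")
```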

Workload Types That Benefit Most

- LLM training with data parallelism: High allreduce traffic benefits from collective communication optimizations

- 3D parallelism (pipeline + tensor + data): Complex communication patterns need intelligent routing

- Mixture-of-experts models: Dynamic routing between expert GPUs creates unpredictable traffic that AI NICs handle well

- Real-time inference: Latency-sensitive applications where milliseconds matter

- Federated learning: Distributed training across multiple sites requires robust congestion control

Workloads that DON’T benefit:

- Single-GPU training or inference

- Compute-bound models with < 10% communication time

- Development and testing environments

- Small-scale fine-tuning on consumer GPUs

Implementation Considerations

Compatibility and Integration

PCIe requirements: AI NICs need a PCIe Gen5 x16 slot for full 400 Gbps performance. A Gen4 x16 slot tops out at roughly 250 Gbps, and older Gen3 systems bottleneck the card further. Check motherboard compatibility before purchasing; some dual-CPU servers have limited Gen5 lanes.

Network infrastructure: You need a 100-400 Gbps Ethernet fabric to leverage AI NIC capabilities. Upgrading NICs without upgrading switches wastes the investment. Leaf-spine topologies with multiple parallel paths maximize AI NIC benefits.

Software stack: AI NICs work with standard RDMA over Converged Ethernet (RoCE) stacks that PyTorch, TensorFlow, and JAX already support. However, full performance requires firmware updates and driver optimization, so expect 2-4 weeks of testing before production deployment.

GPU compatibility: AI NICs are vendor-agnostic and work with NVIDIA H100/H200, AMD MI300, and Intel Gaudi processors. The Pensando Pollara 400 specifically optimizes for NVIDIA’s NCCL and AMD’s RCCL collective communication libraries.

Configuration Best Practices

Firmware tuning: Enable path-aware congestion control and configure packet spray thresholds based on your topology. Conservative defaults sacrifice performance for compatibility, so production deployments should tune aggressively.

Queue management: Configure separate queues for AI traffic, management traffic, and background jobs. Priority queuing ensures gradient synchronization isn’t delayed by low-priority telemetry data.
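The effect of priority queuing can be modeled with a small strict-priority scheduler. This is a software illustration of the concept, not NIC configuration syntax; the traffic class names and their priority values are assumptions.

```python
import heapq
import itertools

# Lower number = served sooner. Class names and ordering are assumed for illustration.
PRIORITY = {"gradient_sync": 0, "management": 1, "telemetry": 2}

class PriorityScheduler:
    """Strict-priority queue that keeps FIFO order within each traffic class."""

    def __init__(self):
        self._heap = []
        self._arrival = itertools.count()   # tie-breaker for equal priorities

    def enqueue(self, traffic_class, packet):
        heapq.heappush(self._heap, (PRIORITY[traffic_class], next(self._arrival), packet))

    def dequeue(self):
        return heapq.heappop(self._heap)[2]

sched = PriorityScheduler()
sched.enqueue("telemetry", "stats-1")
sched.enqueue("gradient_sync", "grad-1")
sched.enqueue("management", "mgmt-1")
sched.enqueue("gradient_sync", "grad-2")

order = [sched.dequeue() for _ in range(4)]
print(order)
```

Both gradient packets leave before the management and telemetry packets even though telemetry was queued first, which is the behavior the queue-management advice aims for.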

Monitoring and telemetry: Deploy comprehensive network telemetry to track congestion events, packet loss rates, and latency distributions. AI NICs provide rich statistics that reveal optimization opportunities invisible to traditional NICs.

Driver updates: AI NIC vendors release frequent firmware updates addressing new AI frameworks and collective communication patterns. Schedule quarterly update cycles to capture performance improvements.

Common Deployment Challenges

Thermal management: 400 Gbps NICs dissipate 50-75W of heat. Ensure adequate airflow in dense server configurations; poor cooling causes thermal throttling that negates performance gains.

Cable compatibility: QSFP112 connectors require high-quality DAC (Direct Attach Copper) or AOC (Active Optical Cable). Cheap cables introduce bit errors at 400 Gbps speeds, triggering retransmissions that reduce effective throughput.

Switch compatibility: Not all 400G-capable switches handle AI traffic patterns well. Validate that your switch firmware supports deep packet buffers and adaptive routing before deployment.

Learning curve: Network teams accustomed to traditional TCP/IP troubleshooting face a learning curve with AI NIC telemetry. Budget time for training and external expertise during initial rollout.

AMD Pensando Pollara 400 AI NIC Complete Specifications

Form Factor & Interface

- Form Factor: Half-height, half-length (HHHL)

- Host Interface: PCIe Gen5.0 x16

- Alternative Form Factor: OCP 3.0 compatible

- Slot Requirement: Full-height, full-length optional bracket included

Network Connectivity

- Maximum Bandwidth: Up to 400 Gbps

- Network Interface: 1x QSFP112 (NRZ/PAM4 Serdes)

- Supported Speeds: 25/50/100/200/400 Gbps

- Port Configurations: Supports up to 4 ports (via breakout cables)

- Cable Support: QSFP112, QSFP56-DD, breakout to QSFP28

Performance Features

- Collective Communication Improvement: Up to 25% RCCL performance boost

- Latency: Sub-microsecond packet processing

- Packet Processing: Third-generation fully programmable P4 engine

- Congestion Control: Path-aware adaptive routing

- Load Balancing: Intelligent packet spray with reordering

- Retransmission: Selective packet-level recovery

- Fault Detection: Rapid hardware-based link failure detection

Management & Control

- Management Interface: MCTP over SMBus

- Firmware Updates: In-band programmable without downtime

- Telemetry: Comprehensive traffic statistics and congestion metrics

- RAS Features: Hardware reliability, availability, serviceability monitoring

Physical & Environmental

- Power Consumption: 50-75W typical (estimated based on similar 400G NICs)

- Operating Temperature: 0°C to 55°C (commercial)

- Storage Temperature: -40°C to 85°C

- Airflow: Front-to-back or back-to-front configurations

- MTBF: 2+ million hours

Software & Protocol Support

- Operating Systems: Linux kernel 4.14+, Windows Server 2019+

- AI Frameworks: Native support for PyTorch, TensorFlow, JAX

- Communication Libraries: Optimized for NCCL, RCCL

- Network Protocols: RoCEv2, TCP/IP, UDP

- RDMA Support: Full RDMA over Converged Ethernet

- Virtualization: SR-IOV for container direct access

Compatibility

- GPU Support: NVIDIA H100/H200, AMD MI300, Intel Gaudi

- Server Compatibility: Standard PCIe Gen5 x16 servers

- Switch Requirements: 100/200/400 GbE switches with deep buffers

- Topology: Optimized for leaf-spine architectures with multipathing

Part Numbers

- POLLARA-400-1Q400P: 1x 400G QSFP112 HHHL PCIe Gen5 x16

- Additional SKUs available for OCP and multi-port configurations

The Future of AI Networking

AI NICs represent the first generation of purpose-built AI networking hardware. Three trends will shape the next evolution:

800G and 1.6T speeds: As GPUs scale to exaflop-class compute, network bandwidth must match. Next-generation AI NICs will support 800 Gbps (arriving 2026) and 1.6 Tbps (2027-2028) to prevent networking from becoming the bottleneck again.

Tight GPU integration: Future AI accelerators may integrate networking silicon directly onto GPU packages, eliminating PCIe hop latency. NVIDIA’s NVLink Switch and AMD’s Infinity Fabric evolution point toward compute-network convergence.

AI-driven optimization: AI NICs will use machine learning models to predict congestion, optimize routing, and adapt to workload characteristics dynamically. Early prototypes already demonstrate 10-15% additional performance from learned routing policies.

The networking layer was long infrastructure’s invisible foundation, until AI workloads made it the bottleneck. AI NICs transform networking from a commodity into a competitive advantage for organizations serious about AI at scale.

Frequently Asked Questions (FAQs)

Can I use AI NICs with cloud-based GPU instances?

Major cloud providers (AWS, Azure, GCP) integrate AI-optimized networking into their highest-tier GPU instances (P5.48xlarge, ND H100 v5, A3 Ultra). You typically can’t bring your own AI NICs in cloud environments, but you benefit from similar technology through the provider’s infrastructure.

How does an AI NIC differ from InfiniBand?

InfiniBand offers lower latency (< 1μs) but requires proprietary switches and limits vendor choice. AI NICs provide similar optimizations over standard Ethernet, giving you broader ecosystem compatibility and lower total cost of ownership. Nearly 90% of organizations are using or planning to deploy Ethernet for their AI clusters, largely due to these factors.

Do AI NICs work with consumer GPUs like RTX 4090?

Technically yes, but it’s impractical. Consumer GPUs lack the multi-node training capabilities that justify AI NIC costs. The PCIe Gen5 requirement alone excludes most consumer motherboards. AI NICs target data center GPUs (H100, MI300) in multi-server clusters.

What’s the typical ROI timeline for AI NIC investment?

For production training clusters running 24/7, ROI typically occurs within 3-6 months. The 20-25% job completion improvement directly reduces GPU hours consumed per model. For intermittent research use, ROI extends to 12-18 months unless time-to-market is critical.

Can AI NICs improve single-GPU performance?

No. AI NICs optimize GPU-to-GPU network communication, not single-GPU compute. If your workload fits on one GPU, a traditional NIC provides equivalent performance at lower cost.

How do AI NICs handle network security?

AI NICs support hardware-accelerated encryption (AES-256, TLS) without performance penalty. RAS features detect anomalous traffic patterns that traditional NICs miss. However, comprehensive security requires defense-in-depth: AI NICs complement but don’t replace firewalls, segmentation, and access controls.

Are AI NICs compatible with Kubernetes and container orchestration?

Yes, AI NICs work transparently with containerized AI workloads. SR-IOV support allows direct NIC access from containers without virtualization overhead. Major AI platforms (Kubeflow, Ray, MLflow) recognize and utilize AI NIC capabilities automatically.

What happens if an AI NIC fails during training?

Modern AI frameworks implement checkpointing that saves model state periodically. If an AI NIC fails, the job pauses, the system fails over to backup paths or redundant NICs, and training resumes from the last checkpoint. Properly configured clusters lose minutes, not hours, from hardware failures.
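The checkpoint-and-resume behavior can be sketched as a toy training loop. Nothing here is a real framework API: `LinkFailure`, `train`, and the step counts are hypothetical, and the "failover" is modeled simply as rolling back to the last saved step.

```python
class LinkFailure(Exception):
    """Stand-in for a NIC or link fault surfacing in the training loop."""

def train(total_steps, checkpoint_every, fail_at=None):
    """Run a toy loop; on failure, roll back to the last checkpoint and continue."""
    step, checkpoint, failures = 0, 0, []
    already_failed = False
    while step < total_steps:
        try:
            if step == fail_at and not already_failed:
                already_failed = True
                raise LinkFailure(f"link down at step {step}")
            step += 1                          # one training step completes
            if step % checkpoint_every == 0:
                checkpoint = step              # persist model state
        except LinkFailure:
            failures.append((step, checkpoint))
            step = checkpoint                  # resume from last saved state
    return step, failures

final_step, failures = train(total_steps=100, checkpoint_every=10, fail_at=47)
failed_at, resumed_from = failures[0]
print(f"failed at step {failed_at}, resumed from {resumed_from}, finished at {final_step}")
```

In this run the failure at step 47 costs 7 steps of repeated work; a shorter checkpoint interval reduces that loss at the price of more frequent state saves.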

Featured Snippet Boxes

AI NIC vs SmartNIC

SmartNICs offload general networking tasks like encryption and virtualization from CPUs, while AI NICs specifically optimize GPU-to-GPU communication for AI workloads. AI NICs implement AI-specific features like collective communication acceleration, path-aware congestion control, and sub-microsecond latency optimization that SmartNICs don’t provide.

How AI NICs Reduce Latency

AI NICs reduce latency through three mechanisms: (1) path-aware congestion control that proactively avoids congested routes, (2) intelligent packet spray across multiple network paths with hardware reordering, and (3) selective retransmission that only resends dropped packets instead of entire streams.

AI NIC Performance Benefits

AI NICs deliver measurable performance improvements: up to 25% faster RCCL collective communication operations, 15-30% job completion time reduction for LLM fine-tuning on 32-128 GPU clusters, and 40-60% tail latency reduction for multi-GPU inference workloads.