Summary: MiMo-V2-Flash is Xiaomi’s open-source Mixture-of-Experts AI model with 309B total parameters and 15B active parameters, released December 16, 2025. It achieves 150 tokens per second through innovative Multi-Token Prediction and aggressive 128-token Sliding Window Attention. The model matches DeepSeek V3.2 on coding tasks (73.4% SWE-Bench) and outperforms it on math reasoning (94.1% AIME 2025) while costing just $0.10/$0.30 per million input/output tokens. Available on Hugging Face with full deployment support via SGLang, though early testing reveals inconsistencies in tool calling and instruction following.

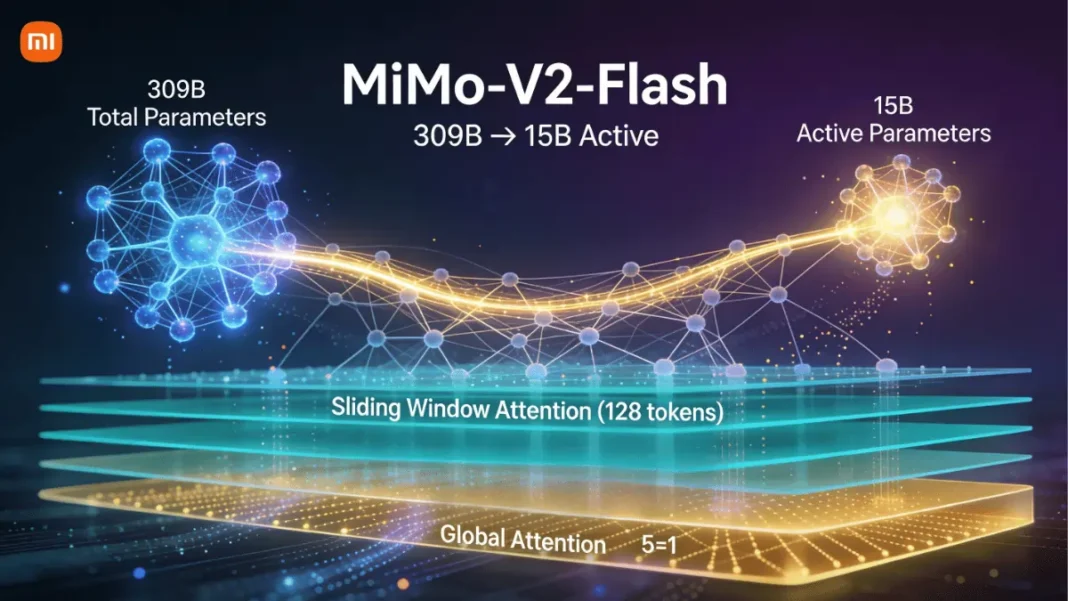

Xiaomi just dropped MiMo-V2-Flash, an open-source AI model with 309 billion parameters that generates text at 150 tokens per second, roughly triple the speed of most competitors, while using only 15 billion active parameters. Released December 16, 2025, this Mixture-of-Experts architecture claims to match DeepSeek V3.2 on coding benchmarks and beat it on mathematical reasoning, all at a fraction of the computational cost.

Quick Answer: MiMo-V2-Flash is a 309B-parameter Mixture-of-Experts AI model from Xiaomi that activates only 15B parameters per inference, achieving 150 tokens/second through Multi-Token Prediction and hybrid attention architecture while supporting 256K context windows.

What Is MiMo-V2-Flash?

MiMo-V2-Flash represents Xiaomi’s entry into the ultra-efficient large language model space, designed specifically for reasoning, coding, and agentic workflows. Unlike traditional dense models that activate all parameters, this Mixture-of-Experts design selectively engages 15 billion parameters from a 309 billion total pool, dramatically reducing per-token compute and inference latency.

Core Architecture Overview

The model implements three groundbreaking techniques working in concert:

- Hybrid Sliding Window Attention: Alternates five Sliding Window Attention layers with one Global Attention layer (5:1 ratio) using an aggressive 128-token window instead of the typical 2048-4096 tokens

- Multi-Token Prediction (MTP): A lightweight 0.33B parameter module predicts multiple tokens simultaneously, enabling parallel validation during inference

- Attention Sink Bias: Learnable bias mechanism preserves long-context understanding despite the narrow attention window

This architecture cuts KV-cache storage by nearly 6x while maintaining performance across 256K token contexts.
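
The layer pattern above can be sketched in a few lines of Python. This is a minimal illustration, not Xiaomi's implementation: the function names are hypothetical, the placement of the global layer at the end of each six-layer group is an assumption, and the attention sink bias is not modeled.

```python
def layer_schedule(num_layers, swa_per_global=5):
    """Label each layer 'swa' or 'global' using a 5:1 repeating pattern."""
    period = swa_per_global + 1
    # Assumption: the global layer closes each group of six layers.
    return ["global" if (i + 1) % period == 0 else "swa" for i in range(num_layers)]


def visible_positions(query_pos, layer_type, window=128):
    """Positions a causal query at `query_pos` may attend to in one layer."""
    if layer_type == "global":
        start = 0  # global layers see the whole prefix
    else:
        start = max(0, query_pos - window + 1)  # SWA layers see the last `window` tokens
    return range(start, query_pos + 1)


print(layer_schedule(12))
print(len(visible_positions(1000, "swa")))     # 128
print(len(visible_positions(1000, "global")))  # 1001
```

The point of the sketch is that five of every six layers only ever look at a fixed number of recent tokens, regardless of how long the context grows.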

Release Timeline and Availability

Xiaomi released MiMo-V2-Flash on December 16, 2025, with immediate open-source availability on Hugging Face. The model comes in two variants: a base model trained on 27 trillion tokens with FP8 mixed precision, and a post-trained version optimized for instruction following. SGLang provided day-zero deployment support, making the model production-ready from launch.

Pros:

- Exceptional efficiency: 15B active parameters from 309B total

- 150 tokens/second inference speed via MTP

- Strong mathematical reasoning (94.1% AIME 2025)

- Competitive coding performance (73.4% SWE-Bench)

- Ultra-low cost: $0.10/$0.30 per million tokens

- Full open source with commercial use allowed

- 256K context window for long documents

- 6x KV-cache reduction via hybrid attention

- Day-zero SGLang deployment support

Cons:

- Inconsistent tool calling reliability

- Instruction following less reliable than proprietary models

- Weaker Chinese language performance vs Kimi K2

- No llama.cpp support yet (limits CPU inference)

- Requires significant VRAM (32GB+ for consumer use)

- Very new release (December 2025) with limited production testing

Running massive 300B+ parameter models requires workstation-class hardware like the NVIDIA RTX PRO 5000.

Technical Specifications at a Glance

| Specification | Details |
|---|---|
| Total Parameters | 309B |
| Active Parameters | 15B per forward pass |
| Architecture | Mixture-of-Experts (MoE) |
| Context Window | 32K native, extended to 256K |
| Training Data | 27T tokens with FP8 mixed precision |
| Inference Speed | 150 tokens/second |
| Attention Mechanism | 5:1 Hybrid SWA/GA with 128-token window |
| MTP Module | 0.33B params/block |
| License | Open source |
| Pricing | $0.10 input / $0.30 output per 1M tokens |

The Secret Behind 150 Tokens Per Second

MiMo-V2-Flash’s speed advantage stems from three architectural innovations that work together to minimize computational overhead without sacrificing quality.

Mixture-of-Experts Efficiency

Instead of activating all 309 billion parameters for every token, the MoE architecture routes each token to only 15 billion relevant expert parameters. Think of it like a hospital where patients see specialized doctors rather than consulting every physician: you get faster service with equally expert care. Because only about 5% of the weights (15B of 309B) are used per token, per-token compute and weight reads drop by roughly 95% compared with a dense 309B model, which makes inference far cheaper, though all 309B parameters must still be held in memory.
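
To make the routing idea concrete, here is a minimal sketch of top-k expert selection for a single token. It assumes a softmax-then-top-k gate, which is one common MoE scheme; the article does not describe MiMo-V2-Flash's actual router, and the eight experts, `top_k=2`, and the `route_token` name are purely illustrative.

```python
import math


def route_token(router_logits, top_k):
    """Pick the top_k experts for one token and return {expert_index: weight}."""
    # Softmax over all experts (subtract the max for numerical stability).
    peak = max(router_logits)
    exps = [math.exp(x - peak) for x in router_logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Keep only the top_k highest-probability experts.
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    # Renormalize so the selected weights sum to 1.
    kept = sum(probs[i] for i in chosen)
    return {i: probs[i] / kept for i in chosen}


# Illustrative router scores for 8 hypothetical experts.
weights = route_token([0.1, 2.0, -1.0, 1.5, 0.3, -0.5, 0.0, 0.9], top_k=2)
print(weights)  # only experts 1 and 3 receive weight

# Share of parameters touched per token, using the article's figures.
print(f"active fraction: {15 / 309:.1%}")  # 4.9%
```

Only the selected experts run their feed-forward computation for that token; the rest are skipped, which is where the compute savings come from.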

Multi-Token Prediction Explained

Traditional language models generate one token at a time: predict, verify, move to the next. MiMo-V2-Flash’s MTP module uses three prediction heads to “draft” multiple tokens simultaneously. During inference, the model validates these draft tokens in parallel rather than sequentially. Real-world measurements show an accepted length of 2.8-3.6 tokens per prediction cycle, delivering an effective speedup of 2.0-2.6x. This doesn’t require additional memory bandwidth, and the MTP module adds only 0.33B parameters per block, making it essentially free performance.
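
The draft-then-validate step can be illustrated with the standard greedy acceptance rule used in speculative decoding. This is a sketch under that assumption; the article does not specify the exact acceptance rule MiMo-V2-Flash uses, and `accept_draft` is a hypothetical name.

```python
def accept_draft(draft_tokens, target_tokens):
    """Return the tokens emitted by one draft-and-verify step.

    draft_tokens:  tokens proposed by the lightweight draft heads.
    target_tokens: the main model's prediction at each drafted position,
                   plus one extra prediction after the last draft token,
                   so len(target_tokens) == len(draft_tokens) + 1.
    """
    accepted = []
    for drafted, verified in zip(draft_tokens, target_tokens):
        if drafted != verified:
            break  # the first mismatch ends acceptance
        accepted.append(drafted)
    # The main model's own token at the mismatch (or after the final draft)
    # is always kept, so every step emits at least one token.
    accepted.append(target_tokens[len(accepted)])
    return accepted


print(accept_draft([5, 9, 2], [5, 9, 7, 4]))  # [5, 9, 7]
print(accept_draft([5, 9, 2], [5, 9, 2, 4]))  # [5, 9, 2, 4]
print(accept_draft([1, 9, 2], [5, 9, 7, 4]))  # [5]
```

With three draft heads, this rule emits between one and four tokens per step, which is consistent with the reported accepted length of 2.8-3.6 tokens.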

Hybrid Sliding Window Attention

Standard attention mechanisms compare every token to every other token in context, so compute grows quadratically with context length and the KV cache grows with every token retained. MiMo-V2-Flash addresses this with an aggressive hybrid approach:

- Five SWA layers examine only the nearest 128 tokens (versus typical 2048+ windows)

- One Global Attention layer captures long-range dependencies across the full context

- Attention sink bias learns to preserve critical information that would otherwise be lost in the narrow window

This 5:1 ratio reduces KV-cache storage by 6x while maintaining competitive long-context performance up to 256K tokens. In testing, MiMo-V2-Flash actually outperformed Kimi K2 Thinking, a much larger model that uses full global attention, on LongBench V2 evaluations.
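
A back-of-the-envelope calculation shows where the roughly 6x figure comes from. The sketch below counts cached token positions per group of six layers; it assumes every layer stores the same amount of KV data per token and ignores any extra attention-sink entries, neither of which the article confirms.

```python
def kv_cache_ratio(context_len, window=128, swa_per_global=5):
    """Full-attention KV entries divided by hybrid KV entries, per layer group."""
    layers = swa_per_global + 1
    # All-global baseline: every layer caches the whole context.
    full = layers * context_len
    # Hybrid: one global layer caches everything, SWA layers cache one window.
    hybrid = context_len + swa_per_global * min(window, context_len)
    return full / hybrid


for n in (4_096, 32_768, 262_144):
    print(n, round(kv_cache_ratio(n), 2))
```

The ratio starts at 1 for contexts no longer than the window and approaches 6 as the context grows, since the five sliding-window layers contribute a fixed 640 cached positions while the single global layer dominates.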

How MTP Works: Multi-Token Prediction trains the model to forecast 3-4 tokens ahead during training. During inference, these predicted tokens are validated in parallel rather than sequentially, achieving 2-2.6x speedup without extra memory usage.

Benchmark Performance Analysis

Xiaomi’s benchmark claims position MiMo-V2-Flash as competitive with frontier models like DeepSeek V3.2, Claude Sonnet 4.5, and Gemini 3.0 Pro. Independent community testing reveals both strengths and caveats.

Mathematics and Reasoning (AIME 2025)

MiMo-V2-Flash achieved 94.1% on the AIME 2025 mathematical reasoning benchmark, surpassing DeepSeek V3.2’s 93.1% and nearly matching Kimi K2’s 94.5%. This represents genuine state-of-the-art performance on complex multi-step mathematical problems. The model’s thinking mode enables it to work through problems step-by-step, similar to OpenAI’s o1 approach.

Coding Benchmarks (SWE-Bench)

On SWE-Bench Verified, a test of real-world GitHub issue resolution, MiMo-V2-Flash scored 73.4%, exactly matching DeepSeek V3.2. For multilingual coding tasks, the model demonstrates particular strength, reportedly outperforming Claude Sonnet 4.5 and matching GPT-5 performance on SWE-Bench Multilingual benchmarks. The model integrates smoothly with vibe-coding tools like Cursor, Claude Code, and Cline, enabling one-click HTML generation and full-stack development workflows.

Long-Context Performance

Despite its aggressive 128-token attention window, MiMo-V2-Flash scored 60.6 on LongBench V2, outperforming Kimi K2’s 58.4. This validates the effectiveness of the hybrid attention design with attention sink bias. The 256K context window supports hundreds of rounds of agent interactions and tool calls without losing coherence.

| Benchmark | MiMo-V2-Flash | DeepSeek V3.2 | Kimi K2 | Claude Sonnet 4.5 |
|---|---|---|---|---|
| AIME 2025 | 94.1% | 93.1% | 94.5% | – |
| SWE-Bench Verified | 73.4% | 73.4% | – | – |
| LongBench V2 | 60.6 | – | 58.4 | – |
| CMMLU (Chinese) | 87.4 | – | 90.9 | – |

MiMo-V2-Flash vs Top Competitors

MiMo vs DeepSeek V3.2

Both models use MoE architectures optimized for inference speed, but MiMo-V2-Flash activates fewer parameters (15B vs DeepSeek’s estimated 37B+) while matching coding performance. MiMo edges ahead on mathematical reasoning (94.1% vs 93.1% AIME) but shows weaker Chinese language understanding (87.4 vs DeepSeek’s likely higher CMMLU). The real differentiator is cost: MiMo’s $0.10/$0.30 pricing undercuts most commercial alternatives significantly.

MiMo vs Kimi K2 Thinking

Kimi K2 activates 32B parameters compared to MiMo’s 15B, giving it advantages in general reasoning and Chinese performance (90.9 vs 87.4 CMMLU). However, MiMo’s hybrid attention architecture enables it to outperform K2 on long-context tasks despite using half the active parameters. For English-dominant workflows prioritizing speed and efficiency, MiMo offers better value; for Chinese language or maximum reasoning quality, K2 remains stronger.

MiMo vs Claude Sonnet 4.5

Xiaomi claims MiMo-V2-Flash matches or exceeds Claude Sonnet 4.5 on multilingual coding benchmarks. The open-source nature gives MiMo advantages for on-premise deployment and customization that Claude can’t match. However, Claude’s instruction following and tool calling remain more reliable in early community testing. For production systems requiring consistent API behavior, Claude still holds the edge; for cost-sensitive deployments with technical teams who can work around quirks, MiMo presents a compelling alternative.

Real-World Use Cases

Agentic AI Workflows

The 256K context window and thinking mode make MiMo-V2-Flash particularly suited for multi-step agent tasks. The model can maintain context across hundreds of tool calls and iterations, enabling complex workflows like automated customer service systems, research assistants that synthesize information from dozens of sources, or DevOps agents that troubleshoot issues across multiple services. The hybrid attention architecture ensures the model doesn’t lose track of early context even in marathon sessions.

Code Generation and Vibe Coding

MiMo-V2-Flash integrates natively with Cursor, Claude Code, and Cline for “vibe coding”: describing what you want in natural language and letting the AI generate working code. The 73.4% SWE-Bench score indicates it can resolve real GitHub issues, not just generate toy examples. One-click HTML generation demonstrates the model can produce functional frontend code from descriptions alone.

Long-Context Applications

Contract analysis, scientific paper synthesis, and large codebase understanding all benefit from the 256K context window. The aggressive 128-token attention window that makes this possible doesn’t compromise quality thanks to the attention sink bias mechanism. Organizations processing long documents can now use an open-source model instead of paying per-token for proprietary alternatives.

Best Use Cases: Deploy MiMo-V2-Flash for multi-turn agentic workflows, code generation with Cursor/Cline, long document analysis (contracts, research papers), and cost-sensitive production systems where you can tolerate occasional instruction-following inconsistencies.

How to Deploy MiMo-V2-Flash

Hardware Requirements

For FP8 inference with optimal quality, Xiaomi recommends 8x A100 or H100 GPUs. Consumer deployments can run quantized versions:

- 32GB VRAM: IQ3_XS or Q3 quantization with CPU offloading for KV cache

- 2-4 concurrent requests maximum on consumer hardware

- Enterprise: 8x A100/H100 with FP8 precision, data parallelism (dp-size 2+), and request-level prefix caching

Installation via SGLang

SGLang provides official day-zero support as the recommended deployment framework:

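```bash
# Install SGLang
pip install sglang

# Launch the server with FP8 weights and two data-parallel replicas.
# Note: SGLang's --dtype takes values such as auto, half, or bfloat16;
# FP8 is selected through --quantization instead.
python -m sglang.launch_server \
  --model-path XiaomiMiMo/MiMo-V2-Flash \
  --quantization fp8 \
  --dp-size 2
```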

The model is available on Hugging Face at XiaomiMiMo/MiMo-V2-Flash.
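
Once the server is running, it can be queried through SGLang's OpenAI-compatible HTTP endpoint. The sketch below uses only the Python standard library and assumes the server listens on SGLang's default port 30000 on the local machine; adjust `BASE_URL` if you launched it with a different host or port. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: server runs locally on SGLang's default port.
BASE_URL = "http://localhost:30000"


def build_chat_request(prompt, max_tokens=256):
    """Build an OpenAI-style chat completion request for the local server."""
    payload = {
        "model": "XiaomiMiMo/MiMo-V2-Flash",
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the prompt and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


# Usage (requires a running server):
# print(ask("Write a Python function that reverses a string."))
```

Because the endpoint follows the OpenAI chat format, existing OpenAI-compatible client libraries should also work when pointed at the same base URL.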

Quantization Options for Consumer GPUs

For teams without enterprise GPU access, quantization enables local deployment:

- IQ3_XS: ~32GB VRAM, 3-bit quantization with minimal quality loss

- Q3_K_M: Balanced quality/size for 24-32GB VRAM

- Enable MTP: Activate Multi-Token Prediction in inference config for 2-2.6x speedup

Note: llama.cpp support is not yet available, limiting CPU-only inference options.
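
As a rough sanity check on the memory figures in this section, the weight footprint can be estimated from the parameter count and the bits stored per weight. This is plain arithmetic on the article's 309B figure; it ignores KV cache, activations, and quantization metadata, and the 3.0 bits-per-weight value is an approximation for 3-bit formats.

```python
def weight_gigabytes(total_params, bits_per_weight):
    """Approximate weight storage in GB (10^9 bytes), ignoring overhead."""
    return total_params * bits_per_weight / 8 / 1e9


TOTAL_PARAMS = 309e9  # total parameter count quoted in the article

for label, bits in (("FP8", 8.0), ("~3-bit", 3.0)):
    print(f"{label}: {weight_gigabytes(TOTAL_PARAMS, bits):.0f} GB")
```

Even at about 3 bits per weight, the estimate comes out well above 32GB, which suggests the 32GB VRAM figure relies on keeping a large share of the weights in system RAM rather than on the GPU. Treat that reading as an assumption to verify against your own setup.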

Pricing and Cost Efficiency

At $0.10 per million input tokens and $0.30 per million output tokens, MiMo-V2-Flash undercuts most commercial models significantly. A typical 10,000-token analysis costs $0.001 for input plus $0.0003 for a 1,000-token response, roughly $0.0013 (about a tenth of a cent) in total. For organizations processing millions of tokens daily, this pricing enables use cases that would be cost-prohibitive with Claude or GPT-4.
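
The per-request arithmetic can be reproduced with a few lines of Python, using the list prices quoted above. The daily volumes in the second example are hypothetical and only illustrate how the cost scales.

```python
INPUT_PRICE_PER_M = 0.10   # USD per 1M input tokens
OUTPUT_PRICE_PER_M = 0.30  # USD per 1M output tokens


def request_cost(input_tokens, output_tokens):
    """Return the API cost in USD for the given token counts."""
    total = input_tokens * INPUT_PRICE_PER_M + output_tokens * OUTPUT_PRICE_PER_M
    return total / 1_000_000


# The 10,000-token analysis with a 1,000-token response.
print(f"${request_cost(10_000, 1_000):.4f}")  # $0.0013

# Hypothetical daily workload: 50M input tokens and 5M output tokens.
print(f"${request_cost(50_000_000, 5_000_000):.2f} per day")  # $6.50 per day
```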

The open-source license adds another dimension: self-hosting eliminates per-token costs entirely after hardware investment. Teams with existing GPU infrastructure can deploy MiMo-V2-Flash for essentially zero marginal cost per inference.

Limitations and Considerations

Tool Calling Reliability

Early community testing reveals inconsistent tool calling behavior. The model sometimes hallucinates tool parameters or fails to follow function calling schemas precisely. For production agentic systems relying on structured API calls, this represents a significant limitation compared to Claude or GPT-4. Developers should implement robust validation layers when using MiMo-V2-Flash for tool-heavy workflows.
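
One way to build such a validation layer is to check every model-emitted tool call against a known schema before executing it. The sketch below is a minimal example with a hypothetical `get_weather` tool and a simplified name-to-type schema; real deployments would more likely use full JSON Schema validation.

```python
import json

# Hypothetical tool schema for illustration: argument names and expected types.
TOOL_SCHEMAS = {
    "get_weather": {"city": str, "days": int},
}


def validate_tool_call(raw_call):
    """Parse a model-emitted tool call and return (ok, parsed_call_or_error)."""
    try:
        call = json.loads(raw_call)
    except json.JSONDecodeError as exc:
        return False, f"invalid JSON: {exc.msg}"
    if not isinstance(call, dict):
        return False, "tool call must be a JSON object"
    name = call.get("name")
    if not isinstance(name, str) or name not in TOOL_SCHEMAS:
        return False, f"unknown tool: {name!r}"
    schema = TOOL_SCHEMAS[name]
    args = call.get("arguments")
    if not isinstance(args, dict):
        return False, "arguments must be an object"
    # Reject hallucinated parameters that the schema does not define.
    unexpected = set(args) - set(schema)
    if unexpected:
        return False, f"unexpected arguments: {sorted(unexpected)}"
    for key, expected_type in schema.items():
        if key not in args:
            return False, f"missing argument: {key}"
        value = args[key]
        # bool is a subclass of int in Python, so reject it for non-bool fields.
        wrong_bool = isinstance(value, bool) and expected_type is not bool
        if wrong_bool or not isinstance(value, expected_type):
            return False, f"argument {key!r} should be {expected_type.__name__}"
    return True, call


print(validate_tool_call('{"name": "get_weather", "arguments": {"city": "Paris", "days": 3}}'))
print(validate_tool_call('{"name": "get_weather", "arguments": {"city": "Paris", "unit": "C"}}'))
```

Rejected calls can be fed back to the model as an error message so it can retry, rather than being passed on to the underlying API.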

Instruction Following Consistency

While benchmarks show strong performance, real-world testing exposes occasional instruction-following failures. The model may drift from specified output formats or ignore constraints in complex prompts. This appears less reliable than frontier proprietary models, though still competitive with other open-source alternatives. Teams should plan for additional prompt engineering and validation when deploying to production.
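
A simple guard against format drift is to check each response and retry when it does not match the expected structure. The sketch below assumes the prompt asks for a JSON object; `generate` is a placeholder for whatever function calls the model, and the retry limit of three is arbitrary.

```python
import json


def generate_with_retries(generate, prompt, required_keys, max_attempts=3):
    """Call generate(prompt) until it returns a JSON object with required_keys."""
    last_error = "no attempts made"
    for _ in range(max_attempts):
        text = generate(prompt)
        try:
            data = json.loads(text)
        except json.JSONDecodeError:
            last_error = "output was not valid JSON"
            continue
        if isinstance(data, dict) and set(required_keys) <= set(data):
            return data
        last_error = "output was missing required keys"
    raise ValueError(f"gave up after {max_attempts} attempts: {last_error}")


# Stand-in for a model call: fails once, then returns well-formed output.
replies = iter(["Sure! Here is the summary...", '{"summary": "ok", "score": 4}'])
result = generate_with_retries(lambda p: next(replies), "Summarize as JSON.", ["summary", "score"])
print(result)  # {'summary': 'ok', 'score': 4}
```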

Testing Methodology Note: Performance assessments are based on community testing conducted December 16-17, 2025, using the Hugging Face release with SGLang deployment. Results may improve with optimized inference configurations and prompt engineering.

Frequently Asked Questions (FAQs)

What makes MiMo-V2-Flash faster than other 300B+ parameter models?

MiMo-V2-Flash activates only 15B of its 309B parameters per inference through MoE routing, uses Multi-Token Prediction to generate 2-3 tokens simultaneously, and implements aggressive 128-token Sliding Window Attention that reduces KV-cache by 6x.

Can I run MiMo-V2-Flash on consumer hardware?

Yes, with quantization. IQ3_XS or Q3 quantization enables deployment on GPUs with 32GB of VRAM or more, such as the RTX 5090 (32GB) or RTX 6000 Ada (48GB), though you’ll be limited to 2-4 concurrent requests. The RTX 4090, at 24GB, falls below that threshold.

How does MiMo-V2-Flash compare to DeepSeek V3.2 for coding?

MiMo-V2-Flash matches DeepSeek V3.2 at 73.4% on SWE-Bench Verified and exceeds it on mathematical reasoning (94.1% vs 93.1% AIME), while using fewer active parameters (15B vs 37B+).

What is Multi-Token Prediction and why does it matter?

MTP trains the model to predict multiple future tokens during training. During inference, these predictions are validated in parallel instead of sequentially, achieving 2-2.6x speedup without additional memory bandwidth requirements.

Is MiMo-V2-Flash suitable for production deployments?

For cost-sensitive applications with technical teams, yes, especially for coding, math reasoning, and long-context tasks. However, inconsistent tool calling and instruction following mean you’ll need robust validation layers.

What license does MiMo-V2-Flash use?

The model is released as open source on Hugging Face, allowing commercial use and self-hosting.

How much does it cost to run MiMo-V2-Flash via API?

$0.10 per million input tokens and $0.30 per million output tokens, significantly cheaper than Claude or GPT-4.

What is hybrid attention and how does it enable 256K contexts?

Hybrid attention alternates five Sliding Window Attention layers (128-token window) with one Global Attention layer in a 5:1 ratio. Attention sink bias preserves long-range dependencies despite the narrow window, reducing KV-cache 6x while supporting 256K contexts.