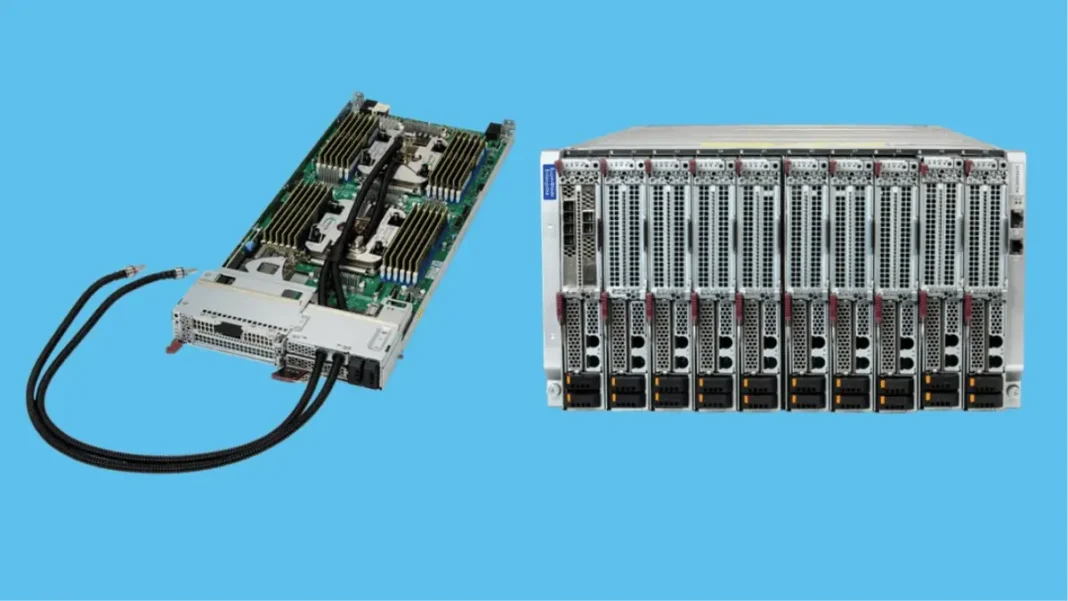

Supermicro has unveiled its densest blade server yet: the SBI-622BA-1NE12-LCC 6U SuperBlade, with each blade powered by dual Intel Xeon 6900-series processors for up to 256 P-cores. The system supports both air cooling (5 blades per 6U enclosure) and direct liquid cooling (10 blades per 6U enclosure), delivering up to 100 servers and 25,600 cores in a single rack. Announced December 31, 2025, the SuperBlade targets HPC and AI workloads in manufacturing, finance, scientific research, and climate modeling.

What’s New

The 6U SuperBlade is Supermicro’s “most core-dense” blade platform to date, according to CEO Charles Liang. Each blade supports dual Intel Xeon 6900-series processors (up to 128 P-cores per CPU at 500W TDP), plus up to 3TB of DDR5-6400 RDIMM or 1.5TB of DDR5-8800 MRDIMM. The 32-inch-deep enclosure fits standard 19-inch racks, so no deep-rack infrastructure is needed.
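As a rough sanity check on the memory ceilings quoted above, the sketch below works out the per-DIMM capacity they imply. It assumes, as an illustration only, that each blade populates all 12 channels per socket at one DIMM per channel (24 slots per dual-socket blade) and treats 1TB as 1024GB; the constant names are hypothetical.

```python
# Sketch: per-DIMM capacity implied by the quoted per-blade memory totals.
# ASSUMPTION: 12 channels per socket, one DIMM per channel (1DPC),
# two sockets per blade -> 24 DIMM slots. 1 TB is treated as 1024 GB.
SOCKETS_PER_BLADE = 2
CHANNELS_PER_SOCKET = 12
DIMMS_PER_CHANNEL = 1  # assumed 1DPC population


def dimm_size_gb(total_tb: float) -> float:
    """Return the per-DIMM capacity (GB) needed to reach total_tb per blade."""
    slots = SOCKETS_PER_BLADE * CHANNELS_PER_SOCKET * DIMMS_PER_CHANNEL
    return total_tb * 1024 / slots


rdimm = dimm_size_gb(3.0)   # DDR5-6400 RDIMM ceiling per blade
mrdimm = dimm_size_gb(1.5)  # DDR5-8800 MRDIMM ceiling per blade
print(f"RDIMM: {rdimm:.0f} GB per DIMM, MRDIMM: {mrdimm:.0f} GB per DIMM")
```

Under these assumptions, reaching the maximums would take 128GB RDIMMs or 64GB MRDIMMs in every slot.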

Key hardware specs include four PCIe 5.0 NVMe drives, two hot-swap E1.S SSDs, two M.2 drives, and three PCIe 5.0 x16 slots for 400G InfiniBand or GPU acceleration. Integrated 25G Ethernet switches with 100G uplinks reduce external cabling by up to 93% compared with traditional 1U servers.

Why It Matters

Data centers running memory-intensive AI inference or HPC simulations can now fit up to 10 dual-socket servers into 6U of rack space. According to Supermicro, shared power supplies, fans, and chassis management lower operational costs and power consumption by up to 50% versus discrete servers. Direct liquid cooling (CPU-only or CPU/DIMM/VRM cold plates) enables sustained 500W-per-socket operation without thermal throttling.

The hot-swappable blade design and remote chassis management module (CMM) let admins control power capping, BIOS configs, and KVM access per blade even when CPUs are offline.

Intel Xeon 6900: P-Core Performance

Intel’s Xeon 6900 “Granite Rapids” processors use a chiplet design with up to three compute tiles on the Intel 3 process node. Each processor supports 12 memory channels, 96 PCIe 5.0/CXL 2.0 lanes, and up to 504MB L3 cache. Key advantages for the SuperBlade include:

- 8800 MT/s MRDIMM support for bandwidth-hungry AI training

- Six UPI 2.0 links at 24 GT/s for dual-socket coherency

- Intel AMX with FP16 for accelerated matrix operations
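To put the MRDIMM advantage in numbers, the sketch below computes theoretical peak DRAM bandwidth from the 12-channel figure. It assumes 8 bytes (64 data bits) per transfer per channel and ignores refresh, ECC overhead, and real-world efficiency, so these are upper bounds rather than measured results; the helper name `peak_gbps` is hypothetical.

```python
# Sketch: theoretical peak DRAM bandwidth per socket and per blade.
# ASSUMPTIONS: 12 channels per socket, 8 bytes (64 data bits, ECC excluded)
# per transfer per channel; no refresh or efficiency losses modeled.
CHANNELS_PER_SOCKET = 12
BYTES_PER_TRANSFER = 8


def peak_gbps(mt_per_s: int, channels: int = CHANNELS_PER_SOCKET) -> float:
    """Peak bandwidth in decimal GB/s for a transfer rate given in MT/s."""
    # MT/s * bytes per transfer = MB/s; divide by 1000 for GB/s.
    return channels * mt_per_s * BYTES_PER_TRANSFER / 1000


for name, rate in [("DDR5-6400 RDIMM", 6400), ("DDR5-8800 MRDIMM", 8800)]:
    per_socket = peak_gbps(rate)
    print(f"{name}: {per_socket:.1f} GB/s per socket, "
          f"{2 * per_socket:.1f} GB/s per blade")
```

This works out to 614.4 GB/s per socket with DDR5-6400 and 844.8 GB/s with DDR5-8800 MRDIMMs; sustained benchmark figures will be lower.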

Air vs. Liquid Cooling

| Config | Nodes per 6U | Cores per Rack | Use Case |
|---|---|---|---|
| Air-cooled | 5 blades | 12,800 cores | Standard HPC, moderate TDP |
| Liquid-cooled | 10 blades | 25,600 cores | AI training, max TDP, dense racks |

Liquid cooling delivers 2× the node density and supports full 500W CPU operation, while air cooling suits lower-power or budget-conscious deployments.
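The per-rack core counts in the table follow from simple multiplication. The sketch below reproduces them, assuming 10 enclosures per rack (as the article's 100-blade figure implies) and top-bin parts with 128 P-cores per socket; the function name is hypothetical.

```python
# Sketch: reproduce the per-rack blade and core counts for each cooling option.
# ASSUMPTIONS: 10 enclosures per rack (implied by the 100-blade figure),
# 2 sockets per blade, 128 P-cores per socket (top-bin Xeon 6900 part).
ENCLOSURES_PER_RACK = 10
SOCKETS_PER_BLADE = 2
CORES_PER_SOCKET = 128


def cores_per_rack(blades_per_enclosure: int) -> tuple[int, int]:
    """Return (blades, cores) per rack for a given blades-per-6U figure."""
    blades = ENCLOSURES_PER_RACK * blades_per_enclosure
    return blades, blades * SOCKETS_PER_BLADE * CORES_PER_SOCKET


for config, per_6u in [("Air-cooled", 5), ("Liquid-cooled", 10)]:
    blades, cores = cores_per_rack(per_6u)
    print(f"{config}: {blades} blades, {cores:,} cores per rack")
```

Ten 6U enclosures occupy 60U, so the achievable blade count depends on rack height; a 42U rack, for example, holds seven enclosures.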

What’s Next

The SBI-622BA-1NE12-LCC is now listed on Supermicro’s product page with detailed specifications. Pricing and volume availability have not been disclosed. Supermicro previously showcased liquid-cooled roadmaps at SC25 in November 2025, signaling broader adoption of direct-to-chip cooling across its portfolio.

Organizations planning 2026 data center upgrades should evaluate rack power and cooling infrastructure first: liquid-cooled configurations require a coolant distribution unit (CDU) or facility water loop, while air-cooled models work with existing CRAC systems.
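For a first-pass power estimate, the sketch below computes the CPU-only thermal load of a full rack from the article's 500W TDP and blade counts. It deliberately excludes memory, drives, NICs, switches, fans, and PSU losses, so real facility budgets will be higher; the 350W air-cooled TDP in the usage line is a hypothetical value, not a published spec.

```python
# Sketch: CPU-only thermal design power per rack, in kilowatts.
# Excludes DIMMs, drives, NICs, switches, fans and PSU losses, so actual
# rack power and cooling requirements will be higher than these figures.
TDP_W = 500           # per-socket TDP quoted for the Xeon 6900 parts
SOCKETS_PER_BLADE = 2


def cpu_load_kw(blades_per_rack: int, tdp_w: int = TDP_W) -> float:
    """CPU TDP summed across all blades in a rack, in kW."""
    return blades_per_rack * SOCKETS_PER_BLADE * tdp_w / 1000


# Liquid-cooled rack: 100 blades at the full 500W per socket.
print(f"Liquid-cooled, 100 blades @ 500W: {cpu_load_kw(100):.0f} kW")
# Air-cooled rack: 50 blades; 350W per socket is a HYPOTHETICAL lower-TDP SKU.
print(f"Air-cooled, 50 blades @ 350W: {cpu_load_kw(50, 350):.0f} kW")
```

Even the CPU-only figure for a fully populated liquid-cooled rack reaches 100 kW, which is why facility water or CDU capacity belongs early in the planning process.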

Frequently Asked Questions

What is Supermicro’s 6U SuperBlade?

It’s a high-density blade server enclosure holding up to 10 server blades, each powered by dual Intel Xeon 6900-series processors (up to 256 P-cores per blade). A single 6U chassis replaces up to 10 traditional rack servers.

How many cores fit in one rack?

Up to 25,600 P-cores across 100 server blades (10 enclosures × 10 blades) when using liquid cooling. Air-cooled configurations (5 blades per enclosure) support up to 12,800 cores.

Which workloads benefit most?

HPC simulations, AI training and inference, financial modeling, climate/weather forecasting, and scientific research requiring high memory bandwidth and core count.

Does it require special rack infrastructure?

Not for the rack itself: the 32-inch-deep enclosure fits standard 19-inch racks. Liquid-cooled models do need a CDU or facility water loop, while air-cooled versions work with existing CRAC cooling.