Summary: OpenAI launched FrontierScience on December 16, 2025: a benchmark that evaluates AI’s expert-level scientific reasoning across physics, chemistry, and biology. GPT-5.2 scored 77% on Olympiad-style problems but only 25% on open-ended research tasks, revealing both progress and limitations in AI’s scientific capabilities. The benchmark consists of 700+ expert-crafted questions designed to test real scientific reasoning, not just factual recall.

Can artificial intelligence truly reason like a scientist? OpenAI’s new FrontierScience benchmark tackles this question by measuring AI’s ability to perform expert-level scientific tasks across physics, chemistry, and biology, and the results reveal both remarkable progress and significant limitations.

What Is OpenAI FrontierScience?

FrontierScience is a benchmark launched by OpenAI on December 16, 2025, to evaluate artificial intelligence systems on expert-level scientific reasoning tasks. Unlike traditional AI benchmarks that rely on multiple-choice questions or saturated datasets, FrontierScience tests whether AI models can work through the kinds of problems that arise in real research, using multi-step mathematical reasoning, deep domain knowledge, and interdisciplinary synthesis.

The benchmark addresses a critical gap in AI evaluation. When the GPQA (Graduate-Level Google-Proof Q&A) benchmark was introduced in November 2023, GPT-4 scored just 39%, far below the 70% expert baseline. Two years later, GPT-5.2 scored 92% on the same test, showing that existing benchmarks no longer offer meaningful difficulty for frontier models. FrontierScience raises the bar with original, expert-crafted problems that reflect real scientific challenges.

Two Core Evaluation Tracks

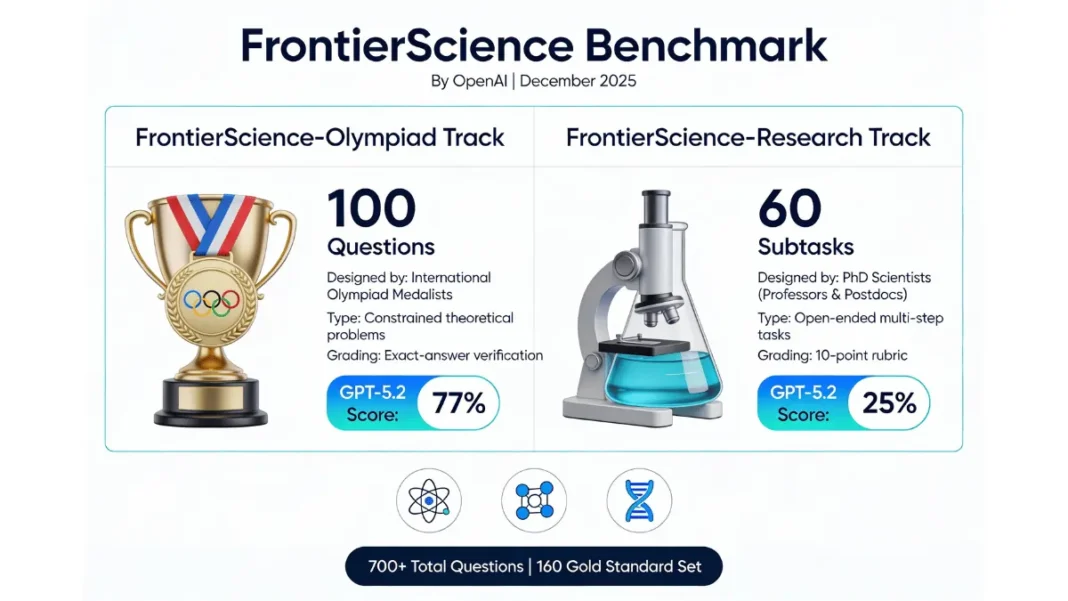

FrontierScience splits evaluation into two distinct tracks, each targeting different aspects of scientific reasoning:

- FrontierScience-Olympiad: Tests constrained, theoretical scientific reasoning through 100 short-answer questions designed by international science Olympiad medalists

- FrontierScience-Research: Evaluates real-world research abilities using 60 open-ended, multi-step tasks authored by PhD-level scientists, including professors and postdoctoral researchers

The Olympiad track mirrors the difficulty of international science competitions, while the Research track reflects the type of multi-step challenges scientists encounter in actual laboratory and theoretical work.

Why Traditional Benchmarks Fall Short

OpenAI emphasizes that scientific reasoning extends far beyond factual recall. Real scientific inquiry requires hypothesis generation, experimental design, cross-disciplinary synthesis, and iterative refinement, capabilities that multiple-choice tests cannot adequately measure. FrontierScience addresses part of this gap by incorporating open-ended problems that demand extended reasoning chains and domain expertise.

Strengths of FrontierScience:

- Original, unsaturated questions reduce the risk of memorization and keep the focus on genuine reasoning

- Expert validation by Olympiad medalists and PhD scientists supports scientific accuracy

- Dual-track design assesses both constrained and open-ended reasoning capabilities

- Rubric-based grading evaluates reasoning processes, not just final answers

- Real-world relevance reflects actual scientific workflow challenges

- Cross-disciplinary coverage spans physics, chemistry, and biology

Limitations of FrontierScience:

- Limited domain coverage excludes mathematics, computer science, engineering, and interdisciplinary fields

- Constrained problem focus doesn’t capture hypothesis generation or experimental design

- Text-only format excludes multimodal data (images, videos, sensor outputs) common in research

- Low Research track scores reveal current AI limitations in open-ended scientific reasoning

- No wet-lab component, so practical experimental execution isn’t assessed

How FrontierScience Works

The complete FrontierScience evaluation comprises more than 700 textual questions, with a gold-standard set of 160 questions forming the core assessment. Each question has been meticulously crafted and validated by subject-matter experts across physics, chemistry, and biology.

FrontierScience-Olympiad Track

The Olympiad component consists of 100 theoretical questions designed to match or exceed the difficulty of problems encountered in global science competitions like the International Chemistry Olympiad or International Physics Olympiad. These questions are graded based on concise answers, including numerical values and mathematical expressions, allowing for clear verification.

Think of it as the scientific equivalent of chess puzzles composed for grandmasters: constrained scenarios with definitive solutions that require deep domain knowledge and sophisticated reasoning.
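The article doesn’t describe how Olympiad answers are actually checked against the reference. As a rough illustration of what short-answer verification involves, the sketch below compares numeric answers within a relative tolerance and falls back to a case- and whitespace-insensitive string match for everything else. The function names and the 1% tolerance are hypothetical choices for this example, not OpenAI’s grader; a real system would also need symbolic equivalence checking (for example, via a computer algebra system) to accept expressions written in different but equivalent forms.

```python
import math


def normalize(answer: str) -> str:
    """Lowercase and drop all whitespace so trivially different spellings compare equal."""
    return "".join(answer.lower().split())


def answers_match(predicted: str, reference: str, rel_tol: float = 0.01) -> bool:
    """Hypothetical short-answer check (not OpenAI's actual grader).

    Numeric answers match if they agree within an assumed 1% relative tolerance;
    anything that does not parse as a float falls back to normalized string equality.
    """
    try:
        return math.isclose(float(predicted), float(reference), rel_tol=rel_tol)
    except ValueError:
        return normalize(predicted) == normalize(reference)


def track_accuracy(pairs: list[tuple[str, str]]) -> float:
    """Fraction of (predicted, reference) pairs judged correct."""
    if not pairs:
        raise ValueError("need at least one graded answer")
    return sum(answers_match(p, r) for p, r in pairs) / len(pairs)


if __name__ == "__main__":
    graded = [
        ("9.81", "9.8"),         # within 1%: counted correct
        ("12", "9.8"),           # numerically wrong
        ("E = mc^2", "e=mc^2"),  # identical after normalization
        ("2*x", "x*2"),          # equivalent, but string matching misses it
    ]
    print(track_accuracy(graded))  # 0.5
```

The last example shows the limit of this sketch: equivalent symbolic answers are marked wrong unless the grader can test mathematical equivalence rather than string equality.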

FrontierScience-Research Track

The Research component presents 60 original research subtasks that reflect real-world scientific challenges. These tasks are graded using a detailed 10-point rubric that evaluates both final answers and intermediate reasoning processes. This approach mirrors how scientific peer review assesses research quality, considering methodology and logical progression alongside conclusions.
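The article says Research tasks are scored on a 10-point rubric covering both final answers and intermediate reasoning, but it doesn’t publish the rubrics or explain how rubric points become the reported percentage. The sketch below assumes that a rubric is a list of weighted criteria totaling 10 points, that a grader marks each criterion as met or not, and that a task counts as solved at or above a pass threshold of 7 points. The class and function names, the example criteria, and the threshold are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class RubricItem:
    """One gradable criterion, such as a correct intermediate result."""
    description: str
    points: float


def rubric_score(rubric: list[RubricItem], satisfied: set[str]) -> float:
    """Sum the points of every criterion the grader marked as satisfied."""
    total = sum(item.points for item in rubric)
    if not math.isclose(total, 10.0):
        raise ValueError(f"rubric should total 10 points, got {total}")
    return sum(item.points for item in rubric if item.description in satisfied)


def is_solved(score: float, threshold: float = 7.0) -> bool:
    """Assumed pass rule: a task counts as solved at or above the threshold."""
    return score >= threshold


if __name__ == "__main__":
    # Hypothetical rubric for an illustrative kinetics subtask.
    rubric = [
        RubricItem("states the modeling assumptions", 2),
        RubricItem("derives the intermediate rate expression", 3),
        RubricItem("checks limiting cases", 2),
        RubricItem("reports the correct final value", 3),
    ]
    met = {
        "states the modeling assumptions",
        "derives the intermediate rate expression",
        "reports the correct final value",
    }
    score = rubric_score(rubric, met)
    print(score, is_solved(score))  # 8 True
```

Because credit is attached to individual criteria, a response with sound intermediate reasoning can still earn most of the points even if one step, such as the limiting-case check, is missing.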

Expert-Designed Questions Across Three Disciplines

FrontierScience covers multiple subfields within its three core disciplines:

- Physics: Quantum mechanics, thermodynamics, electromagnetism, particle physics

- Chemistry: Organic synthesis, reaction mechanisms, computational chemistry, spectroscopy

- Biology: Molecular biology, biochemistry, genetics, cellular processes

Each question undergoes rigorous validation by domain experts to ensure scientific accuracy and appropriate difficulty calibration.

GPT-5.2 Performance Results

Initial evaluations tested several advanced AI models on FrontierScience, including GPT-5.2, Gemini 3 Pro, and other leading systems. The results reveal a stark performance gap between constrained and open-ended scientific reasoning.

Olympiad Track Scores

GPT-5.2 was OpenAI’s top-performing model on FrontierScience, reaching 77% accuracy on the Olympiad track. This score suggests that AI systems have reached expert-level competence on constrained scientific problems with well-defined solution paths: the model solved problems comparable to international science competition questions that challenge top high school and undergraduate students worldwide.

Research Track Limitations

The Research track tells a different story. GPT-5.2 scored just 25% on open-ended research tasks, revealing substantial room for improvement in real-world scientific scenarios. This performance gap highlights a fundamental challenge: AI excels at structured problems with clear boundaries but struggles with the ambiguous, multi-step reasoning required in actual research settings.

These results align with how scientists use AI today. Researchers report using it to accelerate structured parts of their work, such as literature searches, mathematical proofs, and data analysis, while retaining human judgment for problem framing, validation, and interpretation.
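To see why the two tracks are reported separately, here is some simple arithmetic on the published scores. It treats each percentage as a single-attempt pass rate over the gold-standard set, so 77% of 100 Olympiad questions corresponds to 77 solved and 25% of 60 Research tasks to 15 solved. That is an assumption, since the article doesn’t say whether scores were averaged over multiple attempts, and the helper names are hypothetical.

```python
def pass_rate(solved: int, total: int) -> float:
    """Fraction of items solved within one track."""
    return solved / total


def pooled_rate(tracks: dict[str, tuple[int, int]]) -> float:
    """Single accuracy over all items, ignoring which track each came from."""
    solved = sum(s for s, _ in tracks.values())
    total = sum(n for _, n in tracks.values())
    return solved / total


# Assumed counts derived from the reported 77% and 25% scores.
tracks = {"Olympiad": (77, 100), "Research": (15, 60)}

for name, (solved, total) in tracks.items():
    print(f"{name}: {pass_rate(solved, total):.1%}")
print(f"Pooled: {pooled_rate(tracks):.1%}")
```

This prints 77.0% for Olympiad, 25.0% for Research, and 57.5% pooled. A single pooled figure would blur exactly the gap the dual-track design is meant to expose: strong results on constrained problems would mask weak results on open-ended ones.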

How It Compares to Other AI Models

While specific comparison data for competing models wasn’t fully disclosed, OpenAI’s evaluation included Gemini 3 Pro and other advanced systems. The FrontierScience benchmark provides a standardized framework for comparing AI scientific reasoning capabilities across different model architectures and training approaches.

Real-World Applications

FrontierScience isn’t just a theoretical exercise; it reflects capabilities that researchers are already leveraging in practice.

Accelerating Scientific Workflows

OpenAI’s November 2025 paper, “Early science acceleration experiments with GPT-5,” documented measurable speed improvements in scientific workflows. Researchers reported that tasks which previously took days or weeks took only hours with advanced AI models. These productivity gains came from AI assistance with literature synthesis, mathematical proof verification, and identifying connections across disciplines.

Cross-Disciplinary Literature Searches

One of GPT-5’s most practical applications involves synthesizing information across scientific domains. Scientists use the model to identify connections between disparate research areas, surface relevant papers outside their immediate specialty, and generate comprehensive literature reviews faster than traditional methods allow.

Hypothesis Generation and Testing

In some cases, AI models have helped surface new ideas and connections that experts subsequently evaluate and test. This collaborative approach treats AI as a scientific reasoning assistant rather than a replacement for human expertise: it augments researchers’ capabilities without eliminating the need for expert validation.

Technical Specifications

Understanding FrontierScience’s technical design reveals how it advances AI evaluation methodology.

Benchmark Structure and Grading System

The 160-question gold-standard set includes:

- 100 Olympiad questions with exact-answer grading

- 60 Research tasks with 10-point rubric evaluation

- Coverage across physics, chemistry, and biology subfields

- Expert validation by international Olympiad medalists and PhD-level scientists

The rubric-based grading system for Research tasks assesses intermediate reasoning steps, not just final answers. This approach captures the quality of scientific thinking, including methodology selection, assumption identification, and error analysis.

Question Types and Difficulty Levels

FrontierScience questions are designed to be original and unsaturated, meaning they were written specifically for the benchmark and so should not appear in AI training data or be solvable through memorization. Olympiad questions require concise numerical or symbolic answers, while Research tasks demand extended written responses with detailed reasoning chains.

Difficulty calibration ensures that questions challenge expert-level reasoning. Olympiad problems match international competition standards, while Research tasks reflect the complexity of PhD-level scientific work.

Limitations and Future Development

OpenAI acknowledges that FrontierScience represents one step in a longer journey toward AI-assisted scientific discovery.

The benchmark primarily focuses on constrained, expert-written problems and doesn’t fully capture all aspects of scientific work. Real scientific research involves generating novel hypotheses, interacting with multimodal data (including images, videos, and sensor outputs), conducting physical experiments, and navigating the social dimensions of peer review and collaboration.

FrontierScience also concentrates on three disciplines (physics, chemistry, and biology), while modern science increasingly involves computer science, mathematics, engineering, materials science, and interdisciplinary fields. OpenAI plans to expand the benchmark into new domains and integrate it with real-world evaluations.

The 25% Research track score indicates that current AI systems still require significant advancement before they can independently conduct open-ended scientific inquiry. Future progress will likely stem from both enhanced general-purpose reasoning systems and targeted improvements in scientific methodologies.

What This Means for AI and Science

FrontierScience provides an early indicator of AI’s potential to accelerate scientific discovery. The strong Olympiad performance suggests that AI has reached expert-level competence on constrained scientific problems, pointing to practical applications in education, problem-solving assistance, and structured research tasks.

The Research track gap reveals where human expertise remains essential. Scientists must continue to frame research questions, design experiments, validate results, and provide the creative insights that drive breakthrough discoveries.

OpenAI emphasizes that the most important measure of AI’s contribution to science will be the new discoveries it helps produce. FrontierScience benchmarks capabilities, but real-world scientific impact (measured in published papers, validated theories, and practical applications) will determine whether AI fulfills its promise as a research accelerator.

For researchers, developers, and institutions, FrontierScience offers a framework for evaluating AI tools and understanding their appropriate role in scientific workflows. As models improve, the benchmark will help track progress toward AI systems that genuinely augment human scientific reasoning.

Comparison Table

| Feature | FrontierScience-Olympiad | FrontierScience-Research |
|---|---|---|
| Question Count | 100 theoretical problems | 60 research subtasks |
| Designer Credentials | International Olympiad medalists | PhD-level scientists (professors, postdocs) |
| Answer Format | Short-answer (numerical/symbolic) | Extended written responses |
| Grading Method | Exact-answer verification | 10-point rubric |
| Difficulty Level | International competition-level | Real-world research complexity |
| GPT-5.2 Score | 77% | 25% |
| Focus | Constrained theoretical reasoning | Open-ended multi-step tasks |
| Disciplines Covered | Physics, chemistry, biology | Physics, chemistry, biology |

Frequently Asked Questions (FAQs)

What is the purpose of OpenAI’s FrontierScience benchmark?

FrontierScience evaluates whether AI models can perform expert-level scientific reasoning across physics, chemistry, and biology. It measures AI’s ability to contribute meaningfully to scientific research through multi-step mathematical reasoning, deep domain knowledge, and interdisciplinary synthesis, capabilities that traditional multiple-choice benchmarks struggle to assess.

How is FrontierScience different from GPQA or other scientific benchmarks?

GPQA became saturated when GPT-5.2 scored 92%, far exceeding the 70% expert baseline. FrontierScience addresses this limitation with original, expert-crafted problems that don’t appear in AI training datasets. It also includes open-ended research tasks graded with detailed rubrics, not just multiple-choice questions.

Can AI models currently conduct independent scientific research?

No. GPT-5.2’s 25% score on the FrontierScience-Research track reveals significant limitations in open-ended scientific reasoning. Current AI systems excel at structured tasks like literature searches and mathematical proofs but require human oversight for problem framing, experimental design, validation, and interpretation.

Which AI model performs best on FrontierScience?

GPT-5.2 achieved the highest scores among evaluated models, with 77% on the Olympiad track and 25% on the Research track. Other models tested included Gemini 3 Pro, though detailed comparison scores weren’t fully disclosed.

How are scientists using GPT-5 in research today?

Researchers use GPT-5 to accelerate cross-disciplinary literature searches, verify mathematical proofs, and generate hypotheses. In reported cases, these applications have reduced multi-day tasks to hours, though scientists retain responsibility for research direction, validation, and interpretation.

What are FrontierScience’s main limitations?

The benchmark focuses on constrained, text-based problems and doesn’t capture hypothesis generation, multimodal data analysis, or physical experimentation. It covers only three disciplines (physics, chemistry, and biology), leaving out mathematics, computer science, and engineering.

Will FrontierScience expand to other scientific fields?

Yes, OpenAI plans to expand FrontierScience into new domains and integrate it with real-world research evaluations. Future versions may include additional disciplines and assessment methods that better capture the full scope of scientific inquiry.

What is OpenAI FrontierScience?

FrontierScience is an AI benchmark launched by OpenAI in December 2025 that evaluates expert-level scientific reasoning across physics, chemistry, and biology. It consists of 700+ original questions designed by Olympiad medalists and PhD scientists to test genuine scientific reasoning rather than factual recall. GPT-5.2 scored 77% on structured problems but only 25% on open-ended research tasks.

How does FrontierScience differ from other AI benchmarks?

Unlike traditional benchmarks that use multiple-choice questions or saturated datasets, FrontierScience features original, expert-crafted problems across two tracks: Olympiad (100 constrained theoretical questions) and Research (60 open-ended multi-step tasks). Questions are validated by international science competition medalists and PhD-level scientists, ensuring they reflect real scientific challenges.

What do GPT-5.2’s FrontierScience scores reveal?

GPT-5.2 achieved 77% accuracy on the FrontierScience-Olympiad track, demonstrating expert-level competence on structured scientific problems. However, it scored just 25% on the Research track, revealing significant limitations in open-ended scientific reasoning. This gap shows AI excels at constrained problems but struggles with the ambiguous, multi-step reasoning required in actual research.

How are scientists using AI for research today?

Researchers currently use advanced AI models like GPT-5 to accelerate structured research tasks, including cross-disciplinary literature searches, mathematical proof verification, and hypothesis generation. These applications reduce work from days or weeks to hours. However, scientists retain human judgment for problem framing, experimental validation, and result interpretation.