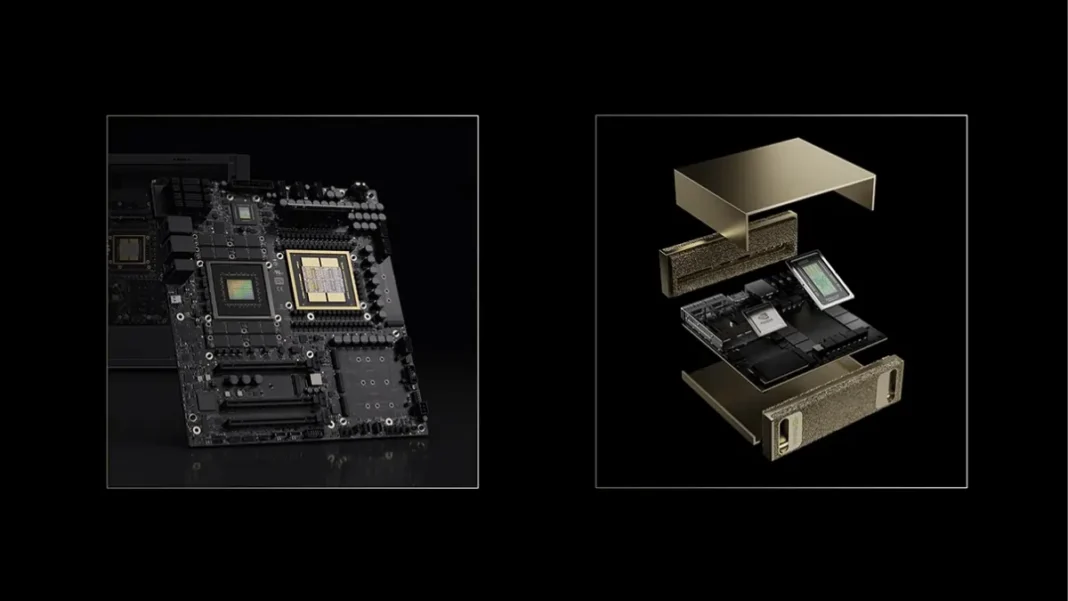

NVIDIA announced DGX Spark and DGX Station at CES 2026, bringing data-center-class AI computing to desktop systems. The Grace Blackwell-powered machines enable developers to run open-source AI models with up to 1 trillion parameters locally, marking a significant shift in how frontier models are deployed and accessed.

What’s New

NVIDIA introduced two desktop AI supercomputers: DGX Spark for mainstream AI development and DGX Station for enterprise-scale frontier models. DGX Spark handles models of up to 200 billion parameters, while DGX Station targets models of up to 1 trillion parameters and runs large open models including DeepSeek-V3.2, Mistral Large 3, Meta Llama 4 Maverick, and Qwen3.

Both systems use the Grace Blackwell architecture and support the NVFP4 data format, which NVIDIA says compresses AI models by up to 70% without degrading output quality. DGX Station features the GB300 Grace Blackwell Ultra superchip with 775GB of coherent memory and petaflop-level performance.
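As a rough illustration of where a figure like 70% can come from, the sketch below works through the weight-memory arithmetic for a 4-bit block-scaled format. The block layout (16 four-bit values sharing one 8-bit scale factor) and the 16-bit baseline are assumptions made for illustration, not details from NVIDIA's announcement; the parameter counts and memory sizes are the ones quoted in this article. It counts weights only, so KV cache, activations, and runtime overhead are left out.

```python
# Back-of-the-envelope weight-memory arithmetic for a 4-bit block-scaled format.
# ASSUMPTIONS (not from the article): 16 weights per block, one 8-bit scale
# factor per block, and a 16-bit (FP16/BF16) model as the baseline.

BLOCK_SIZE = 16     # weights sharing one scale factor (assumed)
VALUE_BITS = 4      # bits per quantized weight
SCALE_BITS = 8      # bits per per-block scale factor (assumed)
BASELINE_BITS = 16  # bits per weight in the uncompressed baseline (assumed)


def bits_per_weight() -> float:
    """Effective bits per weight, including the per-block scale overhead."""
    return VALUE_BITS + SCALE_BITS / BLOCK_SIZE


def compression_vs_baseline() -> float:
    """Fraction of weight memory saved relative to the 16-bit baseline."""
    return 1.0 - bits_per_weight() / BASELINE_BITS


def weight_gigabytes(num_params: float) -> float:
    """Approximate weight storage in GB (1 GB = 10**9 bytes)."""
    return num_params * bits_per_weight() / 8 / 1e9


if __name__ == "__main__":
    print(f"effective bits per weight: {bits_per_weight():.2f}")
    print(f"saving vs 16-bit baseline: {compression_vs_baseline():.1%}")
    # Parameter counts and memory capacities as quoted in the article.
    systems = [("DGX Spark", 200e9, 128), ("DGX Station", 1e12, 775)]
    for name, params, memory_gb in systems:
        needed = weight_gigabytes(params)
        print(f"{name}: ~{needed:.1f} GB of weights vs {memory_gb} GB of memory")
```

Under these assumptions each weight costs about 4.5 bits, roughly 72% less than a 16-bit copy. A 200-billion-parameter model then needs about 112.5 GB for weights and a 1-trillion-parameter model about 562.5 GB, which is consistent with the 128GB and 775GB memory sizes quoted for the two systems.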

NVIDIA said its collaboration with the llama.cpp project delivers a 35% average performance boost when running state-of-the-art models on DGX Spark. The systems come preconfigured with NVIDIA AI software and CUDA-X libraries, and support frameworks such as vLLM and SGLang.
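In practice, vLLM, SGLang, and llama.cpp's bundled server can each expose an OpenAI-compatible HTTP endpoint, so a locally hosted model can be queried with a few lines of standard-library Python. The sketch below is a minimal example under stated assumptions: the address `http://localhost:8000` and the model name `my-local-model` are hypothetical placeholders, and the helper names `build_chat_request` and `ask` are introduced here for illustration rather than taken from any NVIDIA tooling.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST to an OpenAI-compatible chat endpoint."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(base_url: str, model: str, prompt: str, timeout: float = 60.0) -> str:
    """Send the request to a running server and return the reply text."""
    request = build_chat_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Hypothetical address and model name; adjust to match the local server.
    request = build_chat_request(
        "http://localhost:8000", "my-local-model", "Summarize NVFP4 in one line."
    )
    # Only inspect the request here; call ask(...) once a server is running.
    print(request.get_method(), request.full_url)
    print(request.data.decode("utf-8"))
```

Because the request shape is the same across these servers, pointing `base_url` at a different host is typically the only change needed to move from a desktop system to a remote deployment.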

Why It Matters

DGX Spark and Station eliminate the need for cloud-based infrastructure for many AI workloads, giving developers local control over data, IP, and model iterations. Organizations can now prototype and fine-tune large language models on desktop hardware before deploying to production environments.

For video creators, NVIDIA says DGX Spark generates video 8x faster than a MacBook Pro with M4 Max, so AI rendering tasks can be offloaded from a laptop to a local system. Enterprises gain secure, on-premises AI inference capabilities without recurring cloud compute costs.

DGX Spark vs. DGX Station

| Feature | DGX Spark | DGX Station |
|---|---|---|
| Chip | GB10 Superchip | GB300 Ultra Superchip |
| Memory | 128GB unified | 775GB coherent |
| Max Model Size | 200B parameters | 1T parameters |
| Performance | 1 petaflop | Data-center class |
| Target Users | Developers, researchers | Enterprise AI labs |
| Networking | ConnectX (two systems can be linked) | ConnectX-8 SuperNIC 800Gb/s |

Industry Adoption

Hugging Face, IBM, and JetBrains confirmed DGX Spark integration for local AI agents, OpenRAG deployments, and secure code assistance. Open-source projects vLLM and SGLang validated DGX Station for GB300-specific development and large-scale model testing.

will.i.am’s TRINITY self-balancing vehicle uses DGX Spark for real-time vision language model inference in urban mobility applications. DGX Spark also powers RTX Remix workflows for 3D asset generation and on-device CUDA coding assistants with Nsight.

What’s Next

DGX Spark and GB10 systems ship now from Acer, Amazon, ASUS, Dell Technologies, GIGABYTE, HP, Lenovo, Micro Center, MSI, and PNY. DGX Station launches in spring 2026 from ASUS, Boxx, Dell, GIGABYTE, HP, MSI, and Supermicro.

NVIDIA AI Enterprise software support for DGX Spark becomes available at the end of January 2026, including model installation libraries and GPU optimization drivers. Six new DGX Spark playbooks covering Nemotron 3 Nano, robotics training, and genomics workflows are now available.

Frequently Asked Questions

What is NVIDIA DGX Spark?

DGX Spark is a desktop AI supercomputer powered by the GB10 Grace Blackwell Superchip with 128GB memory and 1 petaflop of AI performance. It runs open-source AI models up to 200 billion parameters locally and costs significantly less than traditional data center systems.

How much memory does DGX Station have?

DGX Station features 775GB of coherent memory with NVFP4 precision support, enabling it to run frontier models of up to 1 trillion parameters, such as DeepSeek-V3.2 and Meta Llama 4 Maverick, entirely on-device.

When can I buy DGX Spark and DGX Station?

DGX Spark is available now from major manufacturers including Dell, HP, ASUS, Lenovo, and MSI. DGX Station ships in spring 2026 from ASUS, Boxx, Dell, GIGABYTE, HP, MSI, and Supermicro.

Can DGX Spark connect to cloud services?

Yes. Developers can prototype and fine-tune models locally on DGX Spark, then seamlessly deploy them to NVIDIA DGX Cloud or any accelerated cloud infrastructure. Two DGX Spark systems can be linked via ConnectX networking for larger workloads.