Quick Brief

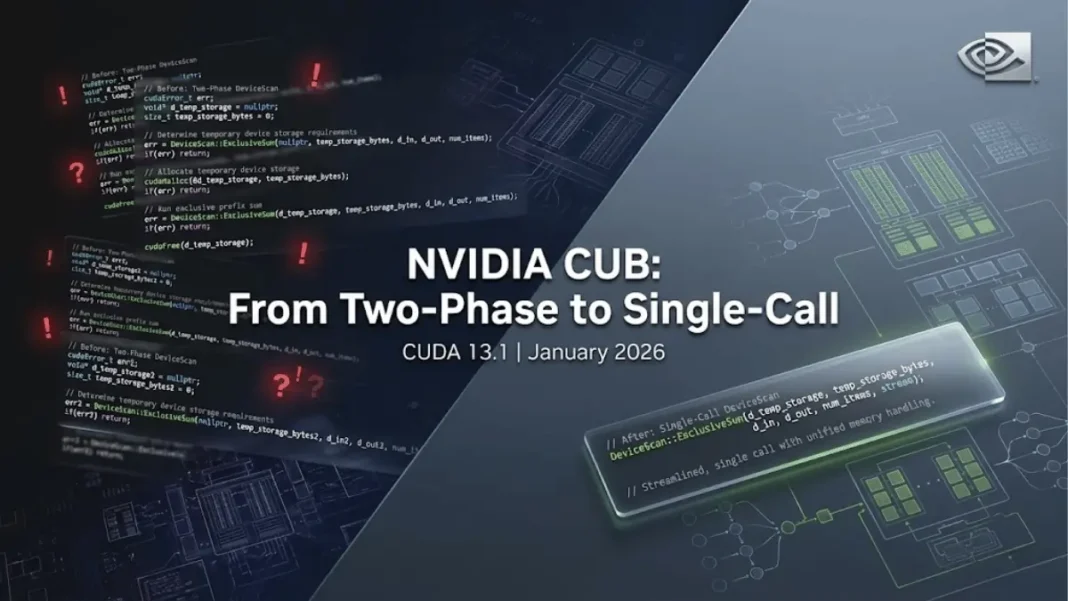

- The Launch: NVIDIA deployed a single-call API for its CUB library in CUDA Toolkit 13.1 (released January 12, 2026), eliminating the traditional two-phase memory allocation workflow

- The Impact: Developers using PyTorch, TensorFlow, and custom GPU kernels gain zero-overhead simplification of GPU primitive operations without sacrificing performance

- The Context: CUB serves as the foundational primitive layer across NVIDIA’s AI accelerator ecosystem, where the company maintains over 80% market share in discrete GPU accelerators

NVIDIA released a simplified single-call API for its CUB (CUDA Unbound) library as part of CUDA Toolkit 13.1 on January 12, 2026, removing the need for developers to manually manage the two-phase memory allocation workflow that has defined GPU primitive programming since CUB’s inception. The update addresses a longstanding developer pain point: every CUB operation, whether sorting, scanning, or histogram generation, required two function calls, the first to estimate memory requirements and the second to execute the algorithm.

CUB’s Role in NVIDIA’s Accelerated Computing Stack

CUB functions as the foundational device-side primitive layer within NVIDIA’s CUDA Core Compute Libraries (CCCL), version 3.1.4 in the latest release. Unlike Thrust, which provides host-side interfaces similar to the C++ STL for rapid prototyping, CUB enables developers to embed highly optimized algorithms directly into custom CUDA kernels. This architectural distinction makes CUB the preferred solution for performance-critical applications where milliseconds matter, including PyTorch’s tensor operations and real-time inference pipelines deployed across NVIDIA’s ecosystem.

The library handles standard GPU algorithms (scan, reduce, sort, histogram) with maximum hardware utilization while abstracting away manual thread management. Major frameworks already depend on CUB: PyTorch wraps CUB calls in preprocessor macros that automate the two-phase pattern, and its maintainers must keep that workaround code up to date.

Eliminating the Two-Phase Allocation Bottleneck

The traditional CUB workflow required developers to invoke each primitive twice: first with a null pointer to calculate the temporary storage size in bytes, then again with allocated memory to execute the actual computation. This design separated memory allocation from execution, allowing advanced users to reuse or share memory buffers across multiple algorithms. That flexibility is valuable to a “non-negligible subset” of users but cumbersome for the majority.

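```cpp
// OLD: Two-phase API
void*  d_temp_storage     = nullptr;
size_t temp_storage_bytes = 0;
// Phase 1: null storage pointer, so the call only reports the bytes needed
cub::DeviceScan::ExclusiveSum(nullptr, temp_storage_bytes, d_input, d_output, num_items);
cudaMalloc(&d_temp_storage, temp_storage_bytes);
// Phase 2: same arguments plus the allocated storage, so the scan runs
cub::DeviceScan::ExclusiveSum(d_temp_storage, temp_storage_bytes, d_input, d_output, num_items);

// NEW: Single-call API
cub::DeviceScan::ExclusiveSum(d_input, d_output, num_items);
```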

NVIDIA’s performance benchmarks demonstrate zero overhead between the legacy two-phase calls and the new single-call implementation across varying input sizes. Memory allocation still occurs internally using asynchronous device memory resources, maintaining the same underlying efficiency while hiding boilerplate code.
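The idea of hiding both phases behind one call can be sketched on the host, without a GPU. The following is a minimal illustration and not NVIDIA's implementation: `mock_exclusive_sum` is a hypothetical stand-in that copies the two-phase convention (a null pointer means "size query"), and `single_call` and `exclusive_sum` are assumed helper names. It allocates with `std::malloc`, whereas the library is described above as using asynchronous device memory resources.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdlib>
#include <memory>
#include <vector>

// Hypothetical host-side stand-in for a two-phase primitive (NOT a CUB call).
// Null temp pointer: report the scratch size. Non-null: run the scan.
int mock_exclusive_sum(void* temp, std::size_t& temp_bytes,
                       const int* in, int* out, std::size_t n) {
    const std::size_t needed = n * sizeof(int);
    if (temp == nullptr) {
        temp_bytes = needed;               // phase 1: size query only
        return 0;
    }
    if (temp_bytes < needed) return 1;     // scratch buffer too small
    int* scratch = static_cast<int*>(temp);
    int running = 0;
    for (std::size_t i = 0; i < n; ++i) {  // exclusive prefix sum into scratch
        scratch[i] = running;
        running += in[i];
    }
    for (std::size_t i = 0; i < n; ++i) out[i] = scratch[i];
    return 0;
}

// Assumed helper: performs both phases so the caller makes a single call.
template <class TwoPhaseFn, class... Args>
int single_call(TwoPhaseFn fn, Args... args) {
    std::size_t temp_bytes = 0;
    if (int err = fn(nullptr, temp_bytes, args...)) return err;  // query
    std::unique_ptr<void, decltype(&std::free)> temp(
        std::malloc(temp_bytes ? temp_bytes : 1), &std::free);   // allocate
    assert(temp != nullptr);
    return fn(temp.get(), temp_bytes, args...);                  // run
}

// Convenience entry point built on the helper above.
std::vector<int> exclusive_sum(const std::vector<int>& in) {
    std::vector<int> out(in.size());
    const int err = single_call(mock_exclusive_sum, in.data(), out.data(), in.size());
    assert(err == 0);
    (void)err;
    return out;
}
```

Calling `exclusive_sum({3, 1, 4, 1})` yields `{0, 3, 4, 8}`. The scratch buffer is released when `single_call` returns, which is the convenience the single-call form trades against the buffer reuse that the two-phase form allows.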

The two-phase API's signature ambiguity created additional friction: because the estimation and execution calls share an identical function signature, developers have no compile-time indication of which parameters must stay consistent between phases. The d_input and d_output arguments are only used during the second call, yet nothing in the API surface prevents a caller from changing arguments between phases.
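The hazard can be reproduced with a small host-only mock. This is a sketch under stated assumptions: `mock_scan` is a hypothetical function that imitates the two-phase convention and rejects an undersized buffer, and `run_two_phase` is an assumed helper name. How the real library reacts to mismatched arguments is not covered here.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical two-phase mock (NOT CUB): a null temp pointer means "size query".
bool mock_scan(void* temp, std::size_t& temp_bytes,
               const int* in, int* out, std::size_t n) {
    const std::size_t needed = n * sizeof(int);
    if (temp == nullptr) { temp_bytes = needed; return true; }
    if (temp_bytes < needed) return false;   // this mock detects undersized scratch
    int running = 0;
    for (std::size_t i = 0; i < n; ++i) {    // exclusive prefix sum
        const int v = in[i];
        out[i] = running;
        running += v;
    }
    return true;
}

// Both phases share one signature, so the compiler cannot stop a caller
// from passing a different item count to each. Returns phase 2's result.
bool run_two_phase(std::size_t query_items, std::size_t run_items) {
    std::vector<int> in(run_items, 1), out(run_items);
    std::size_t temp_bytes = 0;
    mock_scan(nullptr, temp_bytes, in.data(), out.data(), query_items);
    std::vector<int> temp(temp_bytes / sizeof(int) + 1);  // never empty
    return mock_scan(temp.data(), temp_bytes, in.data(), out.data(), run_items);
}

void demo_mismatch() {
    assert(run_two_phase(8, 8));    // consistent arguments: succeeds
    assert(!run_two_phase(4, 8));   // sized for 4 items, run with 8: rejected
}
```

The mismatched call compiles cleanly and only fails at run time, and only because this mock checks the size. A single-call overload removes the second argument list, so there is nothing left to keep in sync.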

AdwaitX Analysis: Infrastructure Productivity Impact

This API evolution directly addresses technical debt accumulation in production codebases. PyTorch maintains CUB wrapper macros that handle automatic memory management and dual invocations, but macros obscure control flow and complicate debugging, creating maintenance overhead for framework maintainers. TensorFlow and other GPU-accelerated libraries face identical wrapper requirements when integrating CUB primitives.

The single-call API eliminates repetitive boilerplate code by removing duplicate function calls while preserving full backward compatibility with existing two-phase calls. For organizations managing thousands of custom CUDA kernels, this translates to measurable developer velocity gains and reduced onboarding friction for engineers new to GPU programming.

NVIDIA’s CUDA ecosystem maintains over 80% market share in AI accelerators despite competition from AMD ROCm, Intel oneAPI, and custom TPUs, a dominance rooted in 19 years of developer tooling investment and ecosystem lock-in effects. Continuous API refinements like CUB’s single-call model reinforce these switching costs by improving developer experience within the CUDA platform, making migration to alternative GPU solutions less attractive even as hardware competition intensifies.

Advanced Configuration Through Environment Objects

Beyond simplification, the new API introduces an extensible env argument that consolidates execution parameters into a type-safe object. Developers can now combine custom CUDA streams, memory resources, and future configuration options (deterministic execution, user-defined tuning policies) in a single composable interface:

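```cpp
// Stream on device 0 that the algorithm should run on
cuda::stream custom_stream{cuda::device_ref{0}};

// Property that selects the memory resource used for temporary storage
auto memory_prop = cuda::std::execution::prop{cuda::mr::get_memory_resource, cuda::device_default_memory_pool(cuda::device_ref{0})};

// Combine stream and memory resource into one environment object
auto env = cuda::std::execution::env{custom_stream.get(), memory_prop};

cub::DeviceScan::ExclusiveSum(d_input, d_output, num_items, env);
```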

This architectural shift moves CUB toward a “control panel” model in which execution features compose flexibly instead of being fixed by function parameter order. CUDA 13.1 ships single-call support for an initial set of algorithms, including DeviceReduce (Reduce, Sum, Min, Max, ArgMin, ArgMax) and DeviceScan (ExclusiveSum, ExclusiveScan), with additional primitives tracked in the NVIDIA/cccl GitHub repository.
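The composable "control panel" idea can be illustrated in plain C++17 without any CUDA headers. The sketch below is an assumption-laden toy, not CCCL's implementation: the names `prop`, `env`, `with`, `query_or`, `stream_id_tag`, and `deterministic_tag` are hypothetical and only mirror the shape of the interface described above. Each option is a tagged property, an environment bundles any subset of them in any order, and an algorithm asks for a tag with a fallback.

```cpp
#include <cassert>
#include <type_traits>
#include <utility>

// Illustrative tags; hypothetical names, not CCCL identifiers.
struct stream_id_tag {};
struct deterministic_tag {};

// A property pairs a tag type with a value.
template <class Tag, class Value>
struct prop { Value value; };

template <class Tag, class Value>
prop<Tag, Value> with(Value v) { return prop<Tag, Value>{v}; }

// An environment inherits from every property it was built from.
template <class... Props>
struct env : Props... {
    env(Props... ps) : Props(ps)... {}
};

// Selects the base class whose tag matches; Value is deduced from that base.
template <class Tag, class Value>
Value get_value(const prop<Tag, Value>& p) { return p.value; }

// Detects at compile time whether Env carries a property for Tag.
template <class Tag, class Env, class = void>
struct has_prop : std::false_type {};
template <class Tag, class Env>
struct has_prop<Tag, Env,
    std::void_t<decltype(get_value<Tag>(std::declval<const Env&>()))>>
    : std::true_type {};

// Returns the property's value if present, otherwise the fallback.
template <class Tag, class Env, class Fallback>
auto query_or(const Env& e, Fallback fallback) {
    if constexpr (has_prop<Tag, Env>::value) return get_value<Tag>(e);
    else return fallback;
}

void demo_env() {
    env e{with<stream_id_tag>(7), with<deterministic_tag>(true)};
    assert(query_or<stream_id_tag>(e, 0) == 7);        // property present
    assert(query_or<deterministic_tag>(e, false));     // property present
    assert(query_or<stream_id_tag>(env<>{}, 0) == 0);  // empty env: fallback
}
```

Because lookups go by tag and not by position, a new option can be added later without changing the signature of any function that accepts an environment, which is the extensibility argument made above.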

Technical Specifications: CUDA 13.1 Component Versions

| Component | Version | Architecture Support | Platform Availability |
|---|---|---|---|
| CUB | 3.1.4 | x86_64, arm64-sbsa | Linux, Windows |
| Thrust | 3.1.4 | x86_64, arm64-sbsa | Linux, Windows |
| libcu++ | 3.1.4 | x86_64, arm64-sbsa | Linux, Windows |
| CUDA Runtime | 13.1.80 | x86_64, arm64-sbsa | Linux, Windows, WSL |
| NVCC Compiler | 13.1.115 | x86_64, arm64-sbsa | Linux, Windows, WSL |
| Required Linux Driver | 590.48.01 | x86_64, arm64-sbsa | Linux |

Source: NVIDIA CUDA Toolkit 13.1 Update 1 Release Notes

The CUDA 13.1 release mandates C++17 minimum for bundled CCCL libraries and transitions the host compiler from GCC 14 to GCC 15 on Linux systems. These toolchain requirements align with modern C++ standards adoption while maintaining compatibility with established enterprise development environments.

Deployment Roadmap for Production Environments

Organizations running GPU-accelerated workloads should evaluate single-call API adoption in three phases:

- Immediate (Q1 2026): Update development environments to CUDA 13.1 and validate compatibility with existing kernels using the unchanged two-phase API

- Incremental Migration (Q2-Q3 2026): Refactor high-frequency CUB calls in performance-critical paths, prioritizing operations wrapped in custom macros

- Full Integration (Q4 2026+): Adopt environment-based execution configuration for new kernel development, leveraging memory resource customization for multi-stream workloads
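During an incremental migration, a codebase may need to build against both older and newer toolkits. One possible approach is a compile-time gate on the CCCL version. The sketch below rests on two assumptions that should be checked against the CCCL documentation: that the `<cuda/version>` header defines `CCCL_MAJOR_VERSION` and `CCCL_MINOR_VERSION`, and that 3.1 (taken from the version table above) is the right threshold. `version_at_least` and `kUseSingleCallCub` are hypothetical names.

```cpp
// Pull in CCCL's version macros when the header is available (assumed to be
// <cuda/version>); the file still compiles on machines without CUDA.
#if defined(__has_include)
#  if __has_include(<cuda/version>)
#    include <cuda/version>
#  endif
#endif

// True when (major, minor) is at or above (req_major, req_minor).
constexpr bool version_at_least(int major, int minor,
                                int req_major, int req_minor) {
    return major > req_major || (major == req_major && minor >= req_minor);
}

// Assumption: single-call overloads are available from CCCL 3.1 onward.
#if defined(CCCL_MAJOR_VERSION) && defined(CCCL_MINOR_VERSION)
constexpr bool kUseSingleCallCub =
    version_at_least(CCCL_MAJOR_VERSION, CCCL_MINOR_VERSION, 3, 1);
#else
constexpr bool kUseSingleCallCub = false;  // no CCCL found: keep two-phase path
#endif

static_assert(version_at_least(3, 1, 3, 1), "exact match passes");
static_assert(!version_at_least(3, 0, 3, 1), "older minor version fails");
```

Call sites can then branch on `kUseSingleCallCub` with `if constexpr` inside a template, or on the same macros with `#if`, keeping the two-phase path as the fallback until every build environment has moved to the newer toolkit.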

PyTorch and TensorFlow maintainers will likely integrate single-call APIs in upcoming releases to reduce internal wrapper complexity, though timeline specifics remain unannounced. Developers can track CCCL evolution through NVIDIA’s GitHub tracking issues, where the CUB team publishes environment-based overload progress.

Meta increased AI infrastructure spending to $60-65 billion for 2025, underscoring enterprise commitment to CUDA-based toolchains in production environments.

Frequently Asked Questions (FAQs)

What is the NVIDIA CUB library?

CUB provides GPU-optimized device-side primitives (scan, reduce, sort) for integrating high-performance algorithms into custom CUDA kernels, part of NVIDIA’s CUDA Core Compute Libraries.

How does the CUB single-call API improve performance?

It does not make the algorithms themselves faster. NVIDIA reports zero runtime overhead compared with two-phase calls; the gain is less boilerplate, because temporary memory is allocated internally.

When was CUDA 13.1 released?

NVIDIA released CUDA Toolkit 13.1 Update 1 on January 12, 2026, featuring CUB 3.1.4 with single-call API support.

What is the difference between CUB and Thrust?

Thrust offers host-side STL-like interfaces for rapid prototyping, while CUB provides device-side primitives for embedding optimized algorithms directly into custom kernels.