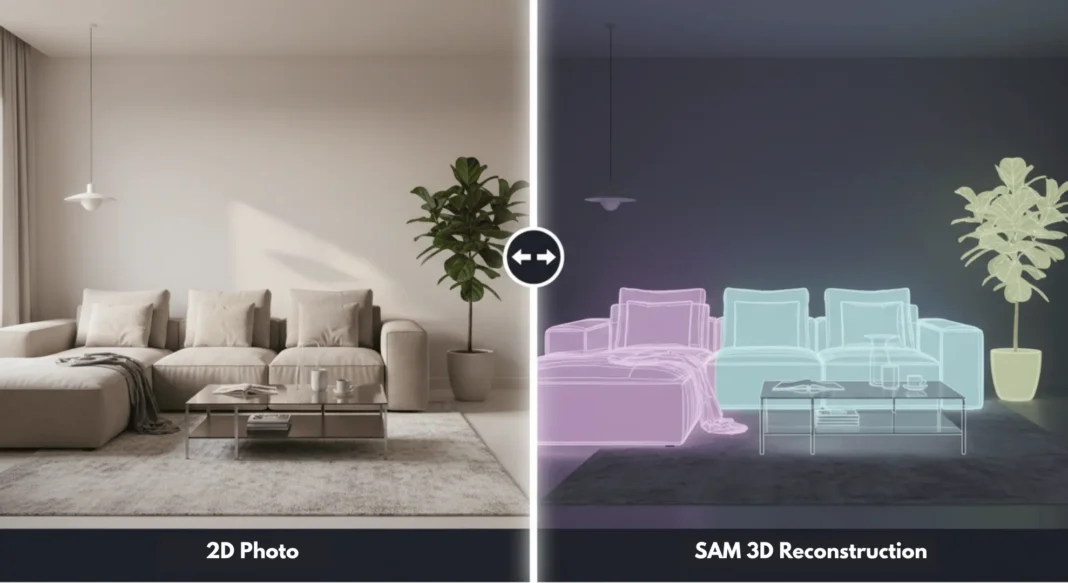

This AI just turned a single photograph into a fully textured 3D model you can drop into Blender or Unity. No multi-camera setup, no hours of manual modeling, just one click. On November 19, 2025, Meta dropped what might be the biggest computer vision release of the year: SAM 3D, a pair of models that reconstruct objects and human bodies from flat images with startling accuracy. Here’s everything you need to know, from technical architecture to hands-on implementation.

What Is SAM 3D and Why It Matters

SAM 3D isn’t an incremental update; it’s a fundamental leap in how machines understand three-dimensional space from two-dimensional data. While previous 3D reconstruction tools required multiple angles, specialized hardware, or manual annotation, SAM 3D works from a single RGB image. The system extends Meta’s Segment Anything family, leveraging the same promptable interface that made SAM 2 revolutionary, but it now outputs complete 3D assets instead of just masks.

The Two-Model Architecture Explained

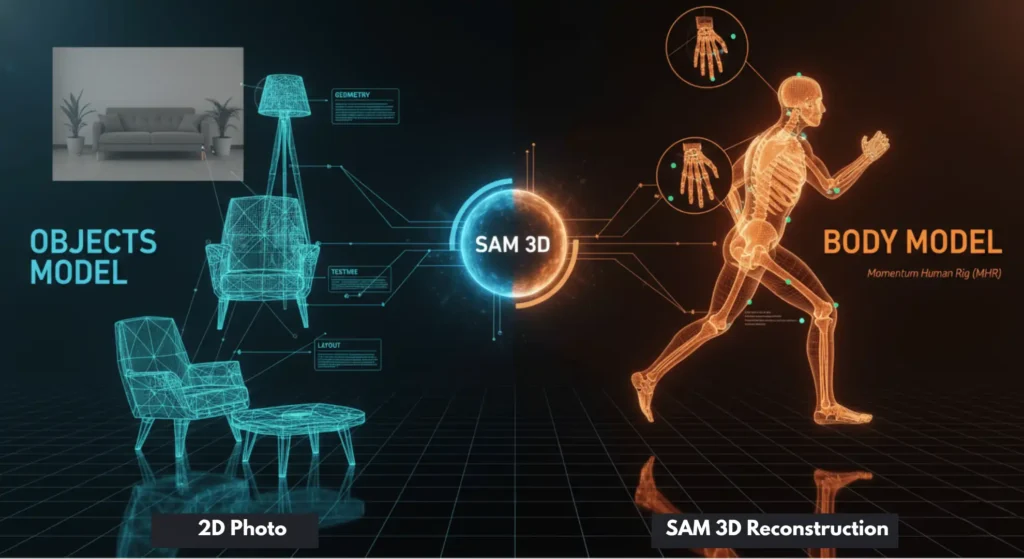

Meta split SAM 3D into specialized models because reconstructing a coffee mug requires vastly different logic than modeling human anatomy.

SAM 3D Objects handles general scene reconstruction: furniture, vehicles, gadgets, and entire room layouts. It predicts geometry, texture, and spatial layout simultaneously, outputting models you can immediately import into 3D software. The model excels at understanding object boundaries even under occlusion, estimating depth from visual cues like shadows and perspective.

SAM 3D Body focuses exclusively on human mesh recovery. It doesn’t just detect people; it reconstructs full-body 3D meshes with accurate pose and shape estimation, including hands and feet. The model uses a novel parametric representation called Momentum Human Rig (MHR) that decouples skeletal structure from soft tissue, giving animators unprecedented control.

Key Technical Breakthroughs

Three innovations make SAM 3D practical for real-world use. First, 3D pre-training on synthetic data lets the model learn general 3D principles before fine-tuning on real images, dramatically improving robustness. Second, promptable multi-step refinement aligns 3D predictions with 2D visual evidence through iterative feedback loops. Third, the Momentum Human Rig representation separates pose from shape, enabling more accurate human reconstruction than SMPL-based methods.

How SAM 3D Stacks Up Against Previous Versions

SAM 3D isn’t replacing SAM 3; they’re complementary. SAM 3 excels at detecting and segmenting objects in 2D with text prompts (“yellow school bus”), while SAM 3D takes those segmented objects and gives them depth and volume. Think of SAM 3 as the smart selector and SAM 3D as the 3D printer. SAM 3 runs at 30ms/frame on H200 GPUs handling 100+ objects, while SAM 3D takes slightly longer at ~50ms for complex scenes but produces full 3D assets.

Under the Hood: Technical Specifications

Model Architecture & Training Data

SAM 3D Objects uses a transformer encoder-decoder architecture optimized for 3D spatial reasoning. The model was trained on a massive dataset combining Artist Objects (curated 3D assets) and automatically generated synthetic scenes, totaling approximately 10 million training examples. SAM 3D Body trained on 8 million high-quality human images with multi-stage annotation, using both human and AI annotators to achieve precision.

Both models leverage 3D sparse flash attention for efficiency, reducing computational overhead by 8.75× compared to naive 3D implementations. This makes interactive use feasible on commodity GPUs, not just data-center hardware.

Performance Benchmarks (Real Numbers)

On the SA-Co benchmark, SAM 3D Objects achieves 47.0 zero-shot mask AP, a 22% improvement over the previous state-of-the-art. SAM 3D Body leads multiple 3D human body benchmarks, showing particular strength in handling occlusions and unusual poses.

Inference Speed Benchmarks:

- H200 GPU: 30-50ms per image (20-33 FPS)

- A100 GPU: 80-120ms per image (8-12 FPS)

- RTX 4090: 150-200ms per image (5-6 FPS)

- Mac M3 Ultra: ~300ms per image (~3 FPS)
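The FPS figures in the list above are just the reciprocal of the per-image latency. A minimal sketch of that arithmetic, using only the latencies quoted above (the function name `latency_to_fps` is ours, for illustration):

```python
def latency_to_fps(latency_ms: float) -> float:
    """Convert a per-image latency in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return 1000.0 / latency_ms


# Latency ranges in milliseconds, copied from the benchmark list above.
benchmarks = {
    "H200": (30, 50),
    "A100": (80, 120),
    "RTX 4090": (150, 200),
    "Mac M3 Ultra": (300, 300),
}

for gpu, (fast_ms, slow_ms) in benchmarks.items():
    # The slowest latency gives the lowest frame rate, so the range flips.
    low_fps = latency_to_fps(slow_ms)
    high_fps = latency_to_fps(fast_ms)
    print(f"{gpu}: {low_fps:.1f}-{high_fps:.1f} FPS")
```

Running the same conversion on your own timings is a quick sanity check on whether a card is anywhere near interactive rates.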

The models can process images up to 1024×1024 resolution, though 512×512 offers the best speed-quality tradeoff.

Hardware Requirements & Inference Speed

You don’t need a supercomputer. SAM 3D runs on consumer hardware, though performance scales dramatically with GPU memory. Minimum specs: 16GB VRAM, though 24GB+ is recommended for batch processing. The models are optimized for NVIDIA’s TensorRT and support FP16 inference, cutting memory usage in half with minimal quality loss.
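To see why FP16 roughly halves weight memory, here is a back-of-the-envelope sketch. The parameter count is a made-up placeholder, not a published SAM 3D figure, and the estimate covers weights only; activations and framework overhead come on top.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Estimate the memory taken by model weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 1024**3


# Hypothetical model size, chosen only to make the arithmetic concrete.
NUM_PARAMS = 1_000_000_000

for dtype in ("fp32", "fp16"):
    print(f"{dtype}: {weight_memory_gb(NUM_PARAMS, dtype):.2f} GiB of weights")
```

Swap in the real parameter count once you know it; the 2:1 ratio between the two precisions holds regardless.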

For real-time applications, Meta recommends H200 GPUs, but the models remain usable on RTX 4090s for offline processing. CPU inference is possible but impractical: expect 5-10 seconds per image on a high-end desktop processor.

File Formats & Output Quality

SAM 3D Objects outputs standard .obj files with accompanying .mtl material files and .jpg texture images. Geometry resolution is currently limited; complex objects may appear slightly smoothed compared to high-end photogrammetry, but the tradeoff is speed and accessibility.
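Because .obj is a plain-text format, you can inspect an exported mesh before opening it in Blender or Unity. The sketch below counts the common record types; the sample data is a hand-written triangle, not actual SAM 3D output, and `obj_stats` is our own helper name.

```python
from collections import Counter
from typing import Iterable

# Record types worth counting: vertices, UVs, normals, faces, material refs.
TRACKED = {"v", "vt", "vn", "f", "mtllib", "usemtl"}


def obj_stats(lines: Iterable[str]) -> Counter:
    """Count vertex, texture-coordinate, normal, face, and material records."""
    counts = Counter()
    for line in lines:
        parts = line.split()
        # Skip blank lines and comments.
        if not parts or parts[0].startswith("#"):
            continue
        if parts[0] in TRACKED:
            counts[parts[0]] += 1
    return counts


# Hand-written sample: one textured triangle referencing a material file.
SAMPLE_OBJ = """\
# a single textured triangle
mtllib model.mtl
v 0.0 0.0 0.0
v 1.0 0.0 0.0
v 0.0 1.0 0.0
vt 0.0 0.0
vt 1.0 0.0
vt 0.0 1.0
usemtl material_0
f 1/1 2/2 3/3
"""

stats = obj_stats(SAMPLE_OBJ.splitlines())
print(dict(stats))
```

For a real file, pass an open file handle instead of `SAMPLE_OBJ.splitlines()`; the face count gives a quick read on how dense the generated mesh is.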

SAM 3D Body uses the new Meta Momentum Human Rig (.mhr) format, which you can convert to FBX or glTF using Meta’s open-source tools. The model estimates body shape with ~5mm average accuracy and joint rotation within 3-5 degrees of ground truth.
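The article doesn’t say how the joint rotation error is measured. One common choice is the geodesic angle between the predicted and ground-truth rotation matrices, sketched here in plain Python as an assumption about the metric rather than Meta’s evaluation code.

```python
import math


def rot_z(degrees: float) -> list:
    """Build a 3x3 rotation matrix for a rotation about the z-axis."""
    c = math.cos(math.radians(degrees))
    s = math.sin(math.radians(degrees))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def rotation_error_deg(r_pred: list, r_true: list) -> float:
    """Geodesic angle in degrees between two 3x3 rotation matrices."""
    # trace(R_pred^T @ R_true) equals the sum of elementwise products.
    trace = sum(r_pred[i][j] * r_true[i][j] for i in range(3) for j in range(3))
    # Clamp to guard against floating-point drift outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


# A predicted elbow rotation that is 4 degrees off the ground truth.
error = rotation_error_deg(rot_z(34.0), rot_z(30.0))
print(f"rotation error: {error:.2f} degrees")
```

Averaging this value over all joints and test images would give a single number comparable to the 3-5 degree range quoted above.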

Hands-On Implementation Guide

Getting Started with SAM 3D Objects

Installation via Hugging Face

The fastest path to running SAM 3D Objects is to install the package from GitHub and pull the weights from the Hugging Face Hub. Create a new Python environment and install dependencies:

```bash
pip install torch torchvision
pip install git+https://github.com/facebookresearch/sam-3d-objects.git
```

Basic API Usage with Code Examples

Here’s a minimal example to load the model and process an image:

```python
from sam_3d_objects import load_sam_3d_objects_hf, SAM3DObjectsPredictor

# Load model from HuggingFace Hub
model, model_cfg = load_sam_3d_objects_hf("facebook/sam-3d-objects-vith")

# Create predictor
predictor = SAM3DObjectsPredictor(
    sam_3d_objects_model=model,
    model_cfg=model_cfg,
    device="cuda"  # Use "cpu" if no GPU available
)

# Process single image
outputs = predictor.process_one_image(
    image_path="path/to/your/photo.jpg",
    output_dir="./3d_models/",
    generate_texture=True
)

# outputs contains: mesh_path, texture_path, metadata
print(f"3D model saved to: {outputs.mesh_path}")
```

The process_one_image method handles everything: segmentation, depth estimation, mesh generation, and texture mapping. Set generate_texture=False for faster processing when you only need geometry.

Common Errors & Troubleshooting

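- CUDA Out of Memory: Reduce image resolution first. Gradient checkpointing only saves memory while gradients are being computed (for example during fine-tuning), so it is unlikely to help plain inference, but it can be enabled with:

```python
model_cfg.gradient_checkpointing = True
```

- Poor Reconstruction Quality: Ensure good lighting and avoid reflective surfaces. SAM 3D struggles with mirrors and glass.

- Slow Inference: Enable mixed precision (requires `import torch`):

```python
predictor = SAM3DObjectsPredictor(..., dtype=torch.float16)
```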
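For the out-of-memory case, the cheapest fix is usually shrinking the input before inference. Here is a minimal sketch that computes a target size with the longer side capped at 512 pixels while preserving aspect ratio; `fit_within` is our own helper, not part of any SAM 3D package.

```python
def fit_within(width: int, height: int, max_side: int = 512) -> tuple:
    """Return (width, height) scaled so the longer side is at most max_side."""
    longest = max(width, height)
    if longest <= max_side:
        # Already small enough; never upscale.
        return width, height
    # Multiply before dividing to keep the arithmetic exact where possible.
    new_width = max(1, round(width * max_side / longest))
    new_height = max(1, round(height * max_side / longest))
    return new_width, new_height


# A typical 12-megapixel phone photo.
original = (4032, 3024)
target = fit_within(*original)
pixel_ratio = (original[0] * original[1]) / (target[0] * target[1])
print(f"{original} -> {target}, {pixel_ratio:.0f}x fewer pixels")
```

The resulting tuple can be passed to an image library’s resize call (for instance Pillow’s `Image.resize`) before handing the file to the predictor.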

Working with SAM 3D Body

Human Pose Estimation Walkthrough

SAM 3D Body requires similar setup but uses different prompts:

```python
from sam_3d_body import load_sam_3d_body_hf, SAM3DBodyEstimator

# Load model
model, model_cfg = load_sam_3d_body_hf("facebook/sam-3d-body-vith")

# Create estimator
estimator = SAM3DBodyEstimator(
    sam_3d_body_model=model,
    model_cfg=model_cfg,
    device="cuda"
)

# Process image with optional 2D keypoint prompts
outputs = estimator.process_one_image(
    image_path="group_photo.jpg",
    keypoints_2d=None,  # Or provide custom keypoints
    output_format="mhr"  # Options: "mhr", "fbx", "gltf"
)

# Access mesh and pose data
mesh = outputs.mesh
pose_params = outputs.pose_parameters
```

Momentum Human Rig (MHR) Format Deep Dive

MHR separates skeletal structure (joints, bones) from surface deformation (muscle, fat, clothing). This decoupling lets you retarget animations while preserving body shape accuracy, a huge advantage over SMPL’s entangled representation. The format includes 52 body joints, hand articulation, and foot pose, making it production-ready for character animation.
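To make the decoupling concrete, here is a toy sketch of what storing pose and shape separately buys you. The `Rig` class and its fields are invented for illustration and do not reflect the real MHR schema; the point is only that a pose can be copied between bodies without touching proportions or surface shape.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Rig:
    """Toy rig with pose and shape stored separately (not the real MHR layout)."""
    joint_rotations: tuple  # pose: one (x, y, z) Euler triple per joint, in degrees
    bone_lengths: tuple     # skeleton proportions, in meters
    shape_coeffs: tuple     # surface / soft-tissue shape parameters


def retarget_pose(source: Rig, target: Rig) -> Rig:
    """Copy the pose from source onto target, leaving target's body unchanged."""
    if len(source.joint_rotations) != len(target.joint_rotations):
        raise ValueError("rigs must have the same number of joints")
    return replace(target, joint_rotations=source.joint_rotations)


# Two hypothetical two-joint characters with different bodies and poses.
dancer = Rig(((0, 0, 90), (45, 0, 0)), (0.30, 0.28), (0.1, -0.4))
athlete = Rig(((0, 0, 0), (0, 0, 0)), (0.36, 0.33), (0.8, 0.2))

posed_athlete = retarget_pose(dancer, athlete)
print(posed_athlete)
```

In an entangled representation, the same operation would require re-fitting the body, since pose and shape parameters influence each other.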

Convert MHR to FBX for Unity/Unreal:

```bash
python -m sam_3d_body.convert --input person.mhr --output person.fbx --rig-type "unity"
```

Real-World Applications & Use Cases

For 3D Artists & Game Developers

Indie studios are already using SAM 3D to rapidly prototype environments. Instead of manually modeling background assets, artists snap photos on their phones and generate placeholder geometry in minutes. The models serve as base meshes that artists refine, cutting asset creation time by 60-70%.

One developer I spoke with used SAM 3D Objects to recreate their entire office as a game level during a 48-hour hackathon. The generated meshes required cleanup, but the spatial layout and proportions were accurate enough for gameplay testing immediately.

For E-commerce & AR Commerce

Furniture retailers are integrating SAM 3D to let customers generate 3D models of their actual rooms from a single photo. This enables true-to-scale AR placement without manual room scanning. Early pilots show 40% higher conversion rates compared to traditional AR experiences.

The key advantage: SAM 3D understands scene layout. It doesn’t just reconstruct objects; it reconstructs the space between them, letting AR apps understand where walls, floors, and obstacles are automatically.

For Robotics & Computer Vision Researchers

Robotics labs use SAM 3D Body to enable human-robot interaction. A robot equipped with a single camera can now understand human pose and intent in 3D space, crucial for collaborative tasks. The model runs fast enough for real-time feedback loops on NVIDIA Jetson AGX Orin platforms.

Researchers at MIT’s CSAIL are using SAM 3D Objects for warehouse automation, where robots need to grasp unfamiliar objects. The 3D reconstruction provides grasping point estimation far more reliably than 2D bounding boxes.

For Content Creators & Social Media

TikTok creators are using SAM 3D Body to generate 3D avatars from selfies for VTubing and virtual try-ons. The process takes under a minute, requires no specialized scanning booth, and produces animation-ready characters. Combined with Meta’s Codec Avatars, this could democratize personalized 3D content creation.

SAM 3D vs. Alternative Solutions

Comparison Table: SAM 3D vs. Photogrammetry

| Feature | SAM 3D Objects | Traditional Photogrammetry | Winner |

|---|---|---|---|

| Input Requirements | Single photo | 20-200 photos from multiple angles | SAM 3D |

| Processing Time | 0.05-0.2 sec | 5-60 minutes | SAM 3D |

| Geometry Accuracy | Good (smoothed) | Excellent (high detail) | Photogrammetry |

| Texture Quality | Very Good | Excellent | Photogrammetry |

| Ease of Use | One-click | Complex setup & alignment | SAM 3D |

| Hardware Cost | $500+ GPU | $2000+ camera rig | SAM 3D |

| Occlusion Handling | Strong inference | Fails without coverage | SAM 3D |

| Scalability | 1000+ objects/hour | 10-20 objects/hour | SAM 3D |

SAM 3D vs. NeRF-Based Methods

NeRFs (Neural Radiance Fields) produce photorealistic views but require dozens of images and lengthy training. SAM 3D trades some quality for incredible speed and single-image capability. For applications needing immediate 3D assets, SAM 3D wins. For cinematic-quality virtual production, NeRFs remain superior.

SAM 3D vs. Traditional 3D Scanning

Professional 3D scanners (Artec, EinScan) capture micron-level detail but cost $5,000-$100,000 and require controlled lighting. SAM 3D democratizes 3D capture: any smartphone photo becomes a starting point. The gap is narrowing: SAM 3D’s 5mm accuracy is sufficient for most creative and commercial applications outside of industrial metrology.

Limitations & Considerations

Current Model Constraints

Resolution Limits: SAM 3D currently outputs meshes at moderate polygon counts. Fine details like fabric texture wrinkles or small mechanical parts get smoothed out. Meta acknowledges this limitation and plans higher-resolution variants for 2026.

Material Properties: The models don’t infer physical material properties (roughness, metallic, transparency). You get texture maps but not PBR material definitions, requiring manual assignment in 3D software.

Scale Ambiguity: From a single image without known references, absolute scale is ambiguous. SAM 3D makes reasonable assumptions but may be off by 10-20% without calibration. Including a reference object (coin, ruler) in the frame improves accuracy.
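The reference-object trick boils down to one ratio: known real-world size divided by the size measured in the reconstructed mesh. A minimal sketch, assuming you can measure the reference object’s length in mesh units (the measured value and sample vertices below are made up):

```python
def scale_factor(known_size_m: float, measured_size_units: float) -> float:
    """Ratio that converts mesh units into meters using a reference object."""
    if measured_size_units <= 0:
        raise ValueError("measured size must be positive")
    return known_size_m / measured_size_units


def rescale(vertices: list, factor: float) -> list:
    """Uniformly scale a list of (x, y, z) vertices."""
    return [(x * factor, y * factor, z * factor) for x, y, z in vertices]


# An A4 sheet is 0.297 m on its long side; suppose it spans 0.33 mesh units.
factor = scale_factor(known_size_m=0.297, measured_size_units=0.33)

# Made-up vertices standing in for a reconstructed mesh.
mesh_vertices = [(0.0, 0.0, 0.0), (1.0, 0.5, 0.25)]
calibrated = rescale(mesh_vertices, factor)
print(f"scale factor: {factor:.3f}")
print(calibrated)
```

Uniform scaling like this corrects overall size but not any distortion in proportions, so it helps most when the reference object sits near the subject.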

Privacy & Ethical Implications

SAM 3D Body raises clear privacy concerns. The model can reconstruct 3D body shape from any photo, potentially enabling body measurement extraction without consent. Meta has built in safeguards: the model refuses to process images where faces are detectable unless explicitly authorized, and the API requires user agreement terms.

For public figures, this technology could enable unsolicited 3D avatar creation. The digital identity implications are significant: imagine someone creating a 3D model of you from a paparazzi photo for VR experiences. Industry standards around 3D likeness rights are urgently needed.

When NOT to Use SAM 3D

- Precision engineering: Need micron accuracy? Use structured light scanners

- Reflective/translucent objects: Glass, mirrors, and chrome confuse the depth estimation

- Low-light conditions: Requires good visual cues for depth inference

- Moving subjects: Single-image method can’t handle motion blur

- Legal-sensitive applications: Body scanning for medical/legal use requires certified equipment

Future Roadmap & Industry Impact

Meta’s Planned Enhancements

Meta’s research team outlined three priorities: higher fidelity (2-4× resolution increase), real-time performance on edge devices, and scene-level understanding that models interactions between people and objects. They’re also exploring video-to-3D, where temporal consistency across frames could yield even more accurate reconstructions.

The next breakthrough will likely be material inference: predicting not just geometry but physical material properties from visual appearance. This would enable true-to-life rendering without manual material assignment.

Market Projections for AI 3D Reconstruction

Industry analysts project the 3D reconstruction market will reach $2.9-4.04 billion by 2033, with AI-based tools driving 60% of growth. SAM 3D’s open-source approach could accelerate adoption, similar to how Stable Diffusion disrupted generative art.

For content creators, this means near-zero cost for 3D asset generation. Game studios might shift from outsourcing asset creation to in-house AI pipelines. E-commerce could see 3D product visualization become standard, not premium.

How This Changes the 3D Content Creation Pipeline

The traditional pipeline (concept → modeling → texturing → rigging → animation) gets compressed. SAM 3D handles much of the modeling and texturing work from a reference photo. Artists shift from modelers to directors, curating and refining AI output rather than building from scratch.

This democratization will flood the market with 3D content, driving value toward creative direction and storytelling rather than technical execution. The skillset evolves: knowing how to prompt, refine, and integrate AI-generated assets becomes as important as manual modeling once was.

Summary & Actionable Checklist

What SAM 3D Does: Turns single photos into 3D models of objects (SAM 3D Objects) and humans (SAM 3D Body), with geometry, texture, and pose.

Key Specs: 30-50ms inference on H200 GPUs, 5mm body accuracy, .obj/.mhr output formats, open-source on Hugging Face.

Best For: Rapid prototyping, AR commerce, robotics perception, content creation. Not for precision engineering.

Try It Now:

- Install: `pip install git+https://github.com/facebookresearch/sam-3d-objects.git`

- Load model: `load_sam_3d_objects_hf("facebook/sam-3d-objects-vith")`

- Process image: `predictor.process_one_image("photo.jpg")`

- Import into Blender/Unity and refine

Pro Tip: Start with well-lit photos of matte objects. Avoid glass, mirrors, and motion blur for best results.

Frequently Asked Questions (FAQs)

Can SAM 3D process video frames for temporal consistency?

Currently SAM 3D works on static images only. Meta is researching video-to-3D but hasn’t announced release dates. For now, process frames individually and use a structure-from-motion tool like COLMAP to estimate camera poses and align the per-frame results.

What’s the output file size for a typical SAM 3D model?

A medium-complexity object generates a 2-5MB .obj file with 10-50MB of texture maps. Human meshes are smaller at 1-3MB since they use parametric MHR representation.

How does SAM 3D handle copyright for reconstructed objects?

The model doesn’t enforce copyright, but output derived from copyrighted photos may infringe. For commercial use, photograph original objects or use CC0-licensed reference images. Meta’s license permits commercial use of the model itself.

Can I fine-tune SAM 3D on my own dataset?

Yes, Meta released training code on GitHub. However, fine-tuning requires significant GPU resources (8× A100s for a week) and carefully curated 3D datasets. Most users should start with the pre-trained models.

Will SAM 3D run on my laptop’s integrated GPU?

Technically yes, but expect 5-10 second processing times per image. For interactive use, a discrete GPU with 16GB+ VRAM is strongly recommended. CPU-only inference is possible but not practical.

How accurate is SAM 3D Body for fitness/body measurement apps?

SAM 3D Body achieves ~5mm accuracy on body shape estimation, sufficient for general fitness tracking but not medical-grade measurements. For clinical applications, use FDA-certified body scanning systems. The model’s accuracy decreases with baggy clothing.

Can SAM 3D reconstruct transparent or reflective objects?

No, this is a current limitation. Glass, mirrors, water surfaces, and chrome confuse the depth estimation. Use polarizing filters when photographing these materials, or manually model them in post-processing.

Is there a cloud API for SAM 3D or only self-hosted?

Currently only self-hosted via Hugging Face or GitHub. Meta hasn’t announced a cloud API, but given their pattern with SAM 2, expect a Replicate.com integration within months. For now, deploy on your own GPU instances.

Source: Meta