Summary: The NVIDIA RTX PRO 5000 72GB Blackwell is now generally available, bringing professional-grade AI capabilities to desktop workstations. Built on the Blackwell architecture with 5th-generation Tensor Cores and 72GB of GDDR7 memory, this GPU delivers 2,142 TOPS of AI performance, enough to run large language models and agentic AI workflows locally. With 50% more memory than the 48GB variant, it bridges the gap between entry-level professional GPUs and the flagship RTX PRO 6000, offering 3.5x faster image generation and 2x faster text generation compared to previous-generation hardware. Priced around $5,000 and available through partners like Ingram Micro and Leadtek, it targets AI developers, data scientists, and creative professionals who need data-center-class performance without cloud dependencies.

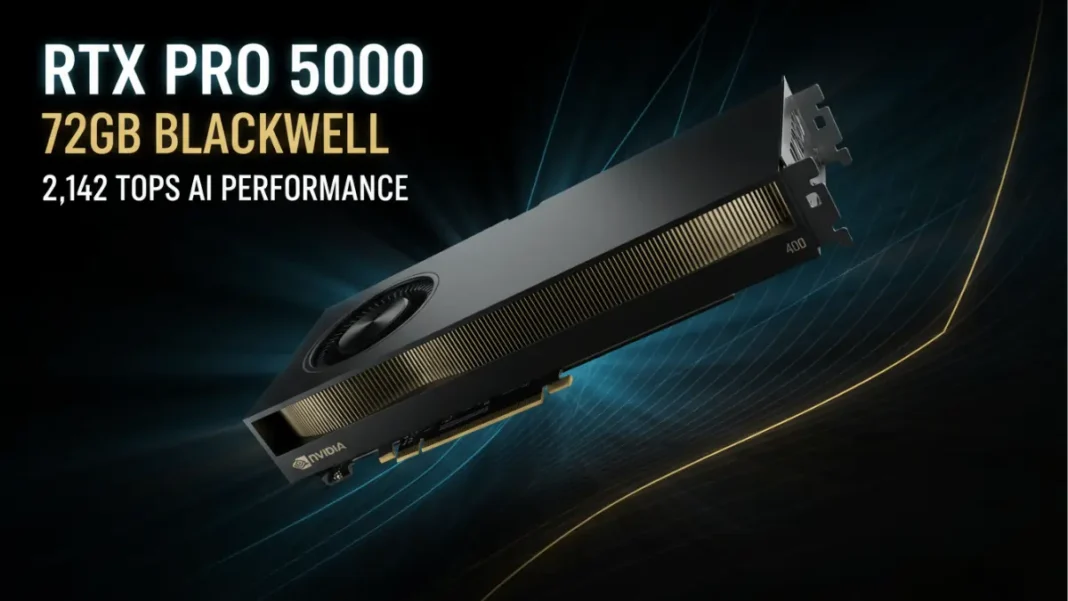

NVIDIA has officially launched the RTX PRO 5000 72GB Blackwell GPU for general availability, delivering professional-grade AI performance designed specifically for memory-intensive agentic AI workflows directly on desktop workstations. This expanded memory configuration addresses the growing demands of local LLM development, multimodal AI pipelines, and complex generative design, all without relying on cloud infrastructure.

What Is the RTX PRO 5000 72GB Blackwell?

The RTX PRO 5000 72GB is NVIDIA’s newest professional desktop GPU built on the Blackwell architecture, featuring 72GB of GDDR7 memory, a 50% increase over the existing 48GB model. It sits between the RTX PRO 5000 48GB and the flagship RTX PRO 6000 in NVIDIA’s professional GPU lineup, targeting developers and engineers who need substantial memory capacity but don’t require the full 96GB offered by the top-tier model. The GPU utilizes the same GB202 silicon found in the GeForce RTX 5090 and RTX PRO 6000, with 110 streaming multiprocessors enabled out of the chip’s maximum 192 SMs.

Built specifically for the era of agentic AI, in which autonomous AI systems chain multiple tools, use retrieval-augmented generation, and process multimodal data simultaneously, the RTX PRO 5000 72GB addresses the memory bottleneck that has limited local AI development. Companies like InfinitForm and Versatile Media are already deploying these GPUs to accelerate generative design optimization and virtual production workflows.

Technical Specifications at a Glance

Memory Configuration Breakdown

The defining feature of the RTX PRO 5000 72GB is its memory subsystem. The GPU features 72GB of GDDR7 memory with ECC (error-correcting code) protection, providing 1,344 GB/sec of memory bandwidth. GDDR7 represents a significant generational leap over GDDR6 and GDDR6X, with memory vendors claiming 30-50% better power efficiency alongside higher data transfer rates. For AI developers working with large language models of 70 billion parameters or more, or 3D artists manipulating massive scene files, this expanded memory capacity reduces the need to partition datasets or offload processing to slower system RAM.

The 72GB configuration has the same memory bandwidth as the 48GB variant (both deliver 1,344 GB/sec), meaning the performance difference comes primarily from capacity rather than throughput. This makes the 72GB model ideal for scenarios where model size, context window length, or asset complexity exceeds 48GB, but where the workload doesn’t demand the 1,792 GB/sec bandwidth of the RTX PRO 6000.
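The bandwidth figure can be sanity-checked against the 384-bit memory interface listed in the specifications later in this article. The sketch below uses the standard relation between bus width, per-pin data rate, and peak bandwidth; the per-pin rate it prints is derived from the article's numbers and is not an officially quoted figure, and the function names are illustrative.

```python
# Peak bandwidth (GB/s) = bus width (bits) x per-pin data rate (Gbit/s) / 8 bits per byte.

def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s."""
    return bus_width_bits * data_rate_gbps / 8


def implied_data_rate_gbps(bandwidth_gb_s: float, bus_width_bits: int) -> float:
    """Per-pin data rate (Gbit/s) implied by a quoted bandwidth and bus width."""
    return bandwidth_gb_s * 8 / bus_width_bits


# Figures quoted in this article for the RTX PRO 5000 (48GB and 72GB).
BUS_WIDTH_BITS = 384
QUOTED_BANDWIDTH_GB_S = 1344

rate = implied_data_rate_gbps(QUOTED_BANDWIDTH_GB_S, BUS_WIDTH_BITS)
print(f"Implied per-pin data rate: {rate:.1f} Gbit/s")
print(f"Recomputed bandwidth: {peak_bandwidth_gb_s(BUS_WIDTH_BITS, rate):.0f} GB/s")
```

Because both variants share the same bus width and bandwidth, the extra 24GB changes how much data fits on the card, not how fast it moves.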

Blackwell Architecture Deep Dive

The RTX PRO 5000 72GB leverages NVIDIA’s Blackwell architecture, which introduces several key innovations for AI and graphics workloads. At its core are 5th-generation Tensor Cores that deliver up to 3x the performance of previous-generation hardware, with new support for FP4 and FP6 precision arithmetic alongside standard FP8, FP16, and FP32 formats. These ultra-low precision modes enable substantially higher inference throughput for LLMs and generative models that can tolerate reduced numerical precision, potentially doubling or tripling the number of tokens processed per second.
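One rough way to see why lower precision raises token throughput is a memory-bound estimate: during single-stream decoding, each generated token has to read roughly all of the model weights from VRAM once, so throughput is capped at bandwidth divided by weight size. The sketch below applies that simplification to a 70B-parameter model using the bandwidth quoted in this article. It ignores KV cache reads, compute limits, batching, and software overhead, so the results are upper bounds under stated assumptions, not benchmarks.

```python
def weight_bytes(params: float, bits_per_weight: float) -> float:
    """Bytes needed to store the weights at a given precision."""
    return params * bits_per_weight / 8


def decode_bound_tokens_per_sec(bandwidth_gb_s: float, params: float,
                                bits_per_weight: float) -> float:
    """Upper bound on single-stream decode speed, assuming every token
    streams all weights from VRAM exactly once (memory-bound case)."""
    return bandwidth_gb_s * 1e9 / weight_bytes(params, bits_per_weight)


BANDWIDTH_GB_S = 1344  # memory bandwidth quoted in this article
PARAMS = 70e9          # a 70B-parameter model, as discussed in this article

for bits in (8, 4):
    bound = decode_bound_tokens_per_sec(BANDWIDTH_GB_S, PARAMS, bits)
    print(f"{bits}-bit weights: at most ~{bound:.1f} tokens/s")
```

Halving the bits per weight halves the bytes read per token, which is where the "doubling" intuition comes from in this simplified model.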

The GPU also features 4th-generation RT Cores that deliver up to 2x the ray tracing performance of Ada Lovelace-based GPUs, accelerating path tracing in renderers like Arnold, V-Ray, and Blender by up to 4.7x. Three 9th-generation NVENC encoders and three 6th-generation NVDEC decoders handle video encoding and decoding, while DisplayPort 2.1 support enables driving displays at up to 8K 240Hz or 16K 60Hz. The entire package runs at a 300W TDP, matching the power envelope of the 48GB variant.

The GPU delivers 2,142 TOPS (tera operations per second) of AI performance, making it capable of running models like Llama 3 70B, Stable Diffusion XL, and other large-scale AI workloads entirely on-device. This performance level enables real-time inference for agentic AI systems that need to query multiple models, maintain large context windows, and process multimodal inputs simultaneously.

Why 72GB of GDDR7 Memory Matters

The Agentic AI Memory Challenge

Agentic AI represents a fundamental shift from simple chatbot interactions to autonomous systems that plan, use tools, and execute multi-step workflows. These systems often need to keep multiple AI models loaded simultaneously: perhaps a language model for planning, a code generation model for scripting, a vision model for analyzing images, and a retrieval system for searching knowledge bases. Each component consumes GPU memory, and as context windows expand to 128K tokens or beyond, memory requirements grow sharply.

Traditional generative AI workflows might load a single 13-billion-parameter model that occupies about 26GB of VRAM at 16-bit precision, or roughly 13GB when quantized to 8-bit. Agentic systems, by contrast, might need 40-60GB of active memory to maintain model weights, intermediate activations, KV caches for long contexts, and tool outputs. The RTX PRO 5000 72GB provides headroom for these complex pipelines while maintaining data privacy and low latency by processing everything locally rather than sending data to cloud APIs.
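A back-of-the-envelope budget shows how an agentic pipeline can land in that range. The sketch below adds up weights and a KV cache for a hypothetical three-model pipeline. The model sizes, precisions, context length, and transformer shape (80 layers, 8 KV heads, head dimension 128) are illustrative assumptions, not figures from NVIDIA, and activations and runtime overhead are left out.

```python
GB = 1e9  # decimal gigabytes, matching how VRAM sizes are quoted


def weights_gb(params: float, bits_per_weight: float) -> float:
    """VRAM for model weights alone."""
    return params * bits_per_weight / 8 / GB


def kv_cache_gb(layers: int, kv_heads: int, head_dim: int,
                tokens: int, bytes_per_elem: int = 2) -> float:
    """KV cache size: keys and values (factor 2) for every layer,
    KV head, head dimension, and cached token."""
    return 2 * layers * kv_heads * head_dim * tokens * bytes_per_elem / GB


# Hypothetical pipeline; every entry is an assumption for illustration.
budget = {
    "planner LLM weights (70B @ 4-bit)": weights_gb(70e9, 4),
    "planner KV cache (32K tokens, 16-bit)": kv_cache_gb(80, 8, 128, 32_768),
    "code model weights (7B @ 8-bit)": weights_gb(7e9, 8),
    "vision model weights (1B @ 16-bit)": weights_gb(1e9, 16),
}

total_gb = sum(budget.values())
for name, gb in budget.items():
    print(f"{name:40s} {gb:6.2f} GB")
print(f"{'total':40s} {total_gb:6.2f} GB")

for capacity_gb in (48, 72):
    verdict = "fits" if total_gb <= capacity_gb else "does not fit"
    print(f"{capacity_gb}GB card: {verdict}")
```

Under these assumptions the pipeline needs more than 48GB but fits within 72GB, before accounting for activations and framework overhead.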

Companies developing agentic AI systems cite memory capacity as the primary hardware constraint. The 72GB configuration allows developers to prototype systems that would otherwise require data center GPUs like the A100 or H100, reducing iteration time and infrastructure costs. For enterprises operating in regulated industries or handling sensitive data, this local processing capability is non-negotiable.

GDDR7 vs GDDR6: Performance Gains

GDDR7 represents the first major evolution in GPU memory technology since GDDR6 launched in 2018. Compared to GDDR6 and GDDR6X, GDDR7 offers higher gigatransfer speeds, improved power efficiency, and reduced latency through advanced signaling techniques. Samsung’s GDDR7 implementation delivers 30% better power efficiency, while Micron and SK Hynix claim up to 50% efficiency gains over GDDR6.

For AI workloads, this efficiency translates to sustained performance under thermal constraints. The RTX PRO 5000 72GB can maintain peak memory bandwidth for extended training or inference sessions without throttling, unlike older GDDR6-based cards that might reduce clock speeds to manage heat. The bandwidth improvement also accelerates data-intensive operations like loading model weights, shuffling training batches, and transferring generated outputs, all of which are critical for responsive agentic AI systems.

Real-World Performance Benchmarks

AI Workload Performance

NVIDIA’s internal benchmarks show the RTX PRO 5000 72GB delivering 3.5x the performance of prior-generation hardware for image generation tasks using Stable Diffusion and similar models. Text generation workloads, including LLM inference for models like Llama 2, Mistral, and GPT-style architectures, see 2x performance improvements over Ada Lovelace-based GPUs. These gains come from the combination of 5th-generation Tensor Cores with FP4/FP6 precision support and the expanded memory capacity that allows larger batch sizes.

InfinitForm, a generative design software company serving clients like Yamaha Motor and NASA, reports using the RTX PRO 5000 72GB to accelerate CUDA-based optimization workflows. The company’s software combines generative AI with engineering simulation to optimize product designs for performance and manufacturability, a process that requires simultaneously holding CAD models, simulation meshes, and neural network weights in memory.

Rendering and Creative Applications

Path tracing and real-time rendering see dramatic improvements with the RTX PRO 5000 72GB. Across industry-standard renderers including Arnold, Chaos V-Ray, Blender Cycles, D5 Render, and Redshift, the GPU delivers up to 4.7x faster render times compared to previous-generation professional GPUs. This acceleration comes from the 4th-generation RT Cores, which handle ray-triangle intersection tests and bounding volume hierarchy traversal more efficiently than earlier architectures.

Versatile Media, a global virtual production company, plans to deploy the RTX PRO 5000 72GB for film-grade real-time rendering. General Manager Eddy Shen notes that “memory capacity directly translates into creative freedom,” allowing artists to work with higher-resolution textures, more complex lighting setups, and larger scene files without performance degradation. The 72GB capacity enables loading entire production environments into VRAM, eliminating the stuttering and latency that occurs when assets must stream from system memory or storage.

Engineering and CAD Performance

Computer-aided design and engineering workflows see more than 2x graphics performance improvements with the RTX PRO 5000 72GB compared to previous-generation professional GPUs. Complex assemblies with thousands of parts, detailed finite element analysis meshes, and real-time physics simulations all benefit from the expanded memory and improved compute throughput. The GPU’s support for technologies like RTX Mega Geometry enables rendering up to 100x more ray-traced triangles, allowing engineers to visualize designs at unprecedented fidelity.

RTX PRO 5000 72GB vs 48GB vs RTX PRO 6000

| Specification | RTX PRO 5000 48GB | RTX PRO 5000 72GB | RTX PRO 6000 |
|---|---|---|---|
| Architecture | Blackwell (GB202) | Blackwell (GB202) | Blackwell (GB202) |
| CUDA Cores | 14,080 | 14,080 | 24,064 |
| Memory Capacity | 48GB GDDR7 ECC | 72GB GDDR7 ECC | 96GB GDDR7 ECC |
| Memory Bandwidth | 1,344 GB/sec | 1,344 GB/sec | 1,792 GB/sec |
| Tensor Cores | 5th Gen | 5th Gen | 5th Gen |
| RT Cores | 4th Gen | 4th Gen | 4th Gen |
| TDP | 300W | 300W | 600W |
| Est. Price | $4,250-$4,600 | ~$5,000 | $8,300+ |

When to Choose the 72GB Variant

The 72GB configuration makes sense when your workloads regularly exceed 48GB of VRAM but don’t justify the cost jump to the RTX PRO 6000. Specific scenarios include running 70B parameter language models with extended context windows, working with 8K or higher resolution video timelines with multiple effects layers, developing multi-agent AI systems that load several models simultaneously, and manipulating CAD assemblies or 3D scenes that approach or exceed 48GB when fully loaded.
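The selection logic above can be written as a small helper that returns the smallest card able to hold a workload. This is a minimal sketch: the capacities come from the comparison table in this article, while the 10% headroom default and the function name are assumptions chosen for illustration.

```python
# VRAM capacities (GB) taken from the comparison table in this article.
CAPACITIES_GB = {
    "RTX PRO 5000 48GB": 48,
    "RTX PRO 5000 72GB": 72,
    "RTX PRO 6000": 96,
}


def smallest_fit(required_gb: float, headroom: float = 0.10):
    """Return the smallest card whose VRAM covers required_gb plus a
    safety margin (headroom is an assumed fraction), or None if none fits."""
    needed_gb = required_gb * (1 + headroom)
    for name, capacity_gb in sorted(CAPACITIES_GB.items(), key=lambda kv: kv[1]):
        if capacity_gb >= needed_gb:
            return name
    return None


for workload_gb in (40, 55, 70, 90):
    print(f"{workload_gb}GB workload -> {smallest_fit(workload_gb)}")
```

A workload that peaks near 55GB lands on the 72GB variant, while one near 70GB is pushed to the RTX PRO 6000 once headroom is included.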

For development teams prototyping agentic AI applications, the 72GB variant provides a comfortable safety margin. Early-stage AI systems often grow more complex than initially planned, adding tools, expanding context requirements, or integrating additional models. Starting with 72GB prevents needing to upgrade hardware mid-project when your 48GB GPU becomes a bottleneck.

Price-to-Performance Analysis

At an estimated $5,000, the RTX PRO 5000 72GB offers 50% more memory than the 48GB variant for approximately 9-18% more cost, based on the 48GB model’s $4,250-$4,600 price range. This represents strong value for memory-constrained workflows, effectively pricing the extra 24GB at roughly $400-$750. By comparison, jumping from the RTX PRO 5000 72GB to the RTX PRO 6000 costs an additional $3,300 for 24GB more memory and higher bandwidth, a substantially worse memory-per-dollar ratio.
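The price arithmetic can be checked directly from the estimates quoted in this article. The sketch below computes the dollar premium, the percentage premium, and the cost per additional gigabyte for each upgrade step; all prices are the article's estimates, not confirmed list prices.

```python
# Estimated prices (USD) quoted in this article.
PRICE_48GB_RANGE = (4250, 4600)
PRICE_72GB = 5000
PRICE_PRO_6000 = 8300


def premium(base_price: float, upgrade_price: float, extra_gb: int):
    """Return (extra dollars, percent increase, dollars per extra GB)."""
    delta = upgrade_price - base_price
    return delta, 100 * delta / base_price, delta / extra_gb


for base in PRICE_48GB_RANGE:
    delta, pct, per_gb = premium(base, PRICE_72GB, 72 - 48)
    print(f"48GB at ${base} -> 72GB: +${delta} ({pct:.1f}%), ${per_gb:.2f} per extra GB")

delta, pct, per_gb = premium(PRICE_72GB, PRICE_PRO_6000, 96 - 72)
print(f"72GB -> RTX PRO 6000: +${delta} ({pct:.1f}%), ${per_gb:.2f} per extra GB")
```

The RTX PRO 6000 step also buys more cores and bandwidth, so the per-gigabyte figure captures only part of what that premium pays for.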

The RTX PRO 6000 justifies its premium through roughly 70% more CUDA cores, 33% higher memory bandwidth, and the ability to handle truly massive workloads that exceed 72GB. For most AI development and creative workflows, however, the RTX PRO 5000 72GB hits the sweet spot of capacity, performance, and cost.

Who Should Buy the RTX PRO 5000 72GB?

Ideal Use Cases

AI developers building agentic systems, training custom models, or fine-tuning open-source LLMs locally will benefit most from the RTX PRO 5000 72GB. Data scientists prototyping machine learning pipelines with large datasets, creative professionals working in virtual production or high-resolution 3D rendering, design engineers running generative design optimization, and researchers developing multimodal AI applications all represent ideal users.

Organizations prioritizing data privacy, regulatory compliance, or reduced cloud costs should strongly consider the RTX PRO 5000 72GB. Running AI workloads locally eliminates data transmission to third-party APIs, reduces ongoing inference costs, and provides consistent low-latency performance regardless of network conditions.

System Requirements

The RTX PRO 5000 72GB requires a PCIe 5.0 x16 slot for optimal performance, though it maintains backward compatibility with PCIe 4.0 and 3.0 slots at reduced bandwidth. Power requirements include a 300W TDP with recommended 850W or higher power supply units featuring appropriate PCIe power connectors. System builders should ensure adequate case cooling, as sustained AI workloads generate consistent thermal loads.

Memory capacity on the host system should ideally match or exceed GPU VRAM: at least 72GB of system RAM, with 128GB recommended for complex workflows that involve preprocessing data before GPU operations. Storage performance also matters for AI development, with NVMe SSDs recommended for model loading and dataset access.

Availability, Pricing, and Where to Buy

The NVIDIA RTX PRO 5000 72GB is now generally available through authorized partners including Ingram Micro, Leadtek, Unisplendour, and xFusion. Broader availability through global system builders is expected in early 2026. Estimated pricing sits around $5,000 based on the existing 48GB model’s $4,250-$4,600 retail price.

Buyers can purchase the GPU as a standalone component or as part of pre-configured AI workstations from system integrators. NVIDIA’s Marketplace and authorized reseller networks provide additional purchasing channels. Enterprise buyers should contact NVIDIA RTX PRO partners directly for volume pricing and integration support.

RTX PRO 5000 72GB vs Competitors

| Feature | RTX PRO 5000 72GB | RTX 6000 Ada | RTX PRO 6000 |
|---|---|---|---|
| Release Date | Oct 2025 | Oct 2023 | Q1 2025 |
| Architecture | Blackwell | Ada Lovelace | Blackwell |
| Memory | 72GB GDDR7 | 48GB GDDR6 | 96GB GDDR7 |
| Memory Bandwidth | 1,344 GB/sec | 960 GB/sec | 1,792 GB/sec |
| AI Performance | 2,142 TOPS | N/A | ~4,000 TOPS |
| Tensor Cores | 5th Gen | 4th Gen | 5th Gen |
| TDP | 300W | 300W | 600W |
| FP4 Support | Yes | No | Yes |
| Est. Price | ~$5,000 | $6,800 | $8,300+ |

Pros

- 50% more memory than the 48GB variant at a reasonable cost premium

- 2,142 TOPS of AI performance enables local LLM deployment

- GDDR7 memory provides 30-50% better efficiency vs GDDR6

- Same 300W TDP as the 48GB model, so no additional power requirements

- FP4/FP6 precision support can double inference throughput for compatible models

- 4th-gen RT Cores deliver up to 4.7x faster rendering

- DisplayPort 2.1 supports 8K 240Hz and 16K 60Hz displays

- ECC memory provides error correction for mission-critical workloads

Cons

- Same memory bandwidth as the 48GB model (1,344 GB/sec), so the gain is capacity only

- Limited to 110 SMs (14,080 CUDA cores) vs 192 SMs in fully-enabled GB202

- Estimated $5,000 price point may stretch smaller team budgets

- Broad availability through major system builders not expected until early 2026

- Lower bandwidth than RTX PRO 6000 (1,344 vs 1,792 GB/sec)

- Requires robust cooling for sustained AI workloads

Complete Specifications

GPU Architecture: NVIDIA Blackwell (GB202 silicon)

CUDA Cores: 14,080 (110 Streaming Multiprocessors)

Tensor Cores: 5th Generation with FP4, FP6, FP8, FP16, FP32 support

RT Cores: 4th Generation

AI Performance: 2,142 TOPS

Theoretical FP32 Performance: 65.6 TFLOPS

Memory Subsystem:

- Capacity: 72GB GDDR7 with ECC

- Memory Interface: 384-bit

- Memory Bandwidth: 1,344 GB/sec

- L2 Cache: 96 MB

Video Engines:

- Encoders: 3x 9th Generation NVENC

- Decoders: 3x 6th Generation NVDEC

- Supported Codecs: H.264, H.265 (HEVC), AV1

Display Support:

- DisplayPort: 2.1 (up to 8K @ 240Hz, 16K @ 60Hz)

- Maximum Displays: 4 simultaneous

- HDR Support: Yes, with dynamic metadata

Power and Cooling:

- TDP: 300W

- Recommended PSU: 850W or higher

- Power Connectors: PCIe 5.0 (specific configuration TBD)

- Cooling: Active blower-style (reference design)

Physical Specifications:

- Form Factor: Dual-slot

- Interface: PCIe 5.0 x16 (backward compatible)

- Length: ~10.5 inches (267mm, estimate based on RTX PRO series)

Software Support:

- CUDA: 12.x and later

- NVIDIA Studio Drivers: Yes

- RTX Accelerated Applications: 110+ (Blender, DaVinci Resolve, Adobe suite, etc.)

- AI Frameworks: TensorFlow, PyTorch, JAX, ONNX Runtime

Warranty and Support:

- Warranty: 3 years (standard for NVIDIA RTX PRO series)

- Enterprise Support: Available through NVIDIA partners

Frequently Asked Questions (FAQs)

What is the main difference between RTX PRO 5000 48GB and 72GB?

The RTX PRO 5000 72GB offers 50% more GDDR7 memory (72GB vs 48GB) while maintaining identical core counts, memory bandwidth, and power consumption. Both variants feature 14,080 CUDA cores, 1,344 GB/sec bandwidth, and 300W TDP. The 72GB model is ideal for workloads that exceed 48GB VRAM requirements, such as large language models, complex 3D scenes, or multi-model agentic AI pipelines.

Can the RTX PRO 5000 72GB run ChatGPT-sized models locally?

Only with caveats. A 175B-parameter model (the published size of GPT-3, often used as a stand-in for ChatGPT-class models) needs roughly 88GB for weights alone at 4-bit precision, so it fits in 72GB only with sub-4-bit quantization or partial offloading to system RAM. 70B parameter models like Llama 3 70B run far more comfortably: at 4-bit precision the weights take about 35GB, leaving headroom for KV caches for extended conversations and the intermediate activations required for inference.
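A weights-only estimate makes the sizing concrete. The sketch below computes the weight footprint of 70B and 175B parameter models at several bit widths and compares it with 72GB. It deliberately ignores the KV cache, activations, and quantization metadata, so a "fits" verdict here is a necessary condition rather than a guarantee.

```python
VRAM_GB = 72  # capacity of the RTX PRO 5000 72GB


def weights_gb(params_billion: float, bits_per_weight: float) -> float:
    """Weights-only footprint in GB: billions of parameters times bytes per weight."""
    return params_billion * bits_per_weight / 8


for params_billion in (70, 175):
    for bits in (16, 8, 4, 3):
        size_gb = weights_gb(params_billion, bits)
        verdict = "fits" if size_gb < VRAM_GB else "does not fit"
        print(f"{params_billion}B @ {bits}-bit: {size_gb:7.2f} GB -> {verdict}")
```

In this estimate a 70B model at 8-bit leaves only about 2GB of the 72GB free, which is why 4-bit quantization is the more practical choice once context is added.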

Is GDDR7 memory significantly better than GDDR6 for AI workloads?

Yes. GDDR7 provides 30-50% better power efficiency, higher transfer speeds, and reduced latency compared to GDDR6. For AI inference, this means sustained performance without thermal throttling, faster model loading, and improved throughput for memory-intensive operations like attention mechanisms in transformers.

What power supply do I need for the RTX PRO 5000 72GB?

NVIDIA recommends an 850W or higher power supply with appropriate PCIe power connectors. The GPU itself is rated at 300W TDP, but accounting for CPU, storage, and system overhead, an 850W PSU provides adequate headroom. High-performance workstations with multiple drives or overclocked CPUs may benefit from 1000W supplies.

How does RTX PRO 5000 72GB compare to RTX 6000 Ada?

The RTX PRO 5000 72GB offers 50% more memory (72GB vs 48GB) and 40% higher memory bandwidth (1,344 vs 960 GB/sec) compared to RTX 6000 Ada. It also features newer 5th-generation Tensor Cores with FP4 precision support, delivering superior AI inference performance. The RTX 6000 Ada has more CUDA cores (18,176 vs 14,080) and slightly higher boost clocks, potentially benefiting graphics-heavy workloads.

When will RTX PRO 5000 72GB be widely available?

The GPU is now available through partners including Ingram Micro, Leadtek, Unisplendour, and xFusion. Broader availability through major system builders and resellers is expected in early 2026. Buyers can check NVIDIA’s Marketplace and authorized partner networks for current stock.

Can I use RTX PRO 5000 72GB for gaming?

While technically capable, the RTX PRO 5000 72GB is optimized for professional workloads and costs significantly more than consumer GeForce cards. Its 4th-gen RT Cores and Blackwell architecture deliver excellent gaming performance, but the $5,000 price point makes it impractical unless you also need the 72GB memory for AI development or content creation.

Does RTX PRO 5000 72GB support multi-GPU configurations?

Yes, over PCIe. Multiple RTX PRO 5000 72GB cards can be installed in one workstation, and AI frameworks and renderers can split training, inference, and rendering workloads across them. NVLink is a feature of NVIDIA’s data center Blackwell products; NVIDIA has not disclosed an NVLink connector for the RTX PRO 5000 series, so GPU-to-GPU traffic should be assumed to travel over the PCIe 5.0 bus.

Featured Snippet Boxes

What is the RTX PRO 5000 72GB Blackwell?

The NVIDIA RTX PRO 5000 72GB Blackwell is a professional desktop GPU featuring 72GB of GDDR7 memory and 2,142 TOPS of AI performance. Built on the Blackwell architecture with 5th-generation Tensor Cores, it’s designed for agentic AI development, local LLM deployment, and memory-intensive creative workflows.

How much does RTX PRO 5000 72GB cost?

The RTX PRO 5000 72GB is estimated to cost around $5,000, positioned between the 48GB variant ($4,250-$4,600) and the flagship RTX PRO 6000 ($8,300+). It’s now available through partners like Ingram Micro and Leadtek, with broader availability expected in early 2026.

RTX PRO 5000 72GB vs 48GB: Key difference

The primary difference is memory capacity: 72GB vs 48GB of GDDR7. Both variants share identical CUDA cores (14,080), memory bandwidth (1,344 GB/sec), and 300W TDP. The 72GB model provides 50% more memory for approximately 9-18% higher cost (about $5,000 vs $4,250-$4,600), ideal for large language models and complex 3D scenes.

What is agentic AI and why does it need 72GB?

Agentic AI refers to autonomous systems that plan, use tools, and execute multi-step workflows independently. These systems load multiple AI models simultaneously (for language, vision, code generation, and retrieval) while maintaining large context windows. This can require 40-60GB of active GPU memory, more than a 48GB card can reliably provide, which is where the 72GB capacity helps.

GDDR7 vs GDDR6: What’s the performance gain?

GDDR7 delivers 30-50% better power efficiency compared to GDDR6, along with higher transfer speeds and reduced latency. This translates to sustained performance under thermal constraints, faster model loading, and improved throughput for AI inference and rendering workloads.

Can RTX PRO 5000 72GB run 70B parameter models?

Yes, with quantization. At 16-bit precision a 70-billion parameter model needs about 140GB for weights alone, so it does not fit. At 8-bit precision the weights take about 70GB, leaving almost no room for context, while at 4-bit precision they take about 35GB, leaving room for extended context windows and the intermediate activations required for agentic AI workflows.