Quick Brief

- Cursor AI introduced two usage pools: Auto + Composer gets significantly higher limits than API usage

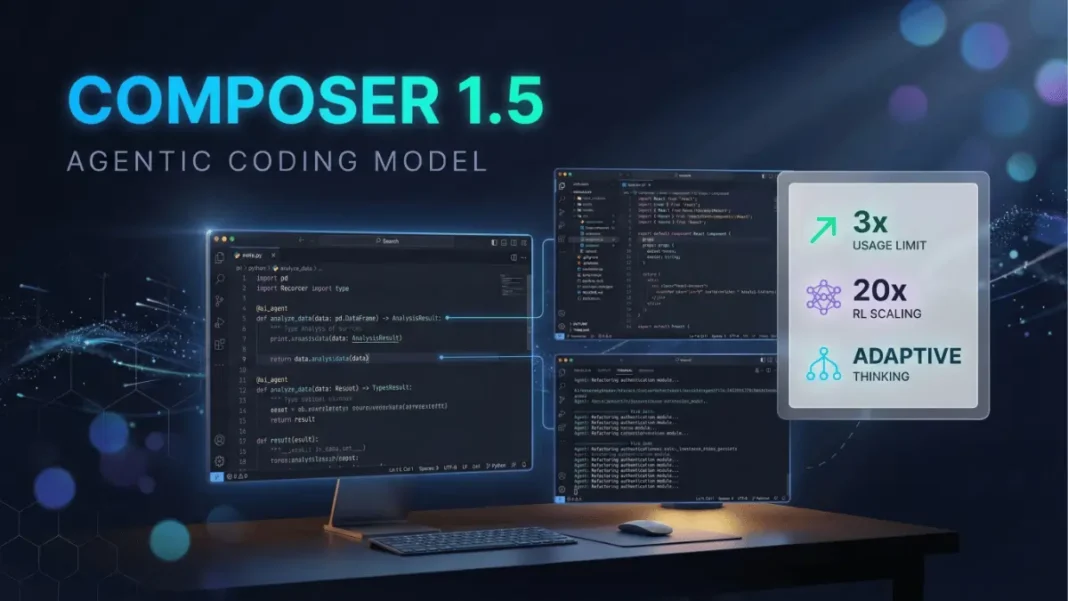

- Composer 1.5 includes 3x the usage of Composer 1, temporarily boosted to 6x through February 16, 2026

- The new model scales reinforcement learning 20x further than Composer 1 and adds adaptive thinking that adjusts to task difficulty

- Developers are shifting from autocomplete to agentic coding that modifies entire codebases autonomously

Cursor AI has fundamentally restructured its usage model to support a seismic shift in developer behavior. The company announced increased limits for Auto and Composer 1.5 across all individual plans on February 11, 2026, responding to “a major shift toward coding with agents” over recent months. This isn’t incremental: developers are now asking AI to execute ambitious, multi-file changes across entire codebases rather than completing single lines.

The announcement centers on two critical changes: separate usage pools for different AI operations, and the launch of Composer 1.5, Cursor’s proprietary agentic coding model announced February 8, 2026. For developers managing resource-intensive projects, understanding these distinctions determines whether daily workflows remain affordable or hit frustrating paywalls mid-sprint.

Two Usage Pools Replace Single Credit System

Cursor has split usage tracking into two pools with dramatically different allowances. The Auto + Composer pool includes significantly more usage when Auto (Cursor’s automatic model selection) or Composer 1.5 is selected. The API pool charges standard model pricing for frontier models like GPT-4 or Claude; individual plans include at least $20 of API usage each month, with the option to purchase more.
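As a rough illustration of the split, the sketch below maps a model selection to one of the two pools. The `Pool` enum, the `AUTO_COMPOSER_MODELS` set, the model identifier strings, and the `pool_for` function are all hypothetical names invented for this example and are not part of any Cursor API. Only the rule itself (Auto and Composer 1.5 draw from one pool, other models from the API pool) comes from the announcement.

```python
from enum import Enum


class Pool(Enum):
    AUTO_COMPOSER = "auto_composer"  # larger allowance
    API = "api"                      # billed at standard model pricing


# Hypothetical identifiers for the selections that draw from the larger pool.
AUTO_COMPOSER_MODELS = {"auto", "composer-1.5"}


def pool_for(model: str) -> Pool:
    """Return the usage pool a model selection would draw from (illustrative)."""
    if model.strip().lower() in AUTO_COMPOSER_MODELS:
        return Pool.AUTO_COMPOSER
    # Anything else is treated as a frontier model billed against the API pool.
    return Pool.API
```

A team could use a lookup like this in its own cost tracking to tag each request with the pool it is expected to consume.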

This separation acknowledges different developer priorities. Some teams demand cutting-edge frontier models regardless of cost. Others seek the optimal balance between speed, intelligence, and budget constraints, particularly solo developers and startups watching burn rates.

The practical impact is immediate. Under standard limits, Composer 1.5 includes three times the usage of Composer 1. Through February 16, 2026, Cursor is temporarily raising this to six times as an adoption incentive. Individual plans on the Pro, Pro Plus, and Ultra tiers all receive these expanded limits, with usage resetting monthly.
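The multiplier arithmetic can be sketched in a few lines. This is a minimal example, assuming that “through February 16” means the boost still applies on that day; the function names and the idea of expressing allowances relative to a Composer 1 baseline are illustrative, since Cursor has not published absolute limits in this announcement. Dates before the announcement are not modeled.

```python
from datetime import date

STANDARD_MULTIPLIER = 3          # Composer 1.5 usage relative to Composer 1
PROMO_MULTIPLIER = 6             # temporary boost
PROMO_END = date(2026, 2, 16)    # assumed to be the last day of the boost


def composer_15_multiplier(on: date) -> int:
    """Multiplier over Composer 1 usage that applies on a given day."""
    return PROMO_MULTIPLIER if on <= PROMO_END else STANDARD_MULTIPLIER


def composer_15_allowance(composer_1_allowance: float, on: date) -> float:
    """Scale a (hypothetical) Composer 1 allowance to its Composer 1.5 equivalent."""
    return composer_1_allowance * composer_15_multiplier(on)
```

For capacity planning beyond the promotion, the standard 3x figure is the one to budget against.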

What qualifies as agentic coding versus autocomplete?

Agentic coding involves AI systems that plan, execute, test, and iterate across multiple files autonomously. Unlike traditional autocomplete that suggests the next line, agents interpret goals in natural language, break them into implementation plans, write or refactor code across numerous files, run tests, debug errors, and complete cycles with minimal human intervention. Cursor’s Composer 1.5 exemplifies this by adjusting thinking time based on problem difficulty.
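The plan, execute, test, and iterate cycle described above can be sketched as a small loop. This is a toy illustration of the general pattern, not Cursor’s implementation: `run_agent` and its callback parameters (`plan`, `apply_step`, `run_tests`, `fix`) are hypothetical stand-ins for whatever a real agent harness provides.

```python
from typing import Callable, List


def run_agent(
    goal: str,
    plan: Callable[[str], List[str]],
    apply_step: Callable[[str], None],
    run_tests: Callable[[], List[str]],
    fix: Callable[[str], None],
    max_rounds: int = 3,
) -> bool:
    """Plan, execute, test, and iterate until tests pass or rounds run out."""
    # Break the natural-language goal into steps and apply each one.
    for step in plan(goal):
        apply_step(step)
    # Test, then attempt a fix for every reported failure, up to max_rounds times.
    for _ in range(max_rounds):
        failures = run_tests()
        if not failures:
            return True
        for failure in failures:
            fix(failure)
    # One last check after the final round of fixes.
    return not run_tests()
```

Autocomplete, by contrast, corresponds to a single suggestion with no loop, no test run, and no retry.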

Composer 1.5 Uses 20x Reinforcement Learning Scaling

Training proprietary models allows Cursor to offer substantially more usage sustainably. Composer 1.5 is the company’s second-generation agentic coding model, succeeding Composer 1, which launched in October 2025.

The model architecture introduces adaptive thinking that calibrates reasoning depth to task difficulty. For straightforward operations like variable renaming or typo fixes, Composer 1.5 responds quickly with minimal thinking tokens. Complex multi-step engineering tasks trigger deeper reasoning with extended processing. This speed-first design prevents the 30-second waits that frustrate developers during simple edits.

Composer 1.5 also implements trained self-summarization. When context windows overflow during lengthy coding sessions, the model intelligently summarizes prior work to maintain accuracy across long tasks. This addresses a critical pain point in agentic workflows where context loss mid-project causes logic breaks.
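Composer 1.5 performs this summarization inside the model as a trained behavior, and Cursor has not published the mechanism. The sketch below shows only a harness-level analogue of the same idea: when a message history exceeds a token budget, older messages are collapsed into one summary while the most recent ones are kept verbatim. The names `count_tokens`, `compact_history`, `budget`, and `keep_recent` are hypothetical, and the word-count tokenizer is a deliberate simplification.

```python
from typing import Callable, List


def count_tokens(text: str) -> int:
    # Crude stand-in: whitespace-separated words, not a real tokenizer.
    return len(text.split())


def compact_history(
    messages: List[str],
    budget: int,
    summarize: Callable[[List[str]], str],
    keep_recent: int = 2,
) -> List[str]:
    """Collapse older messages into one summary when the history exceeds budget."""
    total = sum(count_tokens(m) for m in messages)
    if total <= budget or len(messages) <= keep_recent:
        return messages
    cut = len(messages) - keep_recent
    older, recent = messages[:cut], messages[cut:]
    # The summary replaces everything before the cut; recent turns stay intact.
    return [summarize(older)] + recent
```

The quality of the `summarize` callback decides how much earlier context survives, which is the part Cursor says it has trained the model to do well.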

The model achieves these capabilities through scaling reinforcement learning 20x further on the same pretrained model used for Composer 1. Cursor expects to “continue finding ways to offer increasingly intelligent and cost-effective models, alongside the latest frontier models”. This signals ongoing investment in proprietary model development rather than exclusive reliance on third-party APIs.

Performance Claims and Benchmark Methodology

Cursor reports Composer 1.5 scores above Anthropic’s Sonnet 4.5 on Terminal-Bench 2.0, though below the best frontier models. The company emphasizes that benchmark scores vary significantly based on testing methodology and agent harness used.

Cursor computed scores using the official Harbor evaluation framework with default benchmark settings across two iterations per model-agent pair. The company notes: “For other models besides Composer 1.5, we took the max score between the official leaderboard score and the score recorded running in our infrastructure”.
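The scoring rule in that quote can be written out explicitly. This is a sketch under one stated assumption: Cursor does not say how the two iterations per model-agent pair are combined, so the example averages them. The function name `reported_score` and its parameters are hypothetical.

```python
from statistics import mean
from typing import Optional, Sequence


def reported_score(
    own_runs: Sequence[float],
    leaderboard: Optional[float] = None,
) -> float:
    """Score reported for one model-agent pair (illustrative).

    own_runs: scores from runs on the evaluator's own infrastructure.
    leaderboard: official leaderboard score, or None when only own runs
                 are used (as described for Composer 1.5).
    """
    own = mean(own_runs)  # assumption: iterations are averaged
    if leaderboard is None:
        return own
    # For other models, take the higher of the two available scores.
    return max(own, leaderboard)
```

Taking the maximum gives each comparison model its best available score, which matters when reading the comparison against Composer 1.5.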

Terminal-Bench 2.0, launched November 2025, measures agent capabilities for terminal use through realistic, hard tasks in isolated container environments. The benchmark addresses critical limitations of previous coding evaluations by testing agents on complex real-world scenarios rather than synthetic problems.

Third-party testing reports Composer 1.5 scores ranging from 47.9% to 48.3% on Terminal-Bench 2.0, though these use different testing harnesses than Cursor’s official evaluation. Developers should note that reported benchmark scores depend heavily on the specific agent framework, evaluation parameters, and number of iterations tested.

Agentic Coding Shifts Developer Workflows

The usage restructuring reflects behavioral data Cursor has observed. Developers increasingly request ambitious codebase-wide changes rather than isolated suggestions. This evolution from autocomplete to agent-driven development represents a fundamental workflow transformation.

Agentic AI coding assistants possess four distinguishing characteristics: autonomous decision-making about architecture and patterns, multi-step workflow execution across files, iterative testing and debugging, and minimal human supervision for routine tasks. These systems interpret natural language goals, plan implementation strategies, and complete development cycles with limited intervention.

The shift toward agentic workflows explains Cursor’s decision to separate usage pools and increase limits specifically for agent operations. Traditional autocomplete generates single-line suggestions, consuming minimal compute resources. Agent operations process entire project contexts, reason about architectural decisions, and generate multi-file changes requiring substantially more computational capacity.

New Usage Visibility Dashboard Launches

Cursor added an in-editor page where developers can monitor limits across both usage pools. The dashboard displays remaining Auto + Composer usage when Composer 1.5 is selected, plus API usage, of which at least $20 per month is included on individual plans.

This transparency addresses developer frustration with billing clarity. When Cursor switched from request-based to credit-based billing in August 2025, the change generated discussions about usage tracking and cost predictability. The new visibility dashboard aims to prevent mid-project surprises when usage caps hit unexpectedly.

Usage limits reset monthly according to each account’s billing cycle. Higher-tier plans (Pro Plus and Ultra) include more API usage, though the February 2026 update did not change API pricing. The temporary 6x boost for Composer 1.5 applies through February 16, after which limits revert to the standard 3x multiplier versus Composer 1.

Limitations and Cost Considerations

Composer 1.5 scores below top frontier models on Terminal-Bench 2.0. Developers requiring absolute cutting-edge performance for novel problem-solving may still need API access to GPT-4 Turbo or Claude Opus despite higher costs.

The credit-based system is complex enough that model selection needs careful attention. Which operations draw from the Auto + Composer pool and which draw from API credits determines how predictable monthly costs are. The billing change in August 2025 introduced this credit structure, replacing the previous request-based system.

The 6x temporary boost creates deadline pressure. Developers must evaluate Composer 1.5 capabilities before February 16, 2026, when limits drop to 3x. This time-limited promotion complicates long-term capacity planning for teams considering Cursor adoption.

Benchmark score variations across testing methodologies make direct model comparisons challenging. Cursor’s official scores use specific Harbor framework settings that may differ from other published benchmarks. Developers should test models within their own workflows rather than relying solely on standardized benchmark results.

Frequently Asked Questions (FAQs)

What is Cursor Composer 1.5?

Composer 1.5 is Cursor’s second-generation proprietary AI coding model designed for agentic workflows. It was trained by scaling reinforcement learning 20x further on the same pretrained model as Composer 1, and it uses adaptive thinking to calibrate reasoning depth to task complexity, responding quickly for simple edits while applying deeper reasoning to multi-step engineering problems.

How much usage do I get with Composer 1.5?

Individual plans (Pro, Pro Plus, Ultra) receive 3x the usage limit of Composer 1 for Composer 1.5 operations. Through February 16, 2026, this is temporarily increased to 6x. Limits reset monthly with your billing cycle.

What’s the difference between Auto + Composer and API usage pools?

The Auto + Composer pool includes significantly more usage when Auto (Cursor’s automatic model selection) or Composer 1.5 is selected, at no additional cost. The API pool charges standard model pricing for frontier models like GPT-4 or Claude, with individual plans including at least $20 of monthly credit and pay-as-you-go options for additional usage.

When was Composer 1.5 announced?

Cursor announced Composer 1.5 on February 8, 2026, followed by the usage limit increase announcement on February 11, 2026.

When did Cursor change to credit-based billing?

Cursor transitioned from request-based billing to a credit-based system in August 2025. This change prompted the company to add improved usage visibility dashboards in February 2026.

What is agentic coding?

Agentic coding refers to AI systems that autonomously plan, execute, test, and iterate on code across multiple files with minimal human intervention. Unlike autocomplete that suggests next lines, agents interpret natural language goals, break them into implementation plans, write or refactor entire features, run tests, debug errors, and complete development cycles independently.

How long does the 6x usage boost last?

The temporary 6x usage increase for Composer 1.5 runs through February 16, 2026. After this date, limits revert to the standard 3x multiplier versus Composer 1 usage.

Can I monitor my Cursor usage in real-time?

Yes. Cursor added a new in-editor dashboard page where you can track remaining balances across both Auto + Composer and API usage pools. The dashboard updates to show relevant limits based on which model you currently have selected.

What is Terminal-Bench 2.0?

Terminal-Bench 2.0 is a benchmark launched November 2025 that measures agent capabilities for terminal use through realistic, hard tasks in isolated container environments. It uses the Harbor evaluation framework to test agents on complex real-world scenarios.