Quick Brief

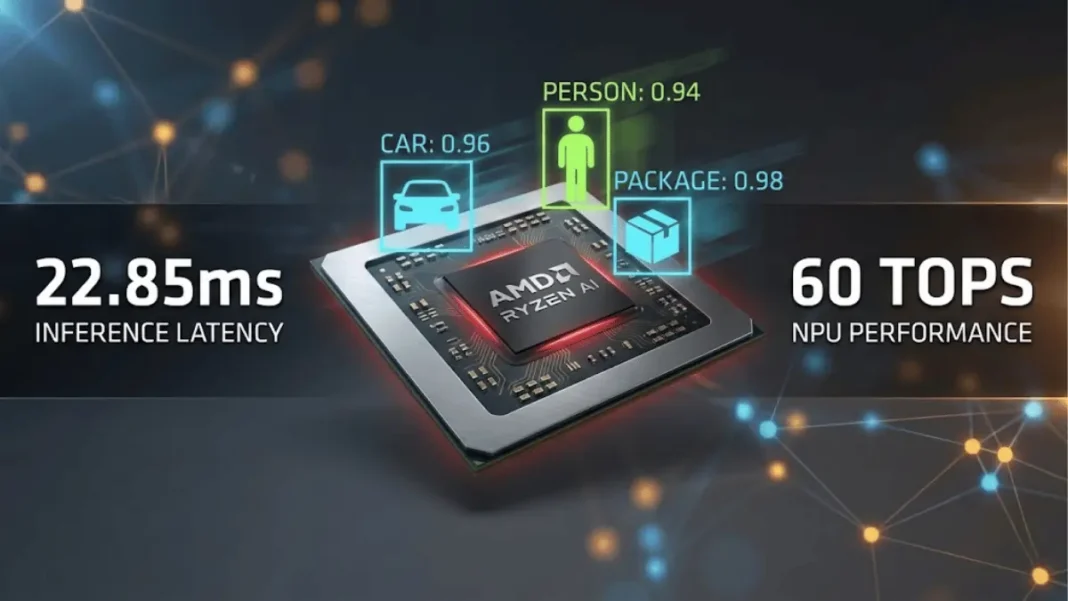

- The Launch: AMD released technical documentation on January 23, 2026, detailing end-to-end deployment of YOLO-World object detection models on Ryzen AI NPUs. The reference model reaches 22.85 ms inference latency at 35.6 mAP.

- The Impact: Developers can now deploy computer vision workloads locally on AI PCs without cloud dependencies, targeting autonomous systems, retail analytics, and edge computing applications.

- The Context: Follows AMD’s January 2026 launch of the Ryzen AI 400 Series, whose NPUs deliver up to 60 TOPS, in systems from six major OEM partners: Acer, ASUS, Dell, HP, GIGABYTE, and Lenovo.

AMD released comprehensive technical documentation on January 23, 2026, providing developers with a production-ready workflow for deploying YOLO-World object detection models on Ryzen AI-powered PCs equipped with Neural Processing Units (NPUs). The framework enables low-latency, on-device inference without cloud connectivity for edge AI applications.

NPU Acceleration Delivers 22.85ms Inference Performance

AMD’s deployment framework centers on the YOLO-World v8s model, which completes end-to-end inference in 22.85 milliseconds on Ryzen AI NPUs while maintaining 35.6 mean Average Precision (mAP) and 49.5 mAP50. The model is quantized with the A16W8_ADAROUND scheme (16-bit activations, 8-bit weights, AdaRound weight rounding), which reduces its size from 48.06k (float32) to 17.39k for NPU deployment.
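To make the "A16W8" label concrete, the sketch below quantizes a few weights to signed 8-bit and a few activations to unsigned 16-bit, then measures the round-trip error. It is an illustration under stated assumptions, not Quark's implementation: it uses plain round-to-nearest instead of AdaRound (which learns whether to round each weight up or down), a single per-tensor scale, and hypothetical helper names.

```python
"""Illustrative A16W8 fake-quantization: 16-bit activations, 8-bit weights.

Assumption: plain round-to-nearest with per-tensor scales. This is NOT
AdaRound and NOT AMD Quark's code; it only shows the precision trade-off.
"""


def quantize_symmetric(values, num_bits):
    """Signed symmetric quantization (a common choice for weights)."""
    qmax = 2 ** (num_bits - 1) - 1                 # 127 for 8 bits
    max_abs = max(abs(v) for v in values) or 1.0   # avoid a zero scale
    scale = max_abs / qmax
    q = [max(-qmax - 1, min(qmax, round(v / scale))) for v in values]
    return q, scale


def quantize_asymmetric(values, num_bits):
    """Unsigned asymmetric quantization (a common choice for activations)."""
    qmax = 2 ** num_bits - 1                       # 65535 for 16 bits
    lo, hi = min(min(values), 0.0), max(max(values), 0.0)
    scale = (hi - lo) / qmax or 1.0
    zero_point = round(-lo / scale)
    q = [max(0, min(qmax, round(v / scale) + zero_point)) for v in values]
    return q, scale, zero_point


def dequantize(q, scale, zero_point=0):
    """Map integer codes back to approximate real values."""
    return [(x - zero_point) * scale for x in q]


weights = [0.52, -0.31, 0.07, -0.88]
activations = [0.0, 1.7, 3.25, 0.4]

qw, sw = quantize_symmetric(weights, num_bits=8)
qa, sa, za = quantize_asymmetric(activations, num_bits=16)

w_err = max(abs(a - b) for a, b in zip(weights, dequantize(qw, sw)))
a_err = max(abs(a - b) for a, b in zip(activations, dequantize(qa, sa, za)))
print(f"8-bit weight max error:      {w_err:.6f}")
print(f"16-bit activation max error: {a_err:.8f}")
```

The 16-bit activation grid is 256 times finer than an 8-bit one over the same range, which is one reason an A16W8 scheme can stay close to float accuracy while still storing weights in 8 bits.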

The technical workflow has three stages: ONNX export at opset 20 or higher, quantization with AMD’s Quark tool using 100-1000 calibration images (default 512), and deployment through the VitisAI Execution Provider integrated with ONNX Runtime. AMD’s XDNA 2 NPU architecture in Ryzen AI 400 Series processors delivers up to 60 TOPS of AI compute.
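The calibration stage needs a reproducible subset of representative images. The sketch below draws 512 images by default and enforces the 100-1000 range. It is a hypothetical helper: it duck-types the `get_next()`/`rewind()` interface of ONNX Runtime's `onnxruntime.quantization.CalibrationDataReader`, and whether your Quark release accepts such a reader directly, as well as the input name `"images"`, are assumptions to check against AMD's examples.

```python
import random


class ImageFolderCalibrationReader:
    """Hypothetical calibration reader exposing get_next() and rewind().

    Mirrors the onnxruntime.quantization.CalibrationDataReader interface;
    compatibility with a given Quark version is an assumption.
    """

    def __init__(self, image_paths, preprocess, input_name="images",
                 num_samples=512, seed=0):
        if not 100 <= num_samples <= 1000:
            raise ValueError("AMD's guidance is 100-1000 calibration images")
        if len(image_paths) < num_samples:
            raise ValueError("not enough images for the requested sample size")
        rng = random.Random(seed)                  # reproducible subset
        self.paths = rng.sample(list(image_paths), num_samples)
        self.preprocess = preprocess               # path -> model-ready tensor
        self.input_name = input_name               # assumed model input name
        self._idx = 0

    def get_next(self):
        """Return one {input_name: tensor} feed, or None when exhausted."""
        if self._idx >= len(self.paths):
            return None
        path = self.paths[self._idx]
        self._idx += 1
        return {self.input_name: self.preprocess(path)}

    def rewind(self):
        """Restart iteration, e.g. for a second calibration pass."""
        self._idx = 0


# Usage with placeholder paths and an identity "preprocess" for demonstration.
paths = [f"calib/img_{i:04d}.jpg" for i in range(2000)]
reader = ImageFolderCalibrationReader(paths, preprocess=lambda p: p)
count = 0
while reader.get_next() is not None:
    count += 1
print(count)  # 512
```

Random sampling only yields diversity if the source folder already covers the lighting, scale, and class variation the deployment will see; in a real pipeline `preprocess` must be the same letterbox and normalization code used at inference.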

Operators supported on the NPU include Conv, Sigmoid, Transpose, Softmax, and Einsum, while Non-Maximum Suppression (NMS) runs on the CPU as a post-processing step. The framework targets real-time applications such as autonomous navigation, industrial quality control, and video surveillance, where cloud round-trip latency is prohibitive.
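Because NMS stays on the CPU, the post-processing step is ordinary host code. Below is a minimal greedy, class-agnostic NMS sketch; the box format `(x1, y1, x2, y2)` and the thresholds 0.45 and 0.25 are illustrative assumptions, not values taken from AMD's scripts.

```python
def iou(a, b):
    """Intersection-over-union of two boxes in (x1, y1, x2, y2) format."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.45, score_threshold=0.25):
    """Greedy NMS; returns indices of kept boxes, highest score first."""
    # Drop low-confidence boxes, then visit the rest in descending score order.
    order = sorted((i for i, s in enumerate(scores) if s >= score_threshold),
                   key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Suppress every remaining box that overlaps the kept one too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) < iou_threshold]
    return keep


boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 200, 260, 260)]
scores = [0.90, 0.80, 0.70]
print(nms(boxes, scores))  # [0, 2]
```

The two overlapping boxes have an IoU of about 0.92, so the lower-scoring one is suppressed. A per-class variant would run the same loop separately for each predicted class.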

Strategic Position in Enterprise AI PC Market

AMD’s developer-focused release strengthens its position in the fast-growing AI PC segment, complementing the OEM partnerships it announced in January 2026. Jack Huynh, Senior Vice President and General Manager of AMD’s Computing and Graphics Group, stated that “the PC is being redefined by AI”.

The Ryzen AI 400 Series ships in consumer and PRO variants in Q1 2026, targeting productivity, creative workflows, and enterprise analytics. The YOLO-World deployment framework addresses edge computing requirements, where on-device NPU inference avoids the data transmission costs and privacy concerns of cloud-based computer vision pipelines.

Six major OEMs, Acer, ASUS, Dell, HP, GIGABYTE, and Lenovo, have confirmed Ryzen AI 400 Series systems shipping from Q1 2026. The hybrid architecture combines Zen 5 CPU cores, RDNA 3.5 graphics, and an XDNA 2 NPU in a unified memory design.

Technical Architecture and Quantization Workflow

| Deployment Stage | mAP | mAP50 | Latency (ms) | Model Size |
|---|---|---|---|---|
| Float Model (FP32) | 35.9 | 49.7 | 53.21 (CPU) | 48.06k |
| Quantized (A16W8) | 35.6 | 49.6 | 90.68 (CPU) | 12.46k |
| NPU Accelerated | 35.6 | 49.5 | 22.85 (NPU) | 17.39k |
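The headline ratios follow directly from the table. This short calculation uses only the figures AMD reported (the model-size unit "k" is kept as published):

```python
# Figures copied from the table above; "size_k" keeps AMD's unlabeled "k" unit.
results = {
    "float_cpu": {"map": 35.9, "map50": 49.7, "latency_ms": 53.21, "size_k": 48.06},
    "quant_cpu": {"map": 35.6, "map50": 49.6, "latency_ms": 90.68, "size_k": 12.46},
    "quant_npu": {"map": 35.6, "map50": 49.5, "latency_ms": 22.85, "size_k": 17.39},
}

base, qcpu, npu = results["float_cpu"], results["quant_cpu"], results["quant_npu"]

speedup_vs_float_cpu = base["latency_ms"] / npu["latency_ms"]
speedup_vs_quant_cpu = qcpu["latency_ms"] / npu["latency_ms"]
map_drop = base["map"] - npu["map"]
size_ratio = base["size_k"] / npu["size_k"]

print(f"NPU vs float CPU:     {speedup_vs_float_cpu:.2f}x")  # 2.33x
print(f"NPU vs quantized CPU: {speedup_vs_quant_cpu:.2f}x")  # 3.97x
print(f"mAP drop:             {map_drop:.1f}")               # 0.3
print(f"size reduction:       {size_ratio:.2f}x")            # 2.76x
```

Note that the quantized model is slower than the float model when run on the CPU (90.68 ms vs 53.21 ms), so the latency gain in the last row comes from offloading the quantized graph to the NPU, not from quantization alone.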

AMD’s quantization methodology calls for 100-1000 calibration images covering variations in lighting, object scale, and class to keep accuracy within 0.3 mAP of the float32 baseline. The preprocessing pipeline applies 640×640 letterbox resizing with center alignment, BGR-to-RGB conversion, and normalization of pixel values to [0, 1], matching the training configuration.
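The letterbox step is mostly geometry: scale the image to fit inside 640×640 without distortion, pad the shorter side equally on both edges, and later map detections back to source coordinates. The sketch below computes those parameters and the per-pixel BGR-to-RGB [0, 1] conversion; the helper names are hypothetical, the actual pixel resize and padding would be done with an image library (for example OpenCV's `cv2.resize` and `cv2.copyMakeBorder`), and the rounding may differ by a pixel from a given reference implementation.

```python
def letterbox_params(src_h, src_w, target=640):
    """Scale factor, resized size, and centered padding for a square letterbox."""
    scale = min(target / src_h, target / src_w)    # fit without distortion
    new_w, new_h = round(src_w * scale), round(src_h * scale)
    pad_x, pad_y = target - new_w, target - new_h
    left, top = pad_x // 2, pad_y // 2             # center alignment
    return {"scale": scale, "new_w": new_w, "new_h": new_h,
            "left": left, "top": top,
            "right": pad_x - left, "bottom": pad_y - top}


def to_source_coords(box, p):
    """Map an (x1, y1, x2, y2) box from the letterboxed input to the source image."""
    x1, y1, x2, y2 = box
    return ((x1 - p["left"]) / p["scale"], (y1 - p["top"]) / p["scale"],
            (x2 - p["left"]) / p["scale"], (y2 - p["top"]) / p["scale"])


def normalize_bgr_pixel(b, g, r):
    """Convert one BGR uint8 pixel to RGB floats in [0, 1]."""
    return (r / 255.0, g / 255.0, b / 255.0)


p = letterbox_params(720, 1280)  # a 1280x720 frame
print(p["scale"], p["new_w"], p["new_h"], p["top"], p["bottom"])  # 0.5 640 360 140 140
print(to_source_coords((100, 190, 300, 390), p))  # (200.0, 100.0, 600.0, 500.0)
```

Keeping the parameters returned by `letterbox_params` alongside each frame makes the reverse mapping exact, which matters when detections are compared against ground truth for mAP evaluation.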

Ryzen AI NPU driver version 32.0.203.280 or newer on Windows 11 x86-64 is required, with AMD providing Python SDK integration through the Ryzen AI Package. Developers can benchmark custom models using eval_on_coco.py for COCO dataset validation and infer_single.py for single-image latency profiling.
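Single-image latency numbers are sensitive to warm-up effects such as session setup and first-run caching. The harness below is a generic sketch, not the contents of `infer_single.py`: it discards warm-up runs and reports mean, median, p90, and minimum latency for any zero-argument callable, such as a wrapper around an ONNX Runtime `session.run(None, feed)` call. The `fake_inference` stand-in is hypothetical.

```python
import statistics
import time


def profile_latency(run_once, warmup=10, iters=100):
    """Time a zero-argument callable and return latency stats in milliseconds.

    Warm-up iterations are discarded so one-time costs do not skew results.
    """
    for _ in range(warmup):
        run_once()
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    samples.sort()
    return {
        "mean_ms": statistics.mean(samples),
        "median_ms": statistics.median(samples),
        "p90_ms": samples[int(0.9 * (len(samples) - 1))],
        "min_ms": samples[0],
    }


calls = {"n": 0}


def fake_inference():
    # Hypothetical stand-in for session.run(None, feed); sleeps about 2 ms.
    calls["n"] += 1
    time.sleep(0.002)


stats = profile_latency(fake_inference, warmup=5, iters=50)
print({k: round(v, 2) for k, v in stats.items()})
```

Reporting the median alongside the mean helps separate steady-state NPU latency from occasional scheduling spikes on the host.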

Developer Resources and Deployment Tools

AMD provides complete source code and model resources through the Ryzen AI Software GitHub repository, including YOLO-World test code and pre-trained yolov8s-worldv2 models. The workflow includes Python export scripts with configurable input sizes, and the exported graph's operators can be inspected visually in Netron.
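Netron is convenient for visual inspection; the same check can also be scripted. The sketch below reads the default-domain opset and counts operator types in an exported model using the `onnx` package (`onnx.load`, `model.opset_import`, `model.graph.node`). The `SUPPORTED_SUBSET` set is only the operators named in this article, mapped to ONNX op names; it is an illustrative assumption, not AMD's support matrix, and the check covers only the top-level graph.

```python
from collections import Counter

# Assumption: operators named in this article, not the official NPU support list.
SUPPORTED_SUBSET = {
    "Conv", "BatchNormalization", "Sigmoid", "Transpose", "Softmax", "Einsum",
    "Add", "Mul", "Reshape", "Clip", "MaxPool", "Resize", "Slice",
}


def default_opset(opset_imports):
    """Return the default ONNX domain's opset from (domain, version) pairs."""
    for domain, version in opset_imports:
        if domain in ("", "ai.onnx"):
            return version
    return None


def unlisted_ops(op_types, supported=SUPPORTED_SUBSET):
    """Count graph op types that are missing from the given supported set."""
    counts = Counter(op_types)
    return {op: n for op, n in sorted(counts.items()) if op not in supported}


def inspect_onnx(path, min_opset=20):
    """Check the opset requirement and list ops to review in the Ryzen AI docs."""
    import onnx  # lazy import: requires the `onnx` package
    model = onnx.load(path)
    opset = default_opset((o.domain, o.version) for o in model.opset_import)
    if opset is None or opset < min_opset:
        raise ValueError(f"default opset {opset} is below {min_opset}")
    return unlisted_ops(node.op_type for node in model.graph.node)


print(unlisted_ops(["Conv", "Conv", "Concat", "Sigmoid"]))  # {'Concat': 1}
```

An operator flagged here is not necessarily unsupported; it is a prompt to look it up in the Ryzen AI documentation before quantizing.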

Quark supports both A8W8 and A16W8 schemes, with configurable optimization parameters such as learning rate (default 0.1) and iteration count (default 3000). Deployment runs on ONNX Runtime with the VitisAI Execution Provider, keeping the model portable across AMD AI hardware.
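A minimal way to target the NPU from ONNX Runtime is to request `VitisAIExecutionProvider` first and keep `CPUExecutionProvider` as a fallback, so unsupported nodes or a missing NPU build do not stop the session from loading. The sketch uses the real `onnxruntime.get_available_providers()` and `InferenceSession(..., providers=..., provider_options=...)` APIs; the helper names and model filename are hypothetical, and VitisAI provider option keys vary between Ryzen AI releases, so none are filled in here.

```python
def choose_providers(available, preferred="VitisAIExecutionProvider"):
    """Put the NPU provider first when present, keeping CPU as the fallback."""
    providers = [p for p in (preferred, "CPUExecutionProvider") if p in available]
    return providers or ["CPUExecutionProvider"]


def create_session(model_path, provider_options=None):
    """Create an ONNX Runtime session, preferring the VitisAI EP when available."""
    import onnxruntime as ort  # lazy import: needs the Ryzen AI build for the NPU
    providers = choose_providers(ort.get_available_providers())
    opts = None
    if providers[0] == "VitisAIExecutionProvider" and provider_options:
        # One options dict per provider, in the same order as `providers`.
        opts = [provider_options] + [{}] * (len(providers) - 1)
    return ort.InferenceSession(model_path, providers=providers,
                                provider_options=opts)


# Example (hypothetical filename; requires onnxruntime and the model file):
# session = create_session("yolov8s_worldv2_a16w8.onnx")
# print(session.get_providers())  # confirms which providers are actually active
print(choose_providers(["VitisAIExecutionProvider", "CPUExecutionProvider"]))
```

Checking `session.get_providers()` after creation is a quick way to confirm the NPU path is active rather than silently falling back to the CPU.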

Full operator support documentation is available in the AMD Ryzen AI Docs, detailing NPU compatibility for Conv, BatchNorm fusion, Add/Mul, Reshape, Clip, Normalize, MaxPool, Resize, and Slice operations. AMD recommends matching the training pipeline's preprocessing exactly and using representative calibration datasets for the best accuracy retention.

Roadmap for Edge AI Infrastructure

AMD’s technical release signals its commitment to developer enablement ahead of the Q1 2026 AI PC volume ramp, and the company is encouraging developers to experiment with quantization schemes and contribute benchmarks to its AI developer community. The YOLO-World workflow provides a blueprint for YOLOv8 and other object detection architectures, provided their operators are validated for NPU compatibility.

AMD’s focus on ONNX standardization enables cross-platform model portability, addressing developer concerns about vendor lock-in present in proprietary AI frameworks. The framework demonstrates how A16W8_ADAROUND quantization significantly reduces model size while maintaining accuracy through proper calibration and preprocessing.

Frequently Asked Questions (FAQs)

How fast is AMD NPU for object detection inference?

AMD Ryzen AI NPUs run YOLO-World v8s at 640×640 resolution in 22.85 ms, about 2.3× faster than the float model on the CPU (53.21 ms).

What NPU performance do AMD Ryzen AI 400 processors deliver?

Ryzen AI 400 Series NPUs provide up to 60 TOPS using XDNA 2 architecture, shipping in Q1 2026 across six major OEM partners.

Can AMD AI PCs run YOLO models without cloud connectivity?

Yes, AMD NPUs support local YOLO-World deployment with A16W8 quantization, eliminating cloud dependencies for edge applications.

What driver version is required for AMD NPU deployment?

Deployment on the AMD Ryzen AI NPU requires driver version 32.0.203.280 or newer on Windows 11 x86-64 systems.