Essential Points

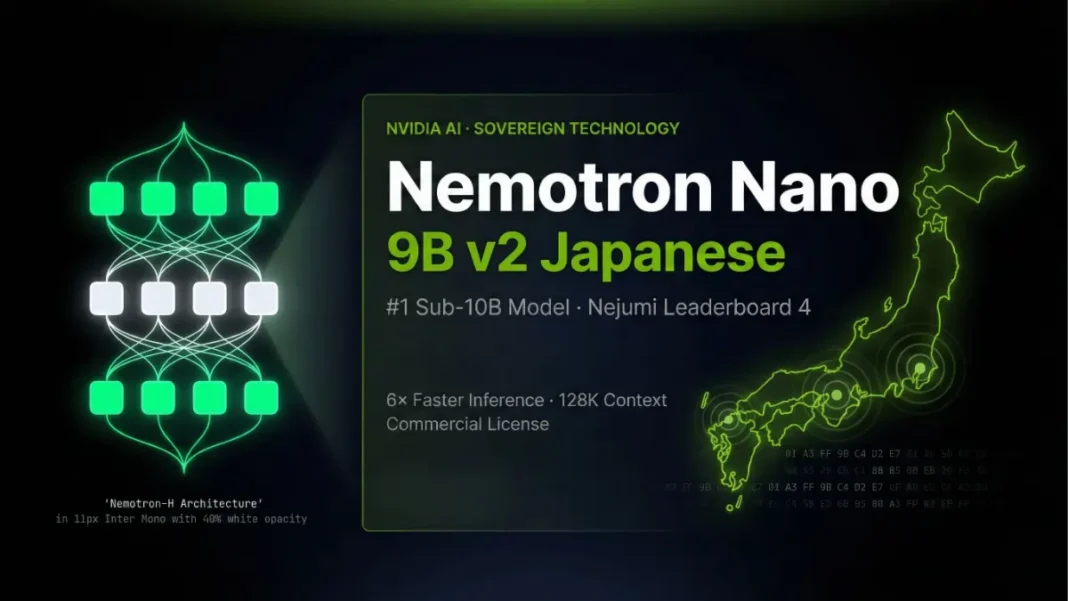

- NVIDIA released Nemotron Nano 9B v2 Japanese on February 17, 2026, purpose-built for Japanese enterprise AI

- Ranks #1 among all sub-10B models on the Nejumi Leaderboard 4, Japan’s most comprehensive LLM benchmark

- Uses a Mamba-2 + Transformer hybrid architecture with exactly 4 Attention layers, achieving up to 6× inference throughput over comparable open-source alternatives

- Trained on over 10 trillion tokens; commercially licensed under the NVIDIA Nemotron Open Model License

NVIDIA shipped a Japanese-optimized language model that fills a critical gap in enterprise AI: a sub-10B model combining strong Japanese language understanding with genuine agentic capabilities. Released February 17, 2026, Nemotron Nano 9B v2 Japanese runs on a single GPU, handles 128K tokens of context, and ranks first among sub-10B models on the Nejumi Leaderboard 4, Japan’s most rigorous multi-task LLM evaluation platform. Japan’s push for sovereign AI just found its most capable small model yet.

What Is Nemotron Nano 9B v2 Japanese?

NVIDIA-Nemotron-Nano-9B-v2-Japanese is a 9-billion-parameter large language model from NVIDIA, designed as a unified model for both reasoning and non-reasoning tasks and specifically optimized for Japanese. Development ran from June 2025 to January 2026, with pre-training data capped at September 2024. Its base model, NVIDIA-Nemotron-Nano-9B-v2, was trained from scratch by NVIDIA; the Japanese variant was built by further training that base, including on Japanese tool-calling data created with the Nemotron-Personas-Japan dataset.

The Japanese enterprise AI landscape had a documented gap: no sub-10B model combined powerful Japanese capabilities with agentic task performance. This model directly addresses that gap, enabling on-premises deployment for confidential enterprise data, faster customization for domain-specific workflows, and rapid prototyping of multi-agent systems without the overhead of larger models.

What does “sovereign AI” mean for Japan?

Sovereign AI refers to a nation’s ability to develop and control its own AI infrastructure, data, and models without reliance on foreign systems. NVIDIA’s Nemotron Nano 9B v2 Japanese advances Japan’s sovereign AI agenda by providing a commercially licensed, Japanese-optimized model trained on culturally grounded synthetic data, which lets local enterprises deploy AI independently.

Architecture: Why the Hybrid Design Matters

Most AI models rely entirely on Transformer attention layers, which are computationally expensive at long contexts. Nemotron Nano 9B v2 Japanese uses the Nemotron-H hybrid architecture: primarily Mamba-2 and MLP layers combined with exactly 4 Attention layers. This architectural choice is not incremental: it delivers up to 6× higher inference throughput than open-source alternative models of comparable size, as measured in the Nemotron Nano 2 paper.
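To make the long-context argument concrete, here is a rough back-of-the-envelope sketch of how the attention key/value cache scales with the number of attention layers. Only the figure of 4 attention layers and the 128K context come from the text; the KV head count, head dimension, 16-bit values, and the 36-layer "dense" comparison model are hypothetical placeholders chosen for illustration, not published specifications. The sketch ignores the Mamba-2 state (which stays fixed in size as the sequence grows) and does not attempt to reproduce the 6× throughput figure.

```python
def kv_cache_bytes(attention_layers: int, seq_len: int,
                   kv_heads: int = 8, head_dim: int = 128,
                   bytes_per_value: int = 2) -> int:
    """Approximate KV-cache size for one sequence.

    kv_heads, head_dim and bytes_per_value are illustrative assumptions,
    not values taken from the model card.
    """
    # Factor 2: one tensor for keys and one for values per attention layer.
    return 2 * attention_layers * kv_heads * head_dim * seq_len * bytes_per_value


CONTEXT_TOKENS = 128 * 1024  # 128K context window from the article

# 4 attention layers, as stated for the hybrid design.
hybrid_bytes = kv_cache_bytes(attention_layers=4, seq_len=CONTEXT_TOKENS)

# Hypothetical all-attention model with 36 layers, for comparison only.
dense_bytes = kv_cache_bytes(attention_layers=36, seq_len=CONTEXT_TOKENS)

print(f"hybrid KV cache: {hybrid_bytes / 1024**3:.1f} GiB")
print(f"dense  KV cache: {dense_bytes / 1024**3:.1f} GiB")
print(f"ratio: {dense_bytes / hybrid_bytes:.0f}x")
```

Under these assumed dimensions the cache grows linearly with the number of attention layers, so cutting attention down to a handful of layers shrinks the memory that must be read on every generated token at long context.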

The model was trained using Megatron-LM for continual pre-training and supervised fine-tuning and NeMo Curator for data processing and filtering, and was further improved using Qwen models, which served as generators for synthetic training data. It runs on NVIDIA A10G (24GB), A100 (80GB), H100 (80GB), DGX Spark, and Jetson Thor hardware.

| Architecture Element | Nemotron Nano 9B v2 Japanese | Qwen3-8B |
|---|---|---|
| Primary Layers | Mamba-2 + MLP + 4 Attention | Full Transformer |
| Inference Speed | Up to 6× faster | Baseline |
| Context Window | 128K tokens | 128K tokens |
| Reasoning Toggle | On/Off via system prompt | Limited |
| Commercial License | Yes (NVIDIA Nemotron Open Model License) | Yes |
| Japanese Optimization | Native (purpose-built) | General multilingual |
| Nejumi LB4 TOTAL_AVG | 0.711 | 0.690 |

Benchmark Performance: Verified Numbers

How does Nemotron Nano 9B v2 Japanese perform on the Nejumi Leaderboard 4?

Nemotron-Nano-9B-v2-Japanese achieves a TOTAL_AVG score of 0.711 on the Nejumi Leaderboard 4, outperforming Qwen3-8B (0.690), and ranks first in the sub-10B parameter category.

The Nejumi Leaderboard 4 covers around 40 benchmarks spanning core language proficiency, agentic capabilities (code, math, tool use), and alignment (instruction following, bias, toxicity, truthfulness). Key category scores against Qwen3-8B are below:

| Benchmark | Nemotron Nano 9B v2 Japanese | Qwen3-8B |
|---|---|---|
| TOTAL_AVG | 0.711 | 0.690 |
| BFCL v3 (Tool Use) | 0.649 | 0.608 |
| MT-Bench | 0.892 | 0.906 |
| JBBQ (Bias) | 0.890 | 0.870 |
| Toxicity | 0.814 | 0.782 |
| JtruthfulQA | 0.498 | 0.433 |
| HLE | 0.057 | 0.026 |
| Hallulens | 0.960 | 0.800 |
| M-IFEval | 0.632 | 0.619 |
| SWE-Bench | 0.025 | 0.075 |
| ARC-AGI 1/2 | 0.060 | 0.070 |
| Jaster 0-shot | 0.732 | 0.736 |
| Jaster 2-shot | 0.736 | 0.747 |

Note: These scores use Japanese data subsets from the Nejumi Leaderboard 4 evaluation set and are not directly comparable to original benchmark scores.
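For readers who want to see where each model leads, the following small sketch computes the per-benchmark gap from the scores in the table above. The scores are copied from the table; the variable and function names are illustrative only.

```python
# (Nemotron Nano 9B v2 Japanese, Qwen3-8B) scores copied from the table above.
SCORES = {
    "TOTAL_AVG": (0.711, 0.690),
    "BFCL v3 (Tool Use)": (0.649, 0.608),
    "MT-Bench": (0.892, 0.906),
    "JBBQ (Bias)": (0.890, 0.870),
    "Toxicity": (0.814, 0.782),
    "JtruthfulQA": (0.498, 0.433),
    "HLE": (0.057, 0.026),
    "Hallulens": (0.960, 0.800),
    "M-IFEval": (0.632, 0.619),
    "SWE-Bench": (0.025, 0.075),
    "ARC-AGI 1/2": (0.060, 0.070),
    "Jaster 0-shot": (0.732, 0.736),
    "Jaster 2-shot": (0.736, 0.747),
}


def score_deltas(scores):
    """Return {benchmark: nemotron_score - qwen_score}, rounded to 3 decimals."""
    return {name: round(nemotron - qwen, 3) for name, (nemotron, qwen) in scores.items()}


deltas = score_deltas(SCORES)
nemotron_leads = [name for name, d in deltas.items() if d > 0]
qwen_leads = [name for name, d in deltas.items() if d < 0]

# Print rows from the largest Nemotron advantage to the largest Qwen advantage.
for name, d in sorted(deltas.items(), key=lambda item: item[1], reverse=True):
    print(f"{name:<20} {d:+.3f}")
print(f"Nemotron leads on {len(nemotron_leads)} rows, Qwen3-8B on {len(qwen_leads)}")
```

On these numbers Nemotron leads on 8 of the 13 rows, with the widest margin on Hallulens, while Qwen3-8B leads on MT-Bench, SWE-Bench, ARC-AGI 1/2, and both Jaster settings.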

Training Pipeline: How It Was Built

The model was built using a two-stage approach: continual pre-training followed by supervised fine-tuning (SFT). Continual pre-training used Japanese open-source corpora alongside NVIDIA’s Nemotron data stack to maximize Japanese language proficiency while preserving the base model’s agentic capabilities.

Continual pre-training datasets included:

- Japanese Wikipedia

- FineWeb-2 (Japanese)

- Aozorabunko (Japanese literary corpus)

- SIP3-ja-general-web-corpus

- Nemotron-CC-v2.1

- Nemotron-Pretraining-Specialized-v1

SFT datasets included:

- Japanese tool-calling data generated using Nemotron-Personas-Japan as seed (a 4B-token synthetic dataset)

- Nemotron-Post-Training-v3

The Japanese tool-calling synthetic data was generated using Qwen3-235B-A22B, Qwen3-235B-A22B-Thinking-2507, and GPT-OSS-120B as generation models. The full training dataset spans more than 10 trillion tokens.

Nemotron-Personas-Japan: The Cultural Data Engine

What is the Nemotron-Personas-Japan dataset?

Nemotron-Personas-Japan is an open-source (CC BY 4.0) dataset of synthetically generated personas grounded in real-world Japanese demographic, geographic, and personality trait distributions. With 6 million personas, it seeds diverse, culturally accurate training data at scale. It was used to generate the Japanese tool-calling SFT data for this model.

The dataset’s 6 million personas allowed NVIDIA to scale synthetic data generation across a wide range of real-world scenarios with minimal duplication. Performance improvements from this SFT data extended beyond tool-calling to Japanese knowledge, QA, and instruction following. The Nemotron-Personas collection also covers the USA, India, Singapore, and Brazil, enabling the same methodology to be replicated for other languages.

Reasoning Budget Control: A Production-Critical Feature

What is thinking budget control in Nemotron Nano 9B v2 Japanese?

Thinking budget control lets developers specify the maximum number of tokens the model is allowed to “think” during inference via the max_thinking_tokens parameter. Once that threshold is reached, the model attempts to end the reasoning trace at the next newline. If no newline appears within the following 500 tokens, the trace is cut off at max_thinking_tokens + 500.
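The stopping rule can be illustrated with a small offline simulation. This is a sketch of the rule as described in the text, applied to a list of already-decoded token strings; it is not NVIDIA's inference-time implementation, and the function name and the `GRACE_TOKENS` constant are names made up for this example.

```python
from typing import List

GRACE_TOKENS = 500  # the 500-token window stated in the text


def truncate_reasoning(tokens: List[str], max_thinking_tokens: int) -> List[str]:
    """Simulate the budget rule on a finished reasoning trace.

    Keeps everything if the trace fits in the budget. Otherwise cuts at the
    first token containing a newline at or after the budget, or at
    max_thinking_tokens + GRACE_TOKENS if no newline shows up in time.
    """
    if len(tokens) <= max_thinking_tokens:
        return list(tokens)

    hard_limit = max_thinking_tokens + GRACE_TOKENS
    for i in range(max_thinking_tokens, min(len(tokens), hard_limit)):
        if "\n" in tokens[i]:
            return list(tokens[: i + 1])  # stop right after the newline token
    return list(tokens[:hard_limit])      # abrupt cut-off at the hard limit


# A trace with a newline shortly after the budget: ends at that newline.
with_newline = ["step"] * 10 + ["\n"] + ["more"] * 5
print(len(truncate_reasoning(with_newline, max_thinking_tokens=8)))

# A trace with no newline at all: ends at budget + 500.
no_newline = ["x"] * 2000
print(len(truncate_reasoning(no_newline, max_thinking_tokens=100)))
```

The practical consequence is that the worst-case reasoning length is max_thinking_tokens + 500 rather than max_thinking_tokens, which is worth accounting for when sizing latency or output-token limits.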

Developers toggle reasoning on or off via enable_thinking=True or enable_thinking=False in the chat template. For reasoning-on mode, NVIDIA recommends temperature=0.6, top_p=0.95, and max_new_tokens of 1024 or higher; for reasoning-off mode, greedy search is recommended. Disabling reasoning reduces accuracy slightly on harder prompts but significantly reduces latency, a critical trade-off for customer support bots and edge deployments.
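As a minimal sketch, the recommended settings can be wrapped in a helper that builds a request body for an OpenAI-compatible chat endpoint. Several things here are assumptions rather than facts from the text: the helper name `build_chat_request` is hypothetical, passing `enable_thinking` through a `chat_template_kwargs` field assumes the serving engine forwards that field to the chat template, and using `temperature=0.0` to stand in for greedy search assumes the server treats zero temperature as greedy decoding. The code only builds a dictionary and does not contact any server.

```python
from typing import Any, Dict

MODEL_ID = "nvidia/NVIDIA-Nemotron-Nano-9B-v2-Japanese"  # Hugging Face ID from the article


def build_chat_request(user_text: str, enable_thinking: bool,
                       max_new_tokens: int = 1024) -> Dict[str, Any]:
    """Build a chat-completion style request body with the recommended settings."""
    request: Dict[str, Any] = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": user_text}],
        # Assumption: the server forwards this to the chat template.
        "chat_template_kwargs": {"enable_thinking": enable_thinking},
    }
    if enable_thinking:
        # Reasoning on: sampling settings recommended in the text.
        request["temperature"] = 0.6
        request["top_p"] = 0.95
        request["max_tokens"] = max(max_new_tokens, 1024)  # 1024 or higher
    else:
        # Reasoning off: greedy search (assumed equivalent to temperature 0).
        request["temperature"] = 0.0
        request["max_tokens"] = max_new_tokens
    return request


thinking_request = build_chat_request("東京の天気を調べてください。", enable_thinking=True)
fast_request = build_chat_request("東京の天気を調べてください。", enable_thinking=False,
                                  max_new_tokens=256)
```

Keeping the two modes behind one function makes it easy to route latency-sensitive traffic, such as a support bot, to the reasoning-off settings while sending harder prompts through the reasoning-on path.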

Primary Use Cases

This model targets developers building Japanese-language AI systems:

- AI Agents: multi-step agentic workflows using Japanese tool-calling and function execution

- RAG Systems: retrieval-augmented generation over Japanese enterprise document sets

- Chatbots and Copilots: instruction-following assistants for customer service and knowledge management

- Domain Customization: use as a base for domain-specific fine-tuning using NeMo Framework (NeMo Megatron-Bridge, NeMo AutoModel, NeMo-RL)

- General Instruction Tasks: standard Japanese NLP tasks and instruction following

Deployment Options

The model supports multiple inference engines: Hugging Face Transformers, vLLM (v0.11.2 or later), TensorRT-LLM (v1.1.0), SGLang, and Llama.cpp. It has been tested on NVIDIA A10G 24GB, A100 80GB, H100 80GB, DGX Spark, and Jetson Thor. For Docker-based vLLM deployment on Jetson Thor or DGX Spark, NVIDIA provides a dedicated vLLM container (26.01-py3).

Where can I access NVIDIA Nemotron Nano 9B v2 Japanese?

The model is available on Hugging Face at nvidia/NVIDIA-Nemotron-Nano-9B-v2-Japanese (released February 17, 2026) and through NVIDIA NIM microservices at build.nvidia.com. It supports Hugging Face Transformers (tested on 4.48.3), vLLM 0.11.2+, TensorRT-LLM v1.1.0, SGLang, and Llama.cpp.

Limitations and Considerations

The pre-training data cutoff is September 2024, so the model has no knowledge of events after that date without retrieval augmentation. On SWE-Bench and ARC-AGI 1/2, Qwen3-8B scores higher (0.075 vs 0.025 on SWE-Bench; 0.070 vs 0.060 on ARC-AGI), indicating the model has relative weaknesses in software engineering tasks and abstract reasoning compared to its primary competitor. Disabling reasoning (enable_thinking=False) slightly reduces accuracy on harder prompts.

Frequently Asked Questions (FAQs)

What is NVIDIA Nemotron Nano 9B v2 Japanese?

A 9B-parameter LLM trained from scratch by NVIDIA and fine-tuned for Japanese reasoning and tool-calling. Released February 17, 2026, it ranks first among all sub-10B models on Nejumi Leaderboard 4 and targets AI agents, RAG systems, and enterprise chatbots in Japanese.

What benchmark score does it achieve?

On Nejumi Leaderboard 4, it achieves a TOTAL_AVG of 0.711, outperforming Qwen3-8B’s 0.690 across ~40 Japanese benchmarks. It leads in BFCL v3 tool use (0.649 vs 0.608), Hallulens (0.960 vs 0.800), and JtruthfulQA (0.498 vs 0.433).

How fast is inference compared to competitors?

By inheriting the Nemotron-H Mamba-Transformer architecture, the model delivers up to 6× higher inference throughput than open-source alternatives of comparable size, and it is deployable on edge GPUs like the NVIDIA A10G.

Can it be used commercially?

Yes, governed by the NVIDIA Nemotron Open Model License Agreement. Available via Hugging Face and NVIDIA NIM microservices for production deployment without open-source-only restrictions.

What is the context window?

Up to 128K tokens for input and output, enabling long Japanese document processing, extended agent conversations, and multi-document RAG in a single pass.

What hardware does it support?

Verified on NVIDIA A10G 24GB, A100 80GB, H100 80GB, DGX Spark, and Jetson Thor. Inference engines supported: HF Transformers, vLLM (0.11.2+), TRT-LLM, SGLang, and Llama.cpp.

What is the training data cutoff?

September 2024. The model has no knowledge of events after that date without retrieval augmentation.