Key Takeaways

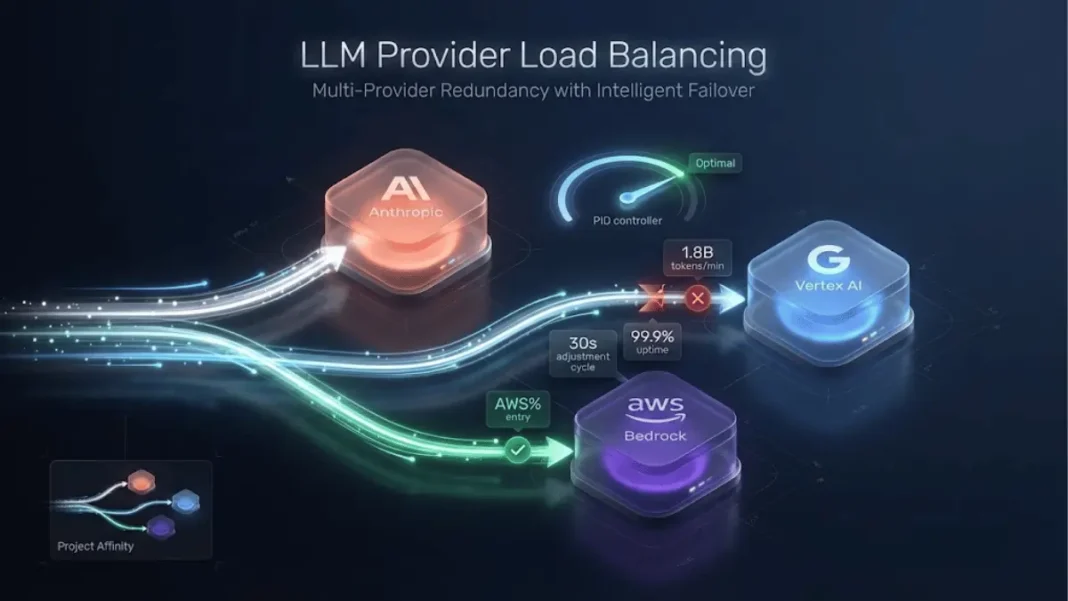

- Lovable processes roughly 1.8 billion tokens per minute at peak, using PID-controlled dynamic load balancing across providers

- Project-level affinity preserves prompt caching benefits by routing a project’s consecutive requests to the same provider

- Automated weight adjustment responds to provider failures within 30 seconds without manual intervention

- A prefilled-response mechanism recovers from mid-stream failures, so partial generations are not lost

Large language model providers fail constantly at scale. When your application processes 1.8 billion tokens per minute, as Lovable’s AI code generation platform does, provider outages, rate limits, and streaming failures become inevitable operational realities. Traditional load balancing breaks agent workflows because it destroys prompt caching, the optimization that lets subsequent LLM calls reuse already-processed context. Lovable engineered a solution using probabilistic fallback chains with project-level affinity and PID-controlled automatic weight adjustment.

The Prompt Caching Problem That Simple Failover Creates

Prompt caching reduces API costs by 50-90%, depending on implementation, and can significantly improve response times across providers. It works by letting the provider reuse the processed prefix of an earlier request when a new request begins with the same system prompt and context. Agent workflows rely heavily on prompt caching because they typically explore before generating code, retrieving context and state across many API calls.

Simple ranked fallback lists destroy these caching benefits. When your primary provider starts returning rate-limit errors, some requests succeed while others fall back to a secondary provider. This splits consecutive requests for the same project across different providers, and each switch forces the new provider to reprocess context that the other provider had already cached. The efficiency loss cascades because LLM providers impose rate limits primarily on non-cached tokens, so every cache miss consumes more of the limited capacity. Poor load balancing can therefore deplete capacity across all providers simultaneously, forcing applications to rate-limit end users.
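To make the switching cost concrete, here is a minimal sketch assuming one project sends nine consecutive requests and its primary provider rate-limits every third one. The helper names (`ranked_fallback`, `provider_switches`) and the provider names are illustrative, and a "switch" is used only as a rough proxy for a cache-breaking request, since each provider keeps its own cache.

```python
from typing import Callable


def ranked_fallback(chain: list[str], is_limited: Callable[[str], bool]) -> str:
    """Return the first provider in the ranked list that is not rate-limited."""
    for provider in chain:
        if not is_limited(provider):
            return provider
    raise RuntimeError("every provider is rate-limited")


def provider_switches(sequence: list[str]) -> int:
    """Count consecutive requests that landed on different providers."""
    return sum(1 for prev, cur in zip(sequence, sequence[1:]) if prev != cur)


# One project, nine requests; the primary rejects requests 1, 4 and 7.
chain = ["anthropic", "vertex"]
routed = [
    ranked_fallback(chain, lambda p, i=i: p == "anthropic" and i % 3 == 1)
    for i in range(9)
]
print(routed)
print(provider_switches(routed))  # 6
```

Three rate-limited requests out of nine produce six provider switches, because every fallback is followed by a switch back to the primary.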

Research on agent systems shows that deliberately controlling cache boundaries provides measurable benefits. Placing dynamic content at the end of system prompts and excluding tool results from cache boundaries prevents efficiency losses. Some providers create cache entries automatically once a prompt exceeds a minimum token threshold, but caching dynamic tool-call content writes entries that won’t be reused.
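The effect of placement can be sketched by comparing two consecutive agent requests, assuming prompts are modeled as lists of blocks and a cache hit covers the longest identical prefix. Real providers match on token prefixes, often in fixed-size increments; the blocks and the `build_prompt` and `shared_prefix_blocks` helpers are hypothetical stand-ins.

```python
def build_prompt(system: str, tools: str, history: list[str], dynamic: str,
                 dynamic_first: bool = False) -> list[str]:
    """Assemble prompt blocks with dynamic state either at the start or at the end."""
    static = [system, tools, *history]
    return [dynamic, *static] if dynamic_first else [*static, dynamic]


def shared_prefix_blocks(prev: list[str], curr: list[str]) -> int:
    """Number of leading blocks two prompts share (the reusable cached prefix)."""
    count = 0
    for a, b in zip(prev, curr):
        if a != b:
            break
        count += 1
    return count


history_1 = ["user: add login", "assistant: reading files"]
history_2 = history_1 + ["tool: contents of auth.ts"]  # the agent loop appends a tool result

for dynamic_first in (False, True):
    r1 = build_prompt("SYSTEM", "TOOLS", history_1, "state@t1", dynamic_first)
    r2 = build_prompt("SYSTEM", "TOOLS", history_2, "state@t2", dynamic_first)
    print("dynamic_first" if dynamic_first else "dynamic_last", shared_prefix_blocks(r1, r2))
```

Placing the changing state last keeps the system prompt, tools, and earlier history reusable (four blocks here); placing it first invalidates the whole prefix.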

What makes prompt caching critical for agent systems?

Agent systems execute dozens of tool calls during long-running sessions. System prompts and tool definitions remain largely static while user messages and conversation history change continuously, so caching the system prompt and tool definitions lets a substantial portion of each request be reused. Heroku’s automatic prompt caching caches only system prompts and tool definitions because these components deliver the highest reuse rates. Anthropic’s Claude caches expire by default after five minutes of inactivity, while OpenAI’s caching for GPT models uses longer time-to-live windows.

Multiple Fallback Chains With Project-Level Affinity

Lovable generates multiple fallback chains with different providers as first choices, rather than relying on a single global chain. Each project is assigned to one chain for a short time window, so consecutive requests for that project route consistently to the same provider. This project-level affinity preserves prompt caching while still handling provider failures gracefully.

The algorithm constructs fallback chains probabilistically using weighted sampling:

- Start with provider weights representing what fraction of traffic each should receive

- Sample the first provider where selection probability equals its weight proportion

- Sample the second provider from remaining options using their weight proportions

- Continue until all providers appear exactly once in the complete permutation
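These four steps amount to sequential weighted sampling without replacement. A minimal Python sketch, assuming weights are non-negative floats keyed by provider name; `sample_chain` is a hypothetical name, and the uniform fallback for zero-weight providers is an added assumption so that every provider still appears exactly once.

```python
import random


def sample_chain(weights: dict[str, float], rng: random.Random) -> list[str]:
    """Draw a fallback chain: a weighted random permutation of all providers."""
    remaining = dict(weights)
    chain: list[str] = []
    while remaining:
        names = list(remaining)
        if sum(remaining.values()) > 0:
            # Selection probability is proportional to weight among the providers left.
            pick = rng.choices(names, weights=[remaining[n] for n in names], k=1)[0]
        else:
            # Only zero-weight providers remain: order them uniformly at random.
            pick = rng.choice(names)
        chain.append(pick)
        del remaining[pick]  # no provider can appear twice
    return chain


rng = random.Random(42)  # seeded so chains are reproducible in tests
print(sample_chain({"anthropic": 0.6, "vertex": 0.3, "bedrock": 0.1}, rng))
```

Over many draws, a provider with weight 0.6 leads about 60% of chains, and every chain still lists all providers as fallbacks.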

This approach yields a distribution over chains in which higher-weighted providers appear earlier more often, while no provider appears twice in any single chain. Each project keeps its sampled permutation for several minutes, maintaining consistent routing.
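Pinning a project to its sampled chain can be sketched as a small time-bounded cache. This assumes a single process, a fixed 300-second window standing in for "several minutes", and a window measured from when the chain was assigned; `ProjectChainCache` and its parameters are hypothetical, and `make_chain` could wrap the weighted sampling steps described above.

```python
import time
from typing import Callable


class ProjectChainCache:
    """Hypothetical helper: pin each project to one fallback chain for a fixed window."""

    def __init__(self, make_chain: Callable[[], list[str]], ttl_seconds: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self._make_chain = make_chain
        self._ttl = ttl_seconds
        self._clock = clock
        # project_id -> (time the chain was assigned, chain)
        self._entries: dict[str, tuple[float, list[str]]] = {}

    def chain_for(self, project_id: str) -> list[str]:
        """Return the project's current chain, sampling a new one once the window lapses."""
        now = self._clock()
        entry = self._entries.get(project_id)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # still inside the window: same chain, same provider cache
        chain = self._make_chain()
        self._entries[project_id] = (now, chain)
        return chain
```

A multi-instance deployment would need a shared store with key expiry so every server routes a given project the same way, and it should evict expired entries rather than letting the dictionary grow.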

Multi-provider redundancy forms the foundation of production AI reliability. Using multiple providers such as OpenAI, Anthropic, and Google allows automatic switching when one fails. Salesforce’s Agentforce implements similar failover logic across OpenAI and Azure OpenAI models, with planned expansion to Anthropic and Gemini. The underlying principle: model-level volatility does not excuse system-level fragility.
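Whichever chain a project holds, a request walks it in order until a provider succeeds. This sketch assumes a caller-supplied `send(provider)` function and a hypothetical `ProviderError` exception standing in for rate limits, 5xx responses, and timeouts; neither comes from a real SDK.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


class ProviderError(Exception):
    """Hypothetical error for a failed provider call (rate limit, 5xx, timeout)."""


def call_with_fallback(chain: list[str], send: Callable[[str], T]) -> tuple[str, T]:
    """Try providers in chain order; return (provider, result) from the first success."""
    failures: dict[str, ProviderError] = {}
    for provider in chain:
        try:
            return provider, send(provider)
        except ProviderError as exc:
            failures[provider] = exc  # record the failure and move down the chain
    raise RuntimeError(f"all providers failed: {', '.join(failures)}")


def send(provider: str) -> str:
    """Stand-in client: the first provider is rate-limited in this example."""
    if provider == "anthropic":
        raise ProviderError("429 rate limited")
    return f"response from {provider}"


print(call_with_fallback(["anthropic", "vertex", "bedrock"], send))
```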

PID Controller Automatically Adjusts Provider Weights

Manual weight management proved unsustainable at Lovable’s scale. Providers count tokens differently (some include cached tokens in rate limits while others don’t), which makes direct capacity comparison impossible. Provider capacity also changes constantly due to incidents, rate limit adjustments, and shifting traffic patterns. When a provider started returning errors, engineers had to manually reduce its weight to decrease traffic, then adjust again when conditions improved.

Automated weight adjustment based on observed behavior replaced manual management. The system assigns each provider an initial availability of 1.0, representing full health. Every 30 seconds, it calculates a score from the successes and errors observed in that interval:

$$
\text{score} = \text{successes} - 200 \times \text{errors} + 1
$$

A PID controller adjusts provider availability to keep this score near zero. When the score goes negative (the error rate exceeds roughly 0.5%), the system reduces availability so the provider receives less traffic; positive scores trigger availability increases to send more traffic. The +1 bias term keeps the score slightly positive for a provider that receives no traffic, so its availability slowly recovers and a provider that failed earlier is never abandoned permanently. Availability values are clamped to the range 0 to 1.0.
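A minimal sketch of one control step, assuming the score takes the form successes − 200 × errors + 1 (consistent with the 0.5% break-even and +1 bias described here). The gains, the velocity-form update, and the normalization by request volume are illustrative assumptions rather than Lovable’s published parameters; the derivative gain defaults to zero, so this behaves as a PI controller until tuned.

```python
from dataclasses import dataclass

ERROR_WEIGHT = 200  # each error counts as much as 200 successes
BIAS = 1            # keeps an idle provider's score slightly positive


def health_score(successes: int, errors: int) -> float:
    """Assumed score form: successes - 200 * errors + 1."""
    return successes - ERROR_WEIGHT * errors + BIAS


@dataclass
class AvailabilityPID:
    """Hypothetical velocity-form PID that steers availability toward score ~ 0."""
    kp: float = 0.01   # illustrative gains, not tuned values
    ki: float = 0.005
    kd: float = 0.0
    availability: float = 1.0  # every provider starts at full health
    prev_error: float = 0.0
    prev_prev_error: float = 0.0

    def update(self, successes: int, errors: int) -> float:
        """Run one control step (e.g. every 30 s) and return the new availability."""
        total = successes + errors
        # Assumption: normalize by volume so one set of gains works at any traffic level.
        error = health_score(successes, errors) / max(total, 1)
        # Incremental PID: P acts on the change in error, I on the error itself,
        # D on the change of that change.
        delta = (
            self.kp * (error - self.prev_error)
            + self.ki * error
            + self.kd * (error - 2 * self.prev_error + self.prev_prev_error)
        )
        self.prev_prev_error, self.prev_error = self.prev_error, error
        # Clamp to [0, 1]; in velocity form this also prevents integral windup.
        self.availability = min(1.0, max(0.0, self.availability + delta))
        return self.availability


pid = AvailabilityPID()
# Healthy window, two windows with 10% errors, then two idle windows.
for successes, errors in [(1000, 0), (900, 100), (900, 100), (0, 0), (0, 0)]:
    print(round(pid.update(successes, errors), 3))
```

Availability drops while errors persist and then creeps back up during idle windows, which is the bias term at work.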

How does weight calculation follow provider preferences?

Provider availability values often sum to more than 1.0, indicating that aggregate capacity exceeds current demand. This slack lets the system send as much traffic as possible to preferred providers while leaving others underused. Lovable maintains a preferred provider order based on observed speed, model behavior, and operational requirements.

Weight calculation follows this waterfall logic:

- First provider weight equals its current availability

- Second provider weight equals the minimum of its availability and (1.0 − first provider weight)

- Third provider weight equals the minimum of its availability and (1.0 − sum of previous weights)

- Pattern continues for remaining providers
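The waterfall fits in a few lines. A minimal sketch, assuming availabilities in [0, 1] keyed by provider name and a preference list ordered from most to least preferred; `waterfall_weights` is a hypothetical name.

```python
def waterfall_weights(availability: dict[str, float], preference: list[str]) -> dict[str, float]:
    """Fill traffic share in preference order, each provider capped by its availability."""
    weights: dict[str, float] = {}
    remaining = 1.0  # share of traffic not yet assigned
    for provider in preference:
        weight = min(availability.get(provider, 0.0), remaining)
        weights[provider] = weight
        remaining = max(0.0, remaining - weight)
    return weights


availability = {"anthropic": 0.75, "vertex": 0.5, "bedrock": 0.9}
print(waterfall_weights(availability, ["anthropic", "vertex", "bedrock"]))
# {'anthropic': 0.75, 'vertex': 0.25, 'bedrock': 0.0}
```

Bedrock gets no traffic here despite high availability, because the two preferred providers already cover demand; it still appears in every chain as a fallback. If availabilities sum below 1.0, the weights do too, and sampling by weight proportion still routes all traffic.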

This ensures preferred providers receive as much traffic as they can handle before less-preferred options see any load. The approach resembles usage-based routing strategies that match request complexity to appropriate model tiers: straightforward tasks go to lighter models, while premium models are reserved for complex reasoning.

Handling Mid-Stream Failures With Prefilled Responses

Not all failures occur before streaming begins. Some requests start generating output and then fail partway through. Because users may already have seen the partial output, simply retrying on another provider would produce duplicated or inconsistent text.

Lovable takes advantage of the prefilled-response capability of Claude models. When a stream fails, the system appends the already-emitted partial response to the original request as the beginning of the assistant’s reply and sends the combined request to a Claude model. The model continues from the exact stopping point rather than restarting generation.
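A sketch of building the continuation request, assuming an Anthropic Messages-style payload (a `messages` list of role/content dicts) in which ending with an `assistant` turn acts as a prefill. The helper names and the `"claude-model-id"` value are hypothetical placeholders. Trailing whitespace is stripped because Anthropic’s API rejects a final assistant turn that ends in whitespace; `text_to_emit` then drops that whitespace from the continuation if the model repeats it, so the user does not see it twice.

```python
import copy


def build_continuation_request(original: dict, partial_text: str) -> dict:
    """Copy the original request and prefill the assistant turn with emitted text."""
    request = copy.deepcopy(original)  # never mutate the caller's request
    prefill = partial_text.rstrip()    # a final assistant turn must not end in whitespace
    if prefill:
        request["messages"].append({"role": "assistant", "content": prefill})
    return request


def text_to_emit(partial_text: str, continuation: str) -> str:
    """Drop whitespace the user already saw if the model repeats it."""
    stripped = partial_text[len(partial_text.rstrip()):]
    if stripped and continuation.startswith(stripped):
        return continuation[len(stripped):]
    return continuation


original = {
    "model": "claude-model-id",  # placeholder, not a real model name
    "max_tokens": 1024,
    "messages": [{"role": "user", "content": "Write an add() function."}],
}
partial = "def add(a, b):\n    "  # what the user saw before the stream died
retry = build_continuation_request(original, partial)
print(retry["messages"][-1])
```

If the original request already ended with an assistant prefill, the partial text should be appended to that turn instead; this sketch does not handle that case.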

Not all models support prefilled responses, and continuations may differ slightly in style or wording. In practice, this approach produces substantially better results than aborting responses or restarting from scratch. The recovery logic integrates naturally into the same fallback-chain mechanism used for request-level failures.

Circuit breakers prevent flip-flopping while a provider is only partially recovered. Temporarily disabling a failing provider, with exponentially increasing cool-down periods, avoids repeated errors while still probing for recovery. Model-specific Service Level Indicators (SLIs) detect degradation in individual AI models as early as possible and trigger switches to backup models. LiteLLM’s router handles load balancing across multiple deployments on Azure, Bedrock, and other providers, with automatic regional failover.
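A per-provider circuit breaker with exponential cool-downs can be sketched as follows. The class, its thresholds, and the injectable `clock` are hypothetical; this is the generic pattern, not LiteLLM’s or Lovable’s implementation.

```python
import time
from typing import Callable


class CircuitBreaker:
    """Hypothetical per-provider breaker with exponentially growing cool-downs."""

    def __init__(self, failure_threshold: int = 5, base_cooldown: float = 10.0,
                 max_cooldown: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.base_cooldown = base_cooldown
        self.max_cooldown = max_cooldown
        self.clock = clock
        self.failures = 0      # consecutive failures while closed
        self.trips = 0         # consecutive trips without a success in between
        self.open_until = 0.0  # provider is skipped until this time

    def allow(self) -> bool:
        """True if the provider may receive traffic (closed, or cool-down elapsed)."""
        return self.clock() >= self.open_until

    def record_success(self) -> None:
        """A success closes the breaker and resets the backoff."""
        self.failures = 0
        self.trips = 0

    def record_failure(self) -> None:
        now = self.clock()
        self.failures += 1
        # Once tripped, the breaker stays on a short leash: any failure after the
        # cool-down re-opens it immediately until a success resets it.
        probing = self.trips > 0 and now >= self.open_until
        if probing or self.failures >= self.failure_threshold:
            cooldown = min(self.max_cooldown, self.base_cooldown * 2 ** self.trips)
            self.open_until = now + cooldown
            self.trips += 1
            self.failures = 0
```

Doubling the cool-down on each consecutive trip is what stops a provider that recovers only briefly from flip-flopping back into rotation.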

Weighted Round-Robin vs Dynamic Availability

Weighted round-robin is the simplest load balancing strategy. It assigns a static weight to each model or endpoint: for example, sending 80% of GPT-4 traffic to Azure and 20% to OpenAI. The strategy works well for canary deployments or for spreading load across endpoints whose capacity is known and stable.
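For contrast, a static 80/20 split is often implemented with the "smooth" weighted round-robin used by nginx, which interleaves picks instead of sending bursts to one endpoint. This is a sketch of that general technique, not a specific gateway’s code; integer weights 4:1 stand in for 80/20.

```python
from collections import Counter
from itertools import islice
from typing import Iterator


def smooth_weighted_round_robin(weights: dict[str, int]) -> Iterator[str]:
    """Yield endpoints in proportion to static weights, interleaving picks."""
    total = sum(weights.values())
    current = {name: 0 for name in weights}
    while True:
        for name, weight in weights.items():
            current[name] += weight  # every endpoint earns its weight each round
        chosen = max(current, key=current.get)  # highest running score wins
        current[chosen] -= total  # the winner pays back the total
        yield chosen


picks = list(islice(smooth_weighted_round_robin({"azure": 4, "openai": 1}), 10))
print(picks)
print(Counter(picks))  # Counter({'azure': 8, 'openai': 2})
```

The weights never change on their own: if Azure starts rate-limiting, this loop keeps sending it 80% of traffic until someone edits the numbers.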

Static weights break down when provider capacity fluctuates. Lovable’s dynamic availability approach responds to real-time conditions rather than assumptions. The PID controller automatically detects unhealthy endpoints and adjusts traffic within 30-second intervals. This reactive system removes the deployment friction that hardcoded percentages create.

Shifting from an 80/20 to a 50/50 split with static weights requires a code deployment. A/B testing different providers requires feature flags. Cost optimization initiatives force rewrites of routing logic. Dynamic availability eliminates these operational burdens by continuously adapting to measured performance.

What trade-offs exist between simplicity and resilience?

Weighted round-robin offers operational simplicity and predictable behavior. Organizations can reason about traffic patterns easily and test capacity planning with known distributions. Dynamic systems introduce complexity through PID tuning, scoring algorithms, and monitoring requirements.

The resilience benefits justify complexity at scale. Modern AI applications face strict SLAs requiring 99.9% uptime and tail latencies below 600 ms. Unmitigated model failures or rate-limiting events cascade across entire stacks, jeopardizing these targets. Automatic detection and ejection of unhealthy endpoints, fallback paths, and proactive balancing to avoid rate limits protect SLAs effectively.
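The arithmetic behind a 99.9% uptime target shows why reaction time matters. A minimal sketch, assuming a 30-day month; `downtime_budget_minutes` is a hypothetical helper.

```python
def downtime_budget_minutes(availability_target: float, days: float = 30.0) -> float:
    """Minutes of allowed downtime over `days` for a given availability target."""
    return (1.0 - availability_target) * days * 24 * 60


print(round(downtime_budget_minutes(0.999), 1))   # 43.2
print(round(downtime_budget_minutes(0.9999), 2))  # 4.32
```

At 99.9%, a single unmitigated hour-long provider outage would exhaust the month’s budget on its own, whereas shifting traffic within one 30-second control cycle would consume only a small fraction of it.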

Cost savings can reach 60% when easy prompts are routed to cheaper models and premium endpoints are reserved for complex queries. LLM providers bill by token and model tier, so overusing premium models is expensive. Routing based on task complexity reduces spending without sacrificing output quality.

Real-World Performance at 1.8 Billion Tokens Per Minute

Lovable’s implementation rarely suffers outages caused by LLM provider issues, despite its scale. The system processes roughly 1.8 billion tokens per minute across projects at peak traffic. Routine challenges include provider outages, rate limiting, slow responses, and streams that die mid-generation.

The load balancer grew from specific constraints: spreading traffic across multiple providers while accounting for preferences and fluctuating capacities without constantly switching providers for the same project. Multiple fallback chains with project-level affinity preserve prompt caching benefits. Automatic provider re-weighting based on live traffic maximizes preferred provider capacity and backs off smoothly when errors appear.

Lovable built a simulation app that demonstrates load balancer behavior under various scenarios. The tool allows experimentation with provider capacity and failure conditions, showing how fallback chains, weights, and stickiness interact over time. The simulation reveals how the PID controller responds to capacity changes and how project affinity maintains caching across request sequences.

LLM observability platforms track these performance metrics across production systems, monitoring request latency, token usage, error rates, and provider health indicators. Widely used observability platforms in 2026 include Maxim AI, Langfuse, Arize, LangSmith, and Helicone for monitoring and evaluation.

Implementation Considerations for Production Systems

Production LLM load balancing requires health monitoring, logging, and regular failover testing. Always monitor provider health metrics and log all failures for analysis. Implement exponential backoff and retries for transient errors that resolve quickly. Test failover logic regularly by simulating provider outages in staging environments.
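Exponential backoff for transient errors can be sketched as a small retry wrapper. This assumes a hypothetical `TransientError` marking retryable failures and uses "full jitter" (a random wait up to the current cap); the `sleep` and `rng` parameters are injectable so the behavior can be tested, including in staging outage drills.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures such as 429s or brief 5xx errors."""


def retry_with_backoff(call: Callable[[], T], max_attempts: int = 4,
                       base_delay: float = 0.5, max_delay: float = 8.0,
                       sleep: Callable[[float], None] = time.sleep,
                       rng: Callable[[], float] = random.random) -> T:
    """Retry `call` on TransientError with capped exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 0
    while True:
        try:
            return call()
        except TransientError:
            attempt += 1
            if attempt >= max_attempts:
                raise  # out of attempts: surface the error (or fall back to the next provider)
            cap = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(rng() * cap)  # full jitter: wait a random time in [0, cap)
```

Retries handle short blips on one provider; anything that outlasts the retry budget should fall through to the next provider in the project’s chain.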

Documentation proves critical for maintainability as teams change. Complex routing logic, PID controller tuning parameters, and fallback chain generation algorithms need clear explanations. Circuit breaker thresholds, availability score calculations, and weight adjustment formulas should include rationale documentation.

Portkey’s AI Gateway centralizes LLM traffic management across multiple providers. The platform handles routing rules automatically based on defined thresholds. Setting GPT-4 usage limits or routing simple tasks to smaller models happens through configuration rather than code. The gateway approach separates routing concerns from application logic.

TrueFoundry’s load balancing architecture emphasizes scaling levers that allow adding new endpoints with zero downtime. Key objectives include minimizing average and tail latency, providing continuous service through failure rerouting, optimizing costs, and dynamically scaling compute resources. The platform balances traffic proactively to avoid vendor-side rate limits before they impact users.

Limitations and Future Expansion

Lovable’s current implementation uses Anthropic’s API, Google Cloud’s Vertex AI, and Amazon Bedrock as providers. The prefilled-response recovery mechanism depends specifically on Claude model capabilities. Organizations using different provider combinations would need to adapt the continuation logic.

The 30-second adjustment interval for PID controller updates balances responsiveness with stability. Faster intervals could react more quickly to sudden provider degradation but risk overreacting to transient issues. Slower intervals improve stability but delay response to legitimate capacity problems.

Project-level affinity windows span several minutes to preserve caching. This duration assumes typical agent workflows complete within that timeframe. Applications with longer-running sessions or extremely short burst patterns might need different affinity window tuning.

The 0.5% error-rate threshold that triggers availability reduction (set by the 200× error weight in the scoring formula) reflects Lovable’s reliability requirements. More sensitive applications might lower this threshold, while systems tolerating higher error rates could raise it. Organizations should calibrate these parameters based on their specific SLA commitments.
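The link between the 200× weight and the 0.5% threshold can be derived directly. Writing $s$ for successes and $e$ for errors in one interval, and ignoring the +1 bias at high volume, the score crosses zero when

$$
s - 200\,e = 0 \quad\Longrightarrow\quad r^{*} = \frac{e}{s + e} = \frac{e}{201\,e} = \frac{1}{201} \approx 0.5\%
$$

More generally, an error weight $w$ gives a break-even error rate $r^{*}$, and inverting that relation shows which weight a target threshold requires:

$$
r^{*} = \frac{1}{w + 1} \qquad\Longleftrightarrow\qquad w = \frac{1}{r^{*}} - 1
$$

Tightening the threshold to 0.1%, for example, would mean an error weight of 999.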

Frequently Asked Questions (FAQs)

What is LLM provider load balancing?

LLM provider load balancing distributes API requests across multiple language model providers to prevent outages, manage rate limits, and optimize costs. Systems route traffic to providers like Anthropic, OpenAI, and Google based on availability, performance, and preferences while handling failures automatically.

How does prompt caching improve agent performance?

Prompt caching reuses processed system prompts and tool definitions from previous requests, reducing costs by 50-90% depending on implementation. Agent workflows benefit significantly because exploration phases retrieve context repeatedly, making cached components valuable.

What is a PID controller in load balancing?

A PID (proportional-integral-derivative) controller adjusts provider availability automatically. In Lovable’s setup it recomputes a health score every 30 seconds from success and error counts, and when the error rate exceeds the threshold (about 0.5%), it reduces traffic to that provider without manual intervention.

Why do simple fallback chains break prompt caching?

Simple fallback chains send requests to a different provider whenever the primary option fails, splitting consecutive requests for the same project across multiple providers. This forfeits caching benefits, because a provider’s cache only helps requests that reach that same provider.

How do you handle LLM streaming failures mid-response?

Append the partial response that was already generated to the original request as a prefilled assistant turn, then send it to a model that supports prefilled responses. Claude models can then continue from the exact stopping point rather than restarting generation.

What are the main benefits of multi-provider redundancy?

Multi-provider redundancy prevents complete service outages when individual providers fail, maintains SLA compliance during capacity issues, enables cost optimization through intelligent routing, and provides negotiating leverage with vendors.

How often should load balancing weights update?

Update intervals balance responsiveness with stability. Lovable uses 30-second cycles to react quickly to degradation while avoiding overreaction to transient issues. Organizations should tune intervals based on traffic patterns and reliability requirements.

What metrics indicate load balancing effectiveness?

Key metrics include error rates below 0.5%, cache hit rates at or above baseline, p95/p99 latency within SLA targets, provider utilization that matches the assigned weights, and fast recovery under simulated failures.