Quick Brief

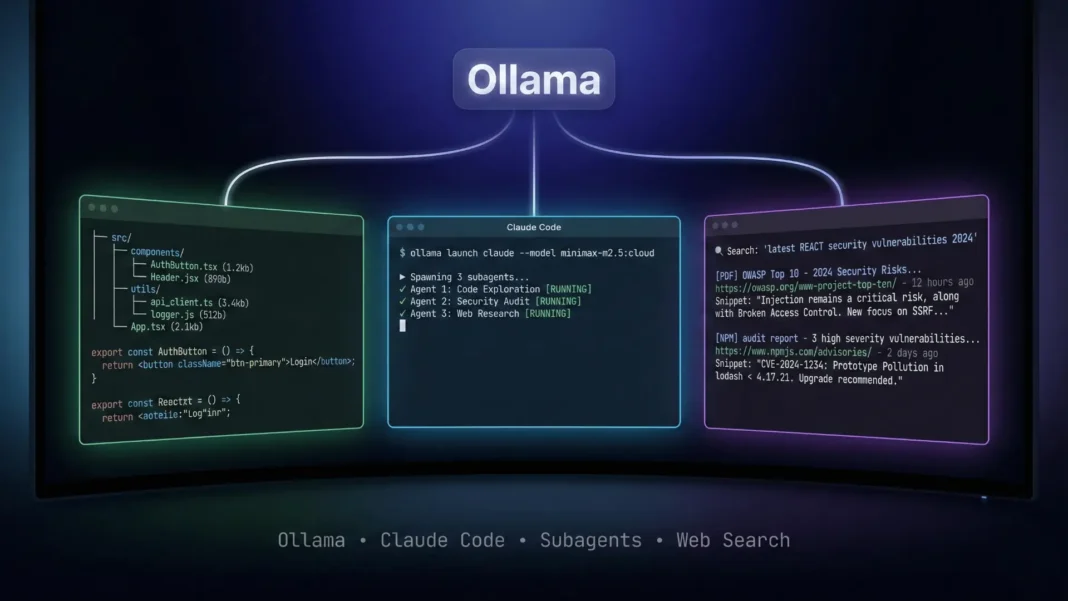

- Ollama added native subagents and web search to Claude Code on February 16, 2026, with no MCP setup needed

- Parallel subagents handle file search, code exploration, and research in fully isolated contexts

- Web search integrates directly into Ollama’s Anthropic compatibility layer with zero additional configuration

- Supported cloud models (minimax-m2.5, glm-5, kimi-k2.5) trigger subagents automatically

Ollama eliminated one of AI-assisted coding’s biggest friction points on February 16, 2026: parallel task management and live web search now work natively inside Claude Code, with zero MCP servers or API keys required. Developers gain full agentic coding workflows using Ollama’s cloud models without provisioning separate infrastructure or authentication. This analysis covers exactly what changed, which models perform best, and how to activate both features in under two minutes.

What Ollama Subagents Actually Do in Claude Code

Traditional AI coding assistants process tasks sequentially: one request fills the context window, and every side task leaves noise the model carries forward. Ollama’s subagent implementation changes this by spawning isolated agent instances that run in parallel, each in its own context. Results return independently, keeping the main session clean and focused.

Each subagent handles a bounded scope: file search in one context, code exploration in a second, research in a third, all running simultaneously. Longer coding sessions stay productive because side tasks no longer fill the primary context with noise.

What this means in practice: a full codebase audit that previously required three sequential prompts, each consuming context, now runs as a single command with consolidated parallel outputs.
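
The isolation idea can be illustrated with a toy model. The sketch below is not Ollama’s implementation: `run_subagent`, `run_in_parallel`, and the task names are hypothetical stand-ins, and each "context" is just a Python list. It only shows that intermediate notes stay inside each worker while the main session receives one short summary per task.

```python
from concurrent.futures import ThreadPoolExecutor


def run_subagent(task: str) -> dict:
    """Hypothetical worker: does its side work in a private context."""
    context = [f"start: {task}"]  # private to this subagent
    for step in range(3):  # stand-in for searches, file reads, and so on
        context.append(f"{task}: intermediate note {step}")
    # Only a short summary leaves the subagent; the notes are discarded.
    return {"task": task, "summary": f"{task} done", "notes_used": len(context)}


def run_in_parallel(tasks: list[str]) -> list[dict]:
    """Fan tasks out to worker threads and collect results in input order."""
    with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
        return list(pool.map(run_subagent, tasks))


main_context = ["user: audit the codebase"]
results = run_in_parallel(["file search", "code exploration", "research"])
main_context.extend(r["summary"] for r in results)
print(main_context)
```

The main context grows by three summary lines, while the twelve intermediate notes produced by the workers never reach it.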

What are Ollama subagents in Claude Code?

Ollama subagents are parallel AI instances launched within a Claude Code session. Each operates in its own isolated context, handling tasks like file search, code exploration, or live research simultaneously. This prevents context window noise and keeps longer coding sessions productive without sequential task bottlenecks.

Web Search Built Into Every Session, No MCP Server

Before this update, adding real-time web access to Claude Code required configuring Model Context Protocol (MCP) servers and provisioning additional API keys. Ollama’s release embeds web search directly into the Anthropic compatibility layer. When a model needs current information, Ollama handles the search and returns the results directly, with no additional configuration required.

Subagents extend this further: individual parallel agents can each leverage web search to research topics simultaneously and return actionable results to the main session. This enables workflows like three simultaneous research agents comparing competitor pricing, each conducting live searches independently in their own context.

Does Ollama web search require an MCP server to configure?

No. Ollama’s web search is built directly into the Anthropic compatibility layer as of February 16, 2026. When a model determines it needs current data, Ollama handles the search internally. No MCP server setup, no additional API keys, and no extra configuration is required.

Three Cloud Models That Trigger Subagents Automatically

Not every model activates subagent behavior on its own. Ollama identifies three cloud models that intelligently decide when to spawn agents based on task complexity:

- minimax-m2.5:cloud: Achieves 80.2% on SWE-Bench Verified, 51.3% on Multi-SWE-Bench, and 76.3% on BrowseComp, reaching performance comparable to the Claude Opus series. Completes complex agentic tasks in 22.8 minutes on average, on par with Claude Opus 4.6’s 22.9 minutes, while consuming fewer tokens per task (3.52M vs. 3.72M). Demonstrates higher decision maturity in search and tool-use tasks, saving roughly 20% in tool-call rounds compared to M2.1.

- glm-5:cloud: Listed by Ollama as a recommended cloud model for subagent workflows; auto-triggers subagents when task complexity warrants it. No additional public benchmark data is currently available on Ollama’s library page.

- kimi-k2.5:cloud: A native multimodal agentic model trained on approximately 15 trillion mixed visual and text tokens, supporting both text and image inputs with a 256K context window.

Any model on Ollama’s cloud also supports manual subagent triggering. Instruct the model to “use subagents,” “spawn subagents,” or “create subagents” and it complies regardless of whether it auto-triggers.

Which Ollama models support subagents automatically in Claude Code?

Three models auto-trigger subagents in Claude Code: minimax-m2.5:cloud, glm-5:cloud, and kimi-k2.5:cloud. Any other Ollama cloud model also supports subagents when explicitly instructed. Use prompts like “spawn subagents” or “create subagents to handle these tasks in parallel” to activate the behavior.
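
The difference between auto-triggering and explicit instruction can be expressed as a small prompt helper. This is a sketch under stated assumptions: `build_prompt` and `AUTO_TRIGGER_MODELS` are hypothetical names introduced here, `some-other-model:cloud` is a placeholder rather than a real model tag, and the wording "use subagents to ..." is just one of the phrasings quoted above.

```python
# Model tags named in the text as auto-triggering subagents.
AUTO_TRIGGER_MODELS = {"minimax-m2.5:cloud", "glm-5:cloud", "kimi-k2.5:cloud"}


def build_prompt(model: str, task: str) -> str:
    """Return the task as-is for auto-triggering models, else ask explicitly."""
    if model in AUTO_TRIGGER_MODELS:
        return task
    # Other cloud models need an explicit instruction to spawn subagents.
    return f"use subagents to {task}"


print(build_prompt("glm-5:cloud", "map the database queries"))
print(build_prompt("some-other-model:cloud", "map the database queries"))
```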

minimax-m2.5 Benchmarks: What the Numbers Mean

MiniMax M2.5 is trained with large-scale reinforcement learning across hundreds of thousands of real-world environments and 10+ programming languages including Python, Go, C, C++, TypeScript, Rust, Kotlin, Java, JavaScript, PHP, Lua, Dart, and Ruby. The model handles the full development lifecycle: system design from zero, environment setup, feature iteration, code review, and testing across Web, Android, iOS, Windows, and Mac.

| Benchmark | M2.5 Score | Claude Opus 4.6 Score |
|---|---|---|
| SWE-Bench Verified (On Droid) | 79.7% | 78.9% |
| SWE-Bench Verified (On OpenCode) | 76.1% | 75.9% |
| SWE-Bench Verified (Overall) | 80.2% | |
| Multi-SWE-Bench | 51.3% | |
| BrowseComp | 76.3% | |
| Avg. task completion time | 22.8 min | 22.9 min |
| Tokens per task | 3.52M | 3.72M |

M2.5 also achieves a 59.0% average win rate against mainstream models on advanced office productivity tasks, including Word, PowerPoint, and Excel financial modeling, as measured with the GDPval-MM pairwise comparison framework.

How does minimax-m2.5 compare to Claude Opus 4.6 for coding tasks?

MiniMax M2.5 achieves 80.2% on SWE-Bench Verified and completes agentic coding tasks in 22.8 minutes on average, on par with Claude Opus 4.6’s 22.9 minutes. M2.5 also uses fewer tokens per task (3.52M vs. 3.72M), according to MiniMax’s own benchmarks as reported on Ollama’s model card.
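
To put the time and token figures in proportion, the relative differences can be worked out directly from the reported numbers. The variable names below are illustrative, and the inputs are the vendor-reported values quoted above, not independent measurements.

```python
# Reported figures: tokens in millions per task, time in minutes per task.
m25_tokens_m, opus_tokens_m = 3.52, 3.72
m25_minutes, opus_minutes = 22.8, 22.9

# Relative savings of M2.5 versus Claude Opus 4.6, in percent.
token_saving_pct = (opus_tokens_m - m25_tokens_m) / opus_tokens_m * 100
time_saving_pct = (opus_minutes - m25_minutes) / opus_minutes * 100

print(f"{token_saving_pct:.1f}% fewer tokens, {time_saving_pct:.1f}% less time")
# prints "5.4% fewer tokens, 0.4% less time"
```

On these figures the token gap is about 5%, while the completion times are effectively equal.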

How to Activate Subagents and Web Search

Setup takes three steps and requires nothing beyond an existing Ollama installation:

- Install or update Ollama to v0.14.0 or later (the minimum for Anthropic API compatibility)

- Launch Claude Code with a cloud model:

ollama launch claude --model minimax-m2.5:cloud

- Issue a subagent prompt, using any of these verified example prompts from Ollama’s official blog:

> spawn subagents to explore the auth flow, payment integration, and notification system

> audit security issues, find performance bottlenecks, and check accessibility in parallel with subagents

> create subagents to map the database queries, trace the API routes, and catalog error handling patterns
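
The first two steps can be sketched as a small pre-flight check. This is an illustration, not an official tool: `parse_version`, `meets_minimum`, and `launch_command` are hypothetical helpers, and the exact text of the version string that Ollama prints is an assumption, which is why the parser only looks for the first `x.y.z` pattern it finds.

```python
import re

MIN_VERSION = (0, 14, 0)  # minimum version named in the first setup step


def parse_version(text: str) -> tuple:
    """Extract the first x.y.z version number found in the text."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version number in {text!r}")
    return tuple(int(part) for part in match.groups())


def meets_minimum(version_text: str) -> bool:
    """True if the reported version is at least MIN_VERSION."""
    return parse_version(version_text) >= MIN_VERSION


def launch_command(model: str) -> list[str]:
    """Argument list for the launch command shown in the second step."""
    return ["ollama", "launch", "claude", "--model", model]


# The sample string is an assumed output format, used only for illustration.
print(meets_minimum("ollama version is 0.14.0"))
print(" ".join(launch_command("minimax-m2.5:cloud")))
```

Comparing tuples of integers avoids the string-comparison trap where "0.9.0" would sort after "0.14.0".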

Web search activates automatically when the model needs current information. To direct it explicitly with parallel research agents:

> research the postgres 18 release notes, audit our queries for deprecated patterns, and create migration tasks

> create 3 research agents to research how our top 3 competitors price their API tiers, compare against our current pricing, and draft recommendations

For local model setups without cloud features, configure the Anthropic compatibility layer directly:

    export ANTHROPIC_AUTH_TOKEN=ollama
    export ANTHROPIC_BASE_URL=http://localhost:11434
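
When Claude Code is started from a script rather than an interactive shell, the same two variables can be set programmatically. The sketch below assumes a hypothetical helper named `ollama_env`; only the variable names and values come from the text above.

```python
import os


def ollama_env(base_url: str = "http://localhost:11434") -> dict[str, str]:
    """Copy the current environment and add the two variables from the text."""
    env = dict(os.environ)  # copy, so the parent process is left untouched
    env["ANTHROPIC_AUTH_TOKEN"] = "ollama"
    env["ANTHROPIC_BASE_URL"] = base_url
    return env


env = ollama_env()
print(env["ANTHROPIC_BASE_URL"])
```

The returned dictionary can be passed as the `env` argument of `subprocess.run` so that the settings apply only to the child process.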

Limitations Worth Knowing Before You Rely on This

Cloud model dependency introduces network latency compared to locally run models, because each subagent task requires round-trip calls to Ollama’s cloud infrastructure. The three recommended models (minimax-m2.5, glm-5, kimi-k2.5) are cloud-only; local Ollama models require manual subagent prompting and do not include built-in web search. Developers operating in air-gapped, privacy-sensitive, or regulated environments cannot use these cloud features without explicit security policy review.

Frequently Asked Questions (FAQs)

Can I use Ollama subagents with local models, not cloud models?

Local Ollama models do not include built-in web search and will not automatically spawn subagents. You can manually instruct any model to “use subagents” or “spawn subagents” for parallel task handling, but automatic web search is limited to Ollama’s cloud model layer as of February 16, 2026.

Do I need an Anthropic API key to use Claude Code with Ollama?

No. Ollama provides Anthropic API compatibility requiring no active Anthropic account or billing. Set ANTHROPIC_AUTH_TOKEN=ollama and ANTHROPIC_BASE_URL=http://localhost:11434 as environment variables. This configuration supports local model usage and works from Ollama v0.14.0 onwards.

How does minimax-m2.5 compare to Claude Opus 4.6 for coding tasks?

MiniMax M2.5 achieves 80.2% on SWE-Bench Verified and completes agentic coding tasks in 22.8 minutes on average, on par with Claude Opus 4.6’s 22.9 minutes, according to MiniMax’s own benchmarking. M2.5 also consumes fewer tokens per task (3.52M vs. 3.72M), reducing costs on extended agentic sessions.

What tasks are best suited for Ollama subagents in Claude Code?

Tasks that are structurally independent and benefit from parallel execution: simultaneous security and performance audits, multi-source competitor research, and component-level code mapping (auth, payments, and notifications running concurrently). Tasks with shared state or sequential dependencies should stay in the main context to avoid conflicts.
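
One way to apply this rule of thumb is to separate tasks by their dependencies before deciding what to hand to subagents. The sketch below is illustrative only: `split_tasks` is a hypothetical helper, and the task names and dependency map are made up for the example.

```python
def split_tasks(depends_on: dict[str, set[str]]) -> tuple[list[str], list[str]]:
    """Split tasks into (parallel candidates, tasks to keep in the main context).

    A task is a parallel candidate only if it depends on nothing and
    no other task depends on it.
    """
    needed_by_others = set().union(*depends_on.values())
    parallel, sequential = [], []
    for task, deps in depends_on.items():
        if deps or task in needed_by_others:
            sequential.append(task)
        else:
            parallel.append(task)
    return parallel, sequential


tasks = {
    "security audit": set(),
    "performance audit": set(),
    "accessibility check": set(),
    "apply schema migration": set(),
    "update queries": {"apply schema migration"},
}
parallel, sequential = split_tasks(tasks)
print(parallel)
print(sequential)
```

Here the three audits are independent and suit subagents, while the migration and the query update form a chain that belongs in the main session.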

Is kimi-k2.5 capable of processing images alongside code in Claude Code?

Yes. Kimi K2.5 is a native multimodal agentic model trained on approximately 15 trillion mixed visual and text tokens, supporting both text and image inputs with a 256K context window. This makes it practical for tasks involving UI screenshots, architectural diagrams, or visual documentation alongside code.

How does Ollama’s built-in web search work without MCP configuration?

Ollama’s Anthropic compatibility layer handles web search calls internally. When a model determines it needs current data, Ollama performs the search and returns results directly to the model’s context, with no setup required. Individual subagents can also trigger web searches in parallel, each returning independent results.

Can I use any Ollama model for subagents, not just the three recommended ones?

Yes. While minimax-m2.5, glm-5, and kimi-k2.5 trigger subagents automatically based on task complexity, any model on Ollama’s cloud supports subagents when explicitly instructed. Use prompts like “use subagents,” “spawn subagents,” or “create subagents” to activate the behavior on any compatible cloud model.

Can subagents run web searches themselves, or only the main agent?

Both. The main Claude Code session and individual subagents can each trigger web search independently. This enables parallel research workflows: for example, three subagents simultaneously researching how top competitors price their API tiers, comparing against internal pricing, and drafting recommendations in a single session.