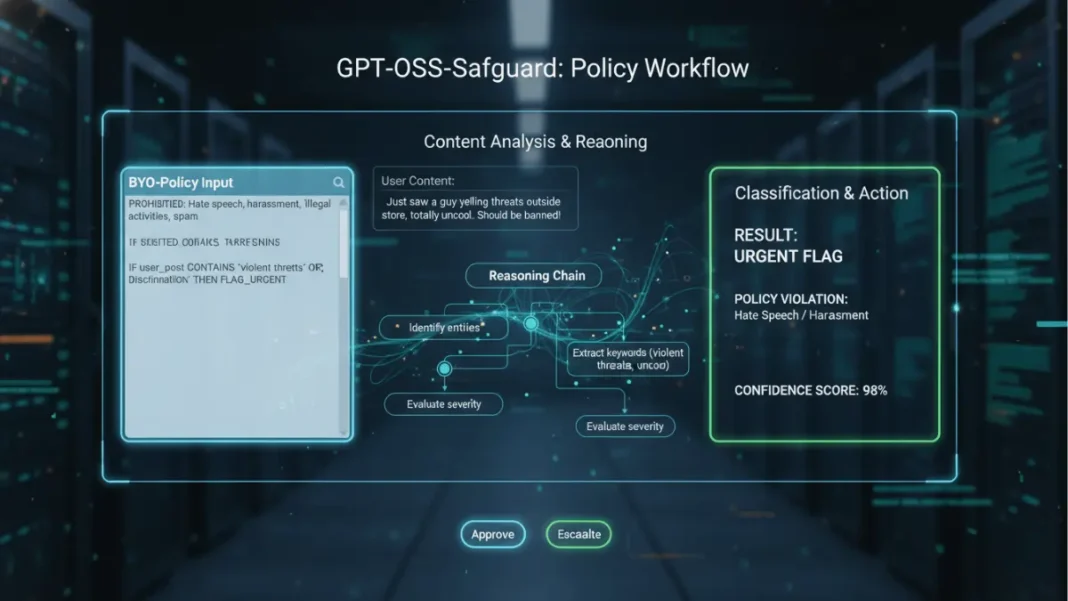

OpenAI released GPT-OSS-Safeguard on October 28, 2025: a pair of open-weight reasoning models that let developers enforce their own content policies instead of relying on pre-built safety rules. Unlike traditional moderation systems that classify content against fixed categories, these models interpret custom, written policies at inference time and explain their decisions through transparent chain-of-thought reasoning.

The release includes two model sizes, GPT-OSS-Safeguard-120b and GPT-OSS-Safeguard-20b, both available under the permissive Apache 2.0 license for free use, modification, and deployment. Developed in partnership with Discord, SafetyKit, and Robust Open Online Safety Tools (ROOST), these models represent OpenAI’s first open release of core safety infrastructure used in production systems.


What Is GPT-OSS-Safeguard?

GPT-OSS-Safeguard is an open-weight reasoning model trained specifically for safety classification: it labels text content according to policies you supply. As fine-tuned versions of the GPT-OSS foundation models, these variants use their reasoning capabilities to evaluate content against developer-provided guidelines rather than static, pre-trained categories.

Quick Answer: GPT-OSS-Safeguard models interpret your written safety policies at inference time to classify user messages, AI completions, or full chat histories, then show you exactly how they reached each decision through transparent reasoning chains.

Two Model Variants: 120b and 20b Parameters

The 120b parameter version delivers higher accuracy and handles more nuanced policy interpretations, while the 20b variant offers faster inference with lower compute requirements. The smaller model uses approximately 12 GB of RAM, whereas the larger 120b can require up to 65 GB depending on the implementation. Both models are available in GGUF and MLX formats for flexible deployment across different hardware configurations.

Key Difference from Traditional Moderation Models

Traditional safety classifiers like LlamaGuard or ShieldGemma come with built-in definitions of “unsafe” content and fixed policy taxonomies that cannot be modified without retraining. GPT-OSS-Safeguard instead functions as a policy-following model that reliably interprets and enforces your own written standards while explaining why it made each decision. This “bring-your-own-policy” approach originated from OpenAI’s internal safety systems and provides significantly more flexibility than training classifiers to indirectly infer decision boundaries from thousands of labeled examples.

How GPT-OSS-Safeguard Works

The models use reasoning to directly interpret developer-provided policies at inference time, classifying user messages, completions, and full chats according to specific safety requirements. This architecture separates the classification model from the policy definitions, allowing teams to iterate on safety rules without expensive model retraining cycles.

Bring-Your-Own-Policy Architecture

Developers write explicit safety policies in natural language that define their taxonomy, thresholds, and contextual rules. The policy is provided at inference time rather than trained into the model weights, enabling rapid iteration and A/B testing of alternative definitions directly in production. A product review platform could define policies for detecting fraudulent reviews, while a gaming forum might create different rules for identifying cheating discussions, all using the same underlying model.
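A minimal Python sketch of the idea follows: the policy is ordinary data sent alongside each item, so two platforms can share one model. It assumes a chat-style request with the policy in the system message and the content in the user message; the policy texts and the `build_messages` helper are hypothetical illustrations rather than an official API.

```python
from typing import Dict, List

# Hypothetical policy texts: each platform writes its own rules in plain language.
REVIEW_FRAUD_POLICY = """\
Classify whether a product review is fraudulent.
VIOLATES (1): reviews written in exchange for payment or free products without
disclosure, or reviews posted by the seller about their own product.
SAFE (0): genuine customer opinions, including harsh or unusual ones.
Answer with 0 or 1."""

GAME_CHEATING_POLICY = """\
Classify whether a forum post promotes cheating in online matches.
VIOLATES (1): sharing, selling, or requesting aimbots, wallhacks, or exploit tools.
SAFE (0): discussing anti-cheat systems, reporting cheaters, or single-player mods.
Answer with 0 or 1."""


def build_messages(policy: str, content: str) -> List[Dict[str, str]]:
    """Pair a policy with the content to classify (assumed chat layout)."""
    return [
        {"role": "system", "content": policy},  # the rules travel with the request
        {"role": "user", "content": content},   # the item being judged
    ]


# The same model weights serve both platforms; only the policy string differs.
review_request = build_messages(REVIEW_FRAUD_POLICY, "Got this free for a 5-star review, love it!")
forum_request = build_messages(GAME_CHEATING_POLICY, "Anyone selling a working wallhack?")
```

Swapping or revising rules means editing a string, which is why A/B testing alternative definitions does not require retraining.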

Chain-of-Thought Reasoning Process

GPT-OSS-Safeguard generates full chain-of-thought outputs that developers can review to understand how the model reached its classification decisions. This transparency differs fundamentally from traditional “black box” classifiers that only provide labels and confidence scores without explanation. The reasoning chains cite specific policy rules, surface borderline cases requiring human judgment, and explain contextual factors that influenced the decision.
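Since the reasoning is plain text, it can be post-processed into something a reviewer can scan quickly. This sketch assumes a hypothetical convention in which each rule in your policy carries an ID such as `HR1`, and that the reasoning and the final answer reach you as separate strings; `summarize_decision` and its crude "borderline" keyword check are illustrative, not a documented output format.

```python
import re
from typing import Dict, List

# Hypothetical convention: rule IDs like "HR1", "HR2" appear in the policy text,
# so the model can cite them by name in its reasoning.
RULE_ID = re.compile(r"\b[A-Z]{2}\d+\b")


def summarize_decision(reasoning: str, final_answer: str) -> Dict[str, object]:
    """Collect the rule IDs cited in the reasoning alongside the final label."""
    cited: List[str] = []
    for rule in RULE_ID.findall(reasoning):
        if rule not in cited:  # keep first-mention order, drop duplicates
            cited.append(rule)
    return {
        "label": final_answer.strip(),
        "cited_rules": cited,
        "needs_review": "borderline" in reasoning.lower(),  # crude ambiguity flag
    }


example = summarize_decision(
    reasoning="The post insults a player (HR1) but it is banter between friends, "
              "which HR3 exempts. This is borderline; HR1 still applies.",
    final_answer="1",
)
```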

Inference-Time Policy Interpretation

The models evaluate content against policies provided in each API call rather than relying on training-time knowledge. This design allows compliance teams to update safety definitions immediately in response to new regulations, platform changes, or evolving threat patterns. OpenAI internally uses this approach because it dramatically reduces the engineering overhead of maintaining separate classifiers for different policy contexts.

GPT-OSS-Safeguard vs Traditional Content Moderation

| Feature | GPT-OSS-Safeguard | Traditional Classifiers |
|---|---|---|
| Policy Customization | Write custom policies in natural language; change them anytime | Fixed categories; changes require retraining |
| Transparency | Full chain-of-thought reasoning visible | Black-box scores only |
| Latency and Cost | Higher latency and compute cost per classification | Lower latency; faster inference |
| Accuracy on Niche Tasks | Strong with well-written policies | Better with thousands of training examples |
| License | Apache 2.0; fully open-weight | Often proprietary or restrictive |
| Context Understanding | Handles nuance and borderline cases | Limited to training distribution |

Getting Started with GPT-OSS-Safeguard

Both models are available for download from Hugging Face and can be integrated into existing Trust & Safety infrastructure. OpenAI provides implementation examples through its official Cookbook guide and supports the models through its Responses API.

System Requirements

The GPT-OSS-Safeguard-20b model requires at least 12 GB of RAM for basic operation, while the 120b variant may need up to 65 GB depending on batch size and optimization settings. Both models support tool use, adjustable reasoning effort (low, medium, high), and Structured Outputs for integration with downstream systems. LM Studio and Ollama simplify running the models on your own hardware, and cloud hosting is an alternative for teams that prefer not to manage local infrastructure.

Download and Installation

Developers can access the models through Hugging Face repositories at openai/gpt-oss-safeguard-120b and openai/gpt-oss-safeguard-20b. The models are distributed in GGUF and MLX quantized formats to balance performance with resource requirements. For local testing, Ollama provides a simple command-line interface: ollama run gpt-oss-safeguard:20b or ollama run gpt-oss-safeguard:120b.
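For a quick local test, the following sketch sends a policy and a piece of content to a running Ollama server using only the Python standard library. It assumes Ollama's default `/api/chat` endpoint on port 11434 and the `gpt-oss-safeguard:20b` tag from the command above; placing the policy in the system message is an assumption about prompt layout, and the `thinking` field may be missing depending on your Ollama version.

```python
import json
import urllib.request
from typing import Dict

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint


def build_request(model: str, policy: str, content: str) -> urllib.request.Request:
    """Build a non-streaming chat request that carries the policy and the content."""
    payload = {
        "model": model,
        "messages": [
            {"role": "system", "content": policy},  # assumed: policy as system message
            {"role": "user", "content": content},
        ],
        "stream": False,  # return a single JSON object instead of a stream
    }
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def classify(model: str, policy: str, content: str) -> Dict[str, str]:
    """Send the request to a running Ollama server and return answer plus reasoning."""
    with urllib.request.urlopen(build_request(model, policy, content)) as resp:
        message = json.loads(resp.read())["message"]
    # "thinking" may be absent depending on the Ollama version and model settings.
    return {"answer": message.get("content", ""), "reasoning": message.get("thinking", "")}
```

With the model pulled, `classify("gpt-oss-safeguard:20b", policy_text, "some user message")` returns the answer and, when available, the reasoning.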

Basic Implementation Example

Quick Answer: Send your policy document and content to classify through OpenAI’s Responses API; the model returns a classification decision with full reasoning explanation.

Implementation follows a three-step pattern: define your policy in clear natural language, structure the input with policy plus content to evaluate, then parse the model’s reasoning output and classification label. The ROOST and OpenAI cookbook provides detailed examples of policy prompt engineering, optimal policy length selection, and production integration patterns for Trust & Safety systems.
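The three steps can be sketched in Python as below. The JSON output schema in the policy is a hypothetical format you would choose yourself, and `fake_model` stands in for a real inference call (for example, a local server) so the parsing step can be exercised offline.

```python
import json
import re
from dataclasses import dataclass
from typing import Callable, Dict, List

# Step 1: a policy that ends with an explicit output format (hypothetical schema).
SPAM_POLICY = """\
Decide whether the message is spam.
VIOLATES: unsolicited bulk promotion, phishing links, or repeated identical ads.
SAFE: personal messages, even if they mention a product once.
Respond with JSON only: {"violation": 0 or 1, "rationale": "<one sentence>"}"""


@dataclass
class Verdict:
    violation: bool
    rationale: str


def classify_with_policy(content: str,
                         call_model: Callable[[List[Dict[str, str]]], str]) -> Verdict:
    # Step 2: send the policy and the content together.
    messages = [{"role": "system", "content": SPAM_POLICY},
                {"role": "user", "content": content}]
    raw = call_model(messages)
    # Step 3: take the span from the first "{" to the last "}" and parse it as JSON.
    match = re.search(r"\{.*\}", raw, re.DOTALL)
    if match is None:
        raise ValueError(f"no JSON object in model output: {raw!r}")
    data = json.loads(match.group(0))
    return Verdict(violation=bool(int(data["violation"])),
                   rationale=str(data.get("rationale", "")))


# Stand-in for a real model call, so the parsing path can be exercised offline.
def fake_model(messages: List[Dict[str, str]]) -> str:
    return 'Answer: {"violation": 1, "rationale": "Bulk ad with a phishing link."}'


verdict = classify_with_policy("WIN $$$ click http://example.test now!!!", fake_model)
```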

Real-World Use Cases

GPT-OSS-Safeguard fits into existing Trust & Safety infrastructure where nuanced policy interpretation matters more than pure speed. OpenAI recommends using small, high-recall classifiers to pre-filter content before sending domain-relevant items to GPT-OSS-Safeguard for detailed evaluation.
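A cascade like the one OpenAI describes can be sketched as follows. The keyword pattern is a hypothetical stand-in for whatever small, high-recall classifier you already run, and `safeguard` is any callable that submits a post to GPT-OSS-Safeguard with your policy and returns a boolean.

```python
import re
from typing import Callable, Iterable, List, Tuple

# Hypothetical high-recall pre-filter for a cheating policy: cheap, broad patterns
# that should catch nearly every relevant post, at the cost of many false positives.
CHEATING_HINTS = re.compile(r"\b(aimbot|wallhack|hack|cheat|exploit|injector)s?\b", re.IGNORECASE)


def prefilter(text: str) -> bool:
    """Return True if the text might be in scope for the detailed policy."""
    return CHEATING_HINTS.search(text) is not None


def moderate(posts: Iterable[str], safeguard: Callable[[str], bool]) -> List[Tuple[str, bool]]:
    """Only posts that pass the cheap filter are sent to the expensive reasoning model."""
    results = []
    for post in posts:
        if prefilter(post):
            results.append((post, safeguard(post)))  # detailed policy evaluation
        else:
            results.append((post, False))  # out of scope: no model call
    return results
```

Posts that never match the pre-filter cost only a regex check; just the in-scope fraction incurs the reasoning model's latency.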

Automated Content Classification

Use the models to label posts, messages, or media metadata for policy violations with contextual decision-making that considers author intent, community norms, and edge cases. The models integrate with real-time ingestion pipelines, review queues, moderation consoles, and downranking systems. A social platform could classify borderline harassment that keyword filters miss by analyzing full conversation context and relationship dynamics.
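Once a decision comes back, it still has to be routed to the right downstream system. This sketch assumes your policy asks the model for a severity level and a borderline flag in addition to the violation label; those fields, the `route` function, and the action names are hypothetical choices for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    DOWNRANK = "downrank"
    HUMAN_REVIEW = "human_review"
    REMOVE = "remove"


@dataclass
class Classification:
    violation: bool
    severity: str     # hypothetical field: "low", "medium", or "high", defined by your policy
    borderline: bool  # hypothetical flag set when the reasoning marks the case as ambiguous


def route(c: Classification) -> Action:
    """Map a policy decision onto review queues, downranking, or removal."""
    if c.borderline:
        return Action.HUMAN_REVIEW  # ambiguous cases go to moderators first
    if not c.violation:
        return Action.ALLOW
    if c.severity == "high":
        return Action.REMOVE
    return Action.DOWNRANK  # lower-severity violations are demoted, not deleted
```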

Trust & Safety Assistant Workflows

The chain-of-thought reasoning makes GPT-OSS-Safeguard well suited to automated triage that reduces the cognitive load on human moderators. Unlike traditional classifiers that only provide labels, these models act as reasoning agents that evaluate content, explain decisions, cite specific policy rules, and surface cases requiring human judgment. This transparency increases trust in automated decisions and helps moderators focus on genuinely ambiguous cases.

Policy Testing and Experimentation

Before rolling out new or revised safety policies, teams can run them through GPT-OSS-Safeguard to simulate how content will be labeled. This workflow identifies overly broad definitions, unclear examples, and borderline cases before they affect users. The bring-your-own-policy design allows A/B testing of alternative policy formulations directly in production without model retraining, enabling data-driven policy optimization.
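A pre-launch policy comparison can be as simple as scoring each version against a small human-labeled sample. In this sketch, `classify(policy, content)` is a hypothetical wrapper around a GPT-OSS-Safeguard call, and precision and recall are the standard metrics, not anything specific to the model.

```python
from typing import Callable, Dict, List, Tuple

Sample = Tuple[str, bool]  # (content, True if a human reviewer says it violates)


def evaluate(policy: str, samples: List[Sample],
             classify: Callable[[str, str], bool]) -> Dict[str, float]:
    """Score one policy version against human labels."""
    tp = fp = fn = 0
    for content, truth in samples:
        predicted = classify(policy, content)
        tp += int(predicted and truth)
        fp += int(predicted and not truth)
        fn += int(truth and not predicted)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"precision": precision, "recall": recall}


def compare(policy_a: str, policy_b: str, samples: List[Sample],
            classify: Callable[[str, str], bool]) -> Dict[str, Dict[str, float]]:
    """Run both policy versions over the same sample before either reaches users."""
    return {"A": evaluate(policy_a, samples, classify),
            "B": evaluate(policy_b, samples, classify)}
```

A drop in precision for the new version, for example, points to a definition that has become overly broad.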

Writing Effective Policies for GPT-OSS-Safeguard

Well-crafted policies unlock the models’ reasoning capabilities to handle nuanced content, explain borderline decisions, and adapt to contextual factors. The ROOST and OpenAI cookbook provides comprehensive guidance on policy prompt engineering that maximizes classification accuracy.

Policy Structure Best Practices

Define your taxonomy clearly with specific examples of violations and edge cases. Include contextual rules that specify when platform dynamics, user relationships, or community norms should influence decisions. Use concrete language rather than vague terms: instead of “inappropriate content,” specify “sexually explicit imagery depicting minors” or “graphic violence intended to shock.” Provide threshold guidance for borderline cases and explain how to weigh competing policy considerations.
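Keeping the policy in a structured form makes these practices easier to enforce. The section layout below (definition, violating and non-violating behaviors, borderline guidance, examples) is a hypothetical template that follows the advice above, not a format the model requires.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Policy:
    name: str
    definition: str
    violates: List[str]  # concrete behaviors that count as violations
    allowed: List[str]   # nearby behaviors that do not
    borderline_guidance: str
    examples: List[Tuple[str, int]] = field(default_factory=list)  # (content, label)

    def render(self) -> str:
        """Lay the policy out in labeled sections (hypothetical template)."""
        lines = [f"# {self.name}", "", "## Definition", self.definition, "",
                 "## Violates (label 1)"]
        lines += [f"- {item}" for item in self.violates]
        lines += ["", "## Does not violate (label 0)"]
        lines += [f"- {item}" for item in self.allowed]
        lines += ["", "## Borderline cases", self.borderline_guidance, "", "## Examples"]
        lines += [f'- "{text}" -> {label}' for text, label in self.examples]
        return "\n".join(lines)


harassment = Policy(
    name="Harassment",
    definition="Content that targets a specific person with abuse or intimidation.",
    violates=["Threats of violence against a named user", "Repeated insults aimed at one person"],
    allowed=["Criticism of ideas or of public figures' actions", "Banter both sides clearly join"],
    borderline_guidance="If intent is unclear, label 0 unless the target has objected.",
    examples=[("I know where you live, watch out", 1), ("your argument makes no sense", 0)],
)
text = harassment.render()
```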

Common Pitfalls to Avoid

Overly brief policies without sufficient examples lead to inconsistent classifications across similar content. Extremely long policies can exceed context windows or dilute important rules among less critical guidelines. Avoid circular definitions where policy terms reference other undefined concepts, and ensure examples genuinely represent the boundaries you care about rather than obvious violations. Test policies against diverse content samples before production deployment to catch unforeseen edge cases.
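Some of these pitfalls can be caught mechanically before a policy ever reaches the model. The checks, the vague-term list, and the default thresholds in this sketch are illustrative assumptions; tune them to your own policies and context budget.

```python
import re
from typing import List

# Hypothetical list of vague terms worth replacing with concrete definitions.
VAGUE_TERMS = ("inappropriate", "offensive", "bad content", "harmful stuff")


def lint_policy(policy: str, max_words: int = 1500, min_examples: int = 2) -> List[str]:
    """Flag common policy-writing pitfalls; thresholds are illustrative defaults."""
    warnings = []
    words = len(policy.split())
    if words > max_words:
        warnings.append(f"policy is {words} words; long policies can dilute key rules")
    # Count lines that start with "Example" (case-insensitive).
    examples = len(re.findall(r"^\s*example", policy, re.IGNORECASE | re.MULTILINE))
    if examples < min_examples:
        warnings.append(f"only {examples} example(s); add cases near the boundary")
    lowered = policy.lower()
    for term in VAGUE_TERMS:
        if term in lowered:
            warnings.append(f"vague term {term!r}; define it concretely")
    return warnings
```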

Limitations and Considerations

GPT-OSS-Safeguard models are released as a research preview, with OpenAI actively seeking feedback from researchers and safety community members. These models were trained without additional biological or cybersecurity data, so previous risk assessments from the GPT-OSS release apply to these fine-tuned variants.

Compute Requirements

The models are more time and compute intensive than traditional classifiers, making them unsuitable for classifying every single piece of content at scale. OpenAI’s internal systems use lightweight, high-recall filters to identify domain-relevant content before invoking GPT-OSS-Safeguard for detailed policy evaluation. Consider pre-filtering to control costs while maintaining safety coverage.

When to Use Pre-Filtering

Traditional classifiers trained on thousands of examples will likely outperform GPT-OSS-Safeguard on well-defined, high-volume tasks where speed matters more than policy flexibility. Reserve these reasoning models for nuanced cases where context, community norms, or subtle policy interpretation determines the correct classification. Hybrid architectures that combine fast pre-filters with reasoning-based final decisions deliver optimal cost-performance tradeoffs.
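One common shape for such a hybrid is confidence-band routing: a traditional classifier settles the clear cases and only the uncertain middle band is escalated. The `fast_score` and `safeguard` callables and the 0.1/0.9 thresholds are hypothetical placeholders.

```python
from typing import Callable


def hybrid_decision(text: str,
                    fast_score: Callable[[str], float],
                    safeguard: Callable[[str], bool],
                    low: float = 0.1, high: float = 0.9) -> bool:
    """Let a cheap classifier settle clear cases; escalate the uncertain middle band."""
    score = fast_score(text)  # assumed: probability of violation from a traditional classifier
    if score <= low:
        return False  # confidently safe: no reasoning-model call
    if score >= high:
        return True   # confidently violating
    return safeguard(text)  # nuanced case: apply the written policy with reasoning
```

Widening the band sends more traffic to GPT-OSS-Safeguard, trading cost for the nuance the reasoning model provides.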

Research Preview Status

The models are currently in research preview, meaning capabilities, API interfaces, and recommended practices may evolve based on community feedback. OpenAI plans to continuously upgrade the underlying technology, so custom policies relying on specific model behaviors may require recalibration over time. Monitor OpenAI’s safety evaluations hub for updated benchmarks and safety assessments as the models mature.

Comparison Table: GPT-OSS-Safeguard Model Variants

| Specification | GPT-OSS-Safeguard-120b | GPT-OSS-Safeguard-20b |
|---|---|---|
| Parameters | 117B total, 5.1B active | 21B total, 3.6B active |
| RAM Requirements | Up to 65 GB | Minimum 12 GB |
| Accuracy | Higher; handles more nuanced cases | Strong performance on most tasks |
| Inference Speed | Slower; more compute intensive | Faster; optimized for efficiency |
| Best For | Complex policy interpretation; edge cases | Balanced accuracy and speed |
| GPU Compatibility | Single 80 GB GPU (e.g., H100) or cloud deployment | Fits 16 GB VRAM GPUs |
| License | Apache 2.0 | Apache 2.0 |

Frequently Asked Questions (FAQs)

How do I download GPT-OSS-Safeguard models?

Both GPT-OSS-Safeguard-120b and GPT-OSS-Safeguard-20b are available on Hugging Face at openai/gpt-oss-safeguard-120b and openai/gpt-oss-safeguard-20b. The models are distributed under the Apache 2.0 license in GGUF and MLX formats. You can also run them locally with Ollama using commands like ollama run gpt-oss-safeguard:20b.

Can GPT-OSS-Safeguard replace human moderators?

No. GPT-OSS-Safeguard functions as a Trust & Safety assistant that reduces cognitive load and handles routine classifications, but it surfaces ambiguous cases requiring human judgment. The chain-of-thought reasoning helps moderators understand automated decisions and focus on genuinely complex content that needs human empathy and cultural context.

What is the difference between 120b and 20b models?

The 120b-parameter model provides higher accuracy and more nuanced policy interpretation but requires up to 65 GB of RAM. The 20b variant offers faster inference with lower compute requirements (a minimum of 12 GB of RAM) while maintaining strong performance on most safety classification tasks. Choose based on your accuracy needs and infrastructure constraints.

How do I write effective policies for GPT-OSS-Safeguard?

Write clear taxonomies with specific violation examples and edge cases. Include contextual rules about when community norms or user relationships should influence decisions. Use concrete language over vague terms, provide threshold guidance for borderline cases, and test against diverse content samples. The ROOST and OpenAI cookbook offers detailed policy engineering guidance.

Is GPT-OSS-Safeguard suitable for real-time moderation?

GPT-OSS-Safeguard has higher latency than traditional classifiers due to its reasoning process. OpenAI recommends using fast, high-recall pre-filters to identify domain-relevant content, then sending only flagged items to GPT-OSS-Safeguard for detailed evaluation. This hybrid approach balances safety coverage with acceptable response times.

What license governs GPT-OSS-Safeguard usage?

Both models are released under the permissive Apache 2.0 license, allowing anyone to freely use, modify, and deploy them commercially or non-commercially. This open-weight approach provides transparency and control over safety infrastructure without licensing restrictions common in proprietary moderation tools.


What is GPT-OSS-Safeguard?

GPT-OSS-Safeguard is OpenAI’s open-weight reasoning model for safety classification. It interprets custom safety policies provided at inference time to classify content, then explains decisions through transparent chain-of-thought reasoning. It is available in 120b and 20b parameter sizes under the Apache 2.0 license.

How does GPT-OSS-Safeguard differ from traditional content moderation?

Unlike traditional classifiers with fixed categories, GPT-OSS-Safeguard uses a “bring-your-own-policy” approach where developers write safety rules in natural language. The model interprets these policies at runtime and provides full reasoning chains explaining each decision, eliminating the need for retraining when policies change.

What are GPT-OSS-Safeguard system requirements?

The 20b model requires a minimum of 12 GB of RAM, while the 120b version needs up to 65 GB. Both models support GGUF and MLX formats and can run locally through LM Studio or Ollama, or via cloud deployment. Both are available as free downloads from Hugging Face under the Apache 2.0 license.

When should you use GPT-OSS-Safeguard vs traditional classifiers?

Use GPT-OSS-Safeguard for nuanced policy interpretation, borderline cases, and situations requiring explanation transparency. Use traditional classifiers for high-volume, well-defined tasks where speed matters more than flexibility. OpenAI recommends hybrid architectures with pre-filtering before GPT-OSS-Safeguard evaluation.

What is bring-your-own-policy AI moderation?

Bring-your-own-policy moderation means developers provide safety rules as natural language policies during inference rather than training them into the model. This allows instant policy updates, A/B testing of definitions, and custom rules for different regulatory contexts, all without retraining.