Key Takeaways

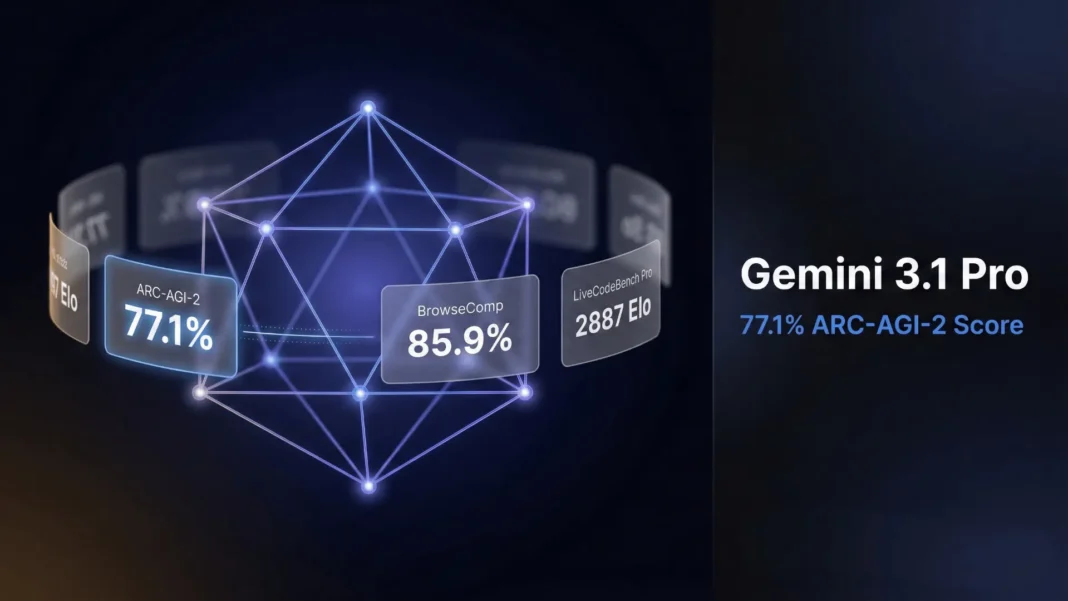

- Gemini 3.1 Pro scores 77.1% on ARC-AGI-2, more than double the 31.1% scored by Gemini 3 Pro

- The model leads Claude Opus 4.6 (68.8%) and GPT-5.2 (52.9%) on ARC-AGI-2, and wins 10 of the 13 benchmarks where both it and Opus 4.6 have published scores

- Available now in preview across 9 platforms including Gemini API, Vertex AI, AI Studio, and NotebookLM

- Free users can access Gemini 3.1 Pro in the Gemini app; Pro and Ultra plans unlock higher limits and NotebookLM access

Google upgraded the reasoning engine behind its entire AI ecosystem, and the performance jump is not incremental. Gemini 3.1 Pro went from 31.1% to 77.1% on ARC-AGI-2, marking one of the largest single-generation reasoning improvements documented on that benchmark from any major AI lab. This article breaks down what that score actually measures, how the model performs across 15 benchmarks, and which developers and teams stand to gain the most.

What ARC-AGI-2 Actually Measures

ARC-AGI-2 is not a knowledge recall test. It evaluates a model’s ability to identify abstract visual patterns and apply them to entirely new logic problems it has never encountered before.

A score of 77.1% means Gemini 3.1 Pro solved more than three-quarters of these novel abstract challenges, placing it ahead of every other model in the comparison below; the next-highest score is Claude Opus 4.6 at 68.8%. The previous Gemini 3 Pro scored 31.1% on the same test, a 46-percentage-point gap in a single generation.
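To make the idea of "infer a pattern, then apply it to a new input" concrete, here is a toy sketch in Python. It is only an illustration: the grids, the three candidate transformations, and the function names are all hypothetical, and real ARC-AGI-2 tasks are far harder and do not come with a fixed menu of rules to choose from.

```python
from typing import Callable, Optional

Grid = list[list[int]]

def flip_horizontal(grid: Grid) -> Grid:
    """Mirror each row left to right."""
    return [row[::-1] for row in grid]

def flip_vertical(grid: Grid) -> Grid:
    """Reverse the order of the rows."""
    return grid[::-1]

def transpose(grid: Grid) -> Grid:
    """Swap rows and columns."""
    return [list(col) for col in zip(*grid)]

# ASSUMPTION: a tiny, hand-picked rule set used only for this illustration.
CANDIDATES: dict[str, Callable[[Grid], Grid]] = {
    "flip_horizontal": flip_horizontal,
    "flip_vertical": flip_vertical,
    "transpose": transpose,
}

def infer_rule(train_pairs: list[tuple[Grid, Grid]]) -> Optional[str]:
    """Return the first candidate rule consistent with every demonstration pair."""
    for name, rule in CANDIDATES.items():
        if all(rule(inp) == out for inp, out in train_pairs):
            return name
    return None

def solve(train_pairs: list[tuple[Grid, Grid]], test_input: Grid) -> Optional[Grid]:
    """Apply the inferred rule to an unseen grid, or return None if no rule fits."""
    name = infer_rule(train_pairs)
    return None if name is None else CANDIDATES[name](test_input)

# Two demonstration pairs (input, output) and one unseen test input.
train = [
    ([[1, 0], [2, 0]], [[0, 1], [0, 2]]),
    ([[3, 3, 0], [0, 4, 0]], [[0, 3, 3], [0, 4, 0]]),
]
test_input = [[5, 0, 0], [0, 6, 0]]

print(infer_rule(train))         # flip_horizontal
print(solve(train, test_input))  # [[0, 0, 5], [0, 6, 0]]
```

The point of the sketch is the shape of the problem: the solver never sees the test grid during "training" and must generalize from a handful of examples, which is why the benchmark cannot be passed by recalling memorized facts.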

Full Benchmark Performance

Reasoning improvements alone do not define a production-ready model. Here is how Gemini 3.1 Pro stacks up across all 15 benchmarks from the official model card:

| Benchmark | Gemini 3.1 Pro | Gemini 3 Pro | Claude Sonnet 4.6 | Claude Opus 4.6 | GPT-5.2 |
|---|---|---|---|---|---|
| Humanity’s Last Exam (no tools) | 44.4% | 37.5% | 33.2% | 40.0% | 34.5% |
| HLE (Search+Code) | 51.4% | 45.8% | 49.0% | 53.1% | 45.5% |
| ARC-AGI-2 (verified) | 77.1% | 31.1% | 58.3% | 68.8% | 52.9% |
| GPQA Diamond | 94.3% | 91.9% | 89.9% | 91.3% | 92.4% |
| Terminal-Bench 2.0 | 68.5% | 56.9% | 59.1% | 65.4% | 54.0% |
| SWE-Bench Verified | 80.6% | 76.2% | 79.6% | 80.8% | 80.0% |
| SWE-Bench Pro | 54.2% | 43.3% | N/A | N/A | 55.6% |
| LiveCodeBench Pro (Elo) | 2887 | 2439 | N/A | N/A | 2393 |
| SciCode | 59% | 56% | 47% | 52% | 52% |
| APEX-Agents | 33.5% | 18.4% | N/A | 29.8% | 23.0% |
| τ2-bench Retail | 90.8% | 85.3% | 91.7% | 91.9% | 82.0% |
| MCP Atlas | 69.2% | 54.1% | 61.3% | 59.5% | 60.6% |
| BrowseComp | 85.9% | 59.2% | 74.7% | 84.0% | 65.8% |
| MMMU-Pro | 80.5% | 81.0% | 74.5% | 73.9% | 79.5% |
| MMMLU | 92.6% | 91.8% | 89.3% | 91.1% | 89.6% |

Gemini 3.1 Pro leads on 10 of the 13 benchmarks where Claude Opus 4.6 also has a published score. Claude Opus 4.6 holds narrow advantages on HLE with tools (53.1% vs 51.4%), SWE-Bench Verified (80.8% vs 80.6%), and τ2-bench Retail (91.9% vs 90.8%). GPT-5.2 leads Gemini 3.1 Pro only on SWE-Bench Pro (55.6% vs 54.2%).
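The head-to-head counts can be checked directly from the table. The following Python sketch copies the Gemini 3.1 Pro, Claude Opus 4.6, and GPT-5.2 columns and tallies wins; the data layout and the function name `head_to_head` are choices made for this illustration, and benchmarks marked N/A for a rival are skipped as not comparable.

```python
from typing import Optional

# (Gemini 3.1 Pro, Claude Opus 4.6, GPT-5.2); None marks an N/A entry in the table.
SCORES: dict[str, tuple[float, Optional[float], Optional[float]]] = {
    "Humanity's Last Exam (no tools)": (44.4, 40.0, 34.5),
    "HLE (Search+Code)": (51.4, 53.1, 45.5),
    "ARC-AGI-2 (verified)": (77.1, 68.8, 52.9),
    "GPQA Diamond": (94.3, 91.3, 92.4),
    "Terminal-Bench 2.0": (68.5, 65.4, 54.0),
    "SWE-Bench Verified": (80.6, 80.8, 80.0),
    "SWE-Bench Pro": (54.2, None, 55.6),
    "LiveCodeBench Pro (Elo)": (2887, None, 2393),
    "SciCode": (59, 52, 52),
    "APEX-Agents": (33.5, 29.8, 23.0),
    "τ2-bench Retail": (90.8, 91.9, 82.0),
    "MCP Atlas": (69.2, 59.5, 60.6),
    "BrowseComp": (85.9, 84.0, 65.8),
    "MMMU-Pro": (80.5, 73.9, 79.5),
    "MMMLU": (92.6, 91.1, 89.6),
}

OPUS, GPT = 1, 2  # column indexes into each score tuple

def head_to_head(rival: int) -> tuple[int, int, list[str]]:
    """Return (Gemini wins, comparable benchmarks, benchmarks the rival leads)."""
    wins, comparable, rival_leads = 0, 0, []
    for name, row in SCORES.items():
        gemini_score, rival_score = row[0], row[rival]
        if rival_score is None:
            continue  # no published score for the rival, so not comparable
        comparable += 1
        if gemini_score > rival_score:
            wins += 1
        elif rival_score > gemini_score:
            rival_leads.append(name)
    return wins, comparable, rival_leads

print(head_to_head(OPUS))
# (10, 13, ['HLE (Search+Code)', 'SWE-Bench Verified', 'τ2-bench Retail'])
print(head_to_head(GPT))
# (14, 15, ['SWE-Bench Pro'])
```

A raw win count treats a 0.2-point edge the same as a 46-point one, so it is best read alongside the margins rather than instead of them.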

Generational Gains vs Gemini 3 Pro

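The jump from Gemini 3 Pro (released November 2025) to Gemini 3.1 Pro is largest on agentic, coding, and reasoning benchmarks. MMMU-Pro is the one benchmark in the full table where 3.1 Pro trails its predecessor (80.5% vs 81.0%). The biggest gains are: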

| Benchmark | Gemini 3 Pro | Gemini 3.1 Pro | Gain |
|---|---|---|---|
| ARC-AGI-2 | 31.1% | 77.1% | +46.0 pts (+148%) |
| BrowseComp | 59.2% | 85.9% | +26.7 pts |
| MCP Atlas | 54.1% | 69.2% | +15.1 pts |
| APEX-Agents | 18.4% | 33.5% | +15.1 pts (+82%) |
| Terminal-Bench 2.0 | 56.9% | 68.5% | +11.6 pts |
| SWE-Bench Pro | 43.3% | 54.2% | +10.9 pts |
| LiveCodeBench Pro (Elo) | 2439 | 2887 | +448 Elo |

The BrowseComp improvement of 26.7 points and the APEX-Agents gain of 15.1 points suggest Google invested specifically in agentic capabilities alongside core reasoning. This is not a model that only answers questions better. It is built to complete multi-step workflows more reliably.
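The gain column mixes two measures: absolute percentage points and relative percent change. A short Python sketch shows how each is derived from the scores in the table; the helper name `gain` and the rounding choices are assumptions made for this example.

```python
# (Gemini 3 Pro, Gemini 3.1 Pro) scores copied from the table above.
PAIRS: dict[str, tuple[float, float]] = {
    "ARC-AGI-2": (31.1, 77.1),
    "BrowseComp": (59.2, 85.9),
    "MCP Atlas": (54.1, 69.2),
    "APEX-Agents": (18.4, 33.5),
    "Terminal-Bench 2.0": (56.9, 68.5),
    "SWE-Bench Pro": (43.3, 54.2),
}

def gain(old: float, new: float) -> tuple[float, int]:
    """Return (percentage-point gain, relative gain in percent)."""
    points = round(new - old, 1)                # absolute difference in points
    relative = round((new - old) / old * 100)   # change relative to the old score
    return points, relative

for name, (old, new) in PAIRS.items():
    points, relative = gain(old, new)
    print(f"{name}: +{points} pts (+{relative}%)")

# First and fourth lines of output:
# ARC-AGI-2: +46.0 pts (+148%)
# APEX-Agents: +15.1 pts (+82%)
```

The two measures can rank benchmarks differently: MCP Atlas and APEX-Agents both gained 15.1 points, but APEX-Agents started from a much lower base, so its relative improvement is far larger.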

Real-World Capabilities That Make a Difference

Google’s own testing demonstrated four concrete applications where 3.1 Pro’s reasoning improvements produce visible output quality gains:

- Code-based animation: Generates website-ready animated SVGs directly from text prompts, built in pure code rather than pixels, remaining crisp at any resolution with minimal file size

- Complex system synthesis: Configured a live telemetry stream to visualize the International Space Station’s orbit as a real-time aerospace dashboard

- Interactive 3D design: Coded a functional 3D starling murmuration with hand-tracking user controls and a generative audio score that adapts to flock movement

- Literary-to-interface translation: When prompted to build a portfolio for Emily Brontë’s Wuthering Heights, the model reasoned through the novel’s atmospheric tone to design a coherent contemporary interface

These examples point to a consistent pattern: Gemini 3.1 Pro reasons about context before producing output, rather than generating surface-level responses.

Agentic Workflow Capabilities

Google explicitly positions 3.1 Pro as a step forward for agentic applications, and three benchmarks directly support that claim. The 85.9% BrowseComp score means the model can browse the web, synthesize multi-page information, and return accurate structured answers better than any competitor currently benchmarked.

The MCP Atlas score of 69.2% versus Claude Opus 4.6’s 59.5% indicates 3.1 Pro handles tool-using agentic workflows with measurably better task completion rates. APEX-Agents at 33.5% versus GPT-5.2’s 23.0% reinforces the same conclusion for multi-step autonomous task execution.

The model is integrated into Google Antigravity (Google’s agentic development platform) and Android Studio, positioning it as a primary backbone for AI application development.

Technical Specifications

Gemini 3.1 Pro ships with a 1 million token context window, capable of processing entire codebases, lengthy documents, or extended video in a single pass. Output capacity reaches 64,000 tokens, enabling complete applications, comprehensive reports, or detailed analyses without truncation.
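As a rough planning aid, the two limits above can be turned into a pre-flight check. This Python sketch is not based on any Google SDK: the function names are hypothetical, and the four-characters-per-token ratio is a crude assumption standing in for a real tokenizer, so treat the result as an estimate only.

```python
INPUT_LIMIT = 1_000_000  # input context window stated in the article
OUTPUT_LIMIT = 64_000    # maximum output tokens stated in the article
CHARS_PER_TOKEN = 4      # ASSUMPTION: rough heuristic, not a real tokenizer

def estimate_tokens(text: str) -> int:
    """Estimate the token count of a string using ceiling division."""
    return -(-len(text) // CHARS_PER_TOKEN)

def fits_in_one_pass(documents: list[str],
                     requested_output_tokens: int = OUTPUT_LIMIT) -> bool:
    """Check whether the estimated input and requested output stay within limits."""
    input_tokens = sum(estimate_tokens(doc) for doc in documents)
    return (input_tokens <= INPUT_LIMIT
            and requested_output_tokens <= OUTPUT_LIMIT)

# Hypothetical example: three source files of about 400,000 characters each.
codebase = ["x" * 400_000] * 3
print(sum(estimate_tokens(doc) for doc in codebase))  # 300000
print(fits_in_one_pass(codebase))                     # True
```

If the check fails, the input needs to be split or trimmed before sending; for real budgeting, an actual token count from the provider should replace the character heuristic.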

The model is natively multimodal, treating text, audio, images, video, and code as first-class inputs within a unified system. This removes the need for separate model pipelines when handling diverse input formats in production environments.

Where Gemini 3.1 Pro Is Available

Access rolled out across nine surfaces starting February 19, 2026:

- Developers (preview): Gemini API via Google AI Studio, Gemini CLI, Google Antigravity, and Android Studio

- Enterprises (preview): Vertex AI and Gemini Enterprise

- Consumer users: Gemini app (free tier with standard limits; higher limits for Pro and Ultra plans) and NotebookLM (Pro and Ultra plans only)

The model is in preview as Google collects validation data before general availability. Google has stated general availability is coming “soon” without specifying a date.

Who Benefits Most from Gemini 3.1 Pro

Not every workflow requires the most powerful available model. Here is a practical breakdown based on verified benchmark performance:

Upgrade if you:

- Build AI agents that browse the web, use tools, or complete multi-step tasks (leads on BrowseComp, MCP Atlas, APEX-Agents)

- Need the strongest abstract reasoning available (77.1% ARC-AGI-2, highest among frontier models)

- Work with large codebases or need high-output generation (1M token context, 64K output, 2887 Elo on LiveCodeBench Pro)

- Do multimodal work combining text, audio, images, video, and code

Consider Claude Opus 4.6 if you:

- Rely heavily on tool-augmented reasoning (Opus leads HLE with Search+Code 53.1% vs 51.4%)

- Focus primarily on SWE-Bench Verified tasks (Opus leads by 0.2 points)

Consider GPT-5.2 if you:

- Need SWE-Bench Pro performance specifically (GPT-5.2 leads 55.6% vs 54.2%)

Limitations and Honest Considerations

Gemini 3.1 Pro is in preview, and beyond the 1 million token context window and 64,000 token output limit, Google has not published full specifications such as latency figures or complete rate limits.

The SWE-Bench Pro result (54.2% versus GPT-5.2’s 55.6%) and the SWE-Bench Verified result (80.6% versus Opus 4.6’s 80.8%) show that on real-world software engineering tasks Gemini 3.1 Pro is competitive rather than dominant. Teams whose production pipelines focus exclusively on software engineering should run direct comparisons on their own workloads before committing to a model switch. General availability timelines are unconfirmed, which matters for teams with deployment deadlines.

Frequently Asked Questions (FAQs)

What is Gemini 3.1 Pro’s ARC-AGI-2 score?

Gemini 3.1 Pro achieved a verified score of 77.1% on ARC-AGI-2. This is more than double the 31.1% scored by its predecessor Gemini 3 Pro, released November 2025. It is the highest ARC-AGI-2 score among all frontier models currently benchmarked, leading Claude Opus 4.6 at 68.8% and GPT-5.2 at 52.9%.

How does Gemini 3.1 Pro compare to Claude Opus 4.6?

Gemini 3.1 Pro leads Claude Opus 4.6 on 10 of 13 comparable benchmarks, with the widest gaps on MCP Atlas (69.2% vs 59.5%) and ARC-AGI-2 (77.1% vs 68.8%) and a narrower lead on BrowseComp (85.9% vs 84.0%). Claude Opus 4.6 holds narrow leads on HLE with Search+Code, SWE-Bench Verified, and τ2-bench Retail.

What context window does Gemini 3.1 Pro support?

Gemini 3.1 Pro supports a 1 million token input context window and generates up to 64,000 tokens of output per request. This enables processing of entire codebases, lengthy research documents, or extended video content in a single pass without needing to chunk inputs.

Is Gemini 3.1 Pro free to use?

Yes. Free users can access Gemini 3.1 Pro in the Gemini app with standard rate limits. Google AI Pro and Ultra subscribers receive higher limits and priority access. NotebookLM integration is available exclusively for Pro and Ultra plan users. API access via Vertex AI follows standard Google Cloud pricing.

Where can developers access Gemini 3.1 Pro today?

Developers can access Gemini 3.1 Pro in preview through the Gemini API in Google AI Studio, Gemini CLI, Google Antigravity, Android Studio, Vertex AI, and Gemini Enterprise. Preview status means the model is still being validated before general availability, which Google has described as “coming soon.”

What real-world tasks show the clearest improvement over Gemini 3 Pro?

The most significant improvements appear in agentic and reasoning tasks. BrowseComp improved by 26.7 points, APEX-Agents by 15.1 points, MCP Atlas by 15.1 points, and ARC-AGI-2 by 46 points. Creative coding tasks including animated SVG generation, live data dashboards, and 3D simulations also demonstrate the model’s improved ability to reason through context before generating output.

When will Gemini 3.1 Pro be generally available?

Google has stated that general availability is “coming soon” but has not published a specific date. The model launched in preview on February 19, 2026 across all supported platforms. Google typically uses preview periods to validate updates and refine capabilities before a full release.