Quick Brief

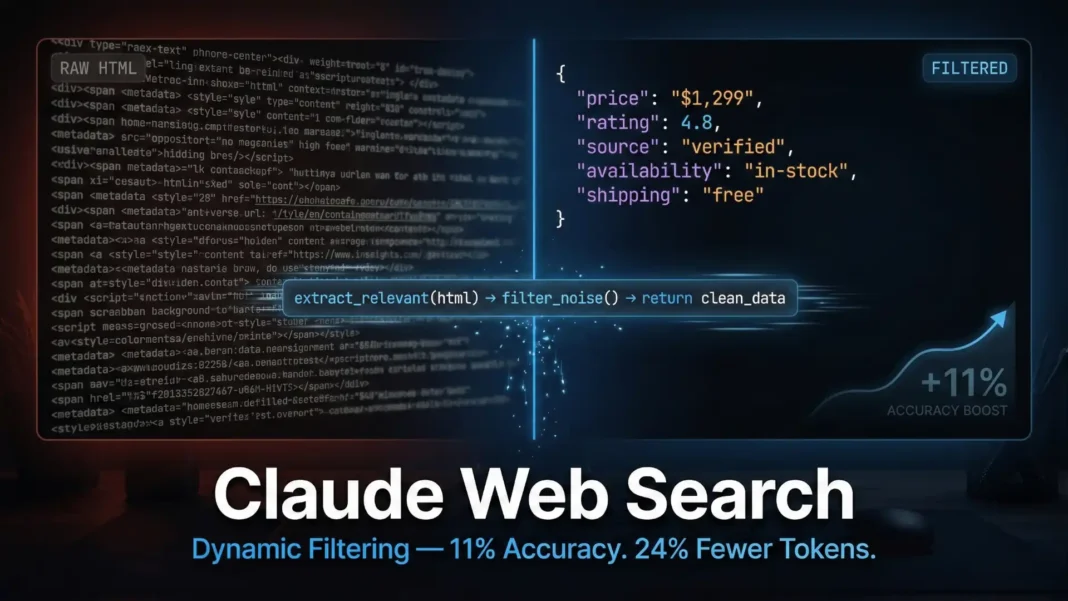

- Claude’s updated web search and web fetch tools let the model automatically write and execute code to filter results before loading them into context.

- Dynamic filtering improved search accuracy by an average of 11% across the BrowseComp and DeepsearchQA benchmarks while using 24% fewer input tokens.

- Claude Opus 4.6 reached 61.6% on BrowseComp and 77.3% F1 on DeepsearchQA with dynamic filtering enabled, up from 45.3% and 69.8% respectively.

- The feature is on by default for Sonnet 4.6 and Opus 4.6 via the Claude API when using the web_search_20260209 and web_fetch_20260209 tool versions.

Web search is one of the most token-intensive operations an AI agent performs, and much of that token spend goes to content the model never needs. Anthropic’s updated web search and web fetch tools for Claude address this at the source: the model writes and executes code to strip irrelevant content from results before it starts reasoning over them. The benchmark gains are specific and measurable, and they apply directly to developers building agentic workflows on the Claude API.

Why Web Search Consumes So Many Tokens

A typical agentic web search follows a linear path: query → fetch full HTML from multiple sites → load everything into context → reason over it. The problem is that most of what comes back (navigation menus, script tags, layout markup, advertisements) has nothing to do with the question. Anthropic states this plainly in its February 2026 blog post: “the context being pulled in from search is often irrelevant, which degrades the quality of the response.”

Dynamic filtering inserts a new step between fetching and reasoning. Claude writes and executes code to post-process query results, keeps only the relevant portions, and discards the rest before those results enter the context window. Anthropic notes they had already applied this technique successfully across other agentic workflows before extending it to web search and web fetch.
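Anthropic does not publish the code Claude writes during this step, so the following is only a hypothetical illustration of the kind of post-processing it describes. It assumes the fetched result is raw HTML and approximates "relevant" as sharing at least one query keyword; `visible_text` and `filter_relevant` are invented names, and the script uses only the Python standard library.

```python
from html.parser import HTMLParser
import re


class _TextExtractor(HTMLParser):
    """Collects visible text chunks, skipping script/style and page chrome."""

    SKIP = {"script", "style", "nav", "header", "footer"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0  # > 0 while inside a skipped element
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and self._skip_depth == 0:
            self.chunks.append(text)


def visible_text(html: str) -> list[str]:
    """Hypothetical helper: return text chunks outside boilerplate tags."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()  # flush any buffered text
    return parser.chunks


def filter_relevant(html: str, query: str, min_hits: int = 1) -> list[str]:
    """Hypothetical helper: keep only chunks sharing query keywords."""
    terms = {w for w in re.findall(r"[a-z0-9]+", query.lower()) if len(w) > 2}
    kept = []
    for chunk in visible_text(html):
        words = set(re.findall(r"[a-z0-9]+", chunk.lower()))
        if len(terms & words) >= min_hits:
            kept.append(chunk)
    return kept


page = """
<html><head><script>var ads = 1;</script></head>
<body><nav>Home | About | Contact</nav>
<p>AAPL trades at a P/E ratio of roughly 30.</p>
<p>Subscribe to our newsletter!</p></body></html>
"""
print(filter_relevant(page, "AAPL P/E ratio"))
# prints ['AAPL trades at a P/E ratio of roughly 30.']
```

Only the one matching sentence would reach the context window; the script, navigation, and newsletter text are dropped. Real filtering code is presumably task-specific (parsing tables, extracting numbers, cross-referencing sources) rather than a fixed keyword match like this.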

What is Claude’s web search dynamic filtering?

Claude’s dynamic filtering allows the model to automatically write and execute code to post-process web search and web fetch results before they reach the context window. Instead of loading full HTML into context, Claude filters results to retain only relevant content. Anthropic reports that this improved accuracy by an average of 11% and reduced input token usage by 24% in its benchmark evaluations.

Benchmark Results: BrowseComp and DeepsearchQA

Anthropic evaluated Sonnet 4.6 and Opus 4.6 with and without dynamic filtering, using only web search tools and no other tools in the evaluation environment. Two benchmarks were used:

BrowseComp tests whether an agent can navigate many websites to find a specific piece of information that is deliberately hard to find online. It was developed by OpenAI.

DeepsearchQA presents agents with research queries that have many correct answers, all of which must be found via web search. It tests systematic multi-step search planning and is measured by an F1 score that balances precision and recall. The benchmark paper is published by Google DeepMind.

| Model | Benchmark | Without Filtering | With Filtering | Gain |
|---|---|---|---|---|
| Sonnet 4.6 | BrowseComp | 33.3% | 46.6% | +13.3 pts |
| Opus 4.6 | BrowseComp | 45.3% | 61.6% | +16.3 pts |
| Sonnet 4.6 | DeepsearchQA F1 | 52.6% | 59.4% | +6.8 pts |
| Opus 4.6 | DeepsearchQA F1 | 69.8% | 77.3% | +7.5 pts |

Averaged across both benchmarks and both models, dynamic filtering improved accuracy by 11% while consuming 24% fewer input tokens.
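Anthropic does not spell out how the 11% average is computed. One reading that reproduces it is the unweighted mean of the four per-row gains in the table, measured in percentage points. The sketch below checks that arithmetic; treat the interpretation as an assumption rather than Anthropic's stated method.

```python
# Per-row results from the table: (without filtering, with filtering), in %.
results = {
    ("Sonnet 4.6", "BrowseComp"): (33.3, 46.6),
    ("Opus 4.6", "BrowseComp"): (45.3, 61.6),
    ("Sonnet 4.6", "DeepsearchQA F1"): (52.6, 59.4),
    ("Opus 4.6", "DeepsearchQA F1"): (69.8, 77.3),
}

# Absolute gain per row, in percentage points.
gains = {key: after - before for key, (before, after) in results.items()}
mean_gain = sum(gains.values()) / len(gains)

# Relative gain per row, for comparison with the percentage-point reading.
relative = {key: (after - before) / before for key, (before, after) in results.items()}
mean_relative = sum(relative.values()) / len(relative)

for (model, bench), gain in gains.items():
    print(f"{model:<11} {bench:<16} +{gain:.1f} pts")
print(f"Mean absolute gain: {mean_gain:.1f} pts")
print(f"Mean relative gain: {mean_relative:.1%}")
```

The mean absolute gain is about 10.98 points, which rounds to 11. Averaging relative improvements instead (13.3 / 33.3, and so on) gives roughly 25%, which is why the percentage-point reading looks like the more plausible source of the headline figure.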

What is BrowseComp?

BrowseComp is a benchmark developed by OpenAI that tests whether an AI agent can navigate multiple websites to find a single, deliberately hard-to-locate piece of information. Anthropic used BrowseComp to evaluate dynamic filtering and reports Claude Opus 4.6 improved from 45.3% to 61.6% accuracy with the feature enabled.

What is DeepsearchQA?

DeepsearchQA is a benchmark published by Google DeepMind that presents agents with research queries requiring many correct answers, all found through systematic web search. Performance is measured using an F1 score that balances precision and recall. Claude Opus 4.6 with dynamic filtering scored 77.3% F1, up from 69.8% without it.
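To make "an F1 score that balances precision and recall" concrete, here is a minimal set-based sketch. It assumes each answer can be matched as a normalized string; DeepsearchQA's actual grading may normalize or match answers differently, so `answer_f1` is a hypothetical helper, not the benchmark's scorer.

```python
def answer_f1(predicted: set[str], gold: set[str]) -> float:
    """Set-based F1: harmonic mean of precision and recall (illustrative)."""
    norm_pred = {p.strip().lower() for p in predicted}
    norm_gold = {g.strip().lower() for g in gold}
    if not norm_pred or not norm_gold:
        return 0.0
    true_pos = len(norm_pred & norm_gold)
    if true_pos == 0:
        return 0.0
    precision = true_pos / len(norm_pred)  # share of returned answers that are correct
    recall = true_pos / len(norm_gold)     # share of correct answers that were found
    return 2 * precision * recall / (precision + recall)


# The agent finds 2 of 4 correct answers and adds 1 wrong one.
gold = {"Paris", "Lyon", "Marseille", "Nice"}
predicted = {"paris", "lyon", "berlin"}
print(round(answer_f1(predicted, gold), 3))  # prints 0.571 (precision 2/3, recall 1/2)
```

Because F1 is a harmonic mean, an agent cannot score well by returning a few safe answers (high precision, low recall) or by listing everything it finds (high recall, low precision); it has to search both accurately and exhaustively.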

The Token Cost Nuance Developers Must Understand

The 24% reduction in input tokens is real, but Anthropic is explicit that it does not translate into universal cost savings. Price-weighted token usage decreased for Sonnet 4.6 on both benchmarks but increased for Opus 4.6.

The reason: Claude generates code to perform the filtering, and that code generation consumes output tokens. For Opus 4.6, which writes more complex filtering logic, the output token cost can outweigh input token savings. Anthropic’s guidance is direct: “To better understand your own costs, we recommend evaluating this tool against a representative set of web search queries your agent is likely to encounter in production.”
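The trade-off reduces to a price-weighted sum: cost = input tokens × input price + output tokens × output price. The sketch below uses made-up token counts and placeholder prices (output priced at 5× input); none of these numbers are Anthropic's rates or benchmark measurements. It shows how the same 24% input reduction can save money or cost more depending on how many extra output tokens the filtering code takes. `Usage`, `price_weighted_cost`, and `break_even_extra_output` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Usage:
    input_tokens: int
    output_tokens: int


def price_weighted_cost(u: Usage, in_price: float, out_price: float) -> float:
    """Dollar cost, with prices given per million tokens."""
    return (u.input_tokens * in_price + u.output_tokens * out_price) / 1_000_000


def break_even_extra_output(baseline: Usage, input_cut: float,
                            in_price: float, out_price: float) -> float:
    """Extra output tokens that exactly cancel the input-token savings."""
    saved_input_tokens = baseline.input_tokens * input_cut
    return saved_input_tokens * in_price / out_price


# Placeholder prices per million tokens, NOT real rates.
IN_PRICE, OUT_PRICE = 1.0, 5.0

# Hypothetical per-query usage.
baseline = Usage(input_tokens=100_000, output_tokens=2_000)  # no filtering
light = Usage(input_tokens=76_000, output_tokens=4_000)      # 24% fewer inputs, short filter code
heavy = Usage(input_tokens=76_000, output_tokens=8_000)      # 24% fewer inputs, long filter code

for name, u in [("baseline", baseline), ("light filter", light), ("heavy filter", heavy)]:
    print(f"{name:<13} ${price_weighted_cost(u, IN_PRICE, OUT_PRICE):.3f}")
print("break-even extra output tokens:",
      round(break_even_extra_output(baseline, 0.24, IN_PRICE, OUT_PRICE)))
```

With these illustrative inputs, filtering pays off only while it adds fewer than 4,800 output tokens per query: the "light" case is cheaper than the baseline, the "heavy" case more expensive. That is the same qualitative split Anthropic reports between Sonnet 4.6 and Opus 4.6. Real break-even points depend on your measured token counts and current pricing.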

Does dynamic filtering reduce API costs?

Dynamic filtering reduces input tokens by 24% and lowers price-weighted costs for Sonnet 4.6 workloads. For Opus 4.6, price-weighted token costs increased in Anthropic’s tests because the model generates more complex filtering code, consuming additional output tokens. Anthropic recommends testing against your specific production queries to measure the actual cost impact.

How to Enable Dynamic Filtering: API Implementation

Dynamic filtering is on by default when using the web_search_20260209 and web_fetch_20260209 tool versions with Claude Sonnet 4.6 and Opus 4.6 on the Claude API. Developers must include Anthropic’s beta header in their requests.

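```shell
# Messages API request with the beta header and both updated web tools.
curl https://api.anthropic.com/v1/messages \
  -H "x-api-key: $ANTHROPIC_API_KEY" \
  -H "anthropic-version: 2023-06-01" \
  -H "anthropic-beta: code-execution-web-tools-2026-02-09" \
  -H "content-type: application/json" \
  -d '{
    "model": "claude-opus-4-6",
    "max_tokens": 4096,
    "tools": [
      { "type": "web_search_20260209", "name": "web_search" },
      { "type": "web_fetch_20260209", "name": "web_fetch" }
    ],
    "messages": [
      {
        "role": "user",
        "content": "Search for the current prices of AAPL and GOOGL, then calculate which has a better P/E ratio."
      }
    ]
  }'
```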
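Python callers can assemble the same request with only the standard library. This is a sketch: `build_request` is a hypothetical helper, and the endpoint and `anthropic-version: 2023-06-01` header are the standard Messages API values, not something specific to this announcement. The function only constructs a `urllib.request.Request` without sending it, so you can inspect headers and body before calling `urllib.request.urlopen` yourself.

```python
import json
import os
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"
BETA_HEADER = "code-execution-web-tools-2026-02-09"


def build_request(prompt: str, model: str = "claude-opus-4-6",
                  max_tokens: int = 4096) -> urllib.request.Request:
    """Hypothetical helper: build (but do not send) a Messages API request
    with the dynamic-filtering web tool versions enabled."""
    body = {
        "model": model,
        "max_tokens": max_tokens,
        "tools": [
            {"type": "web_search_20260209", "name": "web_search"},
            {"type": "web_fetch_20260209", "name": "web_fetch"},
        ],
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {
        "x-api-key": os.environ.get("ANTHROPIC_API_KEY", ""),
        "anthropic-version": "2023-06-01",
        "anthropic-beta": BETA_HEADER,
        "content-type": "application/json",
    }
    return urllib.request.Request(
        API_URL, data=json.dumps(body).encode("utf-8"), headers=headers, method="POST"
    )


req = build_request(
    "Search for the current prices of AAPL and GOOGL, "
    "then calculate which has a better P/E ratio."
)
print(req.get_method(), req.full_url)
# urllib stores header names capitalized, e.g. "Anthropic-beta".
print(req.get_header("Anthropic-beta"))
# To send: with urllib.request.urlopen(req) as resp: data = json.load(resp)
```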

Anthropic notes that for complex queries, such as sifting through technical documentation or verifying citations, developers can expect performance improvements similar to those shown in the benchmarks above.

How do I enable Claude’s dynamic filtering?

Enable dynamic filtering by specifying the web_search_20260209 tool version (and the corresponding web_fetch_20260209) in your API call and including the anthropic-beta: code-execution-web-tools-2026-02-09 header. The feature activates by default with Claude Sonnet 4.6 and Opus 4.6 once those tool versions are used. Full implementation details are available in Anthropic’s Claude Developer Platform documentation.

Customer Spotlight: Quora’s Poe Platform

Quora’s Poe platform is described by Anthropic as “one of the largest multi-model AI platforms, giving millions of users access to over 200 models through a single interface.” Gareth Jones, Product and Research Lead at Quora, is quoted in Anthropic’s announcement: “Opus 4.6 with dynamic filtering achieved the highest accuracy on our internal evals when tested against other frontier models.”

Jones further describes the behavioral shift: “The model behaves like an actual researcher, writing Python to parse, filter, and cross-reference results rather than reasoning over raw HTML in context.” This quote is published as a customer spotlight within Anthropic’s official blog and reflects Quora’s internal evaluation findings.

Five Tools Now Generally Available on the Claude API

Alongside the dynamic filtering announcement, Anthropic confirmed that five tools have reached General Availability on the Claude Developer Platform. All are available now:

- Code execution – a sandbox for agents to run code during a conversation to filter context, analyze data, or perform calculations

- Memory – stores and retrieves information across conversations through a persistent file directory, so agents retain context without keeping everything in the context window

- Programmatic tool calling – executes complex multi-tool workflows in code, keeping intermediate results out of the context window

- Tool search – dynamically discovers tools from large libraries without loading all definitions into the context window

- Tool use examples – provides sample tool calls directly inside tool definitions to demonstrate usage patterns and reduce parameter errors

These tools share the same design goal: reduce what the model must hold in context by offloading repetitive or structural work to code and tools.
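The idea behind programmatic tool calling can be illustrated without the real API: rather than the model receiving every intermediate tool result, a script calls the tools, aggregates the data, and returns only a short final answer. In this sketch, `get_price` and `get_eps` are hypothetical stand-ins for tools, backed by made-up numbers, and the workflow is not Anthropic's implementation; it only shows why intermediate results never need to enter the context window.

```python
# Hypothetical stand-ins for tools, with made-up data.
FAKE_PRICES = {"AAPL": 200.0, "GOOGL": 150.0}
FAKE_EPS = {"AAPL": 6.5, "GOOGL": 7.5}


def get_price(ticker: str) -> float:
    return FAKE_PRICES[ticker]


def get_eps(ticker: str) -> float:
    return FAKE_EPS[ticker]


def lowest_pe(tickers: list[str]) -> str:
    """Run the multi-tool workflow in code and return one short summary.
    Only this string would be passed back into the model's context."""
    pe_ratios = {t: get_price(t) / get_eps(t) for t in tickers}  # intermediate data stays here
    best = min(pe_ratios, key=pe_ratios.get)
    return f"{best} has the lowest P/E ({pe_ratios[best]:.1f}) of {len(tickers)} tickers"


print(lowest_pe(["AAPL", "GOOGL"]))
# prints GOOGL has the lowest P/E (20.0) of 2 tickers
```

With two tickers the savings are trivial, but the same pattern keeps hundreds of intermediate tool results out of context when a workflow fans out over many calls.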

Limitations and Considerations

This article is based exclusively on Anthropic’s official February 2026 blog post and Claude API documentation. Benchmark results for BrowseComp and DeepsearchQA reflect Anthropic’s own controlled evaluations using only web search tools, and performance on production workloads may vary. Anthropic explicitly states that cost impact differs between Sonnet 4.6 and Opus 4.6 and recommends developers test their specific query sets before drawing conclusions about cost savings. Dynamic filtering availability applies to the Claude API; no claims are made here about availability on the Claude.ai consumer interface.

Frequently Asked Questions (FAQs)

What is Claude’s web search dynamic filtering?

Claude’s dynamic filtering is a capability of the updated web_search_20260209 and web_fetch_20260209 tools where Claude writes and executes code to post-process search results before they enter the context window. This removes irrelevant HTML and retains only relevant content. Anthropic reports it improved average search accuracy by 11% and reduced input tokens by 24% in their February 2026 benchmark tests.

Which Claude models support dynamic filtering?

Dynamic filtering is supported by Claude Sonnet 4.6 and Claude Opus 4.6 when using the web_search_20260209 and web_fetch_20260209 tool versions on the Anthropic API. Anthropic states the feature is turned on by default when these tool versions are used with those models, requiring only the correct beta header in the API request.

Does dynamic filtering always reduce API costs?

Anthropic reports that dynamic filtering reduced price-weighted token costs for Sonnet 4.6 on both benchmarks but increased them for Opus 4.6. The difference comes from how much filtering code the model writes: more complex filtering generates more output tokens. Anthropic recommends evaluating the tool on a representative sample of production queries to understand the cost impact for your specific workload.

What are BrowseComp and DeepsearchQA?

BrowseComp, developed by OpenAI, tests whether an agent can navigate many websites to find a single hard-to-find piece of information. DeepsearchQA, from Google DeepMind, evaluates systematic multi-step research by measuring F1 scores across queries with many correct answers. Anthropic used both to benchmark dynamic filtering in February 2026.

What other tools did Anthropic make generally available alongside dynamic filtering?

Alongside dynamic filtering, Anthropic confirmed five tools have reached General Availability on the Claude Developer Platform: code execution, memory, programmatic tool calling, tool search, and tool use examples. All are available now and are designed to help agents handle complex, token-intensive tasks by keeping intermediate data out of the context window.

How is DeepsearchQA’s F1 score measured?

DeepsearchQA measures performance using an F1 score that balances precision and recall — capturing both how accurate the returned answers are and how complete the search coverage is. Claude Opus 4.6 with dynamic filtering scored 77.3% F1, up from 69.8% without filtering, per Anthropic’s February 2026 benchmarks.