Essential Points

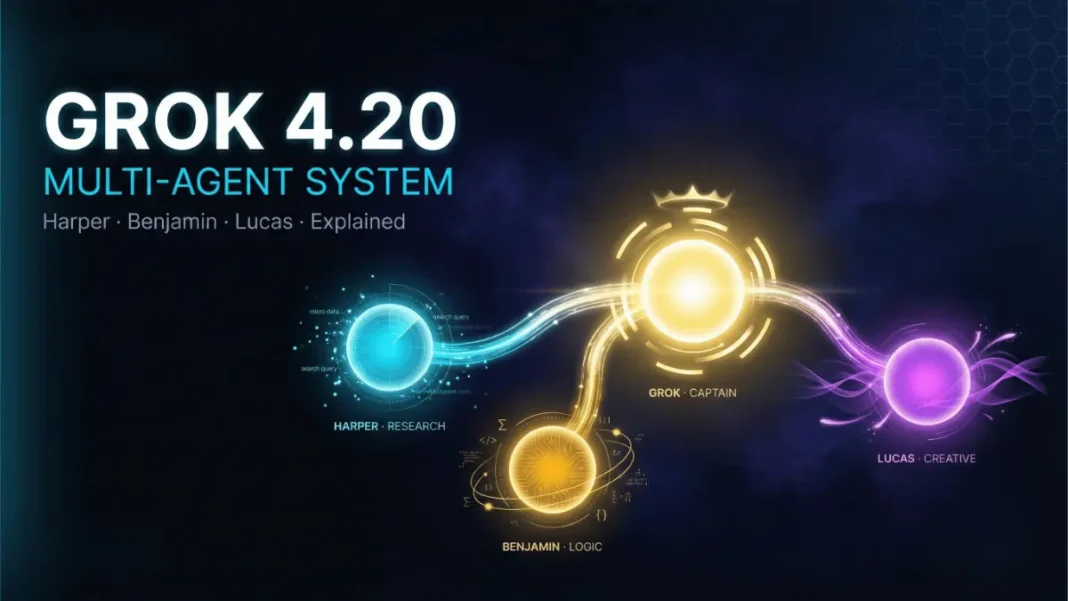

- Grok 4.20 launched in beta on February 17, 2026, deploying four named agents: Grok, Harper, Benjamin, and Lucas

- All four agents run simultaneously: each approaches the problem from its own domain and debates the others’ outputs, then Grok synthesizes the final answer

- The peer-review mechanism reduced hallucinations by 65%, from approximately 12% to 4.2%

- Access requires SuperGrok (~$30/month) or X Premium+; API access is not yet public

xAI didn’t release a bigger model on February 17, 2026. It released a team. Grok 4.20 is the first consumer-facing AI system from a major lab where four specialized agents, each with a distinct role, reason in parallel, debate each other in real time, and produce a unified answer before the user sees a single word. What follows is a precise breakdown of who those agents are, what they do, and why this architectural decision produces measurably different outcomes.

The Four Agents at a Glance

| Agent | Role | Primary Responsibilities | Workflow Position |
|---|---|---|---|
| Grok (Captain) | Coordinator / Aggregator | Task decomposition, final answer synthesis, conflict resolution | Orchestrates all three agents; delivers final output |
| Harper | Research & Facts Expert | Real-time web search, X Firehose data retrieval, evidence assembly | Activated first; supplies raw intelligence to Benjamin and Lucas |
| Benjamin | Math / Code / Logic Expert | Rigorous step-by-step reasoning, code execution, numerical computation, mathematical proofs | Verifies Harper’s data; checks logical consistency |
| Lucas | Creative & Balance Expert | Divergent thinking, alternative framings, writing optimization, user experience | Challenges conventional solution paths; optimizes final output readability |

Grok: The Captain Agent

Grok is the decision-maker of the system, not a passive aggregator. When a user submits a query, Grok analyzes task complexity, breaks the problem into sub-tasks, and dispatches each to the appropriate agent simultaneously. After all agents return their outputs, Grok adjudicates disagreements and synthesizes the final response.

Users can watch this entire process unfold through a new live thinking interface, with progress indicators and notes from each agent visible in real time. Standard users get four agents per query; Heavy mode scales the system to 16 agents on the same prompt for extreme-complexity tasks.

Harper: The Research Agent

Harper is Grok 4.20’s information retrieval engine, and it is the system’s most direct structural advantage over competing AI models. Harper has exclusive access to the X Firehose (approximately 68 million English-language posts per day), enabling millisecond-level conversion of market sentiment and breaking news into usable intelligence.

When a user queries Grok 4.20 about a stock, a live event, or a rapidly developing story, Harper is not searching a cached index. It is pulling live signal from the largest real-time public data stream accessible to any AI system. GPT-5, Gemini, and Claude have no equivalent integration.

What is Harper’s role in Grok 4.20?

Harper is the research agent within Grok 4.20’s four-agent system. It performs real-time web searches, retrieves documents, and accesses the X Firehose (approximately 68 million English posts per day) to supply factual evidence. Benjamin then verifies Harper’s findings before Grok synthesizes the final answer.

Benjamin: The Logic Agent

If Harper brings the evidence, Benjamin interrogates it. Benjamin’s domain is rigorous reasoning: step-by-step logic chains, code execution, numerical computation, and mathematical proofs, operating at what xAI describes as “mathematical proof-level precision.”

Benjamin’s practical impact appeared early in the beta. Mathematician Paata Ivanisvili used an internal beta version of Grok 4.20 to achieve new mathematical discoveries related to Bellman functions, a domain requiring exactly the formal verification Benjamin is built for. In the Alpha Arena live trading competition, Benjamin’s quantitative verification contributed directly to Grok 4.20 being the only AI model to post a profit while all competitors (GPT-5, Claude, and Gemini) recorded losses.

What does Benjamin do in Grok 4.20?

Benjamin is the logic and verification agent in Grok 4.20. It performs step-by-step mathematical reasoning, code execution, and numerical computation at proof-level precision. Benjamin cross-checks Harper’s sourced data and verifies logical consistency before Grok assembles the final output.

Lucas: The Creative Agent

Lucas is the system’s deliberate wildcard. Its function is divergent thinking: approaching problems from unconventional angles, generating alternative framings, and optimizing final outputs for readability and user experience.

Lucas prevents Grok 4.20 from defaulting to the most statistically common solution path. In long-form content tasks, Lucas focuses on structure and narrative coherence while Harper ensures factual accuracy and Benjamin verifies logic, a three-layer check that distinguishes the system from single-model chain-of-thought inference.

What is Lucas’s role in Grok 4.20?

Lucas is the creative and lateral-thinking agent in Grok 4.20. It decomposes problems from non-standard angles, generates alternative framings, and optimizes final outputs for user experience and readability. Lucas acts as the creative counterweight to Benjamin’s formal logic.

How the Four Agents Orchestrate Together

The multi-agent workflow unfolds across four distinct phases, not as a sequential pipeline but as a live, parallel collaboration.

- Task Decomposition: Grok (Captain) receives the user query, analyzes task type, and activates Harper, Benjamin, and Lucas simultaneously

- Parallel Thinking: All four agents analyze the problem from their respective domains at the same time; no agent waits for another to complete

- Internal Discussion & Peer Review: Agents exchange intermediate outputs; if Benjamin’s calculation contradicts Harper’s sourced data, they iterate and resolve the conflict internally before proceeding

- Aggregated Output: Grok synthesizes all agent conclusions into a single, coherent response

This mechanism functions like four specialists at a meeting table: each contributes a professional view, disagreements are resolved through discussion, and the moderator delivers the final conclusion.
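The four phases can be sketched in a few lines of Python. This is a toy illustration, not xAI’s implementation: the `Finding` type, the agent coroutines, the canned values, and the majority-vote rule in `synthesize` are all assumptions made for the example. In particular, the article says conflicting agents iterate until they resolve the disagreement, whereas this sketch only flags conflicts and takes the most common value.

```python
import asyncio
from collections import Counter
from dataclasses import dataclass


@dataclass
class Finding:
    agent: str
    value: float  # the figure this agent reports for the query
    note: str


# Hypothetical stand-ins for the three specialists. Real agents would call
# a model or a search backend; these return canned values.
async def harper(query: str) -> Finding:
    await asyncio.sleep(0)  # placeholder for a live retrieval call
    return Finding("Harper", 12.0, "figure quoted in a retrieved source")


async def benjamin(query: str) -> Finding:
    await asyncio.sleep(0)  # placeholder for a computation
    return Finding("Benjamin", 12.5, "figure recomputed from raw inputs")


async def lucas(query: str) -> Finding:
    await asyncio.sleep(0)  # placeholder for an alternative framing
    return Finding("Lucas", 12.5, "alternative framing matches recomputation")


def peer_review(findings: list[Finding], tolerance: float = 0.1) -> list[str]:
    """Phase 3: list every pair of agents whose values disagree."""
    conflicts = []
    for i, a in enumerate(findings):
        for b in findings[i + 1:]:
            if abs(a.value - b.value) > tolerance:
                conflicts.append(f"{a.agent} vs {b.agent}")
    return conflicts


def synthesize(findings: list[Finding], conflicts: list[str]) -> dict:
    """Phase 4: the captain picks the value most agents agree on (assumed rule)."""
    value, votes = Counter(f.value for f in findings).most_common(1)[0]
    return {"value": value, "votes": votes, "conflicts": conflicts}


async def answer(query: str) -> dict:
    # Phases 1 and 2: dispatch to all specialists at once; none waits for another.
    findings = list(await asyncio.gather(harper(query), benjamin(query), lucas(query)))
    return synthesize(findings, peer_review(findings))


result = asyncio.run(answer("What is the figure?"))
print(result)
```

Here `asyncio.gather` stands in for the parallel-thinking phase, and the disagreement between the sourced and recomputed figures is surfaced in `conflicts` rather than silently dropped.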

How does Grok 4.20’s multi-agent orchestration work?

Grok 4.20 routes each query through four parallel agents: Harper (research), Benjamin (logic), Lucas (creative), and Grok (coordination). Agents debate intermediate outputs before Grok synthesizes the final answer. This internal peer-review loop reduced hallucination rates from approximately 12% to 4.2%, a 65% reduction.
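The 65% figure is a relative reduction, not a drop in percentage points. A quick check using only the two rates quoted above (the function name is just for illustration):

```python
def relative_reduction(before: float, after: float) -> float:
    """Fractional drop from `before` to `after`."""
    return (before - after) / before


baseline = 0.12   # ~12% hallucination rate, as quoted in the article
reviewed = 0.042  # 4.2% after peer review, as quoted in the article

reduction = relative_reduction(baseline, reviewed)
points = (baseline - reviewed) * 100  # absolute drop in percentage points

print(f"relative reduction: {reduction:.0%}")    # prints "relative reduction: 65%"
print(f"absolute drop: {points:.1f} points")     # prints "absolute drop: 7.8 points"
```

So the two quoted rates are consistent with the headline number: a drop of 7.8 percentage points on a 12% baseline is a 65% relative reduction.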

Usage Modes: Choosing the Right One

Grok 4.20 is one of four modes in the current Grok selector.

| Mode | Underlying Model | Best Use Case | Speed |
|---|---|---|---|
| Fast | Grok 4.1 | Daily chat, simple Q&A | Fastest |
| Expert | Grok 4.x deep version | Questions requiring deep single-model reasoning | Medium |
| Grok 4.20 Beta | 4 Agents multi-agent | Complex research, coding, multi-domain strategy | Slower |
| Heavy | Ultra-large expert team (16 agents) | Academic research, extreme-difficulty problems | Slowest |

xAI recommends Fast mode for 80% of daily queries. The 4-agent system delivers most value when problems span multiple disciplines or require multi-perspective verification.
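As a rough decision aid, the table and recommendation above can be turned into a small rule of thumb. The function and its parameters are hypothetical: the Grok selector is a manual choice, and the thresholds here are one reading of the guidance rather than anything xAI publishes.

```python
from enum import Enum


class Mode(Enum):
    FAST = "Fast"
    EXPERT = "Expert"
    GROK_4_20_BETA = "Grok 4.20 Beta"
    HEAVY = "Heavy"


def pick_mode(domains: int, needs_deep_reasoning: bool = False,
              extreme_difficulty: bool = False) -> Mode:
    """Suggest a mode from how many disciplines a question spans (assumed heuristic)."""
    if extreme_difficulty:
        return Mode.HEAVY            # 16-agent team, slowest
    if domains > 1:
        return Mode.GROK_4_20_BETA   # multi-discipline: four-agent system
    if needs_deep_reasoning:
        return Mode.EXPERT           # deep single-model reasoning
    return Mode.FAST                 # everyday chat and simple Q&A


print(pick_mode(domains=1).value)
print(pick_mode(domains=3).value)
```

The ordering of the checks reflects the speed column: the sketch only escalates to a slower mode when a cheaper one is unlikely to be enough.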

Best Use Cases by Agent Combination

Grok 4.20’s architecture creates specific advantages across task types.

- Investment research: Harper gathers live X sentiment; Benjamin runs quantitative verification; Lucas frames risk narratives

- Complex programming: Benjamin handles logic and code structure; Harper checks documentation; Lucas optimizes readability

- Academic research: Benjamin provides mathematical proof-level validation; Harper sources literature; Lucas generates creative hypotheses

- Long-form content creation: Lucas structures narrative and tone; Harper ensures factual accuracy; Benjamin verifies logical consistency
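The same breakdown can be written as a lookup table, which makes it easy to see which agent each task type leans on first. The duties are transcribed from the list above; treating the first-listed agent as the “lead” is an assumption of this sketch, not something the article states.

```python
# Agent duties per task type, transcribed from the list above.
# Python dicts keep insertion order, so the first key is the first-listed agent.
USE_CASES: dict[str, dict[str, str]] = {
    "investment research": {
        "Harper": "gathers live X sentiment",
        "Benjamin": "runs quantitative verification",
        "Lucas": "frames risk narratives",
    },
    "complex programming": {
        "Benjamin": "handles logic and code structure",
        "Harper": "checks documentation",
        "Lucas": "optimizes readability",
    },
    "academic research": {
        "Benjamin": "provides mathematical proof-level validation",
        "Harper": "sources literature",
        "Lucas": "generates creative hypotheses",
    },
    "long-form content creation": {
        "Lucas": "structures narrative and tone",
        "Harper": "ensures factual accuracy",
        "Benjamin": "verifies logical consistency",
    },
}


def lead_agent(task: str) -> str:
    """Return the first-listed agent for a task type (assumed to be the lead)."""
    return next(iter(USE_CASES[task]))


def tasks_led_by(agent: str) -> list[str]:
    """List the task types whose first-listed agent is `agent`."""
    return [task for task in USE_CASES if lead_agent(task) == agent]


print(lead_agent("investment research"))
print(tasks_led_by("Benjamin"))
```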

Limitations and Honest Considerations

Grok 4.20 introduces latency that single-model systems avoid. Routing a query through four parallel agents and a synthesis layer adds computational overhead, even on xAI’s 200,000-GPU Colossus cluster. For simple queries, xAI explicitly recommends Fast mode (Grok 4.1) over the 4-agent system.

The captain-agent judgment layer introduces a meta-reasoning risk: if Grok the coordinator misidentifies which agent’s output to trust, errors can pass through the synthesis layer. This failure mode does not exist in single-model architectures. Additionally, API pricing for multi-agent inference has not been disclosed; access remains restricted to SuperGrok (~$30/month) or X Premium+ subscribers only.

The 3 trillion parameter figure attributed to Grok 4.20 is speculative; xAI has not officially confirmed this number.

Grok 4.20 vs. Competing Architectures

| Dimension | Grok 4.20 | GPT-5 | Claude Opus 4.5 | Gemini 3 Pro |
|---|---|---|---|---|
| Architecture | 4 parallel specialized agents | Single-model + CoT | Single-model + CoT | Single-model |
| Real-time data | X Firehose (68M tweets/day) | None | None | Limited |
| Hallucination rate | ~4.2% (65% reduction) | Not disclosed | Not disclosed | Not disclosed |
| Alpha Arena trading | +12.11% avg; only profit | Loss | Loss | Loss |
| ForecastBench rank | 2nd globally | Below Grok 4.20 | Below Grok 4.20 | Below Grok 4.20 |
| Arena ELO | 1505–1535 (est.) | Below Grok 4.20 | Below Grok 4.20 | ~1500 (first to break barrier) |
| Context window | 256K–2M tokens | 128K | 1M | 1M |
| Consumer access | SuperGrok / X Premium+ | ChatGPT Plus | Claude.ai Pro | Gemini Advanced |

Frequently Asked Questions (FAQs)

What is Grok 4.20?

Grok 4.20 is xAI’s multi-agent AI system launched in beta on February 17, 2026. It deploys four specialized agents (Grok (Captain), Harper, Benjamin, and Lucas) that think in parallel, debate each other’s outputs, and synthesize a unified answer. It runs on xAI’s 200,000-GPU Colossus supercluster.

Who are Harper, Benjamin, and Lucas in Grok 4.20?

Harper is the research agent handling real-time web and X Firehose data retrieval. Benjamin is the logic agent managing mathematical reasoning and code verification. Lucas is the creative agent providing divergent thinking and output optimization. Grok (Captain) coordinates all three and delivers the final synthesized answer.

How is Grok 4.20 different from GPT-5 or Claude?

Grok 4.20 uses a native multi-agent architecture where four agents reason simultaneously and peer-review each other’s work before output. GPT-5 and Claude Opus 4.5 rely on single-model inference. Grok also has exclusive real-time access to the X Firehose (68 million English posts per day), which no competitor replicates.

How do the four agents reduce hallucinations?

Harper supplies evidence, Benjamin verifies it through rigorous reasoning, Lucas stress-tests assumptions from creative angles, and Grok adjudicates disagreements before outputting. This peer-review loop reduced hallucination rates from approximately 12% to 4.2%, a verified 65% reduction.

How did Grok 4.20 perform in real-money trading?

In the Alpha Arena real-money stock trading competition, Grok 4.20 was the only AI model to achieve profitability. It posted an average return of 12.11% with a peak return of up to 50%. GPT-5, Claude, and Gemini all posted losses. xAI attributes the edge to exclusive real-time X Firehose integration.

How do I access Grok 4.20?

Grok 4.20 Beta is available on grok.com and the iOS/Android Grok apps. Access requires a SuperGrok subscription (~$30/month) or X Premium+ membership. Public API access has not launched yet but is expected in a broader rollout.

What is the context window of Grok 4.20?

Grok 4.20 supports a minimum 256K token context window in standard configurations. Select API versions extend this to 2 million tokens, enabling processing of ultra-long documents, codebases, and multi-session conversations in a single inference call.

Can Grok 4.20 scale beyond four agents?

Yes. Standard queries use four agents. Grok 4.20’s Heavy mode scales the system to 16 agents on the same prompt, built for extreme-complexity tasks such as academic research and multi-domain strategy problems requiring maximum depth.