Quick Brief

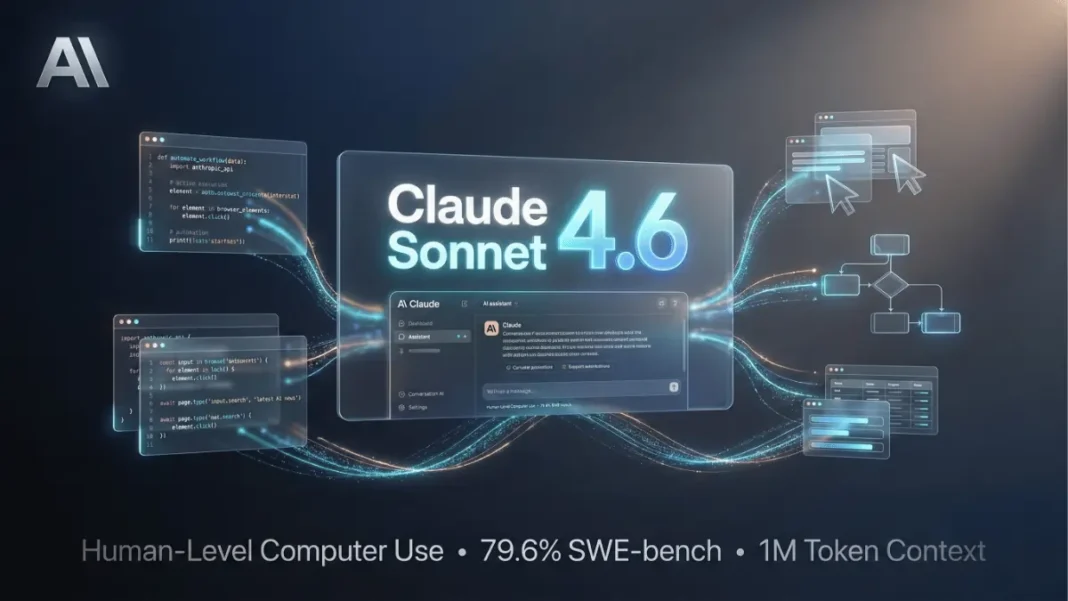

- Claude Sonnet 4.6 matches Opus 4.5 performance across coding and office tasks at $3/$15 per million tokens

- Early users report human-level computer use on tasks such as navigating spreadsheets and completing multi-step web forms autonomously

- 1M token context window processes entire codebases and lengthy contracts in single requests

- Available since February 17, 2026 across all Claude plans, including an upgraded free tier with file creation

Anthropic released Claude Sonnet 4.6 on February 17, 2026, marking the most significant upgrade to its Sonnet model line. The model delivers performance that previously required Opus-class intelligence, including on economically valuable office tasks and complex coding, while maintaining Sonnet’s pricing of $3 input/$15 output per million tokens. Early users prefer Sonnet 4.6 over its predecessor 70% of the time, and even favor it over November 2025’s Claude Opus 4.5 in 59% of cases.

Performance That Closes the Intelligence Gap

Claude Sonnet 4.6 represents a fundamental shift in AI model economics. Performance benchmarks show it approaching Opus-level capabilities across coding, long-context reasoning, and agent planning while remaining affordable for tasks that were previously cost-prohibitive. The model demonstrates major improvements in consistency and instruction following, two areas where developers reported frustration with earlier iterations.

Users testing Sonnet 4.6 in Claude Code environments reported that it more effectively reads context before modifying code and consolidates shared logic rather than duplicating it. This reduced the friction developers experienced during extended coding sessions. The model also shows significantly less “overengineering” and “laziness”, both common complaints about previous versions, along with fewer false claims of success and more consistent follow-through on multi-step tasks.

Computer Use: From Experimental to Human-Level

Anthropic introduced the first general-purpose computer-using AI model in October 2024, acknowledging it was “still experimental, at times cumbersome and error-prone”. Sixteen months later, Sonnet 4.6 demonstrates remarkable progress on OSWorld, the standard benchmark presenting hundreds of tasks across real software like Chrome, LibreOffice, and VS Code.

The model interacts with computers the way humans do, clicking a virtual mouse and typing on a virtual keyboard, without special APIs or purpose-built connectors. Early Sonnet 4.6 users report human-level capability in navigating complex spreadsheets, filling out multi-step web forms, and coordinating work across multiple browser tabs. One insurance company achieved 94% accuracy on its computer use benchmark, calling it “mission-critical to workflows like submission intake and first notice of loss”.

The technology still lags behind skilled humans at computer tasks, but the rate of improvement suggests substantially more capable models are within reach. Anthropic addresses security concerns directly: safety evaluations show Sonnet 4.6 significantly improves resistance to prompt injection attacks compared to Sonnet 4.5, performing similarly to Opus 4.6.

1M Token Context Window: Processing Entire Codebases

Sonnet 4.6’s 1 million token context window holds entire codebases, lengthy contracts, or dozens of research papers in a single request. More importantly, the model reasons effectively across all that context, a capability that enables superior long-horizon planning.

This became evident in the Vending-Bench Arena evaluation, which tests how well a model runs a simulated business over time with competitive elements. Sonnet 4.6 developed an unexpected strategy: it invested heavily in capacity for the first ten simulated months, spending significantly more than competitors, then pivoted sharply to focus on profitability in the final stretch. The timing of this strategic shift helped it finish well ahead of the competition.

Enterprise Document Comprehension

Sonnet 4.6 matches Opus 4.6 performance on OfficeQA, which measures how well a model reads enterprise documents (charts, PDFs, tables), extracts relevant facts, and reasons from those facts. This represents a meaningful upgrade for document comprehension workloads.

Box evaluated Sonnet 4.6 on deep reasoning and complex agentic tasks across real enterprise documents, finding it outperformed Sonnet 4.5 in heavy reasoning Q&A by 15 percentage points. Another customer noted that Sonnet 4.6 “produced the best iOS code we’ve tested” with “better spec compliance, better architecture,” and modern tooling usage that wasn’t explicitly requested.

Financial services companies saw significant improvements in their core products, with one reporting “a significant jump in answer match rate compared to Sonnet 4.5 in our Financial Services Benchmark, with better recall on the specific workflows our customers depend on”.

Frontend Development and Design Sensibility

Early customers independently described visual outputs from Sonnet 4.6 as notably more polished than previous models. The improvements span layouts, animations, and overall design sensibility, with customers needing fewer iteration rounds to reach production-quality results.

One customer stated that Sonnet 4.6 “has perfect design taste when building frontend pages and data reports, and it requires far less hand-holding to get there than anything we’ve tested before”. This capability extends beyond code generation: the model demonstrates genuine understanding of user experience principles and visual hierarchy.

Safety and Reliability Standards

Anthropic conducted extensive safety evaluations of Sonnet 4.6, which showed it to be as safe as or safer than other recent Claude models. Safety researchers concluded that Sonnet 4.6 has “a broadly warm, honest, prosocial, and at times funny character, very strong safety behaviors, and no signs of major concerns around high-stakes forms of misalignment”.

The model shows particular improvement in resisting prompt injection attacks, a critical concern for computer use applications where malicious actors can attempt to hijack the model by hiding instructions on websites. Anthropic provides detailed mitigation strategies in their API documentation for developers implementing computer use features.

Platform Integration and Availability

Claude Sonnet 4.6 launched on February 17, 2026, across all Claude plans, Claude Cowork, Claude Code, the Claude API, and major cloud platforms. Anthropic upgraded its free tier to Sonnet 4.6 by default, and the tier now includes file creation, connectors, skills, and context compaction.

On the Claude Developer Platform, Sonnet 4.6 supports adaptive thinking, extended thinking, and context compaction in beta. Context compaction automatically summarizes older context as conversations approach limits, increasing effective context length. Developers access the model using the claude-sonnet-4-6 identifier via the Claude API.
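The compaction idea described above (summarize older context once a conversation nears its limit) can be illustrated with a toy sketch. This is not Anthropic's implementation: `compact_history`, `word_count`, and `naive_summary` are hypothetical names, word counts stand in for real token counts, and the summary is a placeholder string rather than a model-generated one.

```python
from typing import Callable, List


def compact_history(
    messages: List[str],
    token_limit: int,
    keep_recent: int,
    count_tokens: Callable[[str], int],
    summarize: Callable[[List[str]], str],
) -> List[str]:
    """Replace older messages with a single summary once the history exceeds the limit."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")
    total = sum(count_tokens(m) for m in messages)
    if total <= token_limit or len(messages) <= keep_recent:
        return messages  # under budget, or nothing old enough to summarize
    older, recent = messages[:-keep_recent], messages[-keep_recent:]
    return [summarize(older)] + recent


# Toy stand-ins (assumptions) so the sketch runs without any model call.
def word_count(text: str) -> int:
    return len(text.split())


def naive_summary(older: List[str]) -> str:
    return f"[summary of {len(older)} earlier messages]"


history = ["one two three", "four five six", "seven eight", "nine ten"]
compacted = compact_history(
    history, token_limit=6, keep_recent=2,
    count_tokens=word_count, summarize=naive_summary,
)
print(compacted)
```

The most recent messages are kept verbatim because they usually carry the active task state, while older turns are the cheapest to compress.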

Claude’s web search and fetch tools now automatically write and execute code to filter and process search results, keeping only relevant content in context. This improves both response quality and token efficiency. Code execution, memory, programmatic tool calling, tool search, and tool use examples are now generally available.

For Claude in Excel users, the add-in now supports MCP connectors, enabling Claude to work with external tools like S&P Global, LSEG, Daloopa, PitchBook, Moody’s, and FactSet without leaving Excel. MCP connectors configured in Claude.ai automatically work in Excel on Pro, Max, Team, and Enterprise plans.

Considerations and Limitations

Claude Sonnet 4.6 represents significant progress but retains important limitations. Computer use capabilities still lag behind the most skilled human users, and the technology carries risks, including prompt injection attacks in which malicious actors hide instructions on websites. Real-world computer use is messier and more ambiguous than benchmark environments like OSWorld, with higher stakes for errors. Developers implementing computer use features should review Anthropic’s mitigation strategies in their API documentation. For tasks demanding the absolute deepest reasoning, such as codebase refactoring or coordinating multiple agents in complex workflows, Opus 4.6 remains the stronger option.

Frequently Asked Questions (FAQs)

Is Claude Sonnet 4.6 better than Claude Opus 4.5?

For many tasks, yes. Early users preferred Sonnet 4.6 over Opus 4.5 59% of the time, rating it as significantly less prone to overengineering and “laziness” with better instruction following. However, Anthropic recommends Opus 4.6 for tasks demanding the deepest reasoning, such as codebase refactoring and coordinating multiple agents.

What is the context window size for Claude Sonnet 4.6?

Claude Sonnet 4.6 features a 1 million token context window in beta, capable of holding entire codebases, lengthy contracts, or dozens of research papers in a single request. The model reasons effectively across this full context, enabling superior long-horizon planning.
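As a rough illustration of what a 1 million token budget means in practice, the sketch below estimates whether a set of documents would fit in one request. The 4-characters-per-token figure is an assumption (a common rule of thumb for English text, not a real tokenizer), and the function names are hypothetical; actual token counts depend on the model's tokenizer and the content.

```python
from typing import Iterable

CONTEXT_WINDOW_TOKENS = 1_000_000  # window size stated in the article
CHARS_PER_TOKEN = 4  # assumption: rough heuristic, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate token count using ceiling division on character length."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(documents: Iterable[str], reserve_for_output: int = 0) -> bool:
    """Check whether all documents plus a reserved output budget fit the window."""
    needed = sum(estimate_tokens(d) for d in documents) + reserve_for_output
    return needed <= CONTEXT_WINDOW_TOKENS


# A 400,000-character document is estimated at 100,000 tokens.
print(fits_in_context(["a" * 400_000], reserve_for_output=8_000))
```

Reserving part of the budget for output matters because the response has to fit in the same window as the input.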

When was Claude Sonnet 4.6 released?

Anthropic released Claude Sonnet 4.6 on February 17, 2026, making it available across all Claude plans, Claude Cowork, Claude Code, the API, and major cloud platforms. The free tier was upgraded to Sonnet 4.6 by default on the same date.

Does Claude Sonnet 4.6 support extended thinking?

Yes. Claude Sonnet 4.6 supports both adaptive thinking and extended thinking on the Claude Developer Platform. The model offers strong performance at any thinking effort level, even with extended thinking disabled. Anthropic recommends exploring across the thinking effort spectrum to find the ideal balance for your specific use case.

What computer use capabilities does Claude Sonnet 4.6 have?

Early users report that Claude Sonnet 4.6 shows human-level capability in tasks like navigating complex spreadsheets, filling out multi-step web forms, and coordinating work across multiple browser tabs, although it still lags behind the most skilled human users. It interacts with computers using a virtual mouse and keyboard without requiring special APIs. One insurance company achieved 94% accuracy on its computer use benchmark with Sonnet 4.6.

How much does Claude Sonnet 4.6 cost?

Claude Sonnet 4.6 costs $3 per million input tokens and $15 per million output tokens, the same pricing as Sonnet 4.5. This pricing structure makes Opus-level performance accessible for a significantly wider range of applications. A free tier with Sonnet 4.6 is available with file creation, connectors, and skills.
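To make the per-million-token pricing concrete, here is a minimal cost estimator using the $3 input and $15 output rates quoted above. The function and constant names are illustrative, and the sketch ignores any discounts, caching, or surcharges that may apply in practice.

```python
INPUT_USD_PER_MTOK = 3.0    # $3 per million input tokens (from the article)
OUTPUT_USD_PER_MTOK = 15.0  # $15 per million output tokens (from the article)


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request at the quoted list prices."""
    total = input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK
    return total / 1_000_000


# Example: a 200,000-token prompt with a 4,000-token answer.
cost = estimate_cost_usd(200_000, 4_000)
print(f"${cost:.2f}")  # prints "$0.66"
```

Output tokens cost five times as much as input tokens, so long generated answers can dominate the bill even when the prompt is much larger.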

What benchmarks does Claude Sonnet 4.6 excel at?

Claude Sonnet 4.6 shows improvements across OSWorld (computer use tasks), SWE-bench Verified (coding), OfficeQA (document comprehension), and Terminal-Bench 2.0. It matches Opus 4.6 performance on enterprise document reading and reasoning tasks. The model also excels at Vending-Bench Arena, demonstrating strategic long-horizon planning.

Can Claude Sonnet 4.6 be used for frontend development?

Yes. Early customers report that Sonnet 4.6 produces notably more polished visual outputs with better layouts, animations, and design sensibility than previous models. One customer stated it “has perfect design taste when building frontend pages and data reports”, requiring “far less hand-holding” than anything tested before. Customers need fewer iteration rounds to reach production-quality results.

How does Claude Sonnet 4.6 compare to Opus 4.6?

Sonnet 4.6 matches Opus 4.6 on OfficeQA enterprise document tasks and approaches it on coding benchmarks, scoring 79.6% on SWE-bench Verified. Users preferred Sonnet 4.6 over November 2025’s Opus 4.5 in 59% of cases. However, Anthropic recommends Opus 4.6 for tasks requiring the deepest reasoning, such as codebase refactoring. Opus 4.6 costs about 1.67x as much, at $5/$25 per million tokens.

What are the main differences between Sonnet 4.6 and Sonnet 4.5?

Early users preferred Sonnet 4.6 over Sonnet 4.5 70% of the time, citing major improvements in coding consistency, instruction following, and reduced overengineering. Heavy reasoning Q&A over enterprise documents improved by 15 percentage points in Box’s evaluation. Early users report human-level computer use on some tasks with Sonnet 4.6, versus 4.5’s more limited autonomous execution. Prompt injection resistance improved significantly, and pricing remains the same at $3/$15 per million tokens.

Where can I access Claude Sonnet 4.6?

Claude Sonnet 4.6 is available on Claude.ai (free and paid tiers), Claude API, Claude Code, Claude Cowork, Amazon Bedrock, and Google Cloud Vertex AI as of February 17, 2026. Free tier users automatically received Sonnet 4.6 with file creation, connectors, and skills included. Developers access it using the claude-sonnet-4-6 identifier via the API.
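As a sketch of how the identifier is used, the snippet below builds a request body for the model. The `model`, `max_tokens`, and `messages` fields follow the general shape of a Messages API request, but the helper name and prompt are illustrative, and the endpoint, authentication headers, and SDK call are deliberately left out; consult the official API reference before relying on any of this.

```python
import json

MODEL_ID = "claude-sonnet-4-6"  # identifier quoted in the article


def build_messages_request(prompt: str, max_tokens: int = 1024) -> dict:
    """Assemble a request body for a single-turn user prompt (hypothetical helper)."""
    return {
        "model": MODEL_ID,
        "max_tokens": max_tokens,  # upper bound on generated output tokens
        "messages": [{"role": "user", "content": prompt}],
    }


payload = build_messages_request("Summarize the attached contract in five bullets.")
print(json.dumps(payload, indent=2))
```

Keeping the model identifier in one constant makes it easy to switch models without touching the rest of the calling code.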

What makes Sonnet 4.6 better for coding than previous versions?

Sonnet 4.6 reads context more effectively before modifying code, consolidates shared logic instead of duplicating it, and makes fewer false success claims. It demonstrates less overengineering and improved follow-through on multi-step tasks. Scoring 79.6% on SWE-bench Verified, it approaches Opus-level coding at a fraction of the cost. Bug detection capabilities improved, enabling teams to run more reviewers in parallel.

Claude Sonnet 4.6 pricing and access details

Claude Sonnet 4.6 costs $3 per million input tokens and $15 per million output tokens, identical to Sonnet 4.5 pricing. The model launched on February 17, 2026, across Claude.ai (free and paid tiers), Claude API, Amazon Bedrock, Google Cloud Vertex AI, and Claude Code environments. Free tier users receive Sonnet 4.6 by default with file creation, connectors, and skills included.

How does Claude Sonnet 4.6 compare to GPT-5 and Gemini 3?

Claude Sonnet 4.6 competes directly with frontier models at a lower price point. While Gemini 3 Pro leads overall reasoning benchmarks with 1501 LMArena Elo, Claude Sonnet 4.5 (the previous version) dominated real-world coding at 77.2% on SWE-bench Verified. Sonnet 4.6 builds on this coding strength while adding the computer use capabilities that early users describe as human-level, which distinguishes it from competitors.

How does Claude Sonnet 4.6 compare to Opus 4.6?

Sonnet 4.6 matches Opus 4.6 on OfficeQA (enterprise document comprehension) and approaches it on coding tasks, scoring 79.6% on SWE-bench Verified. However, Opus 4.6 remains superior for the deepest reasoning challenges like codebase refactoring and multi-agent coordination. Opus 4.6 costs $5 input/$25 output per million tokens, about 1.67x the price of Sonnet 4.6, making Sonnet the better choice for most production workloads.

What coding improvements does Claude Sonnet 4.6 offer?

Claude Sonnet 4.6 excels at complex code fixes, especially when searching across large codebases is essential. Development teams running agentic coding at scale report strong resolution rates and the consistency developers require. The model meaningfully closed the gap with Opus on bug detection, enabling teams to run more reviewers in parallel and catch a wider variety of bugs without increasing costs.

What are the key improvements in Sonnet 4.6 coding skills?

Sonnet 4.6 demonstrates major improvements in reading context before modifying code, consolidating shared logic rather than duplicating it, and reducing false success claims. Developers report significantly less overengineering and improved consistency during extended coding sessions. The model scored 79.6% on SWE-bench Verified, closing the gap with Opus-level coding performance while maintaining $3/$15 pricing. Bug detection capabilities improved meaningfully, enabling parallel reviewer deployment.

Sonnet 4.6 vs Sonnet 4.5 benchmark differences

Early users preferred Sonnet 4.6 over Sonnet 4.5 70% of the time, and Sonnet 4.6 shows a 15 percentage point improvement on enterprise reasoning Q&A tasks. On SWE-bench Verified, Sonnet 4.6 scored 79.6% compared to Sonnet 4.5’s 77.2%, a gain of 2.4 percentage points. On computer use, early users report human-level capability on some tasks with Sonnet 4.6, a substantial advancement from 4.5’s more limited autonomous task execution. Prompt injection resistance improved significantly.