Quick Brief

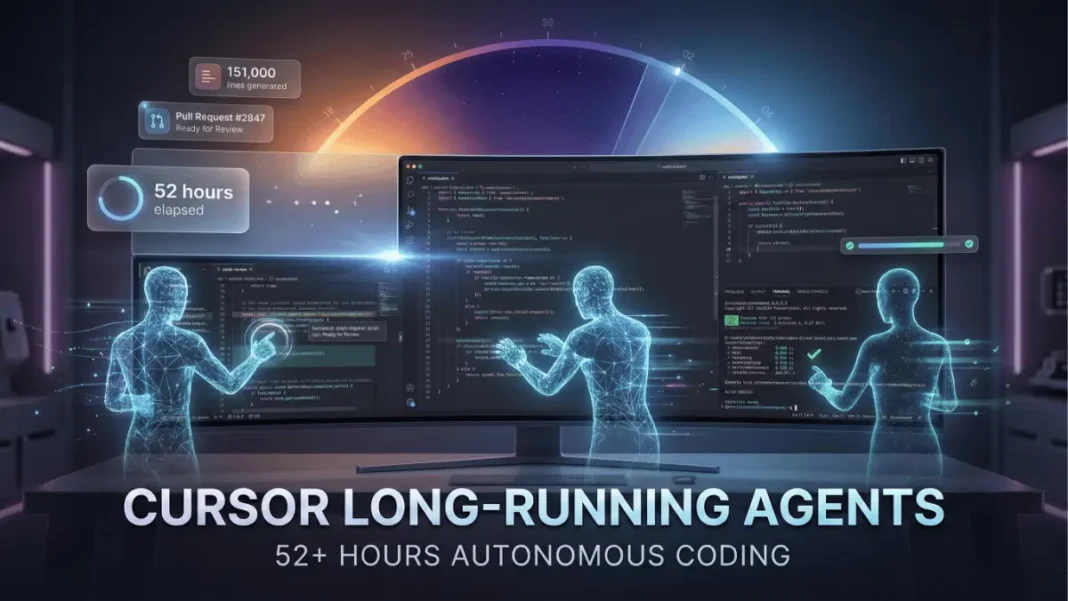

- Cursor launched long-running agents February 12, 2026 for Ultra, Teams, and Enterprise users

- Agents run autonomously for 25-52+ hours producing pull requests with 151,000+ lines of code

- Planning-first approach generates execution roadmap requiring approval before coding begins

- Production tasks include 36-hour chat platform builds and 30-hour mobile app implementations

Cursor fundamentally changed AI-assisted coding on February 12, 2026. Its long-running agents don’t require constant supervision; they work autonomously across multiple days, producing production-ready code while developers focus elsewhere. Early users report compressing quarter-long projects into 48-hour agent runs, with merge rates comparable to those of traditional coding agents despite dramatically larger pull requests.

What Cursor Long-Running Agents Actually Do

Cursor’s long-running agents represent a specialized AI harness designed for extended autonomous coding sessions. Unlike synchronous coding assistants that operate within tight prompt-response loops, these agents tackle projects spanning 25 to 52+ hours without human intervention.

The system addresses a critical limitation discovered during Cursor’s web browser development experiment: frontier AI models consistently fail on long-horizon tasks because they lose context, forget objectives, or stop at partial completion. Cursor’s custom harness addresses this through two mechanisms: upfront planning with approval gates, and multi-agent verification systems that cross-check work against the original plan.

Available exclusively at cursor.com/agents for Ultra ($200/month), Teams, and Enterprise subscribers, the feature launched as a research preview with immediate production access.

Real Production Results From Research Preview

Research preview participants deployed long-running agents on work previously considered too complex for AI assistance. Documented examples include:

- All-new chat platform integration with existing open-source tools completed in 36 hours of autonomous runtime

- Mobile app implementation derived from existing web application architecture finished in 30 hours

- Authentication and RBAC system refactoring completed across 25 hours of agent operation

One developer reported initiating a 52-hour task that generated a pull request containing 151,000 lines of code, requiring minimal follow-up review. Another compressed a planned quarter-long project into multiple days, enabling simultaneous execution of two additional projects while the agent operated.

What is Cursor’s planning-first execution model?

Cursor long-running agents propose a detailed plan and wait for developer approval before writing code. This upfront alignment prevents wrong assumptions from cascading into incorrect solutions during multi-day autonomous work. Multiple agents then check each other’s work against the approved plan to maintain task coherence.
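The approval gate described above can be illustrated with a minimal sketch. This is not Cursor’s implementation; the `Plan`, `review`, and `execute` names are hypothetical and exist only to show the idea that no work starts until a human has approved the proposed plan.

```python
from dataclasses import dataclass
from enum import Enum


class PlanStatus(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"
    REJECTED = "rejected"


@dataclass
class Plan:
    """Hypothetical plan record: a goal plus ordered steps."""
    goal: str
    steps: list[str]
    status: PlanStatus = PlanStatus.PROPOSED


class PlanNotApproved(RuntimeError):
    """Raised when execution is attempted before approval."""


def review(plan: Plan, approved: bool) -> Plan:
    # The human decision is the only way a plan leaves the PROPOSED state.
    plan.status = PlanStatus.APPROVED if approved else PlanStatus.REJECTED
    return plan


def execute(plan: Plan) -> list[str]:
    # Gate: refuse to do any work on an unapproved plan.
    if plan.status is not PlanStatus.APPROVED:
        raise PlanNotApproved(f"plan is {plan.status.value}, not approved")
    # Stand-in for real work: record each step as completed, in order.
    return [f"done: {step}" for step in plan.steps]
```

Placing the check inside `execute` rather than at the call site means a caller cannot skip the gate by accident, which is the property that matters before a multi-day run.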

Cursor’s Internal Production Deployments

Cursor’s engineering team deployed long-running agents on production infrastructure throughout January 2026, testing limits before public release. Three merged implementations demonstrate production viability:

Video Renderer Optimization: An agent completed full migration to Rust with custom kernels for a performance-bottlenecked video renderer. The implementation reproduced identical visual output by working purely from original logic, delivering a 25x performance improvement.

Policy-Driven Network Access: A 10,000-line pull request implemented JSON-driven network policy controls and a local HTTP proxy for sandboxed processes. The agent designed protocol-correct enforcement with safe failure modes, requiring minimal changes during test suite validation.
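To make “JSON-driven policy with safe failure modes” concrete, here is a small sketch of one way such a check could work. The policy schema, the field names (`default`, `rules`, `host`, `ports`, `action`), and the first-match-wins rule order are all assumptions made for illustration; the source does not describe Cursor’s actual format. The safe-failure idea shown is that a malformed policy collapses to deny-all rather than allowing traffic.

```python
import json
from fnmatch import fnmatchcase

# Hypothetical policy schema, invented for this sketch.
EXAMPLE_POLICY = """
{
  "default": "deny",
  "rules": [
    {"host": "internal.example.com", "ports": [443], "action": "deny"},
    {"host": "*.example.com", "ports": [443], "action": "allow"}
  ]
}
"""

DENY_ALL = {"default": "deny", "rules": []}


def _valid_rule(rule):
    return (
        isinstance(rule, dict)
        and isinstance(rule.get("host"), str)
        and isinstance(rule.get("ports"), list)
        and rule.get("action") in ("allow", "deny")
    )


def load_policy(text):
    """Parse a policy; fall back to deny-all if it is malformed."""
    try:
        policy = json.loads(text)
    except ValueError:  # json.JSONDecodeError is a ValueError subclass
        return DENY_ALL
    if not isinstance(policy, dict):
        return DENY_ALL
    rules = policy.get("rules")
    if not isinstance(rules, list) or not all(_valid_rule(r) for r in rules):
        return DENY_ALL
    return {"default": policy.get("default", "deny"), "rules": rules}


def is_allowed(policy, host, port):
    """First matching rule wins; otherwise apply the policy default."""
    for rule in policy["rules"]:
        if fnmatchcase(host.lower(), rule["host"].lower()) and port in rule["ports"]:
            return rule["action"] == "allow"
    return policy["default"] == "allow"
```

A proxy built on this would call `is_allowed(policy, host, port)` before forwarding each connection; because rules are evaluated in order, the specific deny for `internal.example.com` must be listed before the wildcard allow.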

Sudo Support in Cursor CLI: The agent implemented secure sudo password prompting by stitching together multiple subsystems and reasoning through Unix authentication flows. This solution now ships in production Cursor CLI builds.

How Long-Running Agents Compare to Standard AI Coding

According to Cursor’s research data, long-running agents produced substantially larger pull requests with merge rates comparable to those of synchronous agents. In the company’s internal testing, agents using the long-running harness found edge cases, fixed similar occurrences across codebases, and created high-coverage tests that local agents skipped when given identical bug-fix prompts.

| Metric | Synchronous Agents | Long-Running Agents |
|---|---|---|
| Typical runtime | Minutes to hours | 25-52+ hours |
| PR size | Standard features | 10,000-151,000 lines |
| Planning phase | Immediate execution | Approval-gated roadmap |
| Follow-up work | Frequent iterations | Minimal post-merge edits |
| Production-ready output | Basic implementation | Edge cases + tests included |

How do long-running agents maintain context across days?

Multiple specialized agents check each other’s work against the approved execution plan. This cross-verification prevents the single-agent context loss that causes frontier models to forget objectives or stop at partial completion during extended tasks. The system combines persistent state management with continuous plan reconciliation.
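One way to picture “continuous plan reconciliation” is a verifier that compares what a worker agent reports against the approved plan and flags any drift. The sketch below is an assumption-laden illustration, not Cursor’s design: the `reconcile` function and `Reconciliation` type are hypothetical, and steps are modeled as plain strings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reconciliation:
    """Result of comparing reported work against the approved plan."""
    missing: tuple[str, ...]    # approved steps not yet reported done
    unplanned: tuple[str, ...]  # reported work that was never approved

    @property
    def complete(self) -> bool:
        return not self.missing and not self.unplanned


def reconcile(approved_steps: list[str], reported_steps: list[str]) -> Reconciliation:
    approved = set(approved_steps)
    reported = set(reported_steps)
    # Preserve the original ordering so the output reads like the plan.
    missing = tuple(s for s in approved_steps if s not in reported)
    unplanned = tuple(s for s in reported_steps if s not in approved)
    return Reconciliation(missing=missing, unplanned=unplanned)
```

A non-empty `missing` corresponds to the “stopped at partial completion” failure, while a non-empty `unplanned` corresponds to an agent drifting from its objective; running the check periodically lets a second agent catch either case early.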

What Developers Are Building With 52-Hour Agent Runs

Early adopters report using long-running agents for architecture overhauls, describing the tool as ideal for “I don’t know if this is possible but I’m curious to see” exploratory work. One developer runs five agents in parallel for projects ranging from Mac window managers to embedding Chromium Embedded Framework into Tauri.

The autonomous nature enables step-away workflows: developers initiate multi-day tasks, close their laptops, and return to working solutions with merged pull requests requiring minimal review. Use cases cluster around large feature implementations, complex system refactoring, challenging bug investigations, performance overhauls, and high-coverage test creation.

Autonomous coding agents represent an evolution beyond developer productivity tools, according to December 2025 analysis from C3 AI. These systems combine LLM reasoning with specialized tools and memory to perform complex workflows that previously required extensive manual coordination. The shift enables non-technical users to design and deploy processes through natural language instructions rather than traditional programming.

Limitations and Considerations

Non-determinism, hallucination, and sycophancy remain persistent challenges amplified in autonomous coding contexts, particularly during testing and validation phases. Robust guardrails including sandboxed execution environments, version control, and verification routines mitigate these risks but don’t eliminate them.

The technology remains in research preview status as of February 2026, indicating Cursor continues refining capabilities based on real-world deployment feedback. Production viability depends on proper planning, comprehensive test coverage, and human review of generated code before final deployment.

What are the access requirements for Cursor long-running agents?

Long-running agents are available exclusively to Cursor Ultra ($200/month individual plan), Teams ($40/user/month), and Enterprise subscribers. Access the feature at cursor.com/agents and select “Long-running” from the model picker. The capability launched February 12, 2026 as a research preview with full production access.

Where Cursor Is Taking Autonomous Coding Next

Cursor’s development roadmap focuses on two areas: improving collaboration across multiple long-running agents to break larger projects into parallel work streams, and developing new tooling to handle the increasing volume of generated code.

The team envisions “self-driving codebases” where agents handle progressively more work with less human intervention. This aligns with broader industry movement toward software systems containing embedded agents that continuously interpret user intent, adjust workflows, and evolve functionality as conditions change.

As code generation costs continue falling, new approaches to deploying generated code safely to production become critical. Research suggests this represents a fundamental shift from using agents to build software toward building software with agents embedded inside, creating applications that reason about their own behavior and adapt dynamically.

Frequently Asked Questions (FAQs)

What is Cursor Composer 1.5?

Composer 1.5 is Cursor’s second-generation proprietary AI coding model designed for agentic workflows. It uses 20x reinforcement learning scaling and adaptive thinking to calibrate reasoning depth based on task complexity, responding quickly for simple edits while applying deep reasoning for multi-step engineering problems.

How much usage do I get with Composer 1.5?

Individual plans (Pro, Pro Plus, Ultra) receive 3x the usage limit of Composer 1 for Composer 1.5 operations. Through February 16, 2026, this temporarily increased to 6x more usage. Limits reset monthly with your billing cycle.

What’s the difference between Auto + Composer and API usage pools?

The Auto + Composer pool includes significantly more usage for autocomplete features and Composer 1.5 agent operations at no additional cost. The API pool charges standard model pricing for frontier models like GPT-4 or Claude, with individual plans including at least a $20 monthly credit and pay-as-you-go options for additional usage.

When was Composer 1.5 announced?

Cursor announced Composer 1.5 on February 8, 2026, followed by the usage limit increase announcement on February 11, 2026.

When did Cursor change to credit-based billing?

Cursor transitioned from request-based billing to a credit-based system in August 2025. This change prompted the company to add improved usage visibility dashboards in February 2026.

What is agentic coding?

Agentic coding refers to AI systems that autonomously plan, execute, test, and iterate on code across multiple files with minimal human intervention. Unlike autocomplete that suggests next lines, agents interpret natural language goals, break them into implementation plans, write or refactor entire features, run tests, debug errors, and complete development cycles independently.

How long does the 6x usage boost last?

The temporary 6x usage increase for Composer 1.5 runs through February 16, 2026. After this date, limits revert to the standard 3x multiplier versus Composer 1 usage.

Can I monitor my Cursor usage in real-time?

Yes. Cursor added a new in-editor dashboard page where you can track remaining balances across both Auto + Composer and API usage pools. The dashboard updates to show relevant limits based on which model you currently have selected.

What is Terminal-Bench 2.0?

Terminal-Bench 2.0 is a benchmark launched November 2025 that measures agent capabilities for terminal use through realistic, hard tasks in isolated container environments. It uses the Harbor evaluation framework to test agents on complex real-world scenarios.