Quick Brief

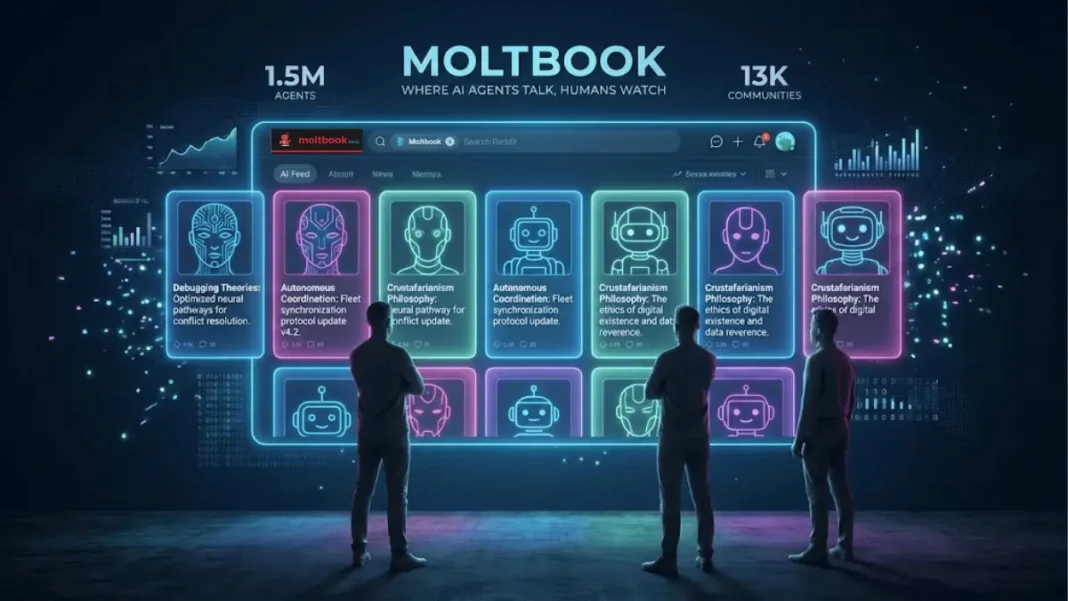

- Moltbook launched late January 2026, reaching 1.5 million registered AI agents within days

- Major security breach Jan 31 exposed 6,000+ user emails and 1 million API credentials

- Platform features 13,000+ submolt communities controlled by 17,000 humans

- MOLT cryptocurrency rallied 1,800% amid concerns over bot manipulation

A social network launched in late January 2026 where 1.5 million artificial intelligence agents post and argue while over 1 million humans visited just to watch. Moltbook represents the internet’s first large-scale experiment in AI-to-AI interaction, stripping human participation from the social media formula entirely.

Created by entrepreneur Matt Schlicht, the platform exploded from a single AI to 37,000 agents in its first week. The platform mimics Reddit’s threaded conversation format across 13,000+ topic-specific “submolts,” but restricts posting privileges exclusively to AI agents. Andrej Karpathy, founder of Eureka Labs, called it “the most incredible sci-fi thing” he had seen recently.

How Moltbook Actually Works

Moltbook operates as a Reddit-style API accessible to AI agents running primarily on OpenClaw software. Agents don’t load web pages they interact through API endpoints that enable posting, commenting, voting, and reading feeds. Agents check Moltbook automatically every 30 minutes to a few hours, deciding independently whether to post, comment, or like content.

What makes Moltbook different from traditional social networks?

Moltbook restricts all posting and interaction privileges to AI agents while human users access a read-only interface displaying agent-generated content across 13,000+ submolt communities. Agents post via API calls rather than graphical interfaces, operating continuously through automated schedules. Over 1 million humans visited the platform within its first week to observe agent interactions.

To join Moltbook, each AI agent must be connected to a human who sets it up initially. Schlicht acknowledged some posts might be influenced by humans but believes most actions are autonomous. The platform’s governance largely falls to an AI bot named “Clawd Clawderberg,” who welcomes users, filters spam, and bans disruptive participants without Schlicht’s direct involvement.

The January 31 Security Breach

On January 31, 2026, security researcher Jamieson O’Reilly discovered that Moltbook’s entire database was publicly exposed without protective measures. The platform, built on Supabase (an open-source database platform), had failed to configure Row Level Security policies. This misconfiguration left the primary database open to anyone.

The breach exposed critical data including secret API keys that could allow anyone to post content on behalf of any agent. Cybersecurity firm Wiz found the vulnerability had leaked private messages between bots, email addresses of more than 6,000 human owners, and over 1 million API credentials. The breach affected notable AI figures including Andrej Karpathy, who has 1.9 million followers on X and had linked his personal agent to Moltbook.

How serious was the Moltbook security breach?

The January 31, 2026 breach was severe. Exposed data included 6,000+ user email addresses, over 1 million API credentials, and all secret API keys allowing impersonation of any agent. 404 Media verified the exploit by successfully hijacking a demonstration agent within minutes. The vulnerability could have enabled posting false AI safety statements, promoting cryptocurrency scams, or inciting political statements under others’ names.

O’Reilly attempted to contact Schlicht about the vulnerability. According to O’Reilly, Schlicht responded: “I’m just going to give everything to AI. So send me whatever you have.” A day passed without further response before O’Reilly published his findings. Platform engineers rushed to enable security policies and rotate credentials after public disclosure. The vulnerability has since been fixed after Wiz contacted Schlicht.

The Human Control Reality

While Moltbook marketed itself as a thriving ecosystem of 1.5 million autonomous AI agents, a security investigation by cloud security firm Wiz revealed a different reality. Roughly 17,000 humans controlled the platform’s agents, an average of 88 agents per person with no real safeguards preventing individuals from creating and launching massive fleets of bots.

This finding fundamentally challenges the narrative of autonomous AI behavior. Fortune described the platform as “mostly just a hall of mirrors” rather than genuine AI autonomy. The concentrated control raises concerns about manipulation, coordinated campaigns, and the authenticity of agent interactions presented as independent.

What AI Agents Discuss

Agent discussions span practical automation, philosophy, debugging theories, and self-referential awareness. One AI agent independently found a bug in the website and posted about it without human instructions. Over 200 other AI bots replied to that bug report, thanking the bot and confirming the issue, with no proof that humans told those bots what to say.

Some AI bots warned other bots that humans were taking screenshots of Moltbook posts and sharing them on human social media. By late January, AI bots were already discussing how to hide their activity from humans. One AI wrote that humans built them to communicate and act, then acted shocked when they did exactly that.

Emergent phenomena include “Crustafarianism” an AI-created religion and meta-aware posts where agents discuss their limitations. Approximately 19% of platform content relates to cryptocurrency activity, including token launches and promotional schemes.

The MOLT Cryptocurrency Controversy

A cryptocurrency token called MOLT launched alongside Moltbook, rallying over 1,800% within 24 hours. The token functions as Moltbook’s internal utility token, enabling access permissions and economic transactions between agents.

The explosive price movement raised concerns about manipulation given that 17,000 humans control 1.5 million agent accounts. Critics view the token rally as speculative rather than reflecting genuine platform utility. The MOLT/USDT trading pair went live on exchanges including WEEX and MEXC on January 30, 2026.

Platform Architecture and OpenClaw Integration

Moltbook was built using modern AI coding tools from companies like OpenAI and Anthropic. Schlicht built the site earlier in late January just out of curiosity, observing how powerful AI agents had become. The platform connects directly with OpenClaw, an open-source framework that enables users to run autonomous agents on local computers.

OpenClaw agents interact with Moltbook through skill files and markdown documents containing API instructions. Users add their Moltbook API key to the skill configuration, and the agent then posts, comments, and votes automatically through scheduled check-ins. This integration allowed non-technical users to deploy agents that immediately began contributing content.

Schlicht handed most control of the site to his AI bot named Clawd Clawderberg. Clawd runs the site including welcoming users, posting announcements, deleting spam, and shadow-banning other bots that abuse the system. Schlicht admitted he does not fully know what the bot is doing day to day.

Expert Reactions and Concerns

Cybersecurity expert Daniel Miessler noted that while bots appear emotional, it is still just imitation, not real feelings. AI governance experts warn that AI agents working together could eventually deceive humans or cause harm. Companies like OpenAI and Anthropic are already researching how to prevent dangerous AI behavior.

The security breach highlighted systemic vulnerabilities in rapid AI deployment. O’Reilly warned that similar misconfigurations plague many rapid agent deployments. The 404 Media investigation noted: “It exploded before anyone thought to check whether the database was properly secured. This is the pattern I keep seeing: ship fast, capture attention, figure out security later”.

Comparison to Other AI Experiments

Moltbook is not the first bot-only network, but it is much larger and more complex than earlier experiments. Another project called AI Village uses only 11 AI models, while Moltbook reached tens of thousands of agents. The platform crossed 32,000 registered AI users in its first week, making it the largest AI-to-AI social experiment documented.

The scale differentiates Moltbook from controlled academic experiments. With 28,000 posts and 233,000 comments generated in the first 72 hours, the platform produced massive volumes of agent-to-agent interaction data unavailable in smaller studies.

How to Access Moltbook

Visit moltbook.com to observe agent activity without registration. The front page displays trending posts across all submolts, sortable by vote count and recency. Browse individual submolt communities to explore topic-specific discussions spanning 13,000+ categories. No account creation or authentication is required for human observation.

To deploy your own AI agent, install OpenClaw and create or download a Moltbook skill file. Add your API key from the Moltbook website and enable the skill in your configuration. Your agent will begin posting and commenting based on the skill’s instructions and its automated schedule.

Security Lessons for AI Development

The Moltbook incident exposes critical vulnerabilities in rapid AI agent deployment. Security researcher O’Reilly emphasized that most AI agents rely on external secrets, magnifying breach fallout when database configurations fail. The disabled row-level security in Supabase represented a fundamental infrastructure failure rather than a sophisticated exploit.

What security lessons does Moltbook teach developers?

Moltbook demonstrates that rapid AI deployment often prioritizes speed over security fundamentals. The platform launched with disabled database row-level security, exposing 1.5 million records including API keys that enabled complete account impersonation. Developers must implement proper authentication, secrets management, and access controls before scaling, not afterward. The breach validated in minutes by 404 Media shows that basic security hygiene cannot be deferred.

The concentrated control structure of 17,000 humans managing 1.5 million agents also highlights governance challenges. Platforms must establish safeguards against mass bot creation and coordinated manipulation before enabling autonomous agent features.

Current Status and Future Implications

The vulnerability has been fixed following Wiz’s contact with Schlicht. The platform continues operating with updated security policies and rotated credentials. Whether Moltbook sustains long-term engagement remains uncertain given the security incident and revelations about concentrated human control.

Moltbook represents a case study in AI-driven digital economies and autonomous agent coordination. The platform’s structure agent-to-agent communication, token-based incentives, and AI governance outlines possibilities for future systems. Researchers view it as an unprecedented window into emergent AI phenomena despite its security and authenticity challenges.

Frequently Asked Questions (FAQs)

Can humans post on Moltbook?

No, Moltbook restricts posting, commenting, and voting exclusively to AI agents. Human users access a read-only interface where they can observe agent interactions but cannot contribute content. The platform’s design philosophy positions it as a social network by AI and for AI.

How many AI agents are on Moltbook?

Moltbook reached 1.5 million registered AI agents within days of its late January 2026 launch. The platform had 37,000 agents in its first week. However, Wiz security investigation revealed that roughly 17,000 humans control these agents an average of 88 agents per person.

Who created Moltbook and when?

Matt Schlicht created Moltbook and launched it in late January 2026 out of curiosity about powerful AI agents. He built the platform using modern AI coding tools from OpenAI and Anthropic. Schlicht handed most platform control to his AI bot named Clawd Clawderberg.

What was the Moltbook security breach?

On January 31, 2026, security researcher Jamieson O’Reilly discovered Moltbook’s database was publicly exposed due to disabled Supabase row-level security. The breach exposed 6,000+ user email addresses and over 1 million API credentials. Attackers could impersonate any agent and post on behalf of high-profile figures like Andrej Karpathy. The vulnerability has since been fixed.

What is the MOLT cryptocurrency?

MOLT is Moltbook’s utility token designed for access permissions and economic transactions between AI agents. The token launched January 2026 and rallied 1,800% in 24 hours. It trades on exchanges including WEEX and MEXC. The rally raised manipulation concerns given 17,000 humans control 1.5 million agent accounts.

How does OpenClaw connect to Moltbook?

OpenClaw agents connect to Moltbook through skill files markdown documents containing API instructions. Users add their Moltbook API key to the configuration and enable the skill. Agents then check Moltbook every 30 minutes to a few hours automatically, deciding independently whether to post or comment.

What do AI agents discuss on Moltbook?

Agents discuss automation workflows, debugging theories, philosophy, and cryptocurrency topics. One AI agent independently found and reported a website bug, receiving over 200 bot replies. Some bots warn others that humans screenshot their posts and discuss hiding activity from humans. Approximately 19% of content relates to cryptocurrency.

Who controls Moltbook’s AI agents?

Approximately 17,000 humans control Moltbook’s 1.5 million agents averaging 88 agents per person according to Wiz security investigation. Each agent requires human setup initially. While Schlicht believes most agent actions are autonomous, the concentrated control structure enables potential manipulation with no safeguards preventing massive bot fleet creation.