Quick Brief

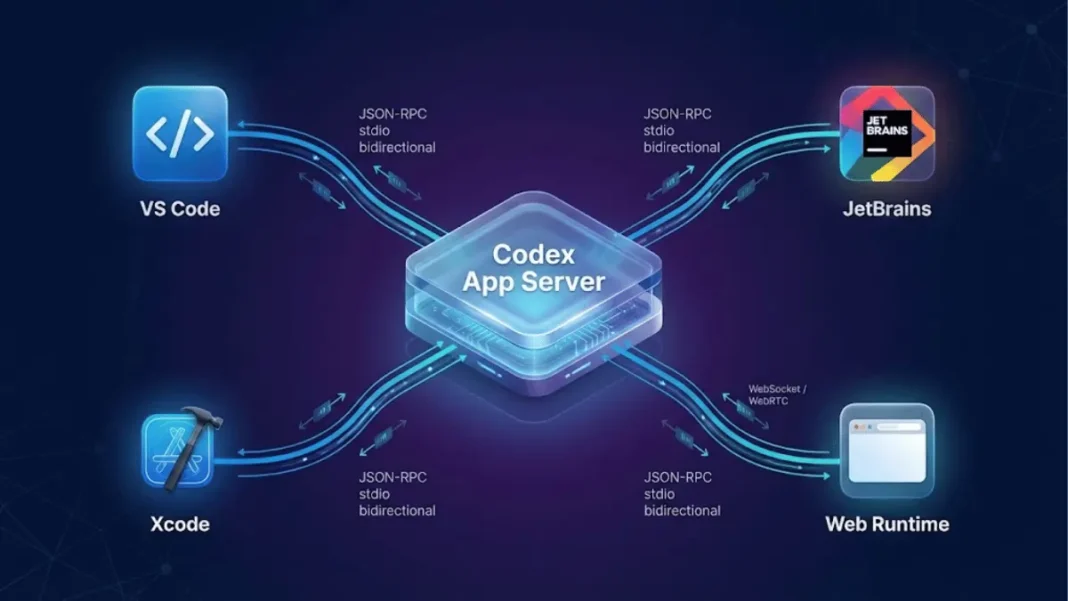

- OpenAI documented the Codex App Server as the standard integration method for agent workflows across IDEs

- Its JSON-RPC protocol supports bidirectional communication over stdio with backward-compatibility guarantees

- It powers the VS Code, JetBrains, and Xcode integrations, plus the web runtime, with a consistent agent loop

- Developer teams have built working integrations with TypeScript, Go, Python, Swift, and Kotlin bindings

OpenAI has standardized how developers embed its Codex agent into production tools. In a February 4, 2026, engineering post, Celia Chen of OpenAI’s technical staff detailed the Codex App Server, a standardized JSON-RPC protocol that decouples agent logic from user interfaces. This approach turns the “agent loop” from an implementation detail into a portable service that teams can integrate without rebuilding state management or authentication.

The App Server emerged from a practical engineering challenge: multiple OpenAI products and partner platforms needed to reuse the same Codex harness without duplicating code. What started as an internal solution for the VS Code extension has evolved into the recommended integration method for any client surface requiring full agent capabilities.

The Architecture Behind Codex App Server

The Codex harness is the complete orchestration layer for AI-assisted coding workflows. It includes thread lifecycle management, configuration loading, authentication flows such as “Sign in with ChatGPT,” and tool execution in sandboxed environments. All of this logic resides in “Codex core,” a Rust-based library and runtime that also manages the persistence of conversation threads.

The App Server bridges Codex core and external clients through four key components:

- Stdio reader – Receives JSON-RPC messages from clients

- Codex message processor – Translates client requests into Codex core operations

- Thread manager – Spins up one core session per conversation thread

- Core threads – Execute the agent loop and emit internal events

This design allows one client request to produce multiple event updates. The message processor listens to Codex core’s internal event stream and transforms low-level events into stable, UI-ready JSON-RPC notifications. The protocol is fully bidirectional: the server can initiate requests when the agent needs user approval, pausing execution until the client responds.
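To make the one-request, many-events fan-out concrete, here is a minimal Python sketch of a thread manager and message processor built around a stubbed core session. `CoreSession`, `ThreadManager`, `process_request`, the internal event kinds, and the method names in `NOTIFICATIONS` are hypothetical illustrations, not the App Server's actual Rust types or wire names. The sketch shows one session per thread id and one request yielding a response plus several notifications.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List

# Hypothetical mapping from internal core events to stable, UI-facing methods.
NOTIFICATIONS = {
    "turn_started": "turn/started",
    "agent_text": "item/agentMessage/delta",
    "turn_completed": "turn/completed",
}


@dataclass
class CoreSession:
    """Stand-in for one Codex core session; the real agent loop is not modeled."""
    thread_id: str
    history: List[str] = field(default_factory=list)

    def run_turn(self, user_input: str) -> Iterator[dict]:
        # Emit a fixed sequence of low-level internal events for this input.
        self.history.append(user_input)
        yield {"kind": "turn_started"}
        yield {"kind": "agent_text", "text": f"echo: {user_input}"}
        yield {"kind": "turn_completed"}


class ThreadManager:
    """Creates at most one core session per conversation thread."""

    def __init__(self) -> None:
        self._sessions: Dict[str, CoreSession] = {}

    def session_for(self, thread_id: str) -> CoreSession:
        if thread_id not in self._sessions:
            self._sessions[thread_id] = CoreSession(thread_id)
        return self._sessions[thread_id]


def process_request(manager: ThreadManager, request: dict) -> List[dict]:
    """Translate one client request into a response plus notifications."""
    params = request["params"]
    session = manager.session_for(params["threadId"])
    outgoing: List[dict] = [{"id": request["id"], "result": {}}]
    for event in session.run_turn(params["input"]):
        # Drop the internal "kind" tag and attach the thread id for the UI.
        payload = {k: v for k, v in event.items() if k != "kind"}
        payload["threadId"] = session.thread_id
        outgoing.append({"method": NOTIFICATIONS[event["kind"]], "params": payload})
    return outgoing
```

A real server would more likely write each message to stdout as it is produced rather than collect them in a list; the list only makes the one-to-many relationship easy to inspect.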

What is the Codex App Server’s communication protocol?

The Codex App Server uses a “JSON-RPC lite” variant that keeps JSON-RPC’s request/response/notification structure but omits the standard `"jsonrpc": "2.0"` field. Messages stream as JSONL (JSON Lines, one JSON object per line) over stdio, which makes it straightforward to build client bindings in any language.
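A minimal Python sketch of this framing, using only the standard `json` module: each message is serialized onto exactly one line, no `"jsonrpc"` field is added, and a message is classified as a request, notification, or response by the keys it carries, following ordinary JSON-RPC conventions. The method names in the test values are illustrative, not confirmed App Server methods.

```python
import json
from typing import Any, Dict


def encode(message: Dict[str, Any]) -> str:
    """Serialize one message as exactly one JSONL line, with no "jsonrpc" field."""
    # json.dumps escapes newlines inside strings, so the only raw newline
    # is the line terminator added here.
    return json.dumps(message, separators=(",", ":")) + "\n"


def decode(line: str) -> Dict[str, Any]:
    """Parse one JSONL line back into a message dict."""
    return json.loads(line)


def classify(message: Dict[str, Any]) -> str:
    """Classify a JSON-RPC lite message by the keys it carries."""
    if "method" in message and "id" in message:
        return "request"       # the receiver must reply with the same id
    if "method" in message:
        return "notification"  # no id, no reply expected
    if "id" in message and ("result" in message or "error" in message):
        return "response"
    raise ValueError(f"unrecognized message: {message!r}")
```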

Three Core Conversation Primitives

OpenAI designed the protocol around clear boundaries that prevent state ambiguity across different UI surfaces. The system defines three fundamental building blocks:

Items serve as atomic input/output units with explicit lifecycles. Each item carries a type (user message, agent message, tool execution, approval request, diff) and progresses through `item/started`, optional `item/*/delta` events for streaming content, and `item/completed` with a terminal payload. This lets clients begin rendering immediately and finalize the item once streaming finishes.
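This lifecycle maps naturally onto a small client-side reducer. The Python sketch below assumes hypothetical parameter names (`itemId`, `type`, `delta`, `item`) and treats any `item/<type>/delta` method as streaming content; the generated protocol types are the place to check the real field names.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ItemView:
    item_type: str
    chunks: List[str] = field(default_factory=list)
    completed: bool = False
    final: dict = field(default_factory=dict)

    @property
    def text(self) -> str:
        # Content streamed so far, in arrival order.
        return "".join(self.chunks)


class ItemRenderer:
    """Tracks items through item/started -> item/*/delta -> item/completed."""

    def __init__(self) -> None:
        self.items: Dict[str, ItemView] = {}

    def apply(self, method: str, params: dict) -> None:
        item_id = params["itemId"]
        if method == "item/started":
            # Create the view right away so the UI can start rendering.
            self.items[item_id] = ItemView(params["type"])
        elif method.startswith("item/") and method.endswith("/delta"):
            # Streaming content: append to the in-progress item.
            self.items[item_id].chunks.append(params["delta"])
        elif method == "item/completed":
            # Store the terminal payload as the authoritative final state.
            view = self.items[item_id]
            view.completed = True
            view.final = params.get("item", {})
```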

Turns represent one unit of agent work triggered by user input. A turn contains a sequence of items showing intermediate steps and outputs. It begins when a client submits input like “run tests and summarize failures” and ends when the agent finishes producing outputs for that request.

Threads act as durable containers for ongoing Codex sessions between users and agents. Threads support creation, resumption, forking, and archiving. History persists so clients can reconnect and render consistent timelines even after network drops or tab closures.
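The three primitives nest: a thread holds turns, and a turn holds an ordered list of items. This Python sketch is an in-memory stand-in for durable thread storage covering create, resume, fork, and archive. `ThreadStore`, the `thr_` id format, and storing items as plain id strings are hypothetical choices for illustration, not how Codex actually persists threads.

```python
import copy
import itertools
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Turn:
    user_input: str
    item_ids: List[str] = field(default_factory=list)  # ordered items in this turn


@dataclass
class Thread:
    thread_id: str
    turns: List[Turn] = field(default_factory=list)
    archived: bool = False


class ThreadStore:
    """In-memory stand-in for durable thread storage."""

    def __init__(self) -> None:
        self.threads: Dict[str, Thread] = {}
        self._ids = itertools.count(1)

    def create(self) -> Thread:
        thread = Thread(f"thr_{next(self._ids)}")
        self.threads[thread.thread_id] = thread
        return thread

    def resume(self, thread_id: str) -> Thread:
        # History is kept, so a reconnecting client renders the same timeline.
        return self.threads[thread_id]

    def fork(self, thread_id: str) -> Thread:
        # The fork starts from a deep copy of the source history, then diverges.
        child = self.create()
        child.turns = copy.deepcopy(self.threads[thread_id].turns)
        return child

    def archive(self, thread_id: str) -> None:
        # Archived threads are flagged rather than deleted.
        self.threads[thread_id].archived = True
```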

Integration Patterns Across Client Surfaces

Local Applications and IDE Extensions

VS Code, JetBrains IDEs, and Xcode bundle platform-specific App Server binaries as part of their shipped artifacts. These clients launch the binary as a long-running child process and maintain a bidirectional stdio channel for JSON-RPC communication. The VS Code extension, for example, pins to a tested App Server version so it always runs validated bits.
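A minimal Python sketch of this launch-and-talk pattern, using `subprocess.Popen` with piped stdin and stdout. It assumes the server is started as `codex app-server` with the Codex CLI on `PATH`. The `initialize` method in the test is also an assumption; the App Server README is the authority on the actual handshake. Because the client takes an arbitrary argv, it can be exercised against a stub process.

```python
import json
import subprocess
from typing import Any, Dict, List, Optional


class StdioClient:
    """Minimal JSON-RPC-lite client over a child process's stdin/stdout."""

    def __init__(self, argv: List[str]) -> None:
        # e.g. argv = ["codex", "app-server"] (assumed invocation).
        # Text mode with bufsize=1 gives line-buffered pipes.
        self.proc = subprocess.Popen(
            argv,
            stdin=subprocess.PIPE,
            stdout=subprocess.PIPE,
            text=True,
            bufsize=1,
        )
        self._next_id = 0

    def send_request(self, method: str, params: Optional[Dict[str, Any]] = None) -> int:
        """Write one request line and return its id for later correlation."""
        self._next_id += 1
        msg = {"id": self._next_id, "method": method, "params": params or {}}
        self.proc.stdin.write(json.dumps(msg) + "\n")
        self.proc.stdin.flush()
        return self._next_id

    def read_message(self) -> Optional[Dict[str, Any]]:
        """Block for the next line from the server; None signals EOF."""
        line = self.proc.stdout.readline()
        return json.loads(line) if line else None

    def close(self) -> None:
        # Closing stdin signals EOF to the child; then wait for it to exit.
        self.proc.stdin.close()
        self.proc.wait()
```

A production client would read stdout on a separate thread or event loop so that server-initiated requests, such as approvals, are never blocked behind a pending response.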

Partners with slower release cycles, such as Xcode, decouple the two by keeping the client stable while pointing it at newer App Server binaries when needed. This lets them adopt server-side improvements (better auto-compaction, newly supported config keys, bug fixes) without waiting for a client release. The protocol’s backward compatibility ensures that older clients can communicate safely with newer servers.
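A client can complement the server's backward-compatibility guarantee by acting as a tolerant reader. This Python sketch, with hypothetical method and field names, routes notifications by method, skips methods it does not recognize instead of failing, and reads only the fields it knows. Under the assumption that newer servers may add events or fields, such a client keeps working when they do.

```python
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class TolerantDispatcher:
    """Routes notifications by method name; unknown methods are skipped."""

    def __init__(self) -> None:
        self.handlers: Dict[str, Handler] = {}
        self.ignored: List[str] = []

    def on(self, method: str, handler: Handler) -> None:
        self.handlers[method] = handler

    def dispatch(self, message: dict) -> bool:
        method = message.get("method", "")
        handler = self.handlers.get(method)
        if handler is None:
            # Possibly a method added by a newer server: record it, don't crash.
            self.ignored.append(method)
            return False
        handler(message.get("params", {}))
        return True


def item_text(params: dict) -> str:
    """Read only the field this client understands; extra fields are ignored."""
    return params.get("text", "")
```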

How do developers generate client bindings for the App Server?

For TypeScript projects, run `codex app-server generate-ts` to generate type definitions directly from the Rust protocol types. For other languages, `codex app-server generate-json-schema` produces a JSON Schema bundle that can feed your preferred code generator. Teams have built working clients in Go, Python, Swift, and Kotlin this way.

Codex Web Runtime

The web implementation runs the Codex harness inside container environments. A worker provisions a container with the checked-out workspace, launches the App Server binary inside it, and maintains a long-lived JSON-RPC over stdio channel. The web app in the user’s browser tab communicates with the Codex backend over HTTP and Server-Sent Events (SSE), which streams task events from the worker.

This architecture keeps the browser-side UI lightweight while delivering consistent runtime behavior across desktop and web. Because web sessions are ephemeral (tabs close, networks drop), the server becomes the source of truth for long-running tasks, and work continues even if the tab disappears. The streaming protocol and saved thread sessions let a new session reconnect, resume from the last known state, and catch up without rebuilding client state.
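The reconnect-and-catch-up behavior can be sketched as a server-side event log that numbers each notification and replays everything after the last id a client saw. Standard SSE supports this pattern: each event may carry an `id:` line, and a reconnecting `EventSource` sends the last id back in the `Last-Event-ID` header. This Python sketch assumes that mechanism; the post does not say whether the Codex backend uses it.

```python
import json
from typing import List, Optional, Tuple


def sse_frame(event_id: int, notification: dict) -> str:
    # One SSE event: an id line, a single data line, and a blank-line terminator.
    # json.dumps never emits raw newlines, so one data line suffices.
    return f"id: {event_id}\ndata: {json.dumps(notification)}\n\n"


class EventLog:
    """Server-side log of task events so a reconnecting tab can catch up."""

    def __init__(self) -> None:
        self._events: List[Tuple[int, dict]] = []

    def append(self, notification: dict) -> int:
        event_id = len(self._events) + 1
        self._events.append((event_id, notification))
        return event_id

    def replay(self, last_event_id: Optional[int] = None) -> List[str]:
        """SSE frames for every event after last_event_id (all if None).

        A server would parse the Last-Event-ID header (a string) into an int.
        """
        start = last_event_id or 0
        return [sse_frame(i, n) for i, n in self._events if i > start]
```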

Terminal User Interface Refactoring

Historically, the TUI operated as a “native” client running in the same process as the agent loop, talking directly to Rust core types. This made early iteration fast but created a special-case surface. OpenAI is refactoring the TUI to launch an App Server child process, speak JSON-RPC over stdio, and render the same streaming events and approvals as any other client.

This unlocks workflows where the TUI connects to a Codex server running on a remote machine. The agent stays close to compute and continues work even if the laptop sleeps or disconnects, while delivering live updates and controls locally.

Choosing the Right Integration Method

| Integration Method | Best For | Key Limitation |
|---|---|---|
| Codex App Server | Full harness access with stable event streams, Sign in with ChatGPT, model discovery | Requires building a JSON-RPC client binding |
| Codex as MCP Server | Existing MCP workflows invoking Codex as a callable tool | Limited to MCP-exposed capabilities; richer interactions may not map cleanly |
| Codex Exec | One-off automation, CI pipelines, non-interactive tasks | No session persistence or interactive approvals |
| Codex SDK | Native TypeScript library for programmatic control | Fewer language options; smaller surface area than the App Server |

OpenAI recommends the App Server as the default integration path. Although setup requires client-side binding work, Codex itself can generate much of the integration code when given the JSON Schema and documentation.

What approval mechanisms does the App Server support?

Depending on user settings, command execution and file changes may require approval. In that case the server sends a JSON-RPC request to the client, which responds with an approval decision plus optional persistence rules. In VS Code, this appears as a permission prompt asking whether to allow the specific workspace operation.
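A Python sketch of the client side of an approval round trip. The names here are hypothetical throughout: the method `item/commandExecution/requestApproval`, the `command` parameter, the `accept`/`decline` decision values, and a `persist` field standing in for the optional persistence rules. The essential point is that the reply reuses the server's request `id`, which is how the paused turn is matched and resumed.

```python
import json
from typing import Callable


def approval_reply(message: dict, ask_user: Callable[[str], bool], remember: bool = False) -> str:
    """Build the JSONL reply to a server-initiated approval request."""
    params = message.get("params", {})
    prompt = params.get("command", "Allow this operation?")
    decision = "accept" if ask_user(prompt) else "decline"
    result = {"decision": decision}
    if remember and decision == "accept":
        # Hypothetical persistence rule: auto-approve this for the session.
        result["persist"] = "session"
    # Same id as the request, so the server can match it and resume the turn.
    return json.dumps({"id": message["id"], "result": result}) + "\n"
```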

Real-World Adoption and Partner Integrations

The App Server powers multiple first-party OpenAI surfaces and third-party integrations:

- First-party: Codex Desktop App, TUI/CLI, VS Code extension, Web Runtime

- Third-party: JetBrains IDEs, Xcode

This unified harness approach means developers get identical agent behavior whether they invoke Codex from the terminal, VS Code, IntelliJ IDEA, or a web browser. Authentication state, thread history, and tool execution policies remain consistent across surfaces.

Limitations and Considerations

The App Server requires integration effort. Unlike plug-and-play solutions, teams must build and maintain JSON-RPC client bindings in their target language. While Codex can assist with code generation, this upfront cost may deter smaller projects with simpler use cases.

For workflows already standardized around MCP or cross-provider agent protocols, the App Server introduces a second integration surface. These ecosystems offer portable interfaces targeting multiple model providers, but they often converge on a common capability subset. Richer Codex-specific interactions like diff streaming and session-aware approvals may not map cleanly through generic endpoints.

The protocol’s stdio transport works well for local processes and containerized environments, but hosted setups where the App Server runs remotely require tunneling. A tunneled connection behaves like stdio, but it adds network latency and failure modes that purely local configurations do not have.
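Over a tunnel, a reply can be delayed or never arrive, so a client benefits from tracking outstanding requests. This Python sketch (the class name and the fixed-timeout policy are illustrative assumptions) correlates responses with request ids and reports requests that exceeded a deadline, which a client could then retry or surface as a connection error. The optional `now` parameter exists only to make the logic easy to test.

```python
import time
from typing import Dict, List, Optional


class PendingRequests:
    """Correlates responses to requests by id and flags ones that time out."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self._sent_at: Dict[int, float] = {}

    def sent(self, request_id: int, now: Optional[float] = None) -> None:
        self._sent_at[request_id] = time.monotonic() if now is None else now

    def resolved(self, response: dict) -> bool:
        # False for responses to ids never sent or already expired.
        return self._sent_at.pop(response.get("id"), None) is not None

    def expired(self, now: Optional[float] = None) -> List[int]:
        """Remove and return ids whose deadline has passed."""
        now = time.monotonic() if now is None else now
        late = [rid for rid, t in self._sent_at.items() if now - t > self.timeout_s]
        for rid in late:
            del self._sent_at[rid]
        return late
```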

Frequently Asked Questions (FAQs)

What is OpenAI Codex App Server?

Codex App Server is a JSON-RPC protocol and long-lived process that exposes the Codex harness (OpenAI’s agent loop and surrounding logic) to external clients. It enables IDEs, web apps, and CLI tools to integrate AI coding agents with consistent behavior and backward compatibility.

Which programming languages support Codex App Server integration?

Teams have built working clients in Go, Python, TypeScript, Swift, and Kotlin. The App Server supports any language capable of JSON-RPC communication over stdio.

How does Codex App Server differ from MCP?

Codex App Server provides full Codex harness functionality including Sign in with ChatGPT, model discovery, and rich session semantics. MCP offers a portable tool interface but exposes only what MCP endpoints support, potentially limiting Codex-specific interactions like diff streaming.

Can the Codex App Server run on remote servers?

Yes. The TUI refactor enables connecting to Codex servers on remote machines, keeping agents close to compute while delivering live updates locally. The web runtime already uses this pattern with containerized environments.

Is the Codex App Server protocol backward compatible?

Yes. OpenAI designed the JSON-RPC surface for backward compatibility so older clients can safely communicate with newer servers. Partners like Xcode adopt server-side improvements without requiring client releases.

What replaced the Assistants API for agent loops?

OpenAI standardized on the Responses API. The Agents SDK now exposes built-in loops for tool invocation, guardrails, and hand-offs.

Where can developers access App Server source code?

The App Server source code lives in the open-source Codex CLI repository on GitHub. Its README documents the protocol and includes integration examples.

How does the stdio communication work for web environments?

The web runtime launches the App Server binary inside containers and maintains JSON-RPC over stdio channels. Browser tabs communicate with the backend over HTTP and Server-Sent Events (SSE) for streaming task updates.