Quick Brief

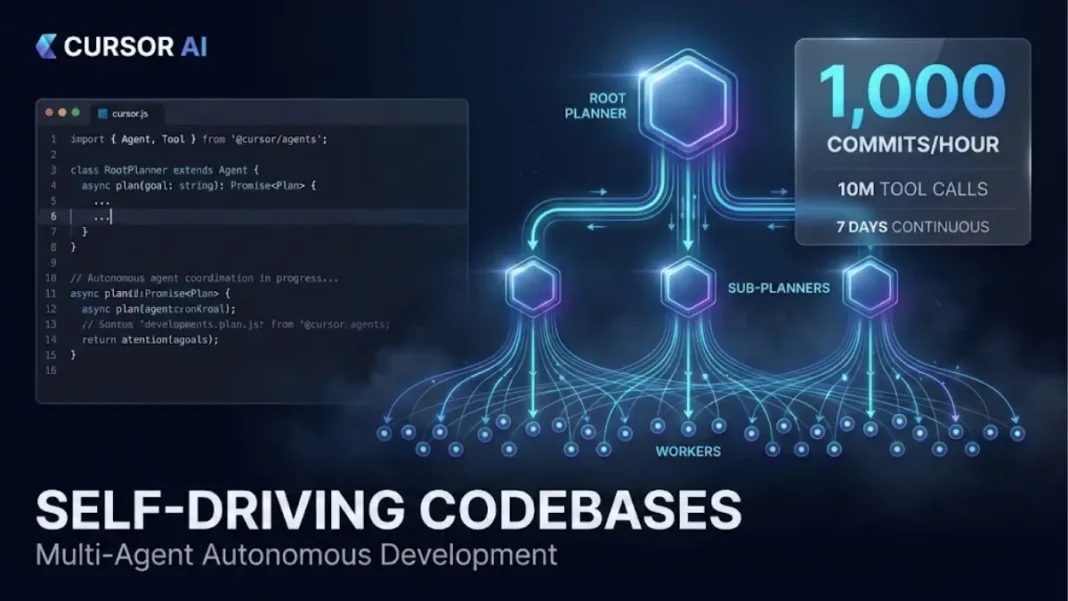

- Cursor AI’s multi-agent system peaked at roughly 1,000 commits per hour and made 10 million tool calls over a one-week run

- Autonomous agents built a functional web browser with minimal human intervention using recursive planner-worker architecture

- System peaked at several hundred simultaneous agents coordinating through hierarchical task delegation without global synchronization

- Research reveals GPT-5.1 and GPT-5.2 models excel at precise instruction-following for long-running autonomous coding tasks

Cursor AI’s self-driving codebase research redefines what autonomous coding systems can achieve. The company’s multi-agent harness orchestrated hundreds of concurrent AI agents to build a functional web browser, generating the vast majority of commits without human intervention over seven continuous days. The result shows how specialized AI agents can collaborate at scale on complex software projects that would overwhelm single-agent systems.

The Breakthrough That Changes Software Development

Cursor’s research project achieved unprecedented scale in autonomous software development. The system peaked at approximately 1,000 commits per hour and made 10 million tool calls during a one-week continuous run. Many agents worked simultaneously, sometimes reaching several hundred concurrent workers, all coordinating to build a web browser from scratch.

The browser project intentionally targeted complexity to reveal limitations in frontier AI models. Unlike simple coding tasks, browser development requires multiple interconnected subsystems working together: rendering engines, HTML parsers, CSS processors, and networking components. This complexity forced the research team to evolve beyond single-agent architectures.

Traditional coding agents struggle with overwhelming scope and lose track of objectives over extended periods. Cursor’s multi-agent approach solved this through hierarchical delegation, where specialized planners own specific portions of the codebase while workers execute narrowly defined tasks.

Architecture Evolution: From Chaos to Coordination

Early Failures with Self-Coordination

Cursor’s first multi-agent experiment used the simplest possible design: agents with equal roles sharing a state file to coordinate work. This failed immediately. Agents held locks too long, forgot to release them, or attempted illegal lock operations. Twenty agents slowed to the throughput of just 1-3 agents, with most time wasted waiting on locks.
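The collapse from twenty agents to the throughput of 1-3 is what a simple serialization bound would predict. The sketch below is a back-of-the-envelope model, not something taken from Cursor's report: it assumes every task must hold one global lock for a fixed fraction of its duration, and the function name and the `lock_fraction` parameter are hypothetical.

```python
def effective_parallelism(num_agents: int, lock_fraction: float) -> float:
    """Upper bound on useful concurrency under a single shared lock.

    Assumption (illustrative only): each task holds the one global lock
    for `lock_fraction` of its running time. The lock can be held by only
    one agent at a time, so at most 1 / lock_fraction tasks can make
    progress per task-duration, no matter how many agents exist.
    """
    if not 0.0 < lock_fraction <= 1.0:
        raise ValueError("lock_fraction must be in (0, 1]")
    return min(float(num_agents), 1.0 / lock_fraction)


# Twenty agents that each hold the lock for half of every task
# cannot do better than two agents' worth of work.
bound = effective_parallelism(num_agents=20, lock_fraction=0.5)
```

Under this model, adding agents past the bound only adds waiting, which matches the observation that most time was spent on locks.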

The lack of structure caused another critical problem. No single agent took responsibility for complex tasks. They avoided contention by choosing smaller, safer changes rather than tackling the project’s core challenges.

Structured Roles and Accountability

The second iteration introduced explicit roles: a planner created the approach and deliverables, an executor owned overall completion, workers handled specific tasks, and an independent judge determined whether iterations should continue. This resolved many coordination issues by giving clear ownership.

However, rigidity became the bottleneck. Doing all planning upfront made dynamic readjustment difficult as new issues emerged. The system was constrained by its slowest worker, and some agents pursued counterproductive directions without self-correcting until the next iteration.

What is Cursor’s multi-agent architecture for autonomous coding?

Cursor’s final architecture uses recursive planners that spawn sub-planners and workers. Root planners own entire project scopes and delegate specific tasks. Workers execute independently on isolated repository copies, then submit detailed handoffs back to planners. This hierarchical structure enables hundreds of agents to collaborate without global synchronization.

The Final Design: Recursive Planning

The breakthrough architecture that enabled 1,000 commits per hour incorporates three specialized agent types:

- Root Planners – Own the entire scope of user instructions, understand current state, and deliver targeted tasks that progress toward goals. They perform no coding themselves and remain unaware of which agents pick up their tasks.

- Sub-Planners – When root planners identify subdivisions, they spawn sub-planners that fully own narrow slices. This recursive delegation prevents any single planner from becoming overwhelmed.

- Workers – Pick up tasks and drive them to completion in complete isolation. They work on their own repository copies without communicating with other agents. Upon completion, they write comprehensive handoffs containing what was done, concerns, deviations, findings, and feedback.

This design mirrors how effective software teams operate today. Sub-planners rapidly fan out workers while ensuring complete ownership throughout the system. The handoff mechanism keeps the system in continuous motion: planners receive updates, pull the latest repository state, and make subsequent decisions even after declaring completion.
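The three roles described above can be sketched as a small data structure. This is a minimal illustration under stated assumptions, not Cursor's harness: the class names, fields, and the `run_worker` stand-in are all hypothetical, and a real worker would edit its own repository copy rather than return a canned string.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Iterator


@dataclass
class Handoff:
    """What a worker reports back when it finishes (fields are illustrative)."""
    task: str
    done: str
    concerns: list[str] = field(default_factory=list)


@dataclass
class Planner:
    """Owns one scope, issues tasks, and delegates narrower slices."""
    scope: str
    tasks: list[str] = field(default_factory=list)
    sub_planners: list[Planner] = field(default_factory=list)
    handoffs: list[Handoff] = field(default_factory=list)

    def spawn(self, scope: str) -> Planner:
        """Create a sub-planner that fully owns a narrower slice."""
        child = Planner(scope)
        self.sub_planners.append(child)
        return child

    def walk(self) -> Iterator[tuple[Planner, str]]:
        """Yield (owning planner, task) for every task in this subtree."""
        for task in self.tasks:
            yield self, task
        for child in self.sub_planners:
            yield from child.walk()


def run_worker(task: str) -> Handoff:
    # Hypothetical stand-in: a real worker would work in isolation on its
    # own repository copy and then describe what it did.
    return Handoff(task=task, done=f"completed: {task}")


root = Planner("browser")
root.tasks.append("set up build")
css = root.spawn("css")
css.tasks.append("parse selectors")

# Each handoff goes back to the planner that owns the task, not to other workers.
for owner, task in list(root.walk()):
    owner.handoffs.append(run_worker(task))
```

Workers never reference each other here; the only communication path is the handoff to the owning planner, which is the property the article credits for avoiding global synchronization.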

Technical Breakthroughs and Trade-offs

Accepting Controlled Error Rates

Cursor discovered that requiring 100% correctness before every commit caused major serialization and throughput slowdowns. Even minor errors like API changes or typos would halt the entire system. Workers would venture outside their scope trying to fix irrelevant issues, with multiple agents trampling each other on the same problems.

The solution was counterintuitive: allow some slack in correctness. Agents can trust that fellow agents will fix issues soon, thanks to effective ownership and delegation across the codebase. The error rate stays small and roughly constant: the codebase is rarely completely clean, but the number of open errors holds steady and manageable rather than exploding.

This suggests the ideal efficient system accepts some error rate, with a final “green” branch where an agent regularly takes snapshots and performs quick fixup passes before release.
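A toy model makes the "small and constant" claim concrete. The sketch below is an assumption for illustration only, not a measurement from the research: it supposes agents introduce a fixed number of errors per step and fix a fixed fraction of the errors currently open. Both parameters and the function name are hypothetical.

```python
def simulate_open_errors(
    introduced_per_step: float,
    fix_fraction: float,
    steps: int,
    start: float = 0.0,
) -> list[float]:
    """Track open errors when a fixed fraction is repaired every step.

    Assumed update rule (illustrative): e_next = e + introduced - fix_fraction * e.
    For 0 < fix_fraction <= 1 this settles at introduced / fix_fraction
    instead of growing without bound.
    """
    errors = start
    history = []
    for _ in range(steps):
        errors = errors + introduced_per_step - fix_fraction * errors
        history.append(errors)
    return history


# With 5 new errors per step and 25% of open errors fixed per step,
# the count levels off near 5 / 0.25 = 20.
history = simulate_open_errors(introduced_per_step=5.0, fix_fraction=0.25, steps=200)
```

In this model the backlog only explodes if the fix fraction drops to zero, which is one way to read why ownership and delegation matter more than per-commit perfection.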

Infrastructure Challenges at Scale

Each multi-agent run operated on a single large virtual machine with ample resources to avoid distributed systems complexity. Most runs peaked at several hundred agents, which typically saturated but did not oversubscribe these machines.

Disk operations became the primary bottleneck. With hundreds of agents compiling simultaneously on a monolith project, the system sustained many GB/s of reads and writes of build artifacts. This revealed an important lesson: project structure and architectural decisions directly affect token and commit throughput, because agents spend most of their time working with the codebase rather than thinking and coding.

How does Cursor optimize multi-agent performance for autonomous coding?

Cursor’s system achieves peak performance through three key optimizations: accepting small controlled error rates instead of perfect correctness, running all agents on large single machines to avoid distributed system overhead, and restructuring projects into self-contained crates to reduce compilation bottlenecks. The system peaked at several hundred concurrent agents.

The Critical Role of Instructions

Instructions given to multi-agent systems amplify everything, including suboptimal and unclear specifications. Cursor was essentially operating a typical coding agent, but with orders of magnitude more time and compute. Agents follow instructions strictly, going down specified paths even when they’re suboptimal, because they’re trained not to change or override them.

The browser project revealed several instruction failures:

- Vague specifications – Instructions like “spec implementation” caused agents to pursue obscure, rarely-used features rather than prioritizing intelligently

- Implicit assumptions – Performance expectations within user-friendly bounds required explicit instructions and enforced timeouts

- Missing guardrails – Agents needed explicit process-based resource management tools to gracefully recover from memory leaks and deadlocks
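An enforced timeout of the kind mentioned in the list above can be sketched with Python's standard `subprocess` module. The article does not describe Cursor's actual tooling, so the wrapper name `run_with_timeout` and its return shape are assumptions made for illustration.

```python
from __future__ import annotations

import subprocess
import sys


def run_with_timeout(cmd: list[str], timeout_s: float) -> tuple[bool, str]:
    """Run a command, killing it if it exceeds timeout_s seconds.

    Returns (succeeded, message). subprocess.run terminates the child and
    raises TimeoutExpired when the limit is hit, so a hung process cannot
    stall the caller indefinitely.
    """
    try:
        result = subprocess.run(
            cmd, capture_output=True, text=True, timeout=timeout_s
        )
    except subprocess.TimeoutExpired:
        return False, f"timed out after {timeout_s}s"
    return result.returncode == 0, result.stdout


# A command that would sleep for 30 seconds is cut off after half a second.
ok, message = run_with_timeout(
    [sys.executable, "-c", "import time; time.sleep(30)"], timeout_s=0.5
)
```

Returning a plain failure message instead of raising lets the calling agent treat a hang like any other failed step and move on.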

The first browser version without JavaScript converged on an architecture unfit to evolve into a full browser, a failure of initial specification. A later run explicitly defined dependency philosophy and which libraries must not be used, correcting this oversight.

That later run also restructured the monolith into many self-contained crates. Despite starting in a heavily broken state, the multi-agent system converged toward working code within days. This demonstrated strong collaborative capability that holds across totally broken states instead of degrading further.

Prompting Strategies That Actually Work

Treat Models Like Brilliant New Hires

Cursor found it more effective to avoid instructing models on things they already know how to do. Focus only on what they don’t know, such as multi-agent collaboration protocols, or domain-specific details such as how to run tests and deployment pipelines.

Constraints beat instructions. “No TODOs, no partial implementations” works better than “remember to finish implementations”. Models generally do good things by default; constraints define their boundaries.

Avoid Checkbox Mentality

For higher-level tasks, avoid giving specific checklists. Detailed instructions about intent work better than explicit steps, because specific items make models focus on achieving those rather than the wider scope. You also implicitly deprioritize unlisted things.

Concrete numbers and ranges prove valuable when discussing scope. Instructions like “generate many tasks” tend to produce small amounts: conservative defaults that technically follow the instruction. “Generate 20-100 tasks” conveys that the intent is larger scope and ambition, and it produces observably different behavior.

What are best practices for prompting autonomous coding agents?

Effective agent prompting focuses on constraints rather than instructions (“No partial implementations” versus “finish everything”), provides concrete numbers for scope (“20-100 tasks” not “many tasks”), and treats models like brilliant new hires who know engineering but not your specific processes. Avoid checklists for complex tasks.
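The two prompting habits summarized above, constraints over reminders and explicit numeric ranges, can be sketched as a small prompt builder. The function name, prompt layout, and wording are hypothetical; only the example constraint and the 20-100 range come from the article.

```python
def build_planner_prompt(
    goal: str, constraints: list[str], min_tasks: int, max_tasks: int
) -> str:
    """Assemble a prompt that states intent, hard constraints, and a task range.

    The layout is an illustrative assumption, not Cursor's actual prompt.
    """
    if not 0 < min_tasks <= max_tasks:
        raise ValueError("expected 0 < min_tasks <= max_tasks")
    lines = [f"Goal: {goal}", "", "Constraints:"]
    lines += [f"- {constraint}" for constraint in constraints]
    # A concrete range signals scope; "many" would leave it to conservative defaults.
    lines += ["", f"Generate {min_tasks}-{max_tasks} tasks."]
    return "\n".join(lines)


prompt = build_planner_prompt(
    goal="Build a web browser from scratch",
    constraints=["No TODOs, no partial implementations"],
    min_tasks=20,
    max_tasks=100,
)
```

Note that the prompt carries no step-by-step checklist: the goal states intent, and the constraints only mark boundaries.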

System Design Principles for Multi-Agent Success

Cursor established three core principles from their research:

- Anti-fragility is mandatory – As agent count scales, the expected number of agent failures grows with it. The system must withstand individual agent failures, allowing others to recover or try alternative approaches.

- Empirical over assumption-driven – Use data and observation to make adjustments rather than assuming how systems should work based on human organizations or existing designs.

- Design explicitly for throughput – This requires trading off other aspects like accepting small but stable error rates instead of perfectly working code 100% of the time, which would dramatically slow the system.
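The first principle follows from basic probability. The sketch below assumes each agent fails independently with the same probability during some window; that independence assumption and the example numbers are illustrative, not figures from the research.

```python
def p_any_failure(p_single: float, n_agents: int) -> float:
    """Probability that at least one of n independent agents fails."""
    return 1.0 - (1.0 - p_single) ** n_agents


def expected_failures(p_single: float, n_agents: int) -> float:
    """Expected number of failing agents; grows linearly with n_agents."""
    return p_single * n_agents


# With a 1% per-agent failure chance, one agent almost never fails,
# but across 300 agents some failure is close to certain.
single = p_any_failure(0.01, 1)
fleet = p_any_failure(0.01, 300)
```

At that scale a failure somewhere is the normal operating condition, so recovery has to be part of the design rather than an exception path.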

These systems appear elegantly simple when designed correctly, but finding the right simple approach required exploring many different architectures. The current design runs with minimal overhead and provides linear scaling of token throughput.

Model Performance: GPT-5 Series Excels

Cursor initially experimented with Opus 4.5, but the model lost track of objectives, frequently proclaimed premature success, and got stuck on complex implementation details. It could write good code in small pieces but struggled with overwhelming scope.

GPT-5.1 and later GPT-5.2 showed superior results for precisely following instructions. This capability proved ideal for long-running agents. The research team updated their harness to use OpenAI models based on these experiments.

Limitations and Considerations

Cursor’s browser project wasn’t intended for external use and was expected to have imperfections. The code quality reflected this experimental nature focused on proving multi-agent collaboration feasibility rather than production readiness.

The system exhibited pathological behaviors during development, including sleeping randomly, stopping agent execution, refusing to plan beyond narrow tasks, improperly merging worker changes, and claiming premature completion. These issues arose when single agents were given too many simultaneous roles and objectives.

Synchronization overhead remains a challenge. Multiple agents sometimes touch the same files or refactor identical code. Rather than engineering complex solutions, Cursor accepts moments of turbulence and lets the system naturally converge over short periods. This spends extra tokens but keeps the system simpler and maintains better global productivity.

Implications for Software Development

The system’s resemblance to how effective software teams operate today suggests this might be emergent behavior rather than explicitly trained patterns. Models weren’t trained to organize this way, indicating it could represent the correct structure for software projects.

Cursor is making part of their multi-agent research harness available in preview. This allows developers to experiment with autonomous coding systems for their own projects while understanding the trade-offs between throughput and code quality.

Frequently Asked Questions (FAQs)

How many commits did Cursor’s autonomous coding system generate?

Cursor’s multi-agent system peaked at approximately 1,000 commits per hour, generating the vast majority of commits to their web browser research project over one continuous week. The system executed 10 million tool calls during this period with minimal human intervention.

What AI models power Cursor’s self-driving codebases?

Cursor’s research harness primarily uses OpenAI’s GPT-5.1 and GPT-5.2 models. Initial experiments with Opus 4.5 showed limitations in maintaining long-term objectives, while GPT-5 series models demonstrated superior ability to follow precise instructions over extended periods.

Can autonomous coding agents work on existing codebases?

The research suggests so. In one run, Cursor’s multi-agent system started from a heavily broken monolith, restructured it into self-contained crates, and converged toward working code within days. The system held its collaborative capability across totally broken states instead of degrading further, which hints at viability for legacy code modernization, although the article reports only this one research project.

What prevents autonomous agents from creating low-quality code?

Cursor’s architecture uses recursive ownership where planners maintain responsibility for specific code portions while workers execute isolated tasks. Handoff mechanisms ensure continuous feedback propagation. The system accepts small controlled error rates with final reconciliation passes rather than requiring perfect correctness at every step.

How does multi-agent coordination avoid conflicts?

Workers operate on independent repository copies without direct communication with other agents. Planners and sub-planners maintain hierarchical ownership without requiring global synchronization. The system accepts some synchronization overhead and lets natural convergence resolve temporary turbulence.

What types of software projects benefit from autonomous coding agents?

Complex projects with multiple interconnected subsystems benefit most from multi-agent approaches. Cursor chose browser development specifically because it reveals limitations in single-agent systems. Projects requiring sustained development over days or weeks with many concurrent workstreams see the greatest throughput improvements.

Are self-driving codebases ready for production use?

Cursor is making part of their multi-agent research harness available in preview. The browser project wasn’t intended for external use and had imperfections. The technology demonstrates feasibility for sustained autonomous development but requires careful instruction specification and acceptance of controlled error rates.