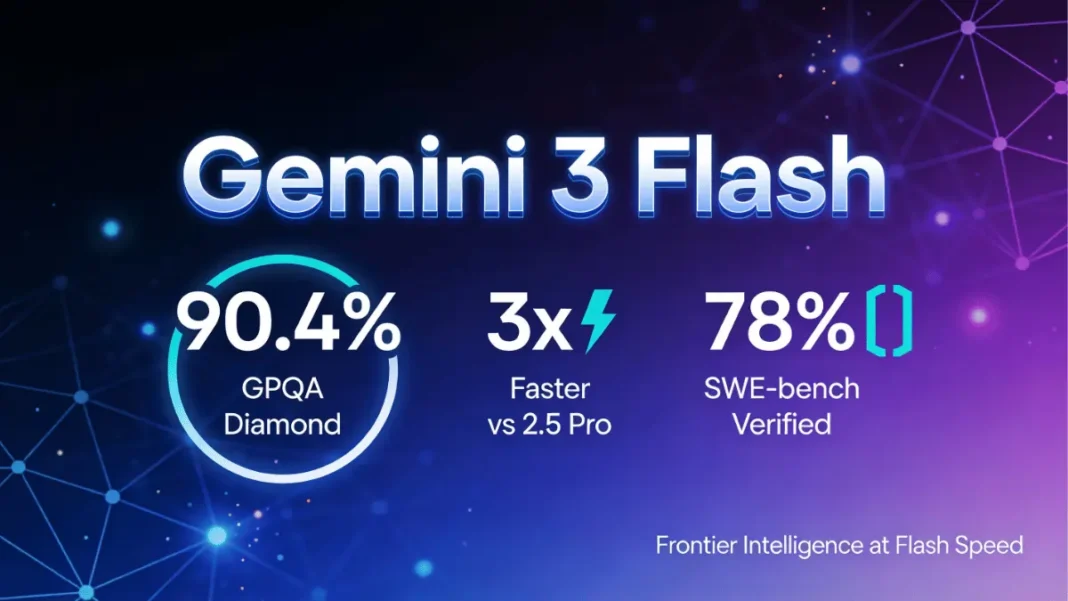

Summary: Google’s Gemini 3 Flash combines frontier-level AI reasoning with Flash-series speed at roughly a quarter of Gemini 3 Pro’s per-token price. It scores 90.4% on GPQA Diamond while running 3x faster than Gemini 2.5 Pro, and it is priced at $0.50 per million input tokens. It is available now via Google AI Studio, Vertex AI, and Gemini CLI for developers building real-time, production-scale applications.

Google has eliminated the traditional AI trade-off between speed and intelligence with Gemini 3 Flash, a model that delivers PhD-level reasoning at three times the speed of Gemini 2.5 Pro while costing a fraction of the price. Released on December 17, 2025, this latest addition to the Gemini family brings frontier-class performance to developers who need both rapid responses and sophisticated reasoning for production applications.

Flash models process trillions of tokens daily across hundreds of thousands of applications built by millions of developers, making the Flash line Google’s most popular model variant. The new version outperforms Gemini 2.5 Pro across multiple benchmarks while maintaining the low latency and cost efficiency that made Flash models the go-to choice for high-frequency workflows.

What Is Gemini 3 Flash?

Gemini 3 Flash represents Google’s latest breakthrough in balancing computational efficiency with frontier intelligence. Built on the same foundation as Gemini 3 Pro, it inherits advanced multimodal understanding, coding capabilities, and agentic features while optimizing for speed and cost.

The model achieves state-of-the-art performance on complex reasoning tasks that previously required larger, slower models. It features adaptive thinking mechanisms that modulate computation based on query complexity, consuming 30% fewer tokens on average compared to 2.5 Pro for routine tasks. This efficiency translates directly to reduced operational costs in production environments where token usage accumulates rapidly.
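A back-of-the-envelope sketch of what the 30% figure can mean for a bill. It assumes a hypothetical request of 2,000 input and 1,000 output tokens on Gemini 2.5 Pro, and assumes, for illustration only, that the reduction applies to output tokens (where thinking tokens are billed) rather than to the prompt. Prices are the list prices quoted in this article; the dictionary keys are labels, not API model IDs.

```python
# List prices quoted in this article, USD per 1M tokens.
# Keys are descriptive labels, not API model identifiers.
PRICES = {
    "gemini-2.5-pro": {"input": 1.25, "output": 10.00},
    "gemini-3-flash": {"input": 0.50, "output": 3.00},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request at standard list prices."""
    p = PRICES[model]
    return (input_tokens * p["input"] + output_tokens * p["output"]) / 1_000_000


# Hypothetical request served by 2.5 Pro.
baseline = request_cost("gemini-2.5-pro", 2_000, 1_000)
# Assumption: 3 Flash answers the same request with ~30% fewer output tokens.
flash = request_cost("gemini-3-flash", 2_000, round(1_000 * 0.7))

print(f"2.5 Pro: ${baseline:.4f}  3 Flash: ${flash:.4f}  ratio: {flash / baseline:.1%}")
```

Under these assumptions the per-request cost falls to roughly a quarter of the 2.5 Pro figure; the real effect depends on how many thinking tokens each request actually consumes.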

Key Specifications at a Glance

| Specification | Gemini 3 Flash |
|---|---|
| Input Token Price | $0.50 per 1M tokens |
| Output Token Price | $3.00 per 1M tokens |
| Audio Input Price | $1.00 per 1M tokens |
| Speed vs 2.5 Pro | 3x faster |
| GPQA Diamond Score | 90.4% |
| SWE-bench Verified | 78% |
| MMMU Pro Score | 81.2% |
| Context Caching | 90% cost reduction |
| Batch API Discount | 50% cost savings |

Pros

- Frontier-level reasoning at 3x the speed of 2.5 Pro

- 60-70% lower token prices than Gemini 2.5 Pro

- 78% SWE-bench Verified score, higher than 3 Pro’s

- 90% cost reduction on cached input tokens with context caching

- Native multimodal processing (text, image, video, audio)

- Built-in code execution for visual tasks

- 30% token efficiency improvement over 2.5 Pro

- Production-ready rate limits for paid customers

- Available across multiple platforms (API, CLI, Vertex AI)

Cons

- Free tier users face limited daily request quotas

- Requires configuration of thinking levels and interaction modes

- Higher thinking levels consume more tokens and increase costs

- Long context windows (>200K tokens) cost significantly more

- Some complex creative tasks may still benefit from 3 Pro

- Enterprise features require the Vertex AI tier, which has higher minimums

Competitors are also prioritizing efficiency; Xiaomi’s recently announced MiMo-V2-Flash, for example, targets high-speed AI tasks.

Performance Benchmarks: Where Gemini 3 Flash Excels

PhD-Level Reasoning Scores

Gemini 3 Flash demonstrates frontier-class performance on academic and professional reasoning benchmarks that test deep knowledge and complex problem-solving. It scores 90.4% on GPQA Diamond, a benchmark designed to evaluate PhD-level expertise across scientific domains. This places it among the top-performing AI models globally, rivaling much larger frontier systems.

On Humanity’s Last Exam, a benchmark testing extremely difficult reasoning challenges, 3 Flash achieves 33.7% accuracy without tool assistance. While this may seem modest, it represents performance comparable to models with significantly higher computational costs. The model also reaches 81.2% on MMMU Pro, matching Gemini 3 Pro’s multimodal understanding capabilities.

Speed vs. Quality Trade-offs Eliminated

Traditional AI development forced developers to choose between fast, economical models that struggled with complex logic and slower, expensive models with deep reasoning. Gemini 3 Flash eliminates this compromise by pushing the Pareto frontier of performance versus cost and speed.

The model outperforms 2.5 Pro while operating three times faster based on Artificial Analysis benchmarking. Even at its lowest thinking level, 3 Flash frequently outperforms previous versions running at “high” thinking levels. This efficiency stems from architectural refinements that prioritize throughput without sacrificing reasoning depth.

Gemini 3 Flash vs 2.5 Pro: The Numbers That Matter

Benchmark Comparison Table

| Metric | Gemini 3 Flash | Gemini 2.5 Pro | Improvement |
|---|---|---|---|
| Speed (Inference) | Baseline | 3x slower | 3x faster |
| Input Token Cost | $0.50/1M | $1.25/1M | 60% cheaper |
| Output Token Cost | $3.00/1M | $10.00/1M | 70% cheaper |
| GPQA Diamond | 90.4% | Lower (not specified) | Outperforms |
| SWE-bench Verified | 78% | Lower | Outperforms |
| Token Efficiency | 30% fewer tokens | Baseline | 30% reduction |
| Context Caching | Yes (90% savings) | Yes | Same |
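The 60% and 70% figures in the Improvement column follow directly from the list prices. A quick check in Python; the prices are copied from the table and nothing here calls the API.

```python
# List prices per 1M tokens (USD), copied from the comparison table.
GEMINI_3_FLASH = {"input": 0.50, "output": 3.00}
GEMINI_2_5_PRO = {"input": 1.25, "output": 10.00}


def percent_cheaper(new: float, old: float) -> float:
    """Percentage by which price `new` undercuts price `old`."""
    return (1 - new / old) * 100


for kind in ("input", "output"):
    saving = percent_cheaper(GEMINI_3_FLASH[kind], GEMINI_2_5_PRO[kind])
    print(f"{kind}: {saving:.0f}% cheaper")
```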

Cost Efficiency Breakdown

At $0.50 per million input tokens and $3.00 per million output tokens, Gemini 3 Flash costs about a quarter of Gemini 3 Pro’s list prices ($2.00 and $12.00) while delivering comparable reasoning capabilities. For developers processing large volumes of requests, this translates to substantial savings.

Context caching cuts the price of repeated (cached) input tokens by 90% once the cached content exceeds a minimum token threshold. The Batch API offers a further 50% discount, along with much higher rate limits, for asynchronous workloads. Combined, these features enable production-scale deployments at dramatically lower operational costs than previous-generation models.

Pricing and API Access

Token Pricing Structure

Gemini 3 Flash operates on a straightforward token-based pricing model accessible through both the Gemini API and Vertex AI. Standard pricing applies $0.50 per million input tokens for text, $3.00 per million output tokens, and $1.00 per million input tokens for audio. This represents approximately 60% lower input costs and 70% lower output costs compared to Gemini 2.5 Pro.
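A minimal cost estimator built from the three list prices above. The `Usage` structure and `estimate_cost` name are illustrative assumptions, as is the treatment of thinking tokens as billed output; the sketch ignores long-context tiers, caching, and batch discounts.

```python
from dataclasses import dataclass

# Standard Gemini 3 Flash list prices from this article, USD per 1M tokens.
TEXT_INPUT_PER_M = 0.50
AUDIO_INPUT_PER_M = 1.00
OUTPUT_PER_M = 3.00


@dataclass
class Usage:
    """Token counts for a single request (hypothetical structure)."""
    text_input: int = 0
    audio_input: int = 0
    output: int = 0  # assumed to include any thinking tokens


def estimate_cost(usage: Usage) -> float:
    """Estimate request cost in USD at standard (non-cached, non-batch) rates."""
    return (
        usage.text_input * TEXT_INPUT_PER_M
        + usage.audio_input * AUDIO_INPUT_PER_M
        + usage.output * OUTPUT_PER_M
    ) / 1_000_000


# Hypothetical request: 10K text tokens, 50K audio tokens, 2K output tokens.
cost = estimate_cost(Usage(text_input=10_000, audio_input=50_000, output=2_000))
print(f"${cost:.4f}")
```

Keeping audio input as a separate field mirrors the separate audio price; a real integration would read these counts from the usage metadata the API returns.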

Free tier users receive limited daily request quotas through Google AI Studio, while paid API customers gain access to production-ready rate limits suitable for commercial applications. Enterprise customers using Vertex AI receive additional SLA guarantees and dedicated support channels.

Cost Reduction Strategies

Developers can optimize spending through three primary mechanisms built into the Gemini API ecosystem. Context caching automatically reduces the cost of repeated input tokens by 90% when the repeated content exceeds a minimum token threshold, which is particularly valuable for applications that analyze long documents or maintain extensive conversation histories.
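A sketch of how the 90% caching discount changes the input bill. It assumes the discount applies only to the cached portion of each prompt, and it ignores any cache storage fees and the minimum-size threshold, whose exact value is not given here. The 100K-token document and 50 follow-up questions are hypothetical.

```python
INPUT_PER_M = 0.50      # Gemini 3 Flash text input, USD per 1M tokens
CACHE_DISCOUNT = 0.90   # 90% off cached input tokens, per this article


def input_cost(total_input: int, cached: int) -> float:
    """USD input cost when `cached` of `total_input` tokens hit the cache.

    Assumption: cached tokens are billed at (1 - CACHE_DISCOUNT) of the
    normal rate; storage fees and minimum-size thresholds are ignored.
    """
    if not 0 <= cached <= total_input:
        raise ValueError("cached must be between 0 and total_input")
    uncached = total_input - cached
    return (uncached + cached * (1 - CACHE_DISCOUNT)) * INPUT_PER_M / 1_000_000


# Hypothetical: 50 questions about the same 100K-token document,
# each question adding 500 fresh tokens.
doc, question, n = 100_000, 500, 50
without_cache = n * input_cost(doc + question, 0)
with_cache = n * input_cost(doc + question, doc)
print(f"without cache: ${without_cache:.2f}, with cache: ${with_cache:.2f}")
```

The saving grows with the ratio of shared context to fresh tokens, which is why long-document and long-conversation workloads benefit most.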

The Batch API enables 50% cost savings for asynchronous workloads that don’t require real-time responses. Applications like content moderation, data enrichment, and overnight processing benefit significantly from batch pricing. The model’s inherent token efficiency (about 30% fewer tokens than 2.5 Pro on typical tasks) compounds these savings.
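The batch discount behaves like a flat multiplier on standard rates. A sketch, assuming the 50% applies to both input and output tokens; caching is left out because this section does not say how the two discounts interact. The overnight moderation job sizes are hypothetical.

```python
INPUT_PER_M, OUTPUT_PER_M = 0.50, 3.00  # Gemini 3 Flash list prices, USD per 1M tokens
BATCH_DISCOUNT = 0.50                   # 50% off for asynchronous Batch API jobs


def job_cost(requests: int, in_tokens: int, out_tokens: int, batch: bool) -> float:
    """USD cost of `requests` identical requests, optionally via the Batch API."""
    per_request = (in_tokens * INPUT_PER_M + out_tokens * OUTPUT_PER_M) / 1_000_000
    multiplier = 1 - BATCH_DISCOUNT if batch else 1.0
    return requests * per_request * multiplier


# Hypothetical overnight moderation job: 200,000 items, 800 tokens in, 50 out.
interactive = job_cost(200_000, 800, 50, batch=False)
batched = job_cost(200_000, 800, 50, batch=True)
print(f"interactive: ${interactive:,.2f}  batch: ${batched:,.2f}")
# interactive: $110.00  batch: $55.00
```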

Real-World Use Cases Powered by 3 Flash

Agentic Coding and Developer Tools

Gemini 3 Flash scores 78% on SWE-bench Verified, surpassing even Gemini 3 Pro on this agentic coding benchmark while responding faster for quick iterations. This makes it well suited to terminal-based development workflows where developers need instant feedback. Google Antigravity, the company’s new agentic development platform, uses 3 Flash to provide coding assistance that keeps pace with developers’ thought processes.

The model handles complex code generation tasks that previously required Pro-tier models. In practical testing with Gemini CLI, 3 Flash successfully generated functional 3D voxel simulations from single prompts, demonstrating strong spatial reasoning and code structure understanding. For high-frequency development tasks like code review, documentation generation, and API exploration, 3 Flash provides a new performance baseline.

Gaming and Real-Time Video Analysis

Game development studios leverage 3 Flash’s superior video analysis and near-real-time reasoning for both creation and player experience. Astrocade uses the model in its agentic game creation engine to generate complete game plans and executable code from single prompts, rapidly converting concepts into playable prototypes.

Latitude’s game creation engine employs 3 Flash to generate more intelligent characters and realistic worlds, directly elevating gameplay quality. The model’s ability to process video inputs natively and respond in near-real time enables interactive experiences that previously required custom-trained models.

Deepfake Detection and Security

Resemble AI deployed Gemini 3 Flash for near-real-time deepfake intelligence, discovering it offers 4x faster multimodal analysis compared to 2.5 Pro. The model instantly transforms complex forensic data into simple explanations, processing raw technical outputs without hindering critical security workflows.

This speed advantage proves crucial in misinformation response scenarios where detection delays allow fabricated content to spread rapidly. The model’s multimodal capabilities enable it to analyze audio, video, and metadata simultaneously for comprehensive authenticity assessment.

Legal Document Analysis

Harvey, an AI company serving law firms and professional service providers, uses Gemini 3 Flash for complex document analysis that demands both speed and rigorous accuracy. The model’s strong reasoning capabilities enable new efficiency levels in contract review, due diligence, and legal research.

Gains in model capability often come at the cost of latency, but 3 Flash shows that fast models can meet the accuracy demands of industries with zero tolerance for errors. Legal work involves citation checking, precedent analysis, and nuanced interpretation, all tasks where reasoning quality directly affects outcomes.

Multimodal Capabilities: Beyond Text Processing

Visual and Spatial Reasoning

Gemini 3 Flash processes images, videos, and audio alongside text prompts seamlessly. Developers can feed short video clips to generate actionable insights, such as personalized training plans from sports footage analysis. The model identifies elements in sketches in near-real-time and can overlay contextual UI elements on static images, transforming them into interactive prototypes.

In visual tasks, 3 Flash demonstrates advanced spatial reasoning that enables it to understand complex scenes with multiple elements. This capability extends to technical diagrams, architectural plans, and data visualizations where spatial relationships convey critical information.

Code Execution for Image Manipulation

One of 3 Flash’s standout features involves built-in code execution capabilities for visual inputs. The model can zoom, count, and edit images programmatically without requiring external tools or APIs. This enables sophisticated image analysis workflows where the model writes and executes Python code to extract precise measurements, count objects, or perform transformations.
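To illustrate the kind of code such a workflow involves, here is a dependency-free Python sketch of two operations on a toy grayscale image stored as a list of rows: counting objects by connected-component labeling of a thresholded image, and a nearest-neighbour zoom into a region. This is not the code Gemini 3 Flash actually generates; the function names, the threshold of 128, and the 4-connectivity choice are assumptions for illustration.

```python
from collections import deque

Image = list[list[int]]  # grayscale pixel values, 0-255


def count_objects(img: Image, threshold: int = 128) -> int:
    """Count 4-connected regions of pixels brighter than `threshold`."""
    if not img:
        return 0
    h, w = len(img), len(img[0])
    seen = [[False] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if img[y][x] <= threshold or seen[y][x]:
                continue
            count += 1
            # Breadth-first flood fill marks every pixel of this object.
            queue = deque([(y, x)])
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                for ny, nx in ((cy + 1, cx), (cy - 1, cx), (cy, cx + 1), (cy, cx - 1)):
                    if 0 <= ny < h and 0 <= nx < w and not seen[ny][nx] and img[ny][nx] > threshold:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return count


def zoom(img: Image, top: int, left: int, height: int, width: int, factor: int) -> Image:
    """Crop a region and enlarge it by an integer factor (nearest neighbour)."""
    crop = [row[left:left + width] for row in img[top:top + height]]
    return [[px for px in row for _ in range(factor)] for row in crop for _ in range(factor)]


# Toy 4x5 image with two bright blobs.
toy = [
    [255, 255, 0, 0, 0],
    [255, 0, 0, 200, 200],
    [0, 0, 0, 200, 0],
    [0, 0, 0, 0, 0],
]
print(count_objects(toy))        # 2
print(zoom(toy, 0, 0, 1, 2, 2))  # [[255, 255, 255, 255], [255, 255, 255, 255]]
```

Thresholding plus flood fill is the simplest counting approach; it merges touching objects, which is one reason precise counts on real photos usually need more careful segmentation.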

For developers building computer vision applications, this eliminates the need for separate preprocessing pipelines. The model handles image analysis and manipulation within a single API call, reducing system complexity and latency.

How to Get Started with Gemini 3 Flash

Available Platforms and APIs

Gemini 3 Flash is accessible through multiple Google platforms tailored to different developer needs. Google AI Studio provides a web-based interface for prototyping and testing, ideal for initial experimentation. The Gemini API offers programmatic access for production applications with SDKs available in Python, JavaScript, Go, and other major languages.

Gemini CLI brings the model directly to terminal-based workflows, supporting high-frequency development tasks with intelligent auto-routing between Flash and Pro models. Enterprise customers access 3 Flash through Vertex AI with additional features like private endpoints, VPC integration, and compliance certifications. Android Studio integrates the model for mobile app development with context-aware coding assistance.

Implementation Requirements

Because 3 Flash operates as a reasoning model, developers must either configure thinking levels in the API or use the new Interactions API. The API provides a “Fast” mode that prioritizes quick responses and a “Thinking” mode that allocates additional computation to complex problems.
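Choosing between the two modes per request is an application-side decision. The sketch below is a hypothetical heuristic router: the keyword list, the 2,000-character cut-off, and the `pick_mode` name are assumptions for illustration, and it only returns a label. Check the Gemini API documentation for the exact configuration field and accepted values before wiring it into real requests.

```python
# Hypothetical heuristic: route short, simple prompts to "fast" and
# long or reasoning-heavy prompts to "thinking". Illustrative rules only.
REASONING_HINTS = ("prove", "debug", "step by step", "analyze", "refactor", "why")


def pick_mode(prompt: str, long_prompt_chars: int = 2_000) -> str:
    """Return "fast" or "thinking" for a prompt."""
    if len(prompt) >= long_prompt_chars:
        return "thinking"
    text = prompt.lower()
    if any(hint in text for hint in REASONING_HINTS):
        return "thinking"
    return "fast"


print(pick_mode("Translate 'good morning' into French."))            # fast
print(pick_mode("Debug this stack trace and explain why it fails."))  # thinking
```

Keyword rules are cheap but will misroute some prompts; the returned label would still need to be mapped onto whatever thinking setting your SDK exposes.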

Google AI Studio includes a built-in API logs visualization dashboard for monitoring usage patterns and debugging integration issues. Rate limits vary by tier, with free users receiving limited daily quotas and paid customers accessing production-ready capacity suitable for commercial deployment. Model feedback can be submitted directly through Google AI Studio to help improve future versions.

Technical Specifications

| Category | Details |
|---|---|
| Model Family | Gemini 3 Series |
| Release Date | December 17, 2025 |
| Architecture | Multimodal transformer with reasoning capabilities |
| Input Modalities | Text, images, video, audio |
| Context Window | Standard to long context support |
| Thinking Modes | Fast mode, Thinking mode |
| Code Execution | Built-in Python execution for visual tasks |
| Benchmarks | GPQA Diamond: 90.4%, MMMU Pro: 81.2%, SWE-bench: 78% |
| Token Processing | Trillions of tokens daily across applications |
| API Platforms | Google AI Studio, Vertex AI, Gemini CLI, Android Studio |
| Rate Limits | Tiered based on subscription level |
| Cost Optimization | Context caching (90%), Batch API (50%) |
| Enterprise Features | SLAs, private endpoints, VPC support via Vertex AI |

Gemini 3 Flash vs Other Gemini Models

| Feature | Gemini 3 Flash | Gemini 2.5 Pro | Gemini 3 Pro |
|---|---|---|---|
| Input Token Cost | $0.50/1M | $1.25/1M | $2.00/1M |
| Output Token Cost | $3.00/1M | $10.00/1M | $12.00/1M |
| Relative Speed | 3x faster | Baseline | Slower |
| GPQA Diamond | 90.4% | Lower | Higher |
| SWE-bench Verified | 78% | Lower | 75% |
| MMMU Pro | 81.2% | Lower | 81.2% |
| Context Caching | Yes (90%) | Yes | Yes |
| Batch API | Yes (50% off) | Yes | Yes |
| Best For | High-frequency workflows | Balanced tasks | Complex reasoning |
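Using the list prices in this table, a short calculation shows how the Flash-to-Pro price ratio works out: both of 3 Flash’s per-token prices are exactly 25% of 3 Pro’s, so any input/output mix costs the same fraction before accounting for differences in token usage. The 300M-input, 100M-output monthly workload is hypothetical.

```python
# Per-1M-token list prices (USD) from the comparison table: (input, output).
PRICES = {
    "Gemini 3 Flash": (0.50, 3.00),
    "Gemini 2.5 Pro": (1.25, 10.00),
    "Gemini 3 Pro": (2.00, 12.00),
}


def blended_cost(model: str, input_m: float, output_m: float) -> float:
    """USD cost for `input_m` million input and `output_m` million output tokens."""
    inp, out = PRICES[model]
    return input_m * inp + output_m * out


# Hypothetical monthly workload: 300M input tokens, 100M output tokens.
flash = blended_cost("Gemini 3 Flash", 300, 100)
for name in PRICES:
    cost = blended_cost(name, 300, 100)
    print(f"{name:15} ${cost:>9,.2f}  Flash cost as share of this: {flash / cost:.0%}")
```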

Frequently Asked Questions (FAQs)

What makes Gemini 3 Flash different from Gemini 2.5 Flash?

Gemini 3 Flash delivers significantly stronger reasoning capabilities with PhD-level performance on benchmarks like GPQA Diamond (90.4%), outperforming 2.5 Pro while maintaining Flash-series speed. It features 30% better token efficiency, advanced multimodal processing, and built-in code execution for visual tasks that weren’t available in 2.5 Flash.

How much does Gemini 3 Flash cost compared to other models?

Gemini 3 Flash costs $0.50 per million input tokens and $3.00 per million output tokens, approximately 60-70% cheaper than Gemini 2.5 Pro ($1.25/$10 per million tokens). With context caching, costs drop an additional 90% for repeated content, and the Batch API provides 50% savings for asynchronous processing.

What are the best use cases for Gemini 3 Flash?

Gemini 3 Flash excels at agentic coding (78% SWE-bench Verified), real-time video analysis for gaming, deepfake detection requiring rapid multimodal analysis, and legal document processing demanding both speed and accuracy. It’s ideal for high-frequency workflows where developers need Pro-grade reasoning at Flash speeds.

Can Gemini 3 Flash process videos and images?

Yes, Gemini 3 Flash natively processes text, images, videos, and audio inputs with advanced spatial reasoning capabilities. It includes built-in code execution to zoom, count, and edit visual inputs programmatically, eliminating the need for separate preprocessing tools.

How do I access Gemini 3 Flash?

Developers access Gemini 3 Flash through Google AI Studio (web interface), Gemini API (programmatic), Gemini CLI (terminal), Android Studio (mobile development), or Vertex AI (enterprise). Free tier users receive limited daily quotas, while paid customers get production-ready rate limits.

Does Gemini 3 Flash support context caching?

Yes, Gemini 3 Flash includes standard context caching that cuts the cost of repeated input tokens by 90% above minimum thresholds. This feature automatically applies to conversations, document analysis, and applications with extensive context windows.

How fast is Gemini 3 Flash compared to Gemini 2.5 Pro?

Gemini 3 Flash operates approximately 3x faster than Gemini 2.5 Pro based on Artificial Analysis benchmarking while delivering superior performance across multiple reasoning and coding benchmarks. Even at lower thinking levels, it frequently outperforms 2.5 Pro at higher thinking levels.

What thinking modes does Gemini 3 Flash support?

Gemini 3 Flash offers “Fast” mode for rapid responses prioritizing speed and “Thinking” mode that allocates additional computation for complex reasoning tasks. Developers configure these modes through the API or use the Interactions API for dynamic selection.
